This is the official repository for the NeurIPS 2022 paper "PerfectDou: Dominating DouDizhu with Perfect Information Distillation".

*Note:* We have only released our pretrained model and the evaluation code. The training code is not available at the moment because it relies on a distributed system. The code for calculating left hands and for feature engineering is provided as shared libraries (i.e., .so files); details of both modules can be found in the paper. We will let you know as soon as we decide to open-source this code.

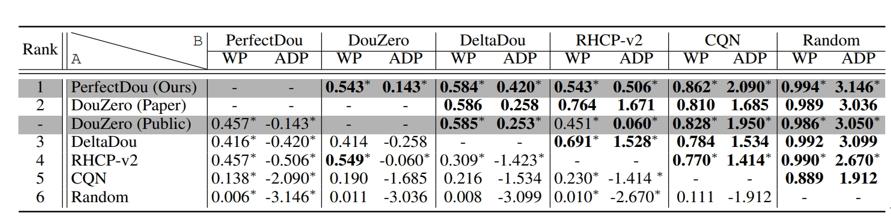

PerfectDou is currently the state-of-the-art AI system for the game of DouDizhu (斗地主), developed by Netease Games AI Lab together with Shanghai Jiao Tong University and Carnegie Mellon University.

The proposed technique, perfect information distillation (a perfect-training-imperfect-execution framework), lets the agents use global information to guide policy training as if DouDizhu were a perfect-information game, while the trained policies can still be used to play the imperfect-information game during actual gameplay.
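The split between training and execution can be illustrated with a toy actor-critic in plain Python. This is a minimal sketch under our own assumptions, not PerfectDou's (unreleased) training code: the class name `DistilledActorCritic`, the linear models and the one-step update are hypothetical simplifications. It only shows the core idea: the critic (value function) is computed from perfect-information features during training, while the actor (policy) only ever sees imperfect-information features, so it can be executed in a real game.

```python
import math
import random


def softmax(logits):
    # Numerically stable softmax over a list of floats.
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def dot(w, x):
    return sum(wi * xi for wi, xi in zip(w, x))


class DistilledActorCritic:
    """Hypothetical toy model: the critic reads perfect-information features,
    the actor reads only imperfect-information (observable) features."""

    def __init__(self, n_imperfect, n_perfect, n_actions, lr=0.1):
        self.actor_w = [[0.0] * n_imperfect for _ in range(n_actions)]  # one linear logit per action
        self.critic_w = [0.0] * n_perfect  # linear value function
        self.lr = lr

    def policy(self, imperfect_obs):
        # pi(a | o) depends on observable features only, so it can run at execution time.
        return softmax([dot(w, imperfect_obs) for w in self.actor_w])

    def act(self, imperfect_obs, rng=random):
        probs = self.policy(imperfect_obs)
        return rng.choices(range(len(probs)), weights=probs)[0]

    def value(self, perfect_obs):
        # V(s) uses the full game state (e.g. all hands), which is available only in training.
        return dot(self.critic_w, perfect_obs)

    def update(self, imperfect_obs, perfect_obs, action, ret):
        """One training step from a single (observation, state, action, return) sample."""
        advantage = ret - self.value(perfect_obs)  # baseline from the perfect-information critic
        probs = self.policy(imperfect_obs)
        # Critic: gradient step on 0.5 * (ret - V(s))^2.
        for i, x in enumerate(perfect_obs):
            self.critic_w[i] += self.lr * advantage * x
        # Actor: policy-gradient step; d log pi(a|o) / d logit_b = 1[a == b] - pi(b|o).
        for b, w in enumerate(self.actor_w):
            g = (1.0 if b == action else 0.0) - probs[b]
            for i, x in enumerate(imperfect_obs):
                w[i] += self.lr * advantage * g * x
        return advantage
```

At execution time only `policy`/`act` are called, so the trained agent never needs the hidden hands of the other players; the perfect-information critic is used only to compute training signals.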

For more details, please see our paper, where we show how and why PerfectDou beats all existing AI programs and achieves state-of-the-art performance.

@inproceedings{yang2022perfectdou,
  title={PerfectDou: Dominating DouDizhu with Perfect Information Distillation},
  author={Yang, Guan and Liu, Minghuan and Hong, Weijun and Zhang, Weinan and Fang, Fei and Zeng, Guangjun and Lin, Yue},
  booktitle={NeurIPS},
  year={2022}
}

The pre-trained model is provided in perfectdou/model/. For ease of comparison, the game environment and evaluation methods are the same as those in DouZero.

The following pre-trained models and heuristic agents are also provided as baselines:

- random: an agent that plays uniformly at random

- rlcard: the rule-based agent in RLCard

- DouZero: the ADP (Average Difference Points) version

- PerfectDou: the version trained on 2.5e9 frames, as reported in the paper
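Since the evaluation follows DouZero, each baseline can be thought of as an object whose `act` method picks one move from the current player's information set. The sketch below assumes a DouZero-style interface (an `act(infoset)` method and an `infoset.legal_actions` list of moves); the `InfoSet` dataclass is a hypothetical stand-in, not a class from this repo. Under that assumption, the random baseline reduces to a uniform choice over the legal moves.

```python
import random
from dataclasses import dataclass, field
from typing import List


# Hypothetical stand-in for the game's information set. Here a move is a list
# of card values, and an empty list means "pass".
@dataclass
class InfoSet:
    legal_actions: List[List[int]] = field(default_factory=list)


class RandomAgent:
    """Baseline that picks one legal move uniformly at random."""

    def __init__(self, seed=None):
        self.name = "Random"
        self._rng = random.Random(seed)  # private RNG so runs can be seeded

    def act(self, infoset: InfoSet) -> List[int]:
        return self._rng.choice(infoset.legal_actions)


if __name__ == "__main__":
    infoset = InfoSet(legal_actions=[[], [3], [3, 3], [5, 6, 7, 8, 9]])
    print(RandomAgent(seed=0).act(infoset))
```

Any other agent (rule-based, DouZero or PerfectDou) would plug into the same evaluation loop as long as it exposes the same `act` method.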

First, clone the repository:

git clone https://github.com/Netease-Games-AI-Lab-Guangzhou/PerfectDou.git

Make sure you have Python 3.7 installed, then install the dependencies:

cd PerfectDou

pip3 install -r requirements.txt

Next, generate the evaluation data:

python3 generate_eval_data.py

The most important options are:

- --output: where the pickled data will be saved

- --num_games: how many random games will be generated (default: 10000)
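For intuition about what the pickled evaluation data contains, here is a minimal sketch of a random-deal generator. It assumes DouZero's card encoding (3 to 14 for 3 through A, 17 for 2, 20 and 30 for the two jokers) and a DouZero-style deal format with the keys `landlord`, `landlord_up`, `landlord_down` and `three_landlord_cards`; it is not the repo's `generate_eval_data.py`, and the default output file name `eval_data.pkl` is our own assumption.

```python
import argparse
import pickle
import random

# Assumed DouZero-style encoding: 3..14 are 3..A, 17 is 2,
# 20 and 30 are the black and red jokers (54 cards in total).
DECK = [rank for rank in range(3, 15) for _ in range(4)] + [17] * 4 + [20, 30]


def deal(rng):
    """Deal one random game: the landlord gets 20 cards (17 + 3 bottom cards)."""
    cards = DECK.copy()
    rng.shuffle(cards)
    game = {
        "landlord": cards[:20],
        "landlord_up": cards[20:37],
        "landlord_down": cards[37:54],
        "three_landlord_cards": cards[17:20],  # the 3 extra cards, also in the landlord's hand
    }
    for hand in game.values():
        hand.sort()
    return game


def generate(num_games, seed=None):
    rng = random.Random(seed)
    return [deal(rng) for _ in range(num_games)]


def main(argv=None):
    parser = argparse.ArgumentParser(description="Generate random evaluation deals (sketch).")
    parser.add_argument("--output", default="eval_data.pkl",  # hypothetical default
                        help="where the pickled data will be saved")
    parser.add_argument("--num_games", type=int, default=10000,
                        help="how many random games will be generated")
    args = parser.parse_args(argv)
    with open(args.output, "wb") as f:
        pickle.dump(generate(args.num_games), f)


if __name__ == "__main__":
    main()
```

Fixing the deals in advance means every agent line-up is evaluated on exactly the same hands, which keeps comparisons between baselines fair.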

Then run the evaluation:

python3 evaluate.py

The most important options are:

- --landlord: the agent that plays as Landlord; one of random, rlcard, douzero, perfectdou, or the path of a pre-trained model

- --landlord_up: the agent that plays as LandlordUp (the player who plays right before the Landlord); same choices as --landlord

- --landlord_down: the agent that plays as LandlordDown (the player who plays right after the Landlord); same choices as --landlord

- --eval_data: the pickle file that contains the evaluation data

- --num_workers: how many subprocesses will be used
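The options above can be mirrored with `argparse`, which makes the accepted agent values explicit. This is a hypothetical reconstruction for illustration, not the repo's `evaluate.py`: the helper names `agent_spec`, `build_parser` and `shard_games` are ours, and the real script's defaults are not documented here, so the sketch uses `None` for the seats and `--eval_data` and an assumed default of 1 for `--num_workers`. The round-robin `shard_games` helper shows one simple way to split the games among worker subprocesses.

```python
import argparse
import os

BUILTIN_AGENTS = ("random", "rlcard", "douzero", "perfectdou")


def agent_spec(value):
    """Accept a built-in agent name or the path of a pre-trained model."""
    if value in BUILTIN_AGENTS or os.path.exists(value):
        return value
    raise argparse.ArgumentTypeError(
        "%r is neither one of %s nor an existing path" % (value, ", ".join(BUILTIN_AGENTS)))


def build_parser():
    parser = argparse.ArgumentParser(description="Evaluation options (sketch).")
    for seat in ("landlord", "landlord_up", "landlord_down"):
        # Assumption: no default agent in this sketch.
        parser.add_argument("--" + seat, type=agent_spec, default=None)
    parser.add_argument("--eval_data", default=None,
                        help="pickle file that contains the evaluation data")
    parser.add_argument("--num_workers", type=int, default=1,  # assumed default
                        help="how many subprocesses will be used")
    return parser


def shard_games(games, num_workers):
    """Round-robin split of the evaluation games across worker processes."""
    if num_workers < 1:
        raise ValueError("num_workers must be at least 1")
    shards = [[] for _ in range(num_workers)]
    for i, game in enumerate(games):
        shards[i % num_workers].append(game)
    return shards
```

Round-robin assignment keeps the shard sizes within one game of each other, so no single subprocess ends up with a much larger workload.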

For example, the following command evaluates PerfectDou in the Landlord position against DouZero agents in the LandlordUp and LandlordDown positions:

python3 evaluate.py --landlord perfectdou --landlord_up douzero --landlord_down douzero
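The command above places PerfectDou only in the Landlord seat. To check the peasant side as well, the same flags can be used with the agents swapped. The small script below is a hypothetical helper (`perfectdou_vs` is our name, not part of the repo) that builds both command lines from the documented flags and runs them; it assumes it is started from the repository root.

```python
import subprocess
import sys

SEATS = ("--landlord", "--landlord_up", "--landlord_down")


def perfectdou_vs(opponent="douzero"):
    """Build evaluate.py commands for PerfectDou as Landlord and as both peasants."""
    as_landlord = ("perfectdou", opponent, opponent)
    as_peasants = (opponent, "perfectdou", "perfectdou")
    commands = []
    for lineup in (as_landlord, as_peasants):
        cmd = [sys.executable, "evaluate.py"]
        for flag, agent in zip(SEATS, lineup):
            cmd += [flag, agent]
        commands.append(cmd)
    return commands


if __name__ == "__main__":
    opponent = sys.argv[1] if len(sys.argv) > 1 else "douzero"
    for cmd in perfectdou_vs(opponent):
        print(" ".join(cmd[1:]))
        subprocess.run(cmd, check=True)  # run from the PerfectDou repository root
```

For example, passing `rlcard` as the first argument would evaluate PerfectDou on both sides against the RLCard rule-based agent.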

- The demo is mainly based on RLCard-Showdown

- The evaluation code and game environment implementation are mainly based on DouZero

Please contact us if you have any problems.