This repo contains the code for the paper Observational Scaling Laws and the Predictability of Language Model Performance.

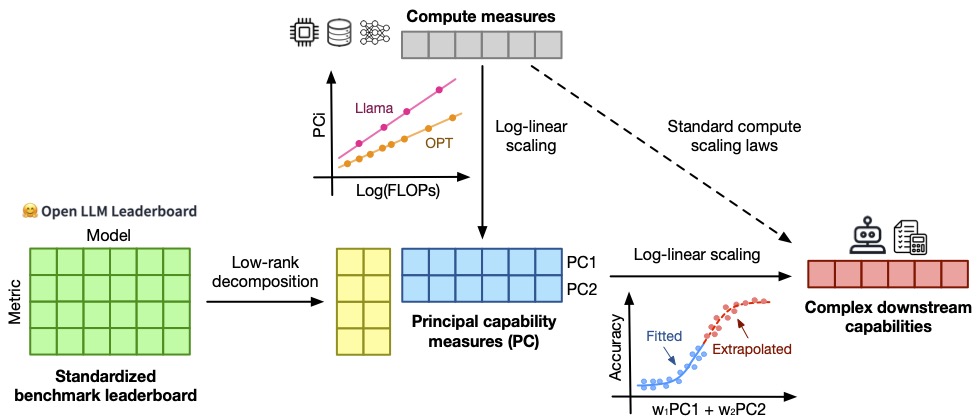

Observational scaling laws generalize scaling laws by identifying a low-dimensional capability measure, extracted from standard LLM benchmarks (e.g., the Open LLM Leaderboard), as a surrogate "scale" measure for analyzing how complex downstream LM phenomena (e.g., agentic or "emergent" capabilities) scale. This capability measure serves as a shared axis for comparing model families trained with different recipes (e.g., Llama-2, Phi, StarCoder) and correlates log-linearly with compute measures (e.g., training FLOPs) within each model family, allowing us to use hundreds of public LMs for a training-free, high-resolution, and broad-coverage scaling analysis.
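To make the idea concrete, here is a minimal sketch on synthetic data. It is not the repo's implementation: the two model families, the six benchmarks, and every variable name below are made up for illustration. It assumes benchmark scores are driven by a single latent capability that is linear in log FLOPs within each family, extracts the first principal component (PC1) of the score matrix, and checks that PC1 is roughly linear in log FLOPs per family.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic (models x benchmarks) score matrix: two hypothetical model families
# share one latent capability that grows linearly in log10(training FLOPs),
# with a family-specific offset standing in for different training recipes.
log_flops = np.tile(np.linspace(20.0, 24.0, 10), 2)   # 20 models in total
family = np.repeat([0, 1], 10)
latent = 0.8 * log_flops + np.where(family == 0, 0.0, 1.5)
loadings = rng.uniform(0.5, 1.5, size=6)               # 6 hypothetical benchmarks
scores = np.outer(latent, loadings) + rng.normal(0.0, 0.05, size=(20, 6))

# PCA via SVD of the column-centered score matrix.
centered = scores - scores.mean(axis=0)
_, singular_values, vt = np.linalg.svd(centered, full_matrices=False)
explained = singular_values**2 / np.sum(singular_values**2)

# PC1 plays the role of the low-dimensional capability measure.
pc1 = centered @ vt[0]
if np.corrcoef(pc1, latent)[0, 1] < 0:
    pc1 = -pc1  # the sign of a principal component is arbitrary

# Within each family, fit PC1 as a linear function of log FLOPs.
fits = {}
for f in (0, 1):
    mask = family == f
    slope, intercept = np.polyfit(log_flops[mask], pc1[mask], deg=1)
    corr = np.corrcoef(log_flops[mask], pc1[mask])[0, 1]
    fits[f] = (slope, intercept, corr)
    print(f"family {f}: slope={slope:.3f}, intercept={intercept:.3f}, r={corr:.4f}")
```

In this toy setup the two families end up with similar slopes but different intercepts on the PC1 axis, which is the sense in which the capability measure can act as a shared axis across families with different compute efficiencies.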

We release:

- Collected metadata and evaluation results for nearly 150 public pretrained models and instruction-tuned models

- Code for fitting observational scaling laws for scaling analyses

- Code and guidelines for selecting representative model subsets for low-cost scaling analyses

Updates

- [2024/10/01]
  - Updated pre-registered prediction results on newly released models
  - Added unified benchmarking results on a new set of models
  - Added unified benchmarking results of sub-10B models for cheap scaling analyses

Install the environment:

    conda create -n obscaling python==3.10
    conda activate obscaling
    pip install -r requirements.txt

To fit an observational scaling law for a downstream evaluation metric of interest, follow the steps below:


    # `utils` is the repo's helper module; pandas and matplotlib are imported
    # explicitly because `pd` and `plt` are used below
    import pandas as pd
    import matplotlib.pyplot as plt

    from utils import *

    # Load eval data
    ## Load LLM benchmark evaluation results (for base pretrained LLMs here
    ## or `load_instruct_llm_benchmark_eval()` for instruction-tuned models)
    lm_benchmark_eval = load_base_llm_benchmark_eval()

    ## Load your downstream evaluation results to be analyzed for scaling
    ## (`load_your_downstream_eval` is a placeholder for your own loader)
    downstream_eval = load_your_downstream_eval()

    ## Merge eval results
    lm_eval = pd.merge(lm_benchmark_eval, downstream_eval, on="Model")

    # Fit scaling laws
    ## Specify scaling analysis arguments
    ### Base metric list for extracting PCs
    metric_list = ['MMLU', 'ARC-C', 'HellaSwag', 'Winograd', 'TruthfulQA', 'GSM8K', 'XWinograd', 'HumanEval']

    ## Predictor metric, 3 PCs by default, use other PCs by `PC_METRIC_NUM_{N}`
    ## or compute measures like `MODEL_SIZE_METRIC` and `TRAINING_FLOPS_METRIC`
    x_metrics = PC_METRIC_NUM_3

    ## Target metric to be analyzed for scaling
    y_metric = "your_downstream_metric"

    ## Specific analysis kwargs, see the docstring of `plot_scaling_predictions` for details
    setup_kwargs = {
        # PCA & Imputation
        "apply_imputation": True,
        "imputation_metrics": metric_list,
        "imputation_kwargs": {
            'n_components': 1,
        },
        "apply_pca": True,
        "pca_metrics": metric_list,
        "pca_kwargs": {
            'n_components': 5,
        },

        # Non-linearity: by default, sigmoid with parametrized scale and shift
        "nonlinearity": "sigmoid-parametric",

        # Cutoff: e.g., 8.4E22 FLOPs (84 in units of 1E21), corresponding to Llama-2 7B
        "split_method": "cutoff_by_FLOPs (1E21)",
        "cutoff_threshold": 84,

        # Regression: ordinary least squares
        "reg_method": "ols",
    }

    ## Plot scaling curves
    plt.figure(figsize=(7.5, 4.5))
    _ = plot_scaling_predictions(
        lm_eval, x_metrics, y_metric,
        **setup_kwargs,
    )

We provide a simple guideline and minimal examples for selecting representative model subsets from available public models for low-cost scaling analyses (Sec 5 of the paper).
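A note on the `split_method` and `cutoff_threshold` settings in the usage example above: the cutoff is expressed in units of 1E21 FLOPs, so a threshold of 84 corresponds to 8.4E22 FLOPs. The sketch below shows what such a split could look like in plain pandas. The column name `FLOPs (1E21)`, the model names, and the choice to keep models at or below the cutoff in the training set are all assumptions for illustration; the repo's own split logic may differ.

```python
import pandas as pd

FLOPS_UNIT = 1e21       # unit implied by the "(1E21)" suffix in `split_method`
cutoff_threshold = 84   # in units of 1E21 FLOPs, i.e. 8.4e22 FLOPs
cutoff_flops = cutoff_threshold * FLOPS_UNIT

# Hypothetical models and compute values, for illustration only.
models = pd.DataFrame({
    "Model": ["small-a", "small-b", "mid-c", "large-d"],
    "FLOPs (1E21)": [1.2, 30.0, 84.0, 400.0],
})

# Assumption: models at or below the cutoff are used for fitting,
# larger models are held out to test extrapolation.
is_train = models["FLOPs (1E21)"] <= cutoff_threshold
train_models = models[is_train]
test_models = models[~is_train]

print(f"cutoff = {cutoff_flops:.1e} FLOPs")
print("train:", train_models["Model"].tolist())
print("test: ", test_models["Model"].tolist())
```

Fitting only on the models below the cutoff and evaluating on those above it is what lets the plot double as an extrapolation check rather than an in-sample fit.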

We have collected LLM evaluation metrics, either from standardized benchmarks or with unified evaluation protocols, and included them in eval_results/.

In particular, we included the base LLM benchmark results in base_llm_benchmark_eval.csv, which can be used for your scaling analyses.
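When combining these benchmark results with your own downstream results, an inner merge on `Model` silently drops any model whose name does not match exactly. The following sketch, using small made-up tables rather than the real CSV, shows one way to surface unmatched models with pandas before merging. Only the `Model` key comes from the usage example; the model names and values are hypothetical.

```python
import pandas as pd

# Hypothetical stand-ins for the benchmark table and a downstream eval table.
lm_benchmark_eval = pd.DataFrame({
    "Model": ["model-a", "model-b", "model-c"],
    "MMLU": [0.45, 0.52, 0.61],
})
downstream_eval = pd.DataFrame({
    "Model": ["model-a", "model-c", "model-d"],
    "your_downstream_metric": [0.10, 0.35, 0.40],
})

# An outer merge with `indicator=True` records where each row came from.
merged = pd.merge(
    lm_benchmark_eval, downstream_eval, on="Model", how="outer", indicator=True
)

# Rows present on only one side would be dropped by an inner merge.
unmatched = merged[merged["_merge"] != "both"]
print("unmatched models:", sorted(unmatched["Model"]))

# Keep only models with both benchmark and downstream results.
lm_eval = merged[merged["_merge"] == "both"].drop(columns="_merge")
```

Checking the unmatched list first makes naming mismatches (for example, differing organization prefixes or capitalization) visible before they shrink the set of models used in the fit.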

We have also filtered a set of sub-10B models that can run on a single A100 GPU for cheap scaling analyses at smaller scales; the results are included in base_llm_benchmark_eval_sub_10b.csv.

If you would like to add additional LLMs to your analyses, we suggest following our procedure described below:


- For standard benchmarks including MMLU, ARC-C, HellaSwag, Winograd, and TruthfulQA, we collect the results from the Open LLM Leaderboard with the following command:

      huggingface-cli download open-llm-leaderboard/results --repo-type dataset --local-dir-use-symlinks True --local-dir leaderboard_data/open-llm-leaderboard --cache-dir <CACHE_DIR>/open-llm-leaderboard-

  For models without existing evaluation results on the Open LLM Leaderboard, we follow its evaluation protocols and use the Eleuther AI Harness @b281b0921 to evaluate them.

- For coding benchmarks (HumanEval), we use the EvalPlus @477eab399 repo for a unified evaluation.

- For other benchmarks like XWinograd, we use the Eleuther AI Harness @f78e2da45 for a unified evaluation.

Feel free to make a pull request to contribute your collected data to our repo for future research!

We provide notebooks to reproduce the major results in the paper.

We provide all our collected data in eval_results.

    @article{ruan2024observational,
      title={Observational Scaling Laws and the Predictability of Language Model Performance},
      author={Ruan, Yangjun and Maddison, Chris J and Hashimoto, Tatsunori},
      journal={arXiv preprint arXiv:2405.10938},
      year={2024}
    }