AH-CoLT aims to provide an efficient, augmentative annotation tool that facilitates the creation of large labeled visual datasets. The toolbox implements a semi-automatic groundtruth generation framework for unlabeled images and videos. It enables accurate groundtruth labeling by feeding the outputs of state-of-the-art AI recognizers into a time-efficient human review-and-revise process.

So far, we have integrated the following 2D pose inference models into our toolbox:

- Hourglass: Single-person, 16-keypoint human body pose estimation in MPII fashion.

- Faster R-CNN: Single-person, 17-keypoint human body pose estimation in COCO fashion.

- FAN: Single-person, 68-point facial landmark estimation based on FAN's face alignment.

Contact:

Xiaofei Huang, Shaotong Zhu, Sarah Ostadabbas

- Requirements

- Main Annotation Function Selection

- Body Keypoints Estimation

- Facial Landmarks Estimation

- Citation

- License

- Acknowledgements

The toolbox interface is built with tkinter in Python 3.7 on Ubuntu 18.04. It has also been tested on Windows 10 with Python 3.7 on CPU.

- Install the following libraries:

(1) PyTorch

For Windows:

pip install torch==1.7.0+cpu torchvision==0.8.1+cpu torchaudio===0.7.0 -f https://download.pytorch.org/whl/torch_stable.html

For Ubuntu:

pip install torch==1.7.0+cu101 torchvision==0.8.1+cu101 torchaudio==0.7.0 -f https://download.pytorch.org/whl/torch_stable.html

(2) detectron2

For Windows:

git clone https://github.com/facebookresearch/detectron2.git

python -m pip install -e detectron2

For Ubuntu:

python -m pip install detectron2 -f https://dl.fbaipublicfiles.com/detectron2/wheels/cpu/torch1.6/index.html

(3) face-alignment

For Windows and Ubuntu:

pip install face-alignment

- Run pip install -r requirements.txt to install the other libraries.

- Download one of the pretrained models (e.g. the 8-stack hourglass model) and put the model folder into ./Models/Hourglass/data/mpii.

- Download a weights file for the YOLOv3 detector and place it into ./Models/Detection/data.

- Download one of the COCO Person Keypoint Detection models from the Detectron2 Model Zoo (e.g. keypoint_rcnn_R_50_FPN_3x) and put the file into ./Models/Detectron2/models.
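Before launching the toolbox, it can help to confirm that the downloaded models landed in the folders listed above. The following is a minimal sketch, not part of AH-CoLT: the helper name missing_model_dirs is hypothetical, and it only checks that each folder exists and is non-empty, not that the files inside are valid.

```python
from pathlib import Path

# Model folders named in the setup steps, relative to the toolbox root.
MODEL_DIRS = (
    "Models/Hourglass/data/mpii",
    "Models/Detection/data",
    "Models/Detectron2/models",
)


def missing_model_dirs(toolbox_root="."):
    """Return the expected model folders that are missing or empty.

    Hypothetical helper for illustration; AH-CoLT does not ship it.
    """
    root = Path(toolbox_root)
    missing = []
    for rel in MODEL_DIRS:
        folder = root / rel
        # A folder counts as ready only if it exists and holds at least one entry.
        if not folder.is_dir() or not any(folder.iterdir()):
            missing.append(rel)
    return missing


if __name__ == "__main__":
    for rel in missing_model_dirs():
        print(f"Missing or empty: {rel}")
```

Run it from the repository root; no output means all three folders contain at least one file or subfolder.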

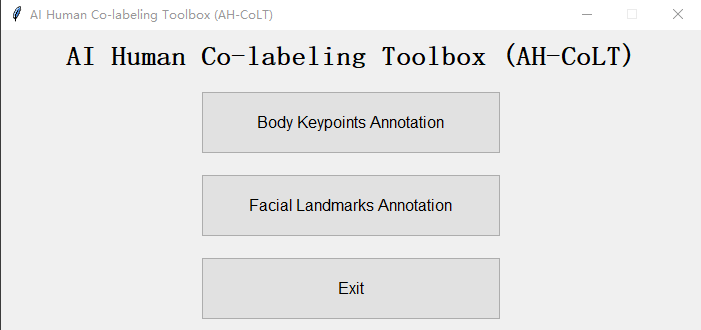

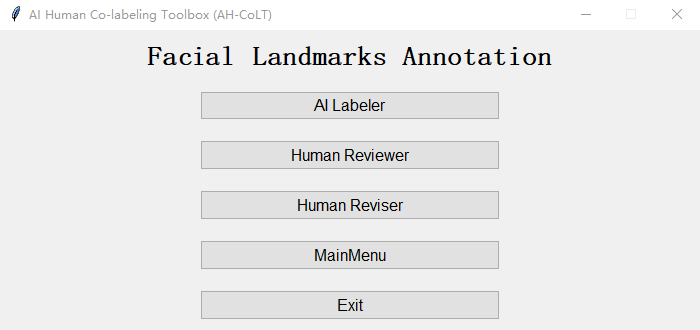

Run Toolbox.py to launch the main window of AH-CoLT. The first step is to choose the annotation subject: AH-CoLT provides both facial landmark annotation and body keypoint annotation.

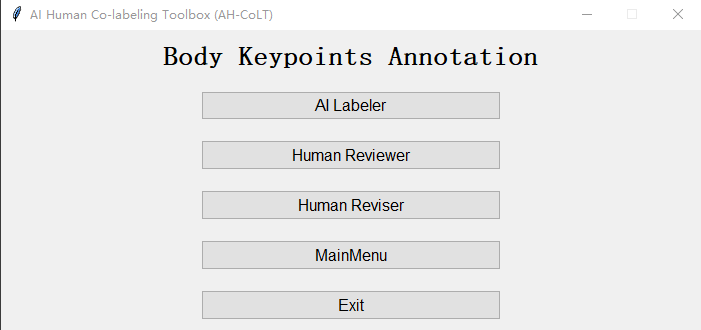

After you click either function button, the three-stage annotation process follows: AI Labeler, Human Reviewer, and Human Reviser. For convenience, each stage can be used independently.

The task selection window

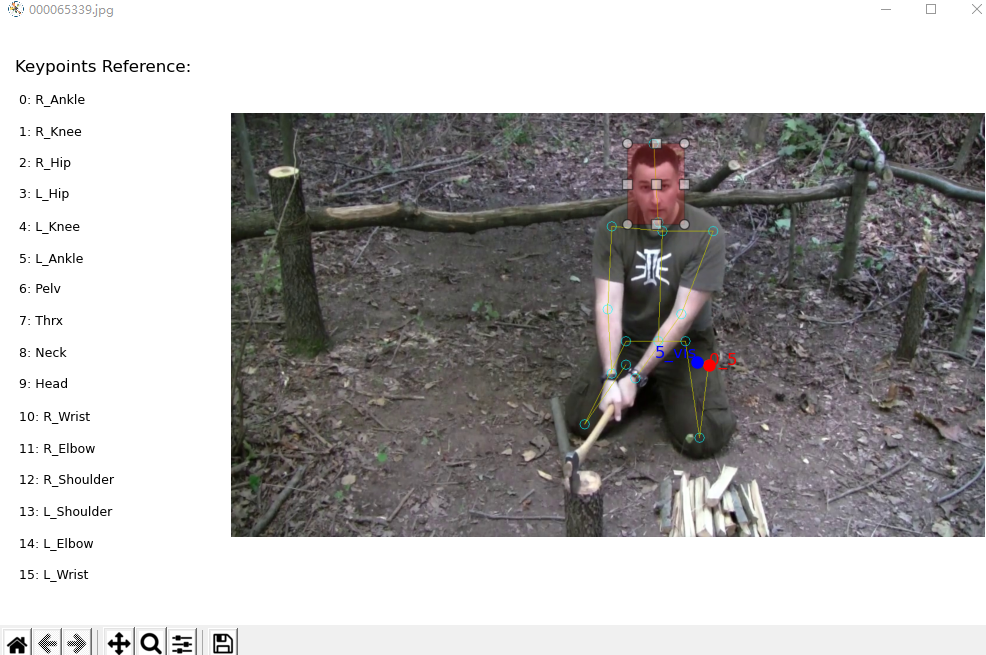

The Body Keypoints Annotation is mainly used to annotate the human body area for pose estimation.

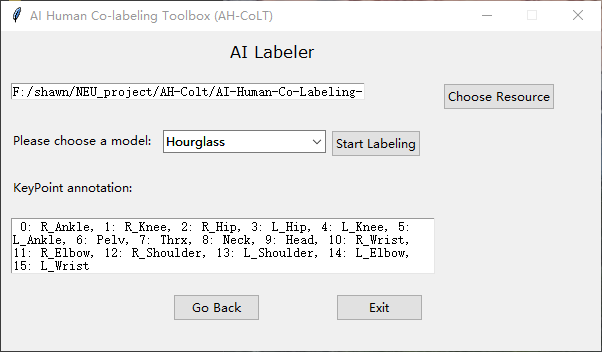

The AI Labeler interface lets users load a video or a collection of images as the unlabeled data source and select an appropriate pretrained model as the initial AI labeler. The types of labels output by the AI model are then displayed in the text box.

Model Selection:

- Hourglass: 16 MPII keypoints for single-person images.

- Faster R-CNN: 17 COCO keypoints for single-person images.
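For reference, the two label sets can be written out as index-to-name tables. This sketch uses the keypoint orders published with the MPII and COCO datasets; whether AH-CoLT stores keypoints in exactly this order is an assumption, and the names KEYPOINTS_BY_MODEL and keypoint_name are illustrative only.

```python
# Keypoint order as published with the MPII Human Pose dataset (16 joints).
MPII_KEYPOINTS = [
    "right_ankle", "right_knee", "right_hip", "left_hip", "left_knee",
    "left_ankle", "pelvis", "thorax", "upper_neck", "head_top",
    "right_wrist", "right_elbow", "right_shoulder", "left_shoulder",
    "left_elbow", "left_wrist",
]

# Keypoint order as published with the COCO keypoint task (17 joints).
COCO_KEYPOINTS = [
    "nose", "left_eye", "right_eye", "left_ear", "right_ear",
    "left_shoulder", "right_shoulder", "left_elbow", "right_elbow",
    "left_wrist", "right_wrist", "left_hip", "right_hip",
    "left_knee", "right_knee", "left_ankle", "right_ankle",
]

# Assumption: the model names shown in the selection list map to these sets.
KEYPOINTS_BY_MODEL = {
    "Hourglass": MPII_KEYPOINTS,
    "Faster R-CNN": COCO_KEYPOINTS,
}


def keypoint_name(model, index):
    """Look up the dataset-convention name for a keypoint index."""
    return KEYPOINTS_BY_MODEL[model][index]
```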

The "AI Labeler" window

Choose a directory containing an image set, or a video file. Each image can be a .jpeg or .png file. The video file can be in MP4, AVI, or MOV format. For example, an image directory:

${ROOT}/img

- If the resource is a video, the corresponding frames are first generated in the folder ${ROOT}/video_name, which is named after the video file.

- Predicted keypoints of all images/frames are saved in dirname_model.pkl under the root path. Here dirname represents the name of the image/frame set and model is the abbreviation of the selected model's name. For example:

${ROOT}/img_hg.pkl
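The naming rules above can be sketched with pathlib. This is an illustration under stated assumptions, not the toolbox's own code: the function names are hypothetical, and the root path is taken to be the parent of the image folder or video file, as the example paths suggest.

```python
from pathlib import Path


def frames_dir_for(video_path):
    """Folder where extracted frames would go: same root, named after the video."""
    video = Path(video_path)
    return video.parent / video.stem


def prediction_file_for(data_dir, model_abbrev):
    """Path of dirname_model.pkl, placed next to the image/frame folder."""
    data = Path(data_dir)
    return data.parent / f"{data.name}_{model_abbrev}.pkl"


if __name__ == "__main__":
    print(frames_dir_for("/data/clip.mp4"))
    print(prediction_file_for("/data/img", "hg"))
```

The abbreviation hg is taken from the example path above; abbreviations for other models should be read from the files the toolbox actually writes.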

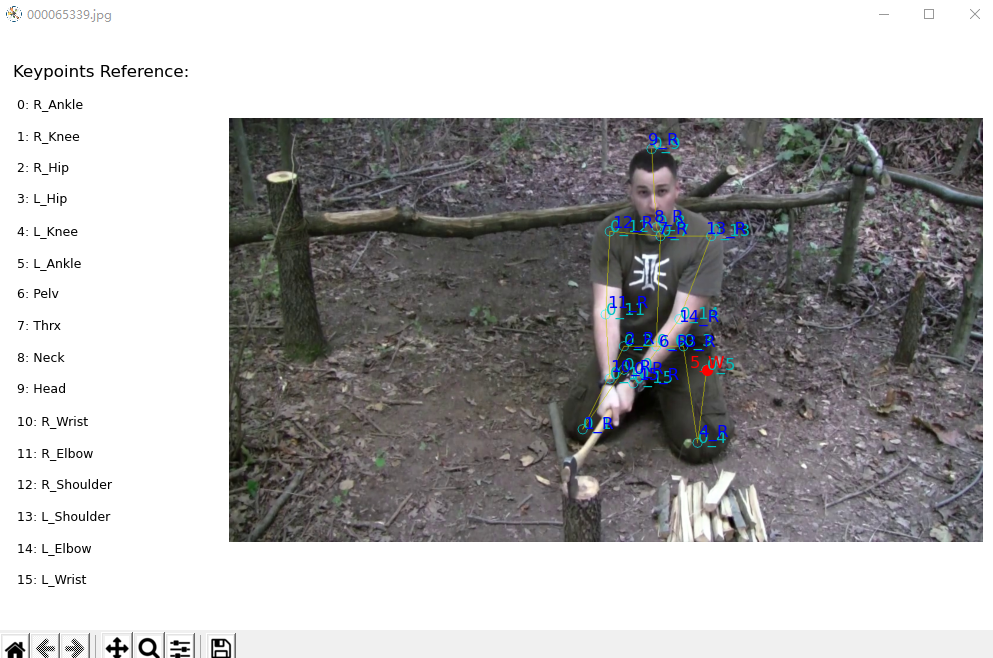

In this stage, the output of the AI labeler is given to a human reviewer for evaluation. The reviewer needs to check the keypoints in order. The inputs are:

- Folder of the image/frame set.

- AI-predicted keypoint file, generated by the AI Labeler.

When all images have been reviewed, a flag list file is saved in dirname_flag.pkl under the root path. Here dirname represents the name of the image/frame set. For example:

${ROOT}/img_flag.pkl

The format of labels, which represent the AI predicted keypoints, is poseindex_keypointindex.
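A label in this format can be split into its two indices as follows. This is a minimal sketch; the function names are hypothetical, and whether the toolbox counts indices from 0 or 1 is not stated here, so the code makes no assumption about it.

```python
def parse_label(label):
    """Split a 'poseindex_keypointindex' label into two integers."""
    pose_text, keypoint_text = label.split("_")
    return int(pose_text), int(keypoint_text)


def format_label(pose_index, keypoint_index):
    """Build the on-screen label for a keypoint of a given pose."""
    return f"{pose_index}_{keypoint_index}"


if __name__ == "__main__":
    print(parse_label("0_12"))
```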

| Operation | Description |
|---|---|
| Click left button of mouse | Accept current predicted keypoint |
| Click right button of mouse | Reject current predicted keypoint |
| Press 'i' on keyboard | Insert a keypoint |
| Press 'd' on keyboard | Delete current keypoint |
| Press 'u' on keyboard | Undo |
| Press 'y' on keyboard | Confirm reviewing of current image (only works after all keypoints have been checked) |
| Press 'n' on keyboard | Recheck current image |

Note: By default, all keypoints are treated as visible. If the visibility of each keypoint matters, mark occluded keypoints as errors so that they can be annotated as invisible.
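The review rules in the table (accept, reject, undo, and confirm only once every keypoint has been checked) can be modeled as a small state object. This is a sketch of the logic only, not the toolbox's tkinter code: the class ImageReview is hypothetical, the insert and delete operations are left out, and storing flags as a list of booleans is an assumption about the flag file's contents.

```python
class ImageReview:
    """Tracks accept/reject decisions for the keypoints of one image, in order."""

    def __init__(self, num_keypoints):
        self.num_keypoints = num_keypoints
        self.flags = []  # True = accepted, False = rejected (needs revising)

    def accept(self):
        """Left click: accept the current predicted keypoint."""
        self._record(True)

    def reject(self):
        """Right click: reject the current predicted keypoint."""
        self._record(False)

    def undo(self):
        """'u': drop the most recent decision, if any."""
        if self.flags:
            self.flags.pop()

    def can_confirm(self):
        """'y' is only meaningful once every keypoint has a decision."""
        return len(self.flags) == self.num_keypoints

    def _record(self, accepted):
        if self.can_confirm():
            raise ValueError("all keypoints have already been checked")
        self.flags.append(accepted)


if __name__ == "__main__":
    review = ImageReview(num_keypoints=3)
    review.accept()
    review.reject()
    print(review.can_confirm(), review.flags)
```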

In this stage, only the AI model errors flagged by the human reviewer need to be revised. The inputs are:

- Folder of the image/frame set.

- AI-predicted keypoint file, generated by the AI Labeler.

- Corresponding flag list file, generated by the Human Reviewer.

When all images have been revised, the groundtruth is saved in dirname_gt.pkl under the root path. Here dirname represents the name of the image/frame set. For example:

${ROOT}/img_gt.pkl
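Because each stage reads the previous stage's file and can be run independently, the three output files indicate how far a dataset has progressed. The sketch below derives the file names from the naming rules described for each stage and reports the first stage whose output is missing. The helper names are hypothetical, and the files are only checked for existence, not opened.

```python
from pathlib import Path


def stage_files(data_dir, model_abbrev):
    """Map each stage to the output file it would write under the root path."""
    data = Path(data_dir)
    root, name = data.parent, data.name
    return {
        "AI Labeler": root / f"{name}_{model_abbrev}.pkl",
        "Human Reviewer": root / f"{name}_flag.pkl",
        "Human Reviser": root / f"{name}_gt.pkl",
    }


def next_stage(data_dir, model_abbrev):
    """Return the first stage whose output file is missing, or None if all exist."""
    for stage, output in stage_files(data_dir, model_abbrev).items():
        if not output.exists():
            return stage
    return None


if __name__ == "__main__":
    print(next_stage("./img", "hg"))
```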

The keypoints that need to be revised are displayed in red. After correcting all keypoints marked as errors in an image, the human reviser needs to capture the head bounding box by pressing and releasing the left mouse button to draw a pink rectangle.

| Operation | Description |
|---|---|
| Click left button of mouse | Capture the new position of red keypoint and set as 'visible' |
| Click right button of mouse | Capture the new position of red keypoint and set as 'invisible' |
| Press 'u' on keyboard | Undo |
| Hold and release left button of mouse | Create a rectangle box |
| Press 'y' on keyboard | Confirm revising of current image (only works after all keypoints have been revised and the bounding box has been captured) |

Note: In MPII fashion and for the 68 facial landmarks, the rectangle marks the head bounding box; in COCO fashion, it marks the body bounding box.
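A rectangle drawn by press and release can start from any corner, so the two points usually need to be normalized before they are stored as a box. The following is a minimal sketch of that step; the function name and the (x_min, y_min, x_max, y_max) layout are assumptions, not the format AH-CoLT writes to the groundtruth file.

```python
def box_from_drag(press, release):
    """Turn the press and release points of a drag into (x_min, y_min, x_max, y_max)."""
    (x0, y0), (x1, y1) = press, release
    # Sorting each axis makes the result independent of the drag direction.
    return (min(x0, x1), min(y0, y1), max(x0, x1), max(y0, y1))


if __name__ == "__main__":
    # Dragging from the upper right to the lower left still yields a valid box.
    print(box_from_drag((120, 80), (40, 200)))
```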

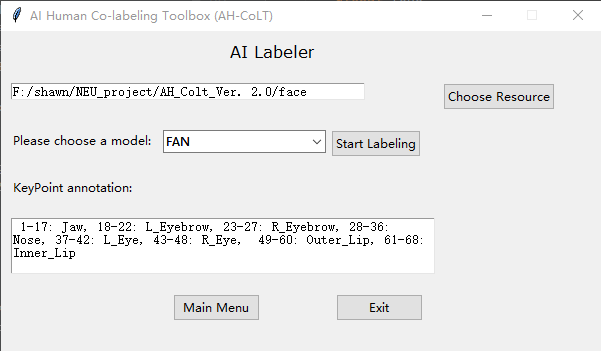

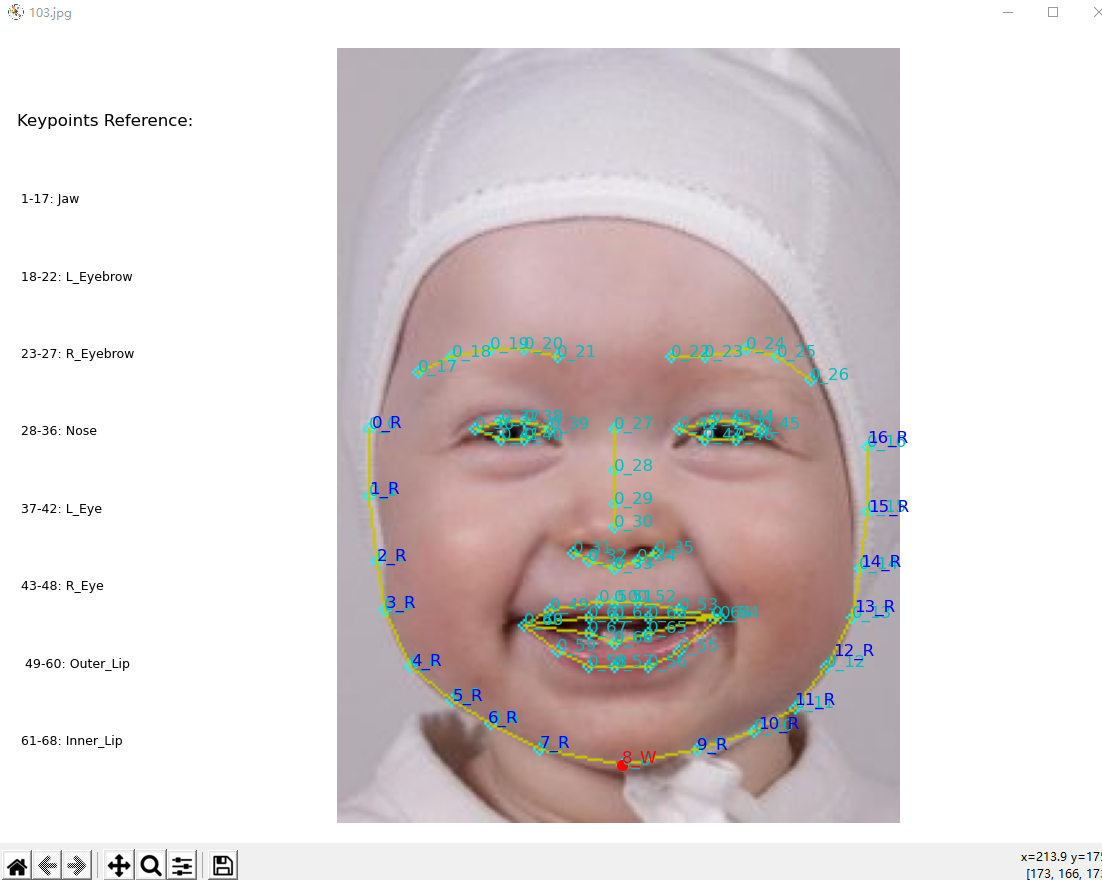

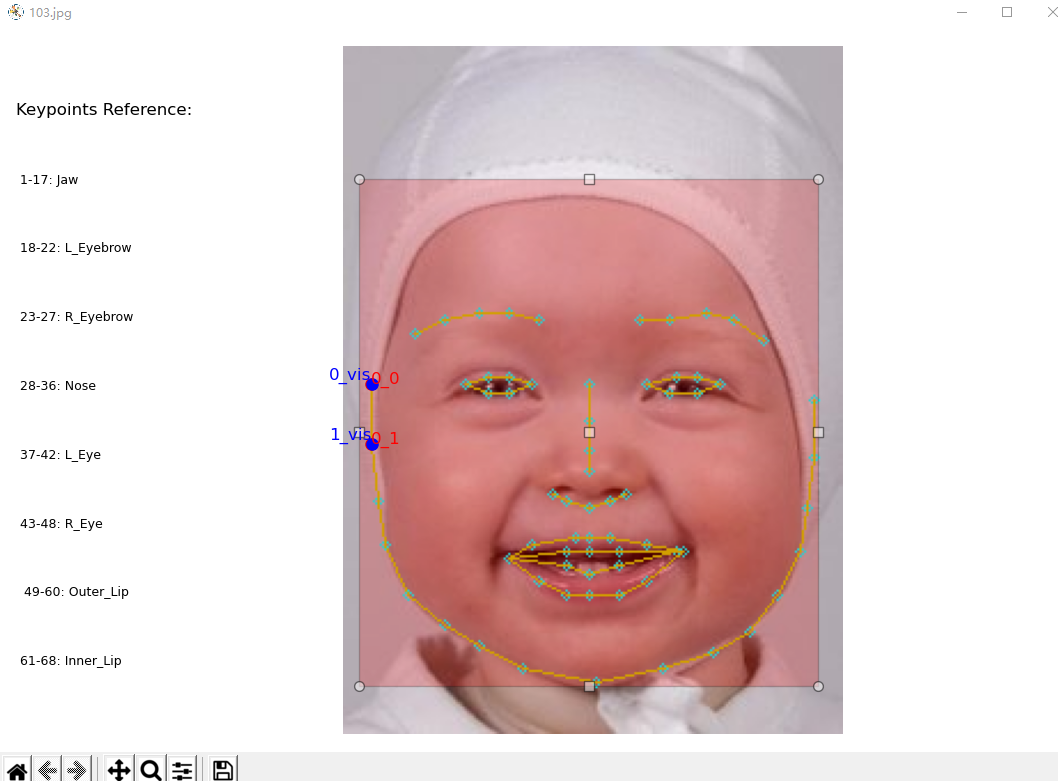

The Facial Landmarks Annotation is mainly used to annotate the facial area.

The AI Labeler interface lets users load a video or a collection of images as the unlabeled data source and select an appropriate pretrained model as the initial AI labeler. The types of labels output by the AI model are then displayed in the text box.

Model Selection:

- FAN: 68 facial landmarks for single-person images.
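When reviewing 68 landmarks, it helps to know which facial region an index belongs to. The sketch below assumes the output follows the widely used 68-point markup (the ordering used by the 300-W annotations), with 0-based indices and left/right given from the subject's point of view. That the toolbox keeps this ordering is an assumption, and the names LANDMARK_GROUPS and landmark_group are illustrative.

```python
# Index ranges of the common 68-point facial landmark markup (0-based).
LANDMARK_GROUPS = {
    "jaw": range(0, 17),
    "right_eyebrow": range(17, 22),
    "left_eyebrow": range(22, 27),
    "nose": range(27, 36),
    "right_eye": range(36, 42),
    "left_eye": range(42, 48),
    "mouth": range(48, 68),
}


def landmark_group(index):
    """Return the facial region that a landmark index falls into."""
    for name, indices in LANDMARK_GROUPS.items():
        if index in indices:
            return name
    raise ValueError(f"landmark index out of range: {index}")


if __name__ == "__main__":
    print(landmark_group(30))
```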

The "AI Labeler" window

Choose a directory containing an image set, or a video file. Each image can be a .jpeg or .png file. The video file can be in MP4, AVI, or MOV format. For example, an image directory:

${ROOT}/face

- If the resource is a video, the corresponding frames are first generated in the folder ${ROOT}/video_name, which is named after the video file.

- Predicted keypoints of all images/frames are saved in dirname_model.pkl under the root path. Here dirname represents the name of the image/frame set and model is the abbreviation of the selected model's name. For example:

${ROOT}/face_fan.pkl

In this stage, the output of the AI labeler is given to a human reviewer for evaluation. The reviewer needs to check the keypoints in order. The inputs are:

- Folder of the image/frame set.

- AI-predicted keypoint file, generated by the AI Labeler.

When all images have been reviewed, a flag list file is saved in dirname_flag.pkl under the root path. Here dirname represents the name of the image/frame set. For example:

${ROOT}/face_flag.pkl

The format of labels, which represent the AI predicted keypoints, is poseindex_keypointindex.

| Operation | Description |
|---|---|
| Click left button of mouse | Accept current predicted keypoint |
| Click right button of mouse | Reject current predicted keypoint |
| Press 'i' on keyboard | Insert a keypoint |
| Press 'd' on keyboard | Delete current keypoint |
| Press 'u' on keyboard | Undo |
| Press 'y' on keyboard | Confirm reviewing of current image (only works after all keypoints have been checked) |
| Press 'n' on keyboard | Recheck current image |

Note: By default, all keypoints are treated as visible. If the visibility of each keypoint matters, mark occluded keypoints as errors so that they can be annotated as invisible.

In this stage, only the AI model errors flagged by the human reviewer need to be revised. The inputs are:

- Folder of the image/frame set.

- AI-predicted keypoint file, generated by the AI Labeler.

- Corresponding flag list file, generated by the Human Reviewer.

When all images have been revised, the groundtruth is saved in dirname_gt.pkl under the root path. Here dirname represents the name of the image/frame set. For example:

${ROOT}/face_gt.pkl

The keypoints that need to be revised are displayed in red. After correcting all keypoints marked as errors in an image, the human reviser needs to capture the head bounding box by pressing and releasing the left mouse button to draw a pink rectangle.

| Operation | Description |
|---|---|
| Click left button of mouse | Capture the new position of red keypoint and set as 'visible' |
| Click right button of mouse | Capture the new position of red keypoint and set as 'invisible' |
| Press 'u' on keyboard | Undo |
| Hold and release left button of mouse | Create a rectangle box |
| Press 'y' on keyboard | Confirm revising of current image (only works after all keypoints have been revised and the bounding box has been captured) |

Note: In MPII fashion and for the 68 facial landmarks, the rectangle marks the head bounding box; in COCO fashion, it marks the body bounding box.

@inproceedings{huang2019ah,
  title={AH-CoLT: an AI-Human Co-Labeling Toolbox to Augment Efficient Groundtruth Generation},
  author={Huang, Xiaofei and Rezaei, Behnaz and Ostadabbas, Sarah},
  booktitle={2019 IEEE 29th International Workshop on Machine Learning for Signal Processing (MLSP)},
  pages={1--6},
  year={2019},
  organization={IEEE}
}

- This code is for non-commercial purposes only.

- For other uses, please contact the Augmented Cognition Lab (ACLab) at Northeastern University.

- The person detector is brought from pytorch-yolo-v3, which is based on YOLOv3: An Incremental Improvement.

- The hourglass pose estimation for AI Labeler comes from pytorch-pose.

- The Faster R-CNN pose estimation for AI Labeler comes from Detectron2.

- The facial landmarks detector comes from face-alignment.