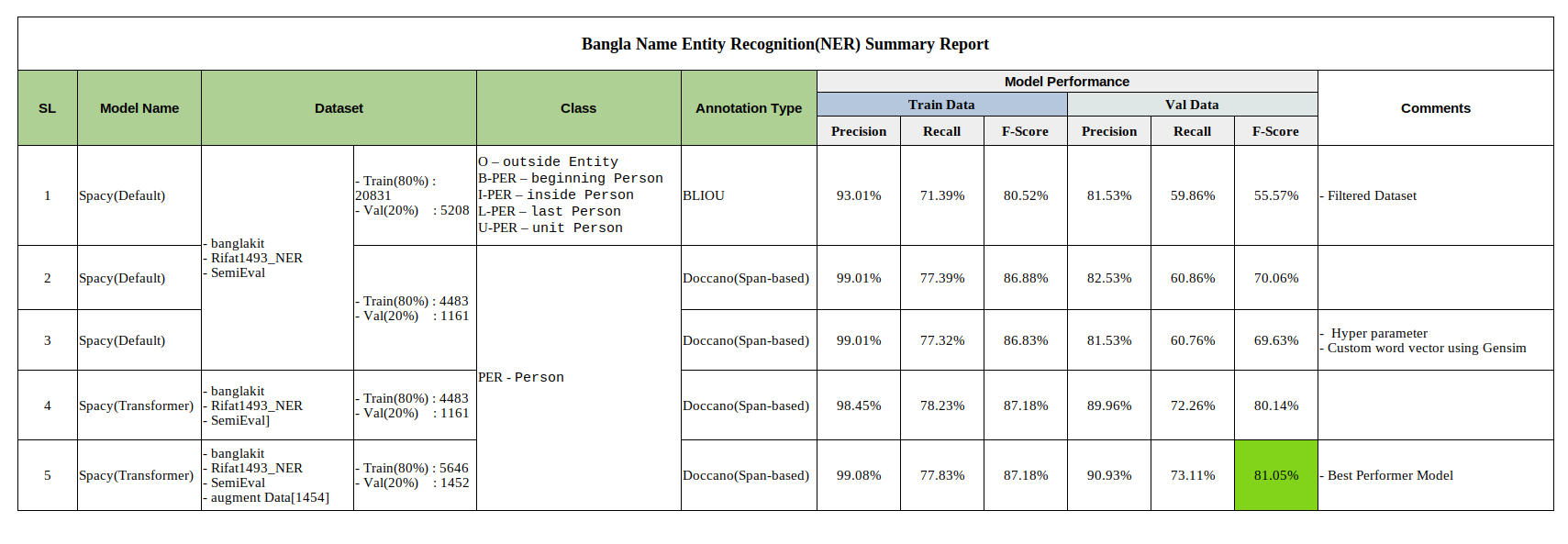

Bangla Name Entity Recognition (NER) is extracting human names from input Bangla string or text. To solve this problem select Spacy pipeline and try 5 experimental approaches.

The experiment is done only using one entity name (person) labeled as PER. After completing the experiment we got the best performance from the spacy transformer-based model.

For more detail please check the experimental details The best model F1 score is ~.81.05.

## Table of Contents

1. Installation

2. Dataset and Data Processing

3. Model Building

4. Model Evaluation

5. Inference

6. Docker

7. End Point API

8. Bert Pipeline[New Experiments]

9. References

conda create -n bn_ner python=3.8

pip install -r requirements.txt

N.B: if raise CuPy error, install pip install CuPy==12.3.0 version for GPU acceleration.

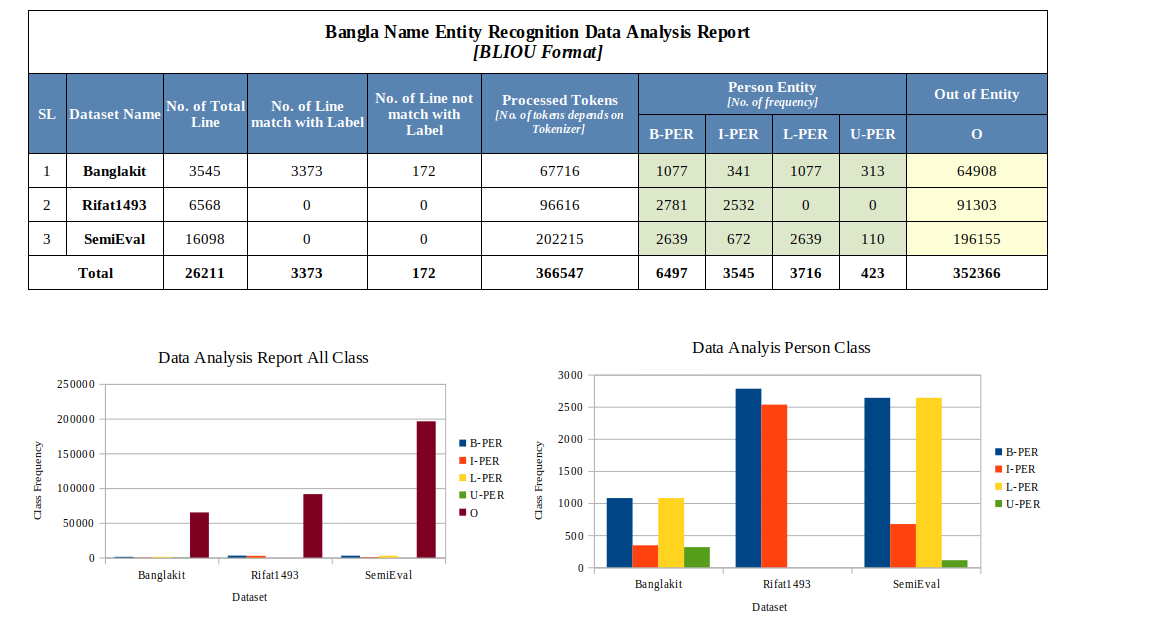

2.1 EDA Report

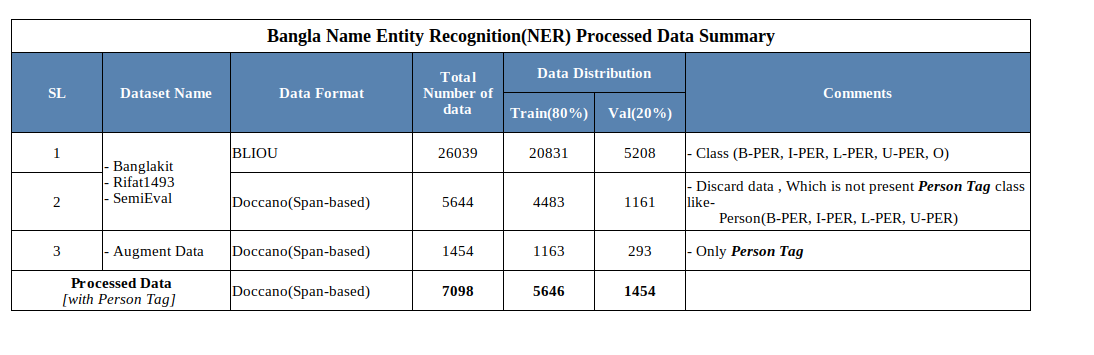

2.2 Processed Data Summary

2.3 Data Collection Information

2.4 Data Annotation Information

2.5 Data Processing

Check the training/utils folder for the Data processing script.

Bangla NER data is collected from,

-

banglakit Bangla NER Dataset Link

-

Rifat1493 Bangla NER Dataset Link

-

SemEval2022 Bangla NER Dataset Link

raw dataset structure into raw_data folder,

raw_data

├── banglakit

│ └── main.jsonl

├── Rifat1493_NER_txt

│ └── all_data.txt

└── SemiEval

├── bn_dev.json

├── bn_train.json

└── statistics.md

Please Check the annotation structure and information about the Bangla NER dataset check

Data processing approach,

2.5.1 Raw Data Processing

2.5.2 Span Based Data Processing(Doccano NER format)

2.5.3 Augment Data Generation

2.5.4 Preparing Training Spacy Format

Raw data processing steps:

- Exclude other classes without PERSON Class(PER)

- Discard the dataset not matching with label

- Clean IOB and remove data that is in the wrong IOB format

- Minimal data removal which is logically valid and matching token.

- BLIOU Annotation checking and correction

- Data Distribution training (80%) and validation (20%)

- Randomly data shuffle - 4 times

Get for information about BLIOU format please check Example

For processing steps please check the script utils/data_processing.py line number 443 to 445 dataset path directory,

input:

# make sure the input dataset path

banglakit= "./data/raw_data/banglakit/main.jsonl"

rifat1493 = "./data/raw_data/Rifat1493_NER_txt/all_data.txt"

semieval = "./data/raw_data/SemiEval"

Register the data line number 453,

register_dataset = {

"BanglakitNER" : banglakit,

"Rifat1493BnNER" : rifat1493,

"SemiEvalBnNER" : semieval_files

}N.B: if any dataset is not possible to collect, avoid the data assigning into the register field. data will be processed which have registered.

Run

python utils/data_processing.py

Output,

No. of Training data: 20831

No. of Validation data: 5208

Data Save directory,

data

└── ner_bliou_processed_data

├── train.json

└── val.jsonMake sure input and output path directory into script python utils/conversion_bliou_to_span_format.pyline number 201,

#make sure the input path directory

data_path = "./data/ner_bliou_processed_data"

#make sure output path directory

output_dir = "./data/ner_spanbased_process_data"output :

processed file : ./data/ner_spanbased_process_data/train.jsonl

Total number of lines: 20831

Person Tag Found: 4483

processed file : ./data/ner_spanbased_process_data/val.jsonl

Total number of lines: 5208

Person Tag Found: 1161Annotation structure,

{"text": "অ্যাভনার ফ্রিডম্যান (জন্ম ১৯৩২), আংশিক পার্থক্য সমীকরণ", "label": [[0, 19, "PER"]]}

{"text": "এটি ১৯১৪ সালে এডওয়ার্ড মেয়ারিক দ্বারা বর্ণনা করা হয়েছিল।", "label": [[14, 32, "PER"]]}

{"text": "আমার কাছে একটি সুদৃশ্য ল্যাপ স্টিল ছিল যা ডেভিড লিন্ডলি বেছে নিয়েছিল।", "label": [[42, 55, "PER"]]}

For more details about the Doccano annotation format please check link

Generate augment data 25.79% compared with original data[5644]. So the number of augmented data is 1454 and distributed as training[80%] 1163 and validation[20%] 293.

Proposed Approach,

- Scraping human name data from several websites

- Replace the name into the text string using span position(starting and ending) and update the IOU span of the text.

Explanation,

Original Data,

{"text": "তিনি মোহাম্মদ বাকির আল-সদর এর ছাত্র ছিলেন।", "label": [[5, 26, "PER"]]}

Augmented Data,

{"text": "তিনি সাইফুল ইসলাম এর ছাত্র ছিলেন।", "label": [[5, 17, "PER"]]}

replace the the human name মোহাম্মদ বাকির আল-সদর using name সাইফুল ইসলাম and generate augment data.

Convert process data to spacy format and make sure data path into script utils/conversion_spacy_format.py,

# input path dir

input_dir = "./data/ner_spanbased_process_data"

# output path dir

output_dir = "./data/ner_spanbased_process_data"Output:

# convert train data to spacy format and save the dir

Spacy Processed file : ./data/ner_spanbased_process_data/train.spacy

No. of Processed line: 4483

# None span found

No. of Skip Entity: 11

# convert val data to spacy format and save the dir

Spacy Processed file : ./data/ner_spanbased_process_data/val.spacy

No. of Processed line: 1146

# None span found

No. of Skip Entity : 3check the annotation visualization notebook

All the processing is complete, let's accelerate the model training.

N.B: if you want to train the spacy ner pipeline using BLIOU format, run below the command,

Data conversion command for BLIOU, Convert `BLIOU` JSON format to `.spacy` data format

python -m spacy convert data/bangla_ner_data/train.json ./data

python -m spacy convert data/bangla_ner_data/val.json ./data

Please check the training strategy and procedure here

or colab know about into colab check,

Model Performance Summary

For more details check report

Make sure the data annotation path and run the eval.py script.

N.B: If any issue occurs for model download, please download manually from here and place it into ```inference/models folder

python eval.py

For more information check The experimental details

For the inference, run the inference.py script the model will download from huggingface and save it in the "inference/models" folder. for manual downloading, check the Model Evaluation N.B section.

run,

python inference.pyOr Check the colab for instance inference,

Code Example,

import spacy

# Load English tokenizer, tagger, parser and NER

nlp = spacy.load("models/bangla_ner_model/model-best")

text_list = [

"আব্দুর রহিম নামের কাস্টমারকে একশ টাকা বাকি দিলাম",

"নতুন বছরে জ্বলছেন আরও একজন—রজার ফেদেরার ।",

"ডিপিডিসির স্পেশাল টাস্কফোর্সের প্রধান মুনীর চৌধুরী জানান",

"তিনি মোহাম্মদ বাকির আল-সদর এর ছাত্র ছিলেন।",

"লিশ ট্র্যাক তৈরির সময় বেশ কয়েকজন শিল্পীর দ্বারা অনুপ্রাণিত হওয়ার কথা স্মরণ করেন, বিশেষ করে ফ্রাঙ্ক সিনাত্রা ।",

]

for text in text_list:

doc = nlp(text)

print(f"Input: {text}")

for entity in doc.ents:

print(f"Entity: {entity.text}, Label: {entity.label_}")

print("---")Ouptut

Input: আব্দুর রহিম নামের কাস্টমারকে একশ টাকা বাকি দিলাম

Entity: আব্দুর রহিম, Label: PER

---

Input: নতুন বছরে জ্বলছেন আরও একজন—রজার ফেদেরার ।

---

Input: ডিপিডিসির স্পেশাল টাস্কফোর্সের প্রধান মুনীর চৌধুরী জানান

Entity: মুনীর চৌধুরী, Label: PER

---

Input: তিনি মোহাম্মদ বাকির আল-সদর এর ছাত্র ছিলেন।

Entity: মোহাম্মদ বাকির আল-সদর, Label: PER

---

Input: লিশ ট্র্যাক তৈরির সময় বেশ কয়েকজন শিল্পীর দ্বারা অনুপ্রাণিত হওয়ার কথা স্মরণ করেন, বিশেষ করে ফ্রাঙ্ক সিনাত্রা ।

Entity: ফ্রাঙ্ক সিনাত্রা, Label: PEROr

inference notebook check

For docker install please check

sudo docker build -t bangla_ner.

Check the Docker image and image ID,

sudo docker images

Output,

REPOSITORY TAG IMAGE ID CREATED SIZE

bangla_ner latest c34a96d16f48 4 minutes ago ...GB

For CPU run,

# run it first

sudo docker run -it -p 5000:5000 bangla_ner /bin/bash

# go inference directory

cd inference/

# run

python3 app.pyAfter running the app file output shows IP-Address looks like,

* Running on all addresses (0.0.0.0)

* Running on http://127.0.0.1:8008

* Running on http://172.17.0.2:8008N.B: For API request and docker inference, Take the last ip and change the End Point API request url http://localhost:8008/ner" using this http://172.17.0.2:8008. this approch only for inside docker API request purposes. otherwise no need to change End Point API

Some instruction as inference section. run app.py model will download and the server will run.

For more details about API requests, please check

Bert Model F1 score is ~.80

Inference Pipeline : huggingface

Training Pipeline :

import torch

from transformers import BertTokenizerFast, BertConfig, BertForTokenClassification

device = 'cuda' if torch.cuda.is_available() else 'cpu'

MAX_LEN = 512

labels_to_ids = {'O': 0, 'B-PER': 1, 'L-PER': 2, 'I-PER': 3, 'U-PER': 4}

ids_to_labels = {value:key for key, value in labels_to_ids.items()}

tokenizer = BertTokenizerFast.from_pretrained('saiful9379/BanglaNER_BERT')

model = BertForTokenClassification.from_pretrained('saiful9379/BanglaNER_BERT', num_labels=len(labels_to_ids))

model.to(device)

text_list = [

": আল স্কিনিয়ার গিটার, পিয়ানো, ভোকাল, মগ সিনথেসাইজার",

"আব্দুর রহিম নামের কাস্টমারকে একশ টাকা বাকি দিলাম",

"রহিম নামের কাস্টমারকে একশ টাকা বাকি দিলাম",

"সাইফুল ইসলাম ন্যাচারাল ল্যাঙ্গুয়েজে প্রসেসিং খুব বেশি ভালো পারে না । তাই সে বেশি বেশি স্টাডি করতেছে।",

"ডিপিডিসির স্পেশাল টাস্কফোর্সের প্রধান মুনীর চৌধুরী জানান",

"তিনি মোহাম্মদ বাকির আল-সদর এর ছাত্র ছিলেন।",

"লিশ ট্র্যাক তৈরির সময় বেশ কয়েকজন শিল্পীর দ্বারা অনুপ্রাণিত হওয়ার কথা স্মরণ করেন, বিশেষ করে ফ্রাঙ্ক সিনাত্রা ।",

]

def inference(sentence):

inputs = tokenizer(sentence, padding='max_length', truncation=True, max_length=MAX_LEN, return_tensors="pt")

# move to gpu

ids = inputs["input_ids"].to(device)

mask = inputs["attention_mask"].to(device)

# forward pass

outputs = model(ids, mask)

logits = outputs[0]

active_logits = logits.view(-1, model.num_labels) # shape (batch_size * seq_len, num_labels)

flattened_predictions = torch.argmax(active_logits, axis=1) # shape (batch_size*seq_len,) - predictions at the token level

tokens = tokenizer.convert_ids_to_tokens(ids.squeeze().tolist())

token_predictions = [ids_to_labels[i] for i in flattened_predictions.cpu().numpy()]

wp_preds = list(zip(tokens, token_predictions)) # list of tuples. Each tuple = (wordpiece, prediction)

word_level_predictions = []

for pair in wp_preds:

if (pair[0].startswith(" ##")) or (pair[0] in ['[CLS]', '[SEP]', '[PAD]']):

# skip prediction

continue

else:

word_level_predictions.append(pair[1])

str_rep = " ".join([t[0] for t in wp_preds if t[0] not in ['[CLS]', '[SEP]', '[PAD]']]).replace(" ##", "")

print(str_rep)

print(wp_preds)

print(word_level_predictions)

print("="*30)N.B : BERT Pipeline add readme (13/12/2023)