Vision Transformer (ViT) is a neural network architecture introduced for image classification. Unlike traditional convolutional neural networks (CNNs), which rely on convolutions to extract local features, ViT splits an image into fixed-size patches, treats the patches as a sequence of tokens, and uses self-attention so that every patch can attend to every other patch. This lets the model extract global features for classification.
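The core idea can be sketched in plain Python. This is a simplified illustration, not this repository's model. It splits a small grayscale image into patches and runs single-head scaled dot-product self-attention over them, with the query, key, and value projections fixed to the identity. A real ViT learns those projections, adds a linear patch embedding, a class token, and position embeddings, and stacks many such layers. The helper names are hypothetical.

```python
import math

def image_to_patches(image, patch_size):
    """Split an H x W grayscale image (list of rows) into flattened,
    non-overlapping patch_size x patch_size patches, in row-major order."""
    h, w = len(image), len(image[0])
    assert h % patch_size == 0 and w % patch_size == 0, "image must tile evenly"
    patches = []
    for top in range(0, h, patch_size):
        for left in range(0, w, patch_size):
            patches.append([image[r][c]
                            for r in range(top, top + patch_size)
                            for c in range(left, left + patch_size)])
    return patches

def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(tokens):
    """Single-head scaled dot-product self-attention with identity Q/K/V
    projections (a simplification: real ViT layers learn these matrices)."""
    d = len(tokens[0])
    scale = math.sqrt(d)
    outputs, weights = [], []
    for q in tokens:
        # Score this patch against every patch in the image (global context).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in tokens]
        attn = softmax(scores)
        weights.append(attn)
        # Output is the attention-weighted sum of all value vectors.
        outputs.append([sum(a * v[j] for a, v in zip(attn, tokens)) for j in range(d)])
    return outputs, weights

# Example: a 4x4 image cut into four 2x2 patches.
img = [[float(r * 4 + c) for c in range(4)] for r in range(4)]
out, w = self_attention(image_to_patches(img, 2))
```

Each row of the returned attention weights covers every patch and sums to 1, which is what "global" means here: a patch's output mixes information from the whole image, not only from a local neighborhood.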

Containerize the training and inference pipeline using .

```bash
pip install torch==1.11.0+cu113 torchvision==0.12.0+cu113 -f https://download.pytorch.org/whl/torch_stable.html
pip install transformers==4.22.1
```

The dataset contains 4 classes:

1) berry

2) bird

3) dog

4) flower
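Training code typically needs a fixed mapping between these class names and integer ids. A minimal sketch, assuming the ids follow alphabetical folder order (the convention torchvision's `ImageFolder` uses); the helper name is hypothetical. The resulting dictionaries have the shape expected by the `id2label` and `label2id` arguments that Hugging Face `ViTForImageClassification.from_pretrained` forwards to the model config.

```python
CLASS_NAMES = ["berry", "bird", "dog", "flower"]

def build_label_maps(class_names):
    """Map class names to integer ids and back, using sorted (alphabetical) order."""
    names = sorted(class_names)
    id2label = {i: name for i, name in enumerate(names)}
    label2id = {name: i for i, name in id2label.items()}
    return id2label, label2id

id2label, label2id = build_label_maps(CLASS_NAMES)
print(id2label)  # {0: 'berry', 1: 'bird', 2: 'dog', 3: 'flower'}
```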

Some class folders contain outliers; for example, the berry folder includes images of other objects. Identifying these images allows us to remove the outliers.
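The README does not say how the outliers are removed once identified. One simple approach, sketched here under the assumption that the outlier images have been listed by hand as paths relative to the dataset root, is to move them into a separate folder rather than delete them, so each decision can be reviewed or undone. The function name and example paths are hypothetical.

```python
import shutil
from pathlib import Path

def quarantine_outliers(dataset_root, outlier_paths, quarantine_root):
    """Move manually identified outlier images out of the dataset.

    outlier_paths are relative to dataset_root, e.g. "train/berry/img_001.jpg"
    (hypothetical filenames). Files are moved, not deleted, so they can be
    restored later. Returns the relative paths that were actually moved.
    """
    dataset_root, quarantine_root = Path(dataset_root), Path(quarantine_root)
    moved = []
    for rel in outlier_paths:
        src = dataset_root / rel
        if not src.is_file():
            continue  # already removed, or the path was mistyped
        dst = quarantine_root / rel
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.move(str(src), str(dst))
        moved.append(rel)
    return moved
```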

```
dataset
├── train   [class folders and their images]
└── test    [class folders and their images]
```

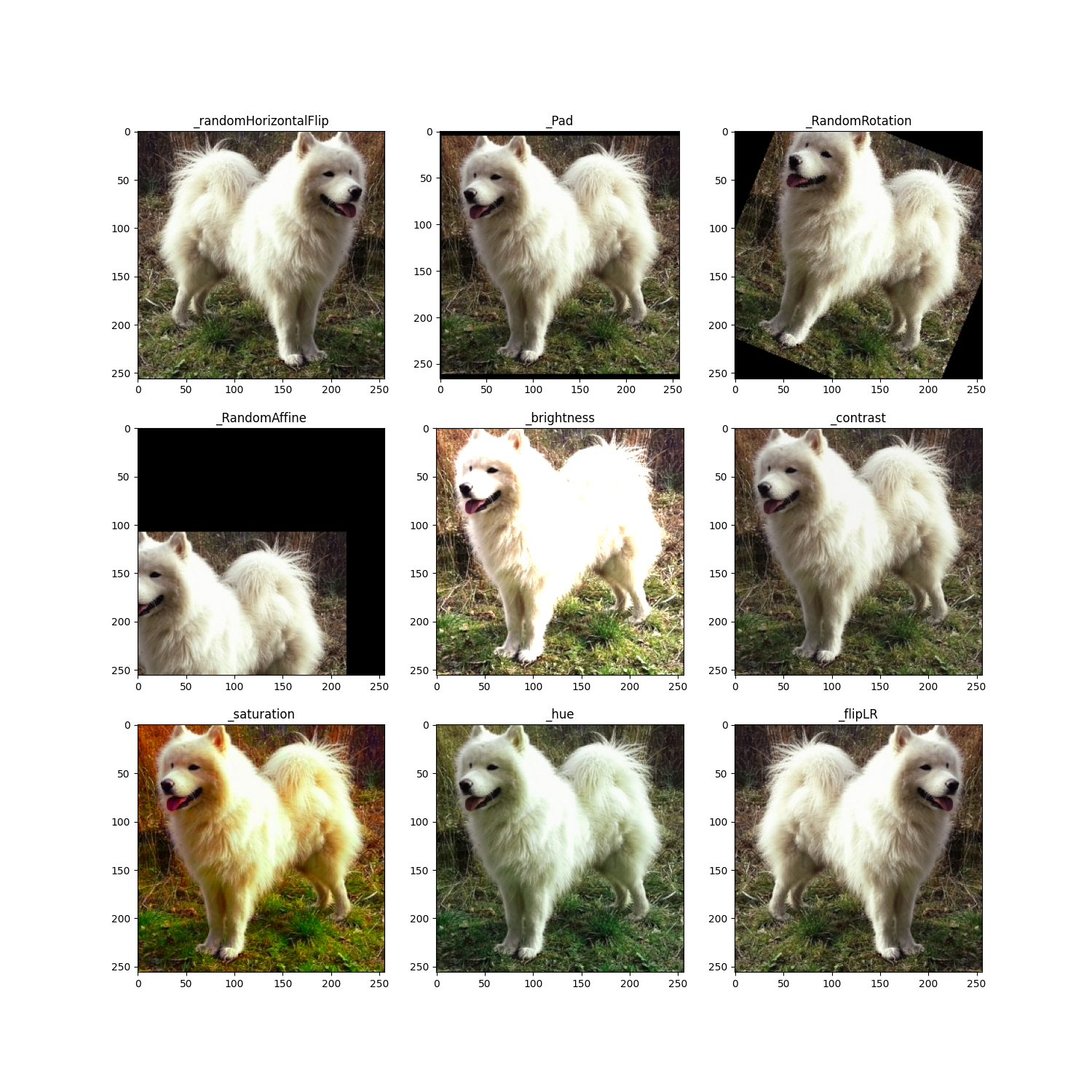
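Before training, it can help to confirm that the folders match this layout and to check how balanced the classes are. A minimal sketch using only the standard library; `count_images` is a hypothetical helper, and the set of image extensions is an assumption, so adjust it to your data.

```python
from pathlib import Path

# Assumed set of image file extensions; adjust to match your dataset.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}

def count_images(dataset_root):
    """Return {split: {class_name: image_count}} for dataset/<split>/<class>/ folders."""
    counts = {}
    for split in ("train", "test"):
        split_dir = Path(dataset_root) / split
        if not split_dir.is_dir():
            raise FileNotFoundError(f"missing split folder: {split_dir}")
        counts[split] = {
            class_dir.name: sum(
                1 for f in class_dir.iterdir()
                if f.is_file() and f.suffix.lower() in IMAGE_EXTENSIONS
            )
            for class_dir in sorted(split_dir.iterdir())
            if class_dir.is_dir()
        }
    return counts

# Example: print(count_images("/dataset"))
```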

Run the data_augmentation.py script:

```bash
python data_augmentation.py
```

The augmentations used are:

1. randomHorizontalFlip

2. Padding

3. RandomRotation

4. RandomAffine

5. brightness

6. contrast

7. saturation

8. hue

9. shift_operation

10. random_noise

11. blurred_gaussian

12. flipLR

13. flipUD
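Several of these names appear to correspond to standard torchvision transforms (for example `RandomHorizontalFlip`, `Pad`, `RandomRotation`, `RandomAffine`, the `brightness`/`contrast`/`saturation`/`hue` arguments of `ColorJitter`, and `GaussianBlur`), but the README does not show how data_augmentation.py implements them. The sketch below is an independent, plain-Python illustration of a few of the simpler operations (flipLR, flipUD, shift, brightness, random noise) on a grayscale image stored as a list of rows with values 0-255; the function names are hypothetical.

```python
import random

def flip_lr(img):
    """Mirror each row (left-right flip)."""
    return [row[::-1] for row in img]

def flip_ud(img):
    """Reverse the row order (up-down flip)."""
    return img[::-1]

def shift(img, dx, dy, fill=0):
    """Translate by dx columns and dy rows; vacated pixels get `fill`."""
    h, w = len(img), len(img[0])
    out = [[fill] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            src_r, src_c = r - dy, c - dx
            if 0 <= src_r < h and 0 <= src_c < w:
                out[r][c] = img[src_r][src_c]
    return out

def adjust_brightness(img, factor):
    """Scale intensities by `factor`, clipping to the 0-255 range."""
    return [[min(255, max(0, round(p * factor))) for p in row] for row in img]

def add_random_noise(img, sigma, rng=random):
    """Add Gaussian noise with standard deviation `sigma`, clipped to 0-255."""
    return [[min(255, max(0, round(p + rng.gauss(0, sigma)))) for p in row] for row in img]

img = [[0, 50], [100, 150]]
print(flip_lr(img))  # [[50, 0], [150, 100]]
```

Passing a seeded `random.Random` instance as `rng` makes the noise reproducible, which is useful when comparing augmented datasets.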

Check config.py for the dataset path and the variables listed below.

```python
# Make sure DATASET_PATH points to your dataset directory
DATASET_PATH = "/dataset"
```

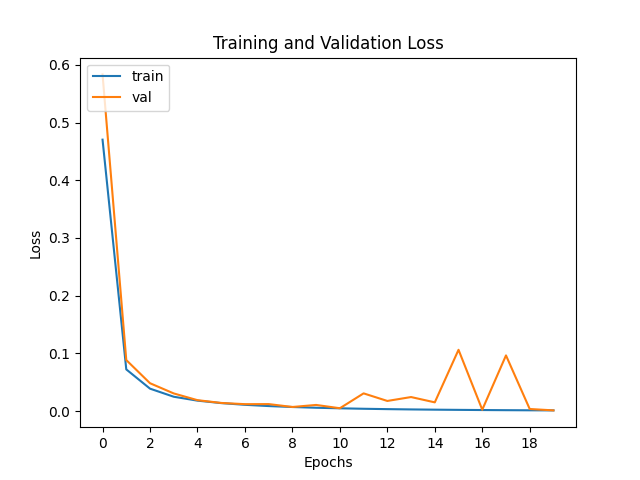

```python
EPOCHS = 20  # number of training epochs
```
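A small check at startup can catch a wrong `DATASET_PATH` before a long training run. A minimal sketch, assuming config.py defines `DATASET_PATH` and `EPOCHS` as shown above and that the dataset uses the train/test layout described earlier; `validate_config` is a hypothetical helper, not part of this repository.

```python
from pathlib import Path

# Values mirror the README; only these two variables are documented.
DATASET_PATH = "/dataset"
EPOCHS = 20

def validate_config(dataset_path=DATASET_PATH, epochs=EPOCHS):
    """Fail early if the dataset layout or the epoch count is wrong."""
    root = Path(dataset_path)
    missing = [split for split in ("train", "test") if not (root / split).is_dir()]
    if missing:
        raise FileNotFoundError(f"{root} is missing split folder(s): {', '.join(missing)}")
    if not isinstance(epochs, int) or epochs < 1:
        raise ValueError(f"EPOCHS must be a positive integer, got {epochs!r}")
    return root
```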

Run training:

```bash
python train_vit.py
```

The training metric plots are saved to the log directory.

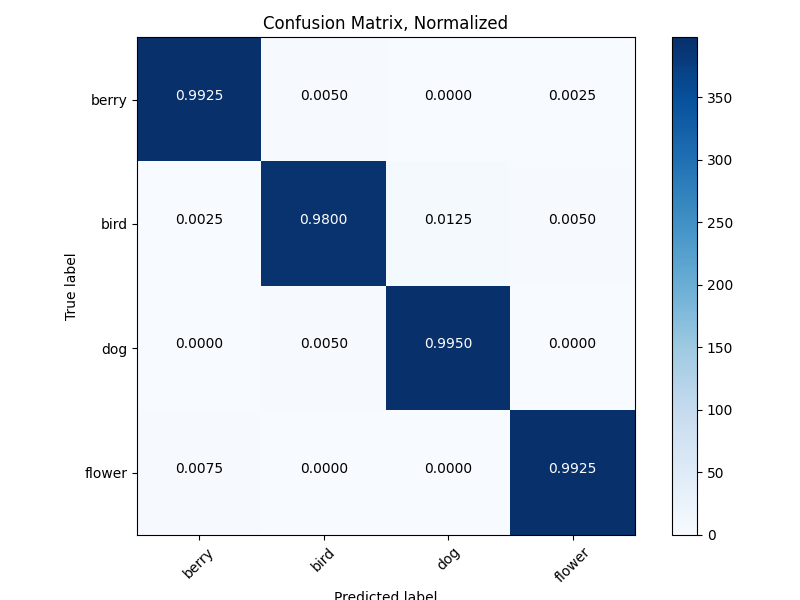
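The README does not specify the format in which train_vit.py writes its logs. As an illustration only, per-epoch metrics could be appended to a CSV file in the log directory and later loaded for plotting (for example with matplotlib); the file name `metrics.csv`, the column names, and both helpers are hypothetical.

```python
import csv
from pathlib import Path

FIELDS = ["epoch", "train_loss", "val_loss", "val_acc"]

def log_epoch_metrics(log_dir, epoch, train_loss, val_loss, val_acc):
    """Append one row to <log_dir>/metrics.csv, writing the header on first use."""
    path = Path(log_dir) / "metrics.csv"
    path.parent.mkdir(parents=True, exist_ok=True)
    is_new = not path.exists()
    with path.open("a", newline="") as f:
        writer = csv.writer(f)
        if is_new:
            writer.writerow(FIELDS)
        writer.writerow([epoch, train_loss, val_loss, val_acc])
    return path

def read_metrics(log_dir):
    """Load metrics.csv back as a list of dicts with float values, ready to plot."""
    with (Path(log_dir) / "metrics.csv").open(newline="") as f:
        return [{k: float(v) for k, v in row.items()} for row in csv.DictReader(f)]
```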

To evaluate the model, run the vit_eval.py script:

```bash
python vit_eval.py
```

Evaluation results for the trained model:

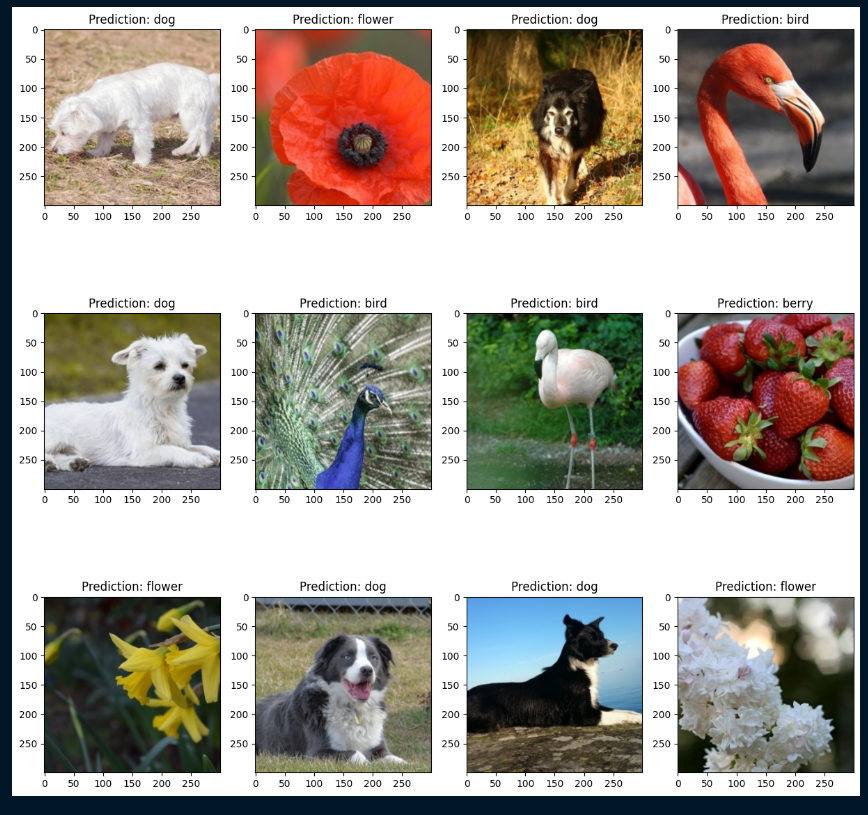
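The README does not list which metrics vit_eval.py reports. A common way to summarize a multi-class evaluation is a confusion matrix with overall accuracy and per-class precision and recall; the sketch below computes these from integer labels in plain Python. It is a generic illustration, and the function names are hypothetical.

```python
def confusion_matrix(y_true, y_pred, num_classes):
    """cm[i][j] counts samples whose true class is i and predicted class is j."""
    cm = [[0] * num_classes for _ in range(num_classes)]
    for t, p in zip(y_true, y_pred):
        cm[t][p] += 1
    return cm

def summarize(cm, class_names):
    """Overall accuracy plus per-class precision and recall from a confusion matrix."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    correct = sum(cm[i][i] for i in range(n))
    per_class = {}
    for i, name in enumerate(class_names):
        predicted_i = sum(cm[r][i] for r in range(n))  # column sum: predicted as i
        actual_i = sum(cm[i])                          # row sum: truly class i
        per_class[name] = {
            "precision": cm[i][i] / predicted_i if predicted_i else 0.0,
            "recall": cm[i][i] / actual_i if actual_i else 0.0,
        }
    accuracy = correct / total if total else 0.0
    return accuracy, per_class
```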

Inference Grid.
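How the inference grid is produced is not shown here. As a hedged sketch, each image's raw logits (for example the `logits` field of a Hugging Face `ViTForImageClassification` output) can be turned into a label and a softmax confidence, and the resulting captions arranged row by row for a grid of images. The label order and helper names are assumptions.

```python
import math

# Assumed label order (alphabetical class folders).
ID2LABEL = {0: "berry", 1: "bird", 2: "dog", 3: "flower"}

def predict(logits, id2label=ID2LABEL):
    """Convert one image's raw logits into (label, confidence) via softmax."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    best = max(range(len(logits)), key=lambda i: logits[i])
    return id2label[best], exps[best] / total

def to_grid(captions, cols):
    """Arrange per-image captions row by row, e.g. as titles for a plot grid."""
    return [captions[i:i + cols] for i in range(0, len(captions), cols)]

label, conf = predict([1.0, 5.0, 0.5, -2.0])
print(f"{label} ({conf:.2f})")
```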