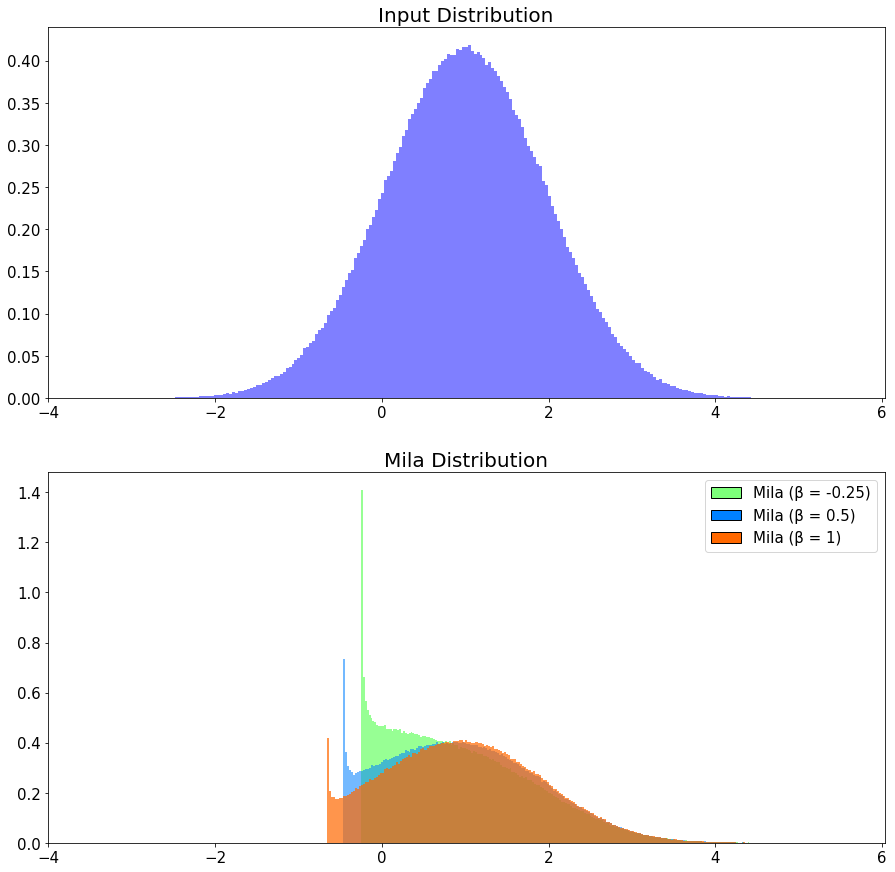

Mila is a uniparametric activation function inspired by the Mish activation function. The parameter β controls the concavity of the function's global minimum; β = 0 recovers the baseline Mish activation function. Making β more negative reduces the concavity, and making it more positive increases it. β is introduced to tackle gradient death scenarios caused by the sharp global minimum of Mish.

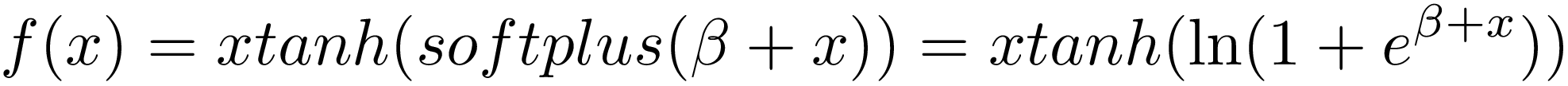

Mila is defined as follows:

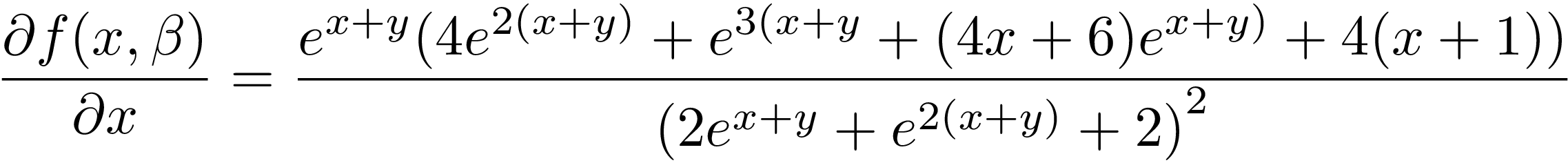

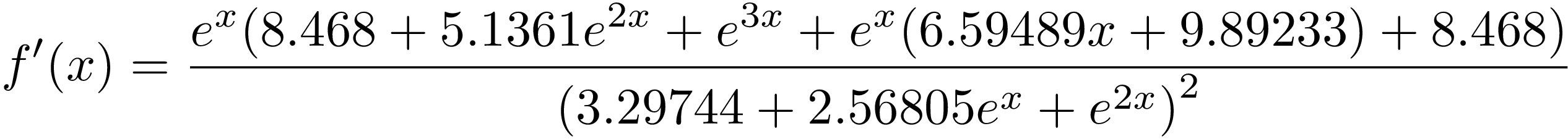
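For readers who cannot view the figures, the definition can also be sketched in code. This is a minimal pure-Python sketch, assuming the form Mila(x) = x · tanh(softplus(β + x)), which reduces to Mish at β = 0 as described above. The helper names `softplus`, `mila` and `mish` are illustrative and are not taken from this repository.

```python
import math


def softplus(z: float) -> float:
    """Numerically stable ln(1 + e^z)."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def mila(x: float, beta: float = -0.25) -> float:
    """Assumed form: Mila(x) = x * tanh(softplus(beta + x))."""
    return x * math.tanh(softplus(beta + x))


def mish(x: float) -> float:
    """Mish(x) = x * tanh(softplus(x)), i.e. Mila with beta = 0."""
    return x * math.tanh(softplus(x))


if __name__ == "__main__":
    # Print a few sample values for the default beta and for the Mish baseline.
    for x in (-2.0, -1.0, 0.0, 1.0, 2.0):
        print(f"x={x:+.1f}  mila={mila(x):+.6f}  mish={mish(x):+.6f}")
```

For large positive inputs the tanh factor saturates at 1, so the function behaves like the identity; at x = 0 the output is exactly 0 for any β.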

Its partial derivatives:

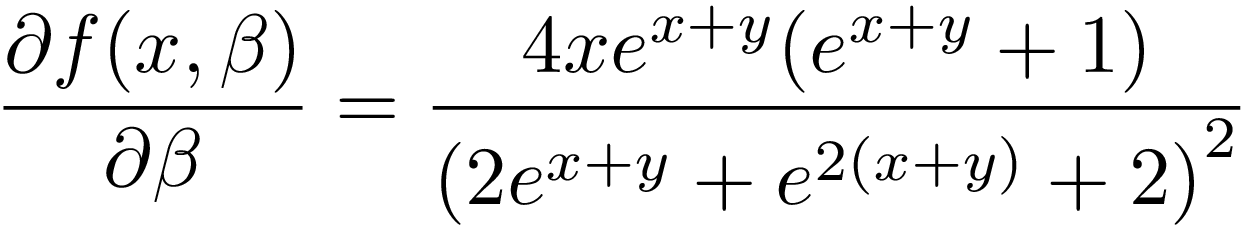
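The partial derivatives can be checked numerically. The sketch below derives them with the chain rule from the assumed form Mila(x) = x · tanh(softplus(β + x)) rather than transcribing the figure, and compares them against central finite differences. All function names here are illustrative.

```python
import math


def softplus(z: float) -> float:
    """Numerically stable ln(1 + e^z)."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def sigmoid(z: float) -> float:
    """Numerically stable logistic function, the derivative of softplus."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def mila(x: float, beta: float = -0.25) -> float:
    """Assumed form: Mila(x) = x * tanh(softplus(beta + x))."""
    return x * math.tanh(softplus(beta + x))


def mila_grads(x: float, beta: float = -0.25) -> tuple:
    """Return (dMila/dx, dMila/dbeta) obtained from the chain rule."""
    t = math.tanh(softplus(beta + x))
    # d/dz tanh(softplus(z)) = sech^2(softplus(z)) * sigmoid(z)
    inner = (1.0 - t * t) * sigmoid(beta + x)
    return t + x * inner, x * inner


def central_diff(f, v: float, h: float = 1e-6) -> float:
    """Central finite-difference approximation of f'(v)."""
    return (f(v + h) - f(v - h)) / (2.0 * h)


x, beta = 0.7, -0.25
dx, dbeta = mila_grads(x, beta)
num_dx = central_diff(lambda v: mila(v, beta), x)
num_dbeta = central_diff(lambda b: mila(x, b), beta)
print(f"analytic dx={dx:.8f}  numeric dx={num_dx:.8f}")
print(f"analytic dbeta={dbeta:.8f}  numeric dbeta={num_dbeta:.8f}")
```

Because β only enters through the argument β + x, the derivative with respect to β is the second term of the derivative with respect to x.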

1st derivative of Mila when β=-0.25:

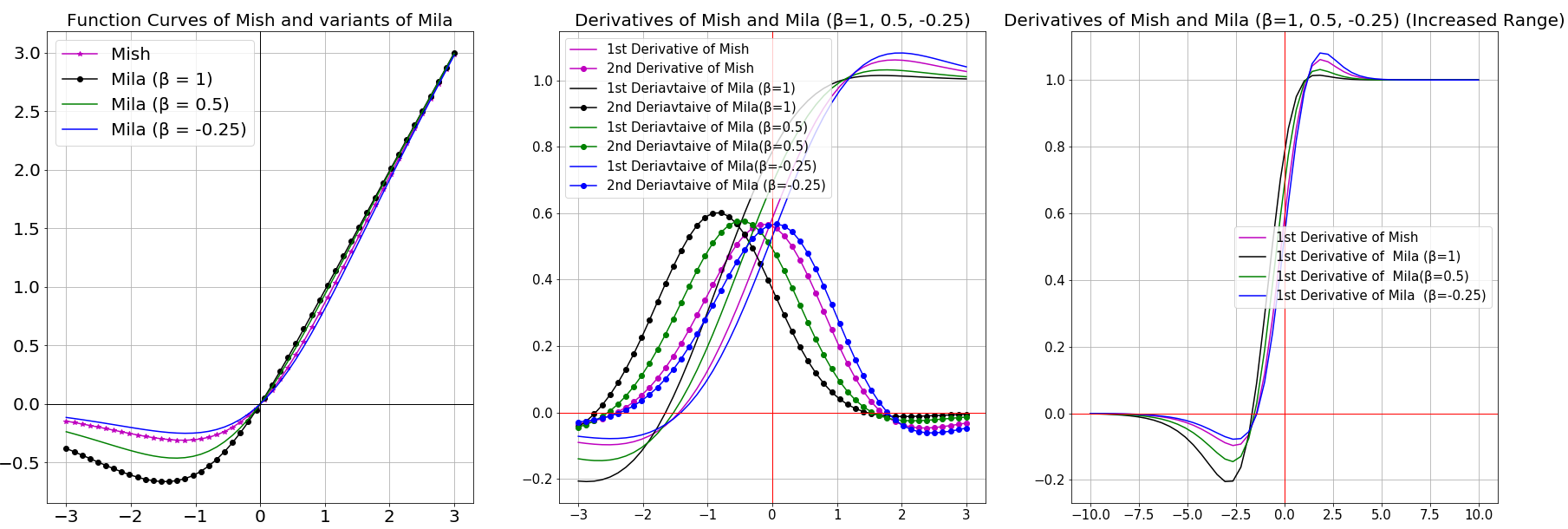

Graphs of the function and its derivatives for various values of β:

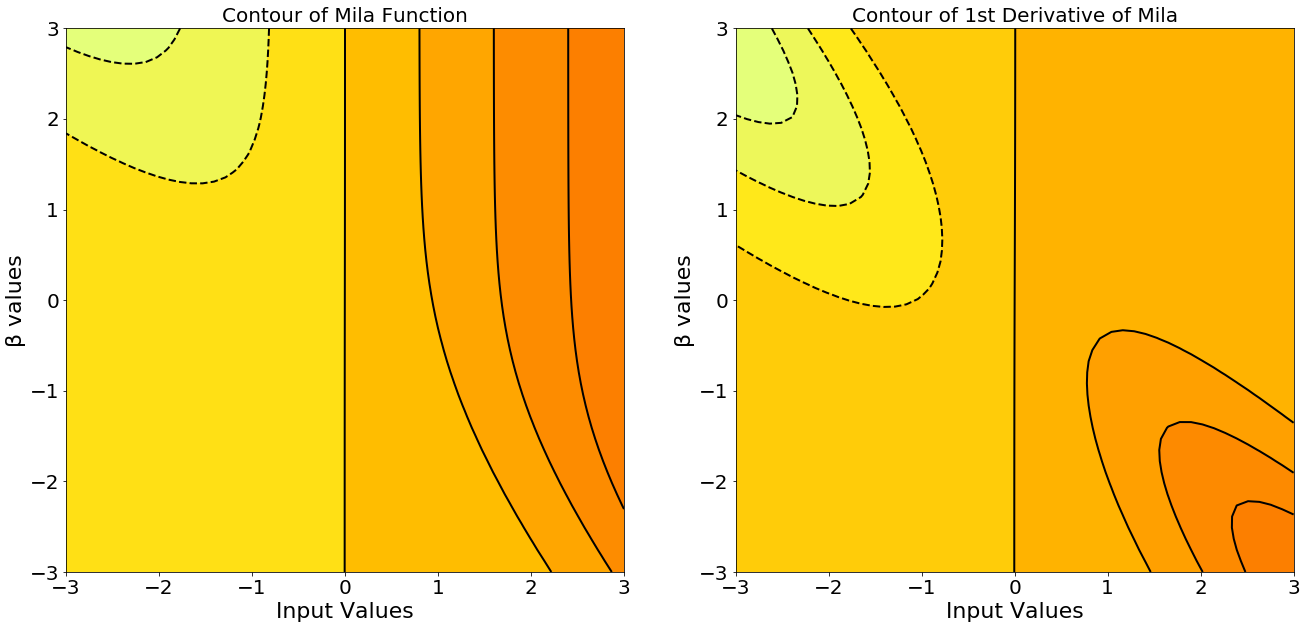
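To explore how β changes the shape near the global minimum without the plots, the location and depth of the minimum can be tabulated with a simple grid search. This is a rough sketch under the assumed form Mila(x) = x · tanh(softplus(β + x)). The search interval, the step count and the helper `global_minimum` are arbitrary illustrative choices, and the depth of the minimum is only a proxy for its concavity.

```python
import math


def softplus(z: float) -> float:
    """Numerically stable ln(1 + e^z)."""
    return max(z, 0.0) + math.log1p(math.exp(-abs(z)))


def mila(x: float, beta: float) -> float:
    """Assumed form: Mila(x) = x * tanh(softplus(beta + x))."""
    return x * math.tanh(softplus(beta + x))


def global_minimum(beta: float, lo: float = -10.0, hi: float = 2.0,
                   steps: int = 12001) -> tuple:
    """Grid-search the minimum of Mila on [lo, hi]; returns (x_min, value)."""
    best_x, best_y = lo, mila(lo, beta)
    for i in range(1, steps):
        x = lo + (hi - lo) * i / (steps - 1)
        y = mila(x, beta)
        if y < best_y:
            best_x, best_y = x, y
    return best_x, best_y


# Compare a few beta values; beta = 0.0 corresponds to Mish.
minima = {beta: global_minimum(beta) for beta in (-0.5, -0.25, 0.0, 0.25)}
for beta, (x_min, y_min) in minima.items():
    print(f"beta={beta:+.2f}  argmin={x_min:+.3f}  min={y_min:+.5f}")
```

Under this form, lowering β shrinks the tanh factor at every negative input, so the minimum becomes shallower as β decreases.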

Contour plot of Mila and its 1st derivative:

Early Analysis of Mila Activation Function:

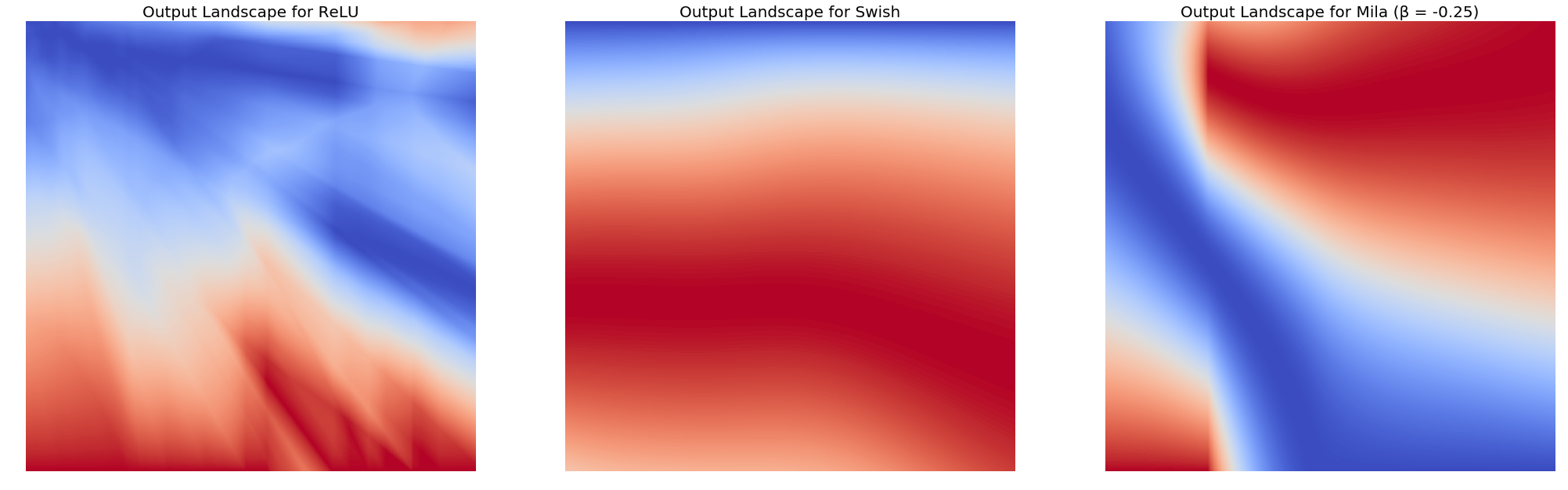

The output landscape of a 5-layer randomly initialized neural network was compared for ReLU, Swish and Mila (β = -0.25). The ReLU landscape shows sharp transitions between the scalar magnitudes of neighbouring co-ordinates, whereas Swish and Mila (β = -0.25) transition more smoothly. A smoother output landscape results in a smoother loss function, which is easier to optimize and tends to help the network generalize better.

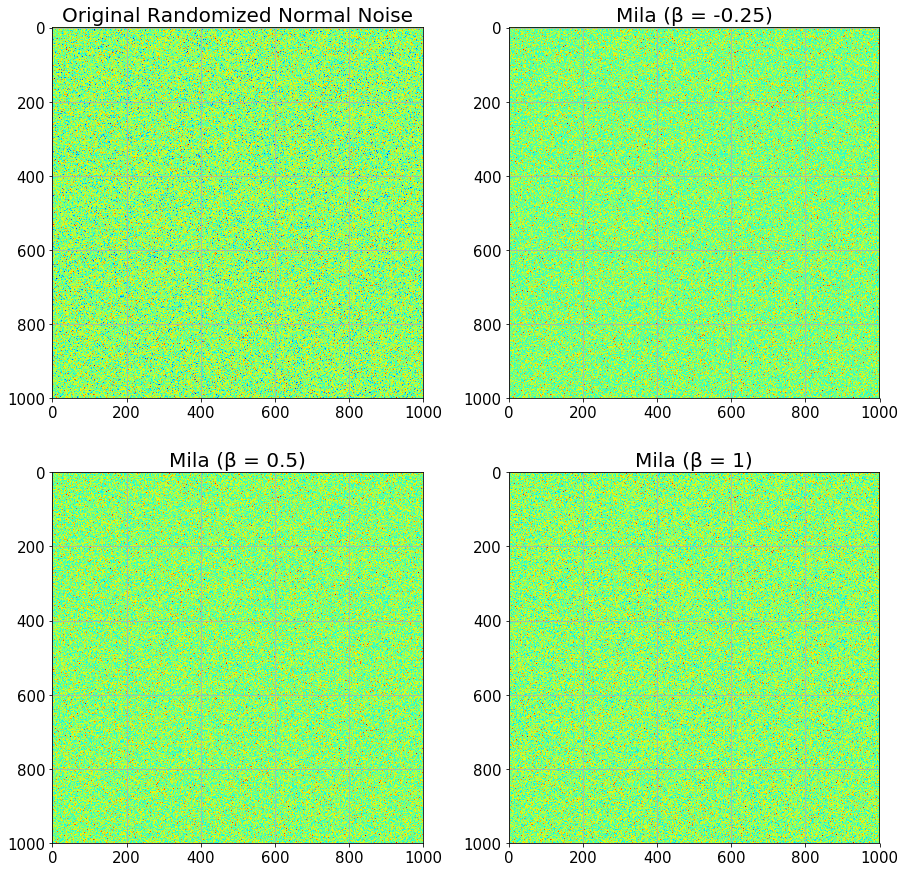

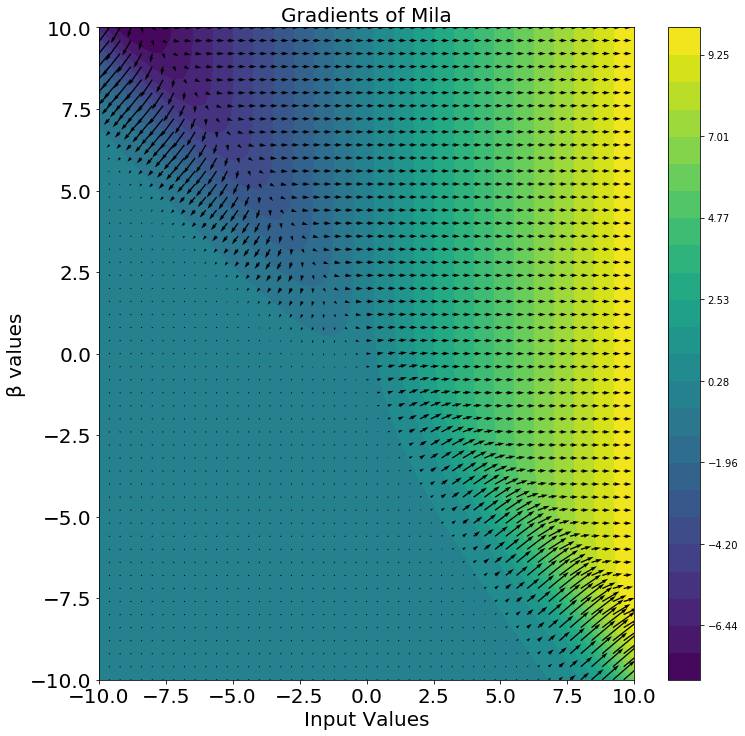

The gradients of Mila's scalar function representation were also visualized:

See Results.md for the benchmarks.

Run the demo.py file to try out Mila in a simple network for Fashion MNIST classification.

- First clone the repository, then navigate to its folder using the following command:

cd \path_to_Mila_directory

- Install dependencies

pip install -r requirements.txt

- Run the Python demo script.

python3 demo.py --activation mila --model_initialization class

Note: The demo script initializes Mila with β = -0.25. Change the 'beta' variable in the script to try other values of β.