
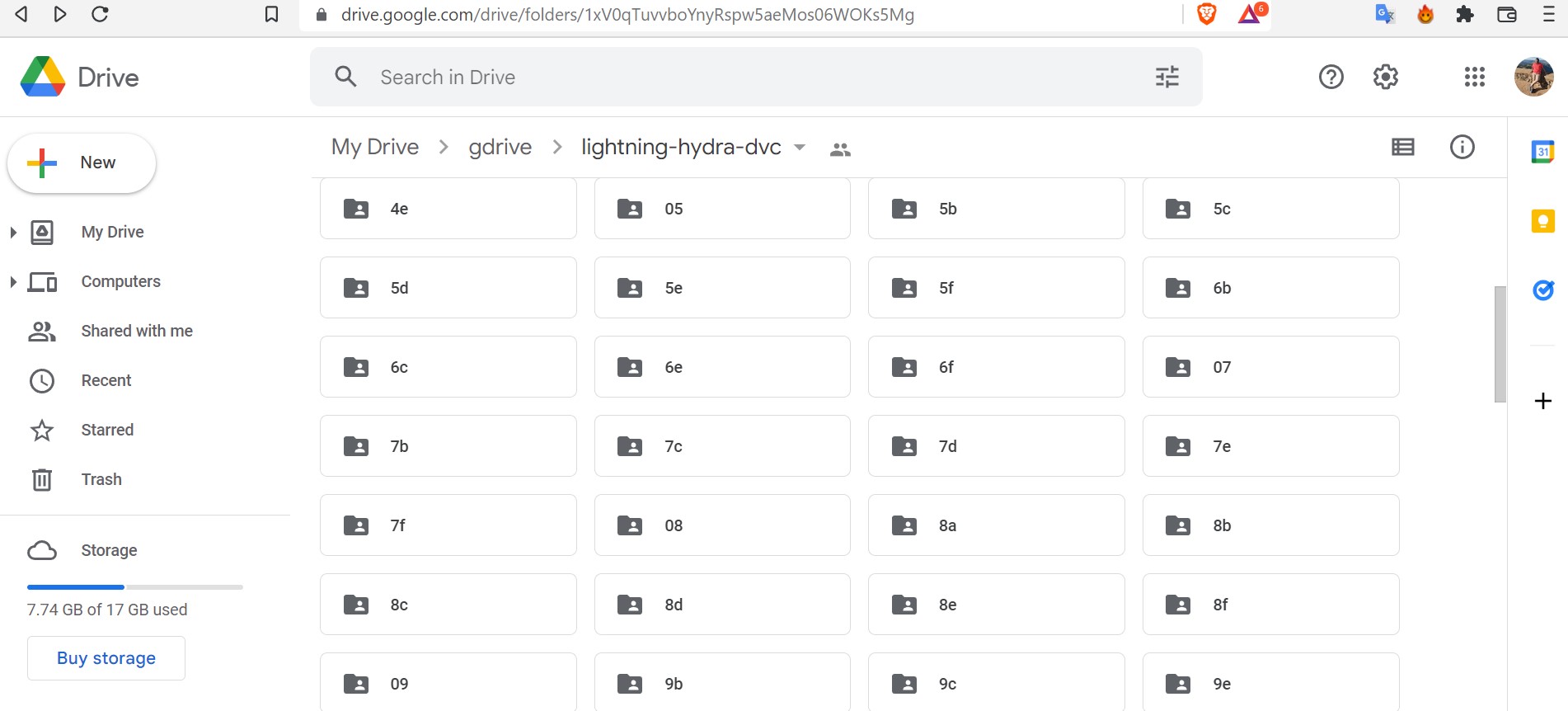

Google Drive link for the DVC remote: https://drive.google.com/drive/folders/1xV0qTuvvboYnyRspw5aeMos06WOKs5Mg?usp=sharing


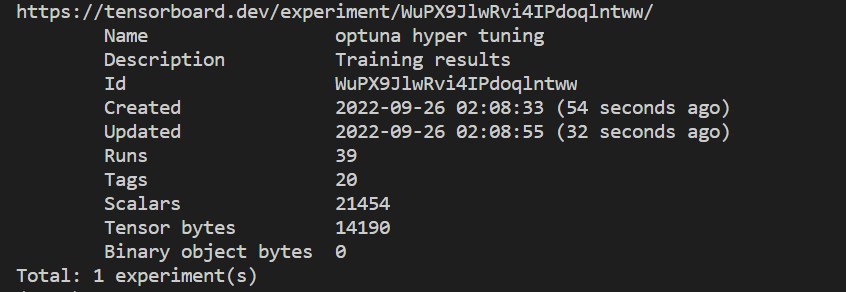

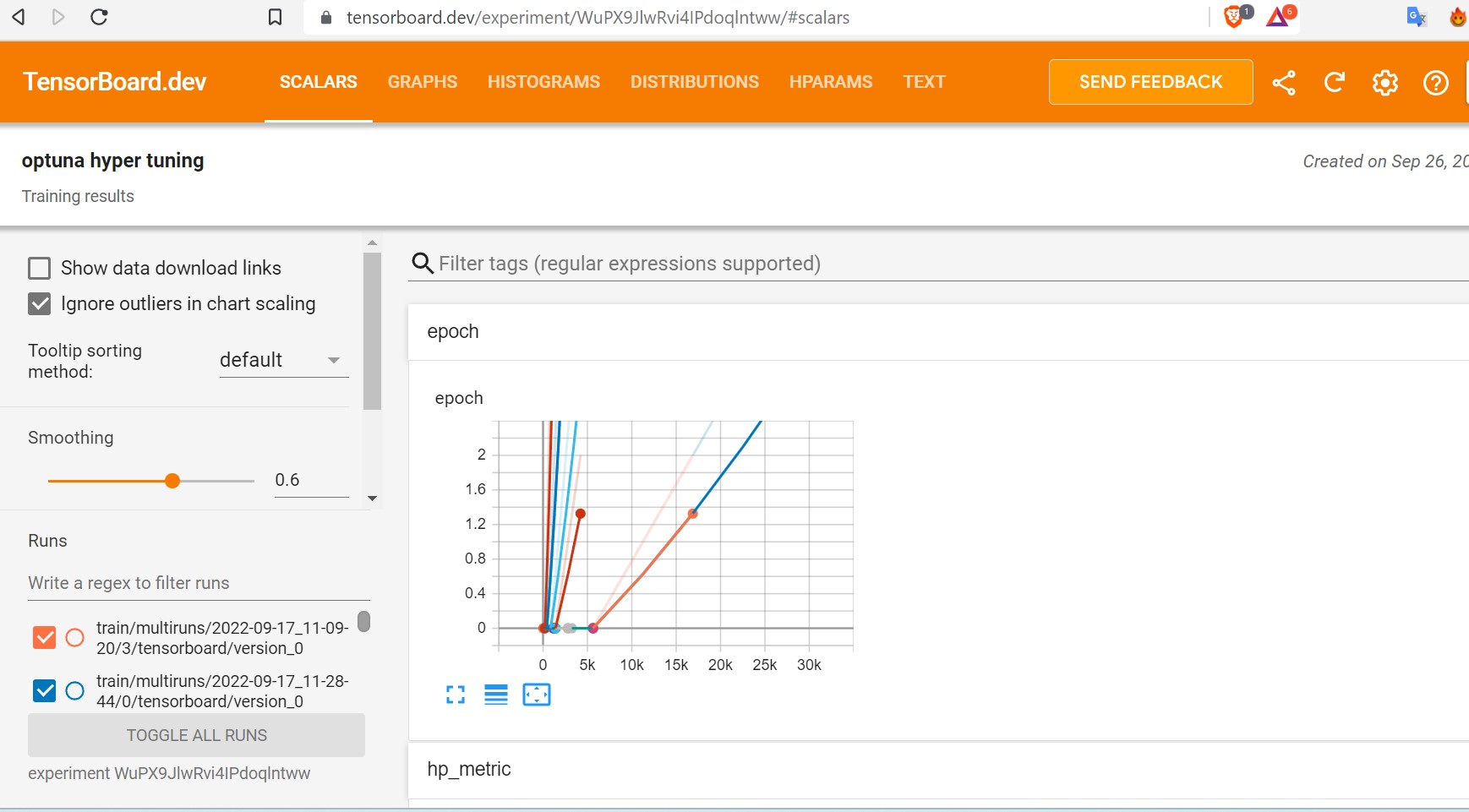

TensorBoard.dev link for the experiment logs: https://tensorboard.dev/experiment/WuPX9JlwRvi4IPdoqlntww/#scalars

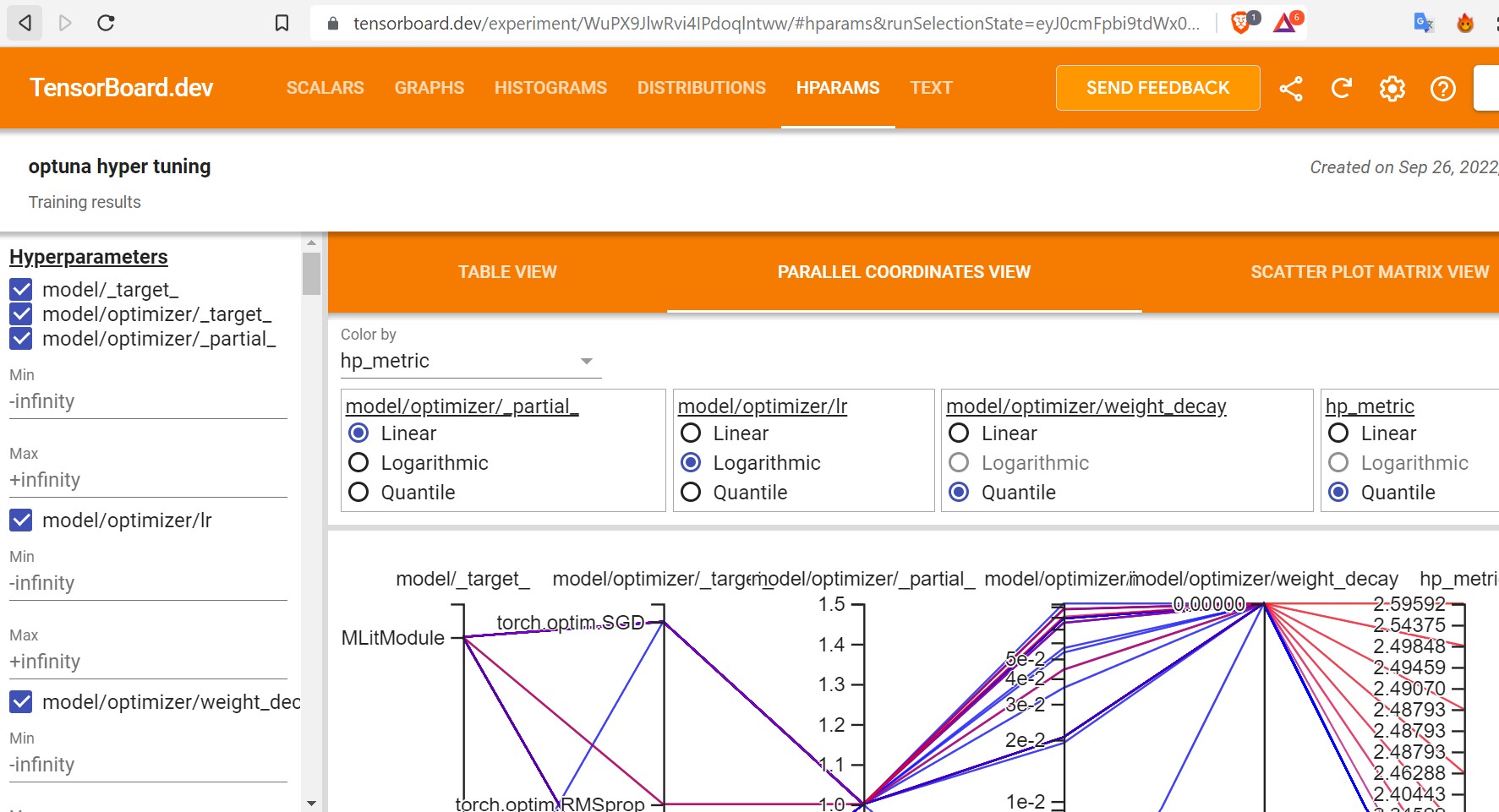

Best hyperparameters found by the Optuna sweep:

```yaml
name: optuna
best_params:
  model.optimizer._target_: torch.optim.Adam
  model.optimizer.lr: 0.080057
  datamodule.batch_size: 8
```
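The sweep reports its winners as dotted keys such as `model.optimizer.lr`. The sketch below is a minimal, standard-library-only illustration, under the assumption that these keys address a nested config tree: `nest` expands the dotted keys into nested dicts, and `instantiate` imports whatever `_target_` names and calls it with the remaining keys as keyword arguments. `nest`, `locate`, and `instantiate` are hypothetical helpers written for illustration; `instantiate` is a simplified stand-in for `hydra.utils.instantiate`, not Hydra's own implementation.

```python
import importlib
from typing import Any, Dict

# best_params as reported by the sweep above (copied verbatim).
BEST_PARAMS: Dict[str, Any] = {
    "model.optimizer._target_": "torch.optim.Adam",
    "model.optimizer.lr": 0.080057,
    "datamodule.batch_size": 8,
}


def nest(flat: Dict[str, Any]) -> Dict[str, Any]:
    """Expand dotted keys into nested dicts: {'a.b': 1} -> {'a': {'b': 1}}."""
    tree: Dict[str, Any] = {}
    for dotted, value in flat.items():
        *parents, leaf = dotted.split(".")
        node = tree
        for key in parents:
            # Create intermediate levels on first use.
            node = node.setdefault(key, {})
        node[leaf] = value
    return tree


def locate(path: str) -> Any:
    """Import 'package.module.Name' and return the attribute Name."""
    module_name, _, attr = path.rpartition(".")
    return getattr(importlib.import_module(module_name), attr)


def instantiate(node: Dict[str, Any], *args: Any) -> Any:
    """Hypothetical, simplified stand-in for hydra.utils.instantiate:
    call the object named by '_target_' with the other keys as kwargs."""
    kwargs = {key: value for key, value in node.items() if key != "_target_"}
    return locate(node["_target_"])(*args, **kwargs)
```

With PyTorch installed and `cfg = nest(BEST_PARAMS)`, calling `instantiate(cfg["model"]["optimizer"], model.parameters())` would build `torch.optim.Adam(model.parameters(), lr=0.080057)`, and `cfg["datamodule"]["batch_size"]` yields the batch size of 8.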

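Track the data and logs with DVC, then push them to the `gdrive` remote:

```bash
# Track the data/ and logs/ directories with DVC (creates data.dvc and logs.dvc)
dvc add data
dvc add logs

# Upload the tracked files to the remote named "gdrive"
dvc push -r gdrive
```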

Pushed data and logs (Google Drive remote): https://drive.google.com/drive/folders/1xV0qTuvvboYnyRspw5aeMos06WOKs5Mg?usp=sharing

- Set up hyperparameter search with experiment tracking

- Find the best batch size, learning rate, and optimizer

- The optimizer must be one of Adam, SGD, or RMSprop
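The requirements above define a search space over three parameters. The following is a minimal sketch of that space using plain random search, standing in for Optuna's sampler (Optuna uses TPE by default, which is smarter than random sampling). The learning-rate bounds and batch-size choices are assumptions for illustration, not the ranges used in the experiment, and `toy_objective` is a hypothetical stand-in for a real training run's validation metric (higher is better). Note that PyTorch spells the class `torch.optim.RMSprop`.

```python
import math
import random
from typing import Any, Callable, Dict, Optional, Tuple

# Optimizer choices required by the task, as torch.optim class paths.
OPTIMIZERS = ("torch.optim.Adam", "torch.optim.SGD", "torch.optim.RMSprop")
# Assumed ranges, for illustration only.
BATCH_SIZES = (8, 16, 32, 64, 128)
LR_LOW, LR_HIGH = 1e-4, 1e-1

Params = Dict[str, Any]


def sample_params(rng: random.Random) -> Params:
    """Draw one trial's parameters, keyed like the sweep's best_params."""
    return {
        "model.optimizer._target_": rng.choice(OPTIMIZERS),
        # Log-uniform sampling, so each decade of lr is equally likely.
        "model.optimizer.lr": math.exp(rng.uniform(math.log(LR_LOW), math.log(LR_HIGH))),
        "datamodule.batch_size": rng.choice(BATCH_SIZES),
    }


def random_search(
    objective: Callable[[Params], float], n_trials: int, seed: int = 0
) -> Tuple[Optional[Params], float]:
    """Evaluate n_trials random configurations and keep the highest score."""
    rng = random.Random(seed)
    best_params: Optional[Params] = None
    best_value = -math.inf
    for _ in range(n_trials):
        params = sample_params(rng)
        value = objective(params)
        if value > best_value:
            best_params, best_value = params, value
    return best_params, best_value


def toy_objective(params: Params) -> float:
    """Hypothetical stand-in for validation accuracy; not a real training run."""
    # Penalty is zero at lr = 1e-2 and grows with distance in log10 space.
    lr_penalty = abs(math.log10(params["model.optimizer.lr"]) + 2)
    bonus = 0.1 if params["model.optimizer._target_"] == "torch.optim.Adam" else 0.0
    return bonus - lr_penalty - params["datamodule.batch_size"] / 1000
```

For example, `best, score = random_search(toy_objective, n_trials=50)` returns the best configuration found and its score. In a real sweep the objective would train the model with the sampled parameters and return the logged validation metric.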