This project is an NLP project conducted by IIPL. This project, which intends to apply latent-variables to various fields of NLP, aims to improve the performance of various NLP tasks such as low-resource machine translation, text style transfer, and dataset shift.

This code is written in Python. Dependencies include

- Python == 3.6

- PyTorch == 1.8

- Transformers (Huggingface) == 4.8.1

- NLG-Eval == 2.3.0 (https://github.com/Maluuba/nlg-eval)

- WMT 2014 translation task DE -> EN (--task=translation --data_name=WMT2014_de_en)

- WMT 2016 multimodal DE -> EN (--task=translation --data_name=WMT2016_Multimodal)

- Korpora EN -> KR (--task=translation --data_name=korpora)

- Grammarly's Yahho Answer Formality Corpus Informal -> Formal (--task=style_transfer --data_name=GYAFC)

- Wiki Neutrality Corpus Biased -> Neutral (--task=style_transfer --data_name=WNC)

- CNN & Daily Mail News Summarization (--task=summarization --data_name=cnn_dailymail)

- IMDB Sentiment Analysis (--task=classification --data_name=IMDB)

- NSMC Sentiment Analysis (Coming soon...)

- Korean Hate Speech Toxic Classification (Coming soon...)

Before training the model, it needs to go through a preprocessing step. Preprocessing is performed through the '--preprocessing' option and the pickle file of the set data is saved in the preprocessing path (--preprocessing_path).

python main.py --preprocessing

Available options are

- tokenizer (--tokenizer; If you choose Pre-trained Langauge Model's tokenizer, Pre-trained version will load.)

- SentencePiece model type (--sentencepiece_model; If tokenizer is spm)

- source text vocabulary size (--src_vocab_size)

- target text vocabulary size (--trg_vocab_size)

- padding token id (--pad_id)

- unknown token id (--unk_id)

- start token id (--bos_id)

- end token id (--eos_id)

python main.py --preprocessing --tokenizer=spm --sentencepiece_model=unigram \

--src_vocab_size=8000 --trg_vocab_size=8000 \

--pad_id=0 --unk_id=3 --bos_id=1 --eos_id=2

If you want to use pre-trained tokenizer, you can use it by entering its name in the tokenizer option. In this case, options such as vocabulary size and ID are ignored because of using a pre-trained tokenizer. Currently available pre-trained tokenizers are 'Bart', 'Bert', and 'T5'.

python main.py --preprocessing --tokenizer=bart

To train the model, add the training (--training) option. Currently, only the Transformer model is available, but RNN and Pre-trained Language Model will be added in the future.

python main.py --training

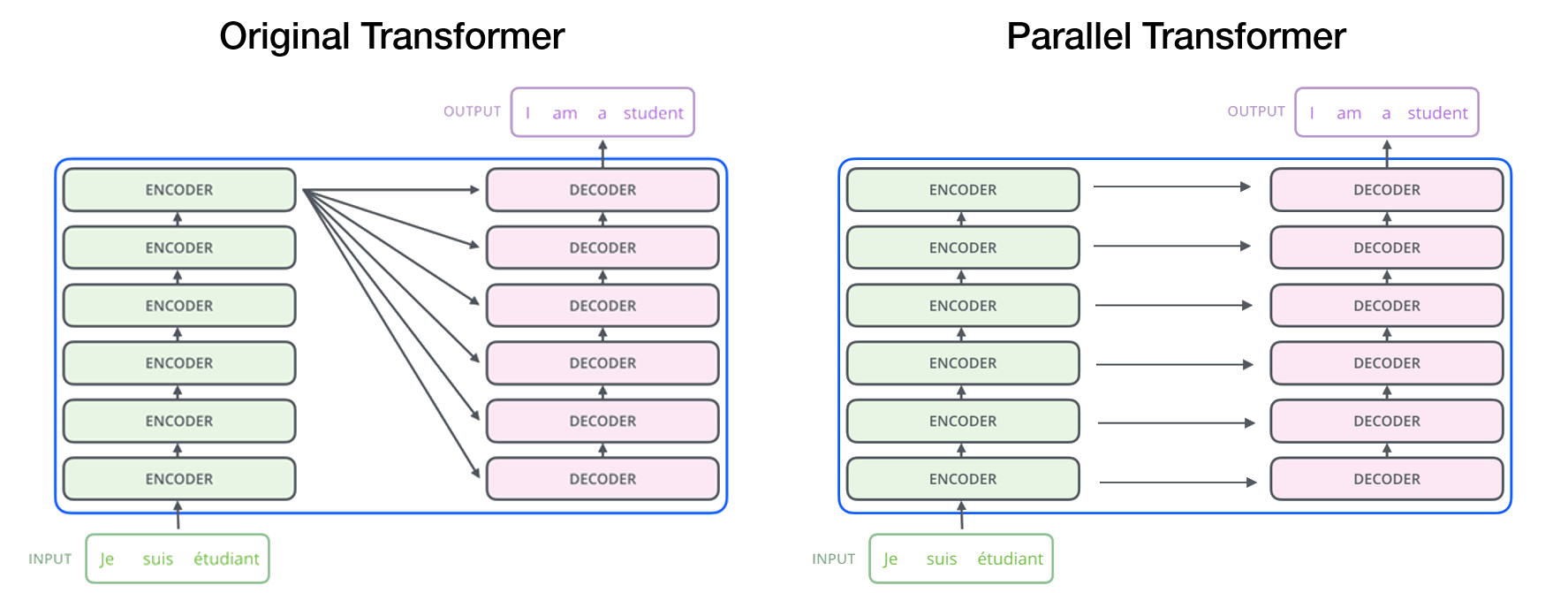

Implementation of the Transformer model in "Attention is All You Need" (Ashish Vaswani, Noam Shazeer, Niki Parmar, Jakob Uszkoreit, Llion Jones, Aidan N. Gomez, Lukasz Kaiser and Illia Polosukhin, NIPS 2017).

Available options are

- model dimension (--d_model)

- embedding dimension (--d_embedding)

- multi-head attention's head count (--n_head)

- feed-forward layer dimension (--dim_feedforward)

- dropout ratio (--dropout)

- embedding dropout ratio (--embedding_dropout)

- number of encoder layers (--num_encoder_layer)

- number of decoder layers (--num_decoder_layer)

- weight sharing between decoder embedding and decoder linear layer (--trg_emb_prj_weight_sharing)

- weight sharing between encoder embedding and decoder embedding (--emb_src_trg_weight_sharing)

python main.py --training --d_model=768 --d_embedding=256 --n_head=16 \

--dim_feedforward=2048 --dropout=0.3 --embedding_dropout=0.1 --num_encoder_layer=8 \

--num_decoder_layer=8 --trg_emb_prj_weight_sharing=False --emb_src_trg_weight_sharing=True

python main.py --training --parallel=True

Implementation of the Bart model in "BART: Denoising Sequence-to-Sequence Pre-training for Natural Language Generation, Translation, and Comprehension" (Mike Lewis, Yinhan Liu, Naman Goyal, Marjan Ghazvininejad, Abdelrahman Mohamed, Omer Levy, Ves Stoyanov and Luke Zettlemoyer, ACL 2020).

python main.py --training --model_type=bart

Available options are

- Beam size (--beam_size)

- Length normalization (--beam_alpha)

- Penelize word that already generated (--repetition_penalty)

python main.py --testing --test_batch_size=48 --beam_size=5 --beam_alpha=0.7 --repetition_penalty=0.7

- Kyohoon Jin - Project Manager - [Link]

- Juhwan Choi - Enginner - [Link]

- Junho Lee - Enginner - [Link]

See also the list of contributors who participated in this project.

If you have any questions on our survey, please contact me via the following e-mail address: fhzh@naver.com or fhzh123@cau.ac.kr