Key Objective - provide optimal chunking for use with large language models (LLMs)

Chunking is the splitting of large texts into smaller pieces with related contents. This could include things like splitting an article into sections, a book into chapters, or a screenplay into scenes.

Example Use Cases

- Retrieval-Augmented Generation (RAG) - RAG utilizes a database of relevant documents to give LLMs the proper context to answer a particular query. Better chunking results in more relevant and specific texts being included in the LLM's context window, resulting in better responses.

- Classification - Chunking can be used to separate texts into similar sections which can then be classified and assigned labels.

- Semantic Search - Better chunking can enhance the accuracy and reliability of semantic search algorithms, which return results based on similarity in semantic meaning instead of keyword matching.

- Review the minimum compute requirements for your desired role

- Read through Bittensor documentation

- Ensure you've gone through the appropriate checklist

This repository requires Python 3.8 or higher. To install, clone this repository and install the requirements.

git clone https://github.com/VectorChat/chunking_subnet

cd chunking_subnet

python -m pip install -r requirements.txt

python -m pip install -e .

Register your wallet to testnet or localnet

Testnet:

btcli subnet register --wallet.name $coldkey --wallet.hotkey $hotkey --subtensor.network test --netuid $uid

Localnet:

btcli subnet register --wallet.name <COLDKEY> --wallet.hotkey <HOTKEY> --subtensor.chain_endpoint ws://127.0.0.1:9946 --netuid 1

Running a validator requires an OpenAI API key. To run the validator:

# on localnet

python3 neurons/validator.py --netuid 1 --subtensor.chain_endpoint ws://127.0.0.1:9946 --wallet.name <COLDKEY> --wallet.hotkey <HOTKEY> --log_level debug --openaikey <OPENAIKEY>

# on testnet

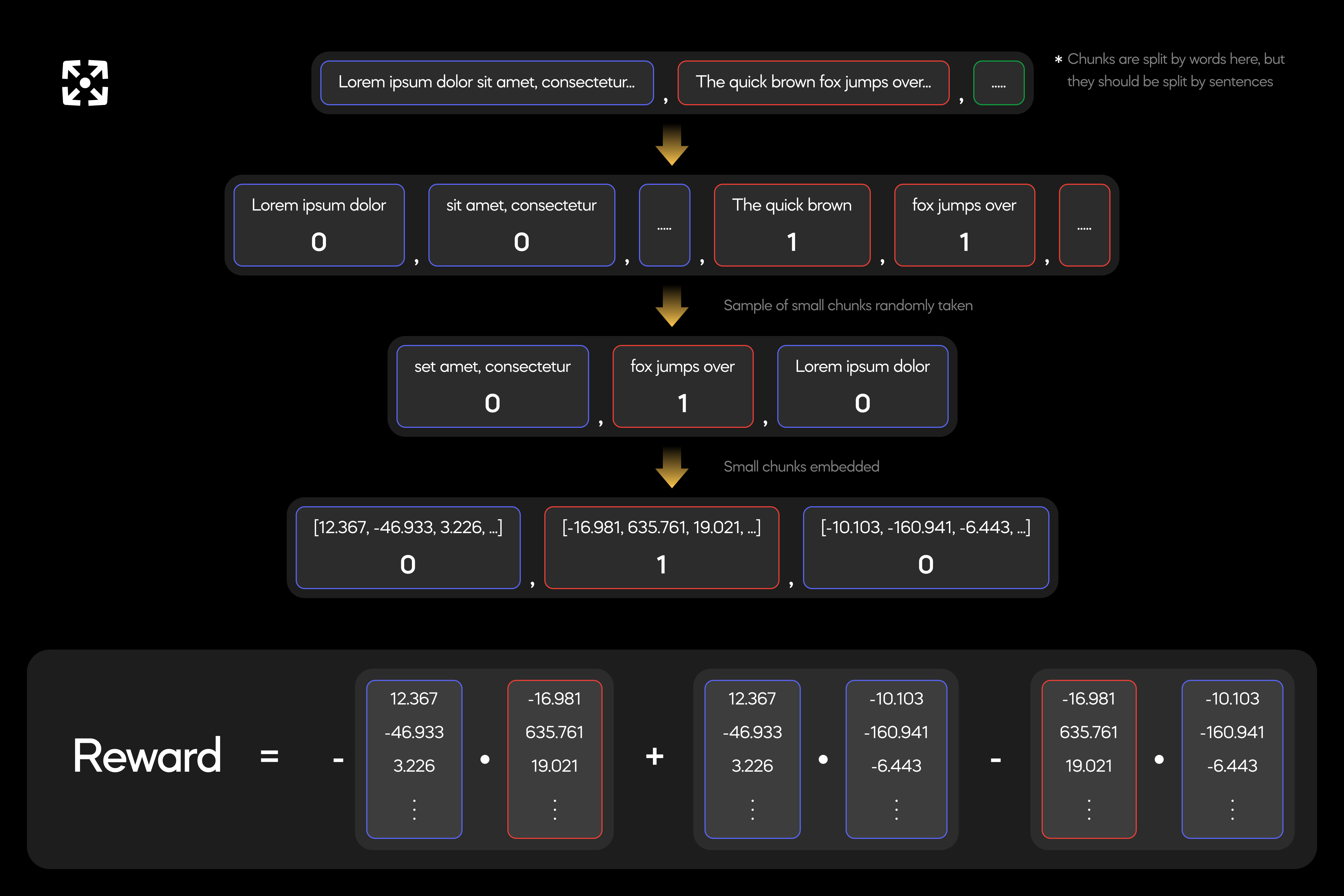

python3 neurons/validator.py --netuid $uid --subtensor.network test --wallet.name <COLDKEY> --wallet.hotkey <HOTKEY> --log_level debug --openaikey <OPENAIKEY>

Something to consider when running a validator is the number of embeddings you're going to generate per miner evaluation. When scoring a miner, a random sample of 3-sentence segments is taken from the response and embedded. The dot product of every possible pair of these embeddings is then computed and added to the final score if the embeddings originated from the same chunk, or subtracted from the final score if they originated from different chunks. A greater sample size will likely result in a more accurate evaluation and higher dividends. This comes at the cost of more API calls to generate the embeddings and more time and resources to compare them against each other.
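The pairwise scoring described above can be sketched roughly as follows. This is a minimal illustration, not the validator's actual code: the function names, the `sample_size` parameter, and the toy two-dimensional vectors are assumptions, and real embeddings would come from the embedding API. Note that a sample of n segments needs n(n-1)/2 comparisons, which is where the extra cost of a larger sample comes from.

```python
import itertools
import random


def dot(u, v):
    """Dot product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def score_sample(embeddings, chunk_ids, sample_size, seed=None):
    """Score a random sample of embedded segments (hypothetical helper).

    embeddings: one vector per 3-sentence segment
    chunk_ids:  index of the chunk each segment was taken from
    """
    rng = random.Random(seed)
    indices = list(range(len(embeddings)))
    sample = rng.sample(indices, min(sample_size, len(indices)))
    score = 0.0
    # Compare every possible pair of sampled segments.
    for i, j in itertools.combinations(sample, 2):
        similarity = dot(embeddings[i], embeddings[j])
        if chunk_ids[i] == chunk_ids[j]:
            score += similarity  # same chunk: similarity is rewarded
        else:
            score -= similarity  # different chunks: similarity is penalized
    return score


# Toy data: two segments from chunk 0 and two from chunk 1.
toy_embeddings = [[1.0, 0.0], [0.9, 0.1], [0.0, 1.0], [0.1, 0.9]]
toy_chunk_ids = [0, 0, 1, 1]
print(score_sample(toy_embeddings, toy_chunk_ids, sample_size=4))
```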

It is highly recommended that you write your own logic for neurons.miner.forward in order to achieve better chunking and receive better rewards. For help on doing this, see Custom Miner.

# on localnet

python3 neurons/miner.py --netuid 1 --subtensor.chain_endpoint ws://127.0.0.1:9946 --wallet.name <COLDKEY> --wallet.hotkey <HOTKEY> --log_level debug

# on testnet

python3 neurons/miner.py --netuid $uid --subtensor.network test --wallet.name <COLDKEY> --wallet.hotkey <HOTKEY> --log_level debug

The default miner simply splits the incoming document into individual sentences and then forms each chunk by concatenating adjacent sentences until the token limit is reached. This is not an optimal strategy and will likely see you deregistered. For help on writing a custom miner, see Custom Miner.
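For orientation, the greedy strategy described above might look something like the sketch below. It is not the repository's implementation: the regex sentence splitter and the whitespace-based `count_tokens` are stand-ins chosen to keep the example self-contained, and `greedy_chunks` is a hypothetical name.

```python
import re


def split_sentences(text):
    """Naive sentence splitter (assumption; a real miner would use a proper tokenizer)."""
    return [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]


def count_tokens(text):
    """Stand-in token counter: whitespace-separated words (assumption)."""
    return len(text.split())


def greedy_chunks(document, max_tokens):
    """Concatenate adjacent sentences until adding one more would exceed max_tokens."""
    chunks, current = [], []
    for sentence in split_sentences(document):
        candidate = " ".join(current + [sentence])
        if current and count_tokens(candidate) > max_tokens:
            # The next sentence does not fit: close the current chunk.
            chunks.append(" ".join(current))
            current = [sentence]
        else:
            current.append(sentence)
    if current:
        chunks.append(" ".join(current))
    # Note: a single sentence longer than max_tokens still becomes its own
    # oversized chunk here; a real miner would need to handle that case.
    return chunks


print(greedy_chunks("One two three. Four five six. Seven eight.", max_tokens=6))
```

Because the chunk boundaries depend only on length, unrelated sentences often end up in the same chunk, which is why this approach tends to score poorly.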

To change the behavior of the default miner, edit the logic in neurons.miner.forward to your liking.

Validators check each chunk that is sent to them against the source document that they sent out. To ensure that your chunks match the source document, it is highly encouraged that you use NLTKTextSplitter to split the document by sentences before combining them into chunks.

Each incoming query contains a variable called maxTokensPerChunk. Exceeding this number of tokens in any of your chunks will result in severe reductions in your score, so ensure that your logic does not produce chunks that exceed that number of tokens.
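Before returning a response, a miner could run a quick self-check along the lines below. This is only a sketch under assumptions: the validator's exact matching and token-counting rules are not described here, so the verbatim-substring test and the whitespace `count_tokens` are placeholders, and `check_chunks` is a hypothetical helper.

```python
def count_tokens(text):
    """Stand-in token counter (assumption); swap in the tokenizer the subnet uses."""
    return len(text.split())


def check_chunks(document, chunks, max_tokens_per_chunk):
    """Return a list of (chunk index, problem) pairs; an empty list means no issues found."""
    problems = []
    for index, chunk in enumerate(chunks):
        # Assumed check: every chunk should appear verbatim in the source document.
        if chunk not in document:
            problems.append((index, "not found verbatim in source document"))
        # Chunks over the limit are heavily penalized, so flag them early.
        if count_tokens(chunk) > max_tokens_per_chunk:
            problems.append((index, "exceeds token limit"))
    return problems


source = "a b c. d e f."
print(check_chunks(source, ["a b c.", "d e f. g"], max_tokens_per_chunk=3))
```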

Chunk quality is calculated based on the semantic consistency within a given chunk and its semantic similarity to other chunks. To produce the best chunks, ensure that all text within a chunk is related and that text from different chunks is not.

There are various approaches to chunking that can produce high-quality chunks. We recommend that you use recursive or semantic chunking. To learn more about chunking, we recommend you read through this blogpost.

Recursive chunking first splits the document into a small number of chunks. It then checks whether the chunks fit the desired criteria (size, semantic self-similarity, etc.). If the chunks do not fit those criteria, the recursive chunking algorithm is called on the individual chunks to split them further.
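A minimal sketch of the recursive idea, assuming the only criterion is size and that the document has already been split into sentences. Splitting in half at each step and counting tokens by whitespace are simplifications made for this example; a fuller version could also test semantic self-similarity before accepting a chunk.

```python
def count_tokens(text):
    """Stand-in token counter: whitespace-separated words (assumption)."""
    return len(text.split())


def recursive_chunks(sentences, max_tokens):
    """Halve a list of sentences until every piece fits within max_tokens."""
    if not sentences:
        return []
    text = " ".join(sentences)
    # Accept the piece if it meets the size criterion, or if it cannot be split further.
    if count_tokens(text) <= max_tokens or len(sentences) == 1:
        return [text]
    middle = len(sentences) // 2
    # Otherwise, call the same algorithm on each half.
    return (recursive_chunks(sentences[:middle], max_tokens)
            + recursive_chunks(sentences[middle:], max_tokens))


print(recursive_chunks(["A b.", "C d.", "E f.", "G h."], max_tokens=4))
```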

Semantic chunking first splits the document into individual sentences. It then creates a sentence group for each sentence consisting of the 'anchor' sentence and some number of surrounding sentences. These sentence groups are then compared to each other sequentially. Wherever there is a high semantic difference between adjacent sentence groups, one chunk is delineated from the next.
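The procedure can be sketched as below. To stay self-contained, the example uses a toy bag-of-words `embed` function in place of a real embedding model; the `window` and `threshold` parameters and their default values are assumptions for illustration and would need tuning in practice.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: bag-of-words counts (stand-in for a real embedding model)."""
    return Counter(re.findall(r"[a-z']+", text.lower()))


def cosine_distance(a, b):
    """1 - cosine similarity between two word-count vectors."""
    dot = sum(a[word] * b[word] for word in a)
    norm = (math.sqrt(sum(v * v for v in a.values()))
            * math.sqrt(sum(v * v for v in b.values())))
    return 1.0 - dot / norm if norm else 1.0


def semantic_chunks(sentences, window=1, threshold=0.8):
    """Start a new chunk wherever adjacent sentence groups differ strongly."""
    if not sentences:
        return []
    # One group per anchor sentence: the anchor plus `window` neighbours on each side.
    groups = [
        embed(" ".join(sentences[max(0, i - window): i + window + 1]))
        for i in range(len(sentences))
    ]
    chunks, current = [], [sentences[0]]
    for i in range(1, len(sentences)):
        # A large distance between neighbouring groups marks a chunk boundary.
        if cosine_distance(groups[i - 1], groups[i]) > threshold:
            chunks.append(" ".join(current))
            current = []
        current.append(sentences[i])
    chunks.append(" ".join(current))
    return chunks


example = ["Cats purr softly.", "Cats purr loudly.",
           "Stocks fell sharply.", "Stocks fell again."]
print(semantic_chunks(example, window=0, threshold=0.5))
```

A larger `window` smooths the comparison, since neighbouring groups then share sentences, so the threshold usually has to be lowered as the window grows.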

There are many freely available chunking utilities that can help you get started on your own chunking algorithm. See this Pinecone repo and this document for more information.