CoSMix: Compositional Semantic Mix for Domain Adaptation in 3D LiDAR Segmentation [ECCV2022 - TPAMI]

The official implementation of our works "CoSMix: Compositional Semantic Mix for Domain Adaptation in 3D LiDAR Segmentation" and "Compositional Semantic Mix for Domain Adaptation in Point Cloud Segmentation".

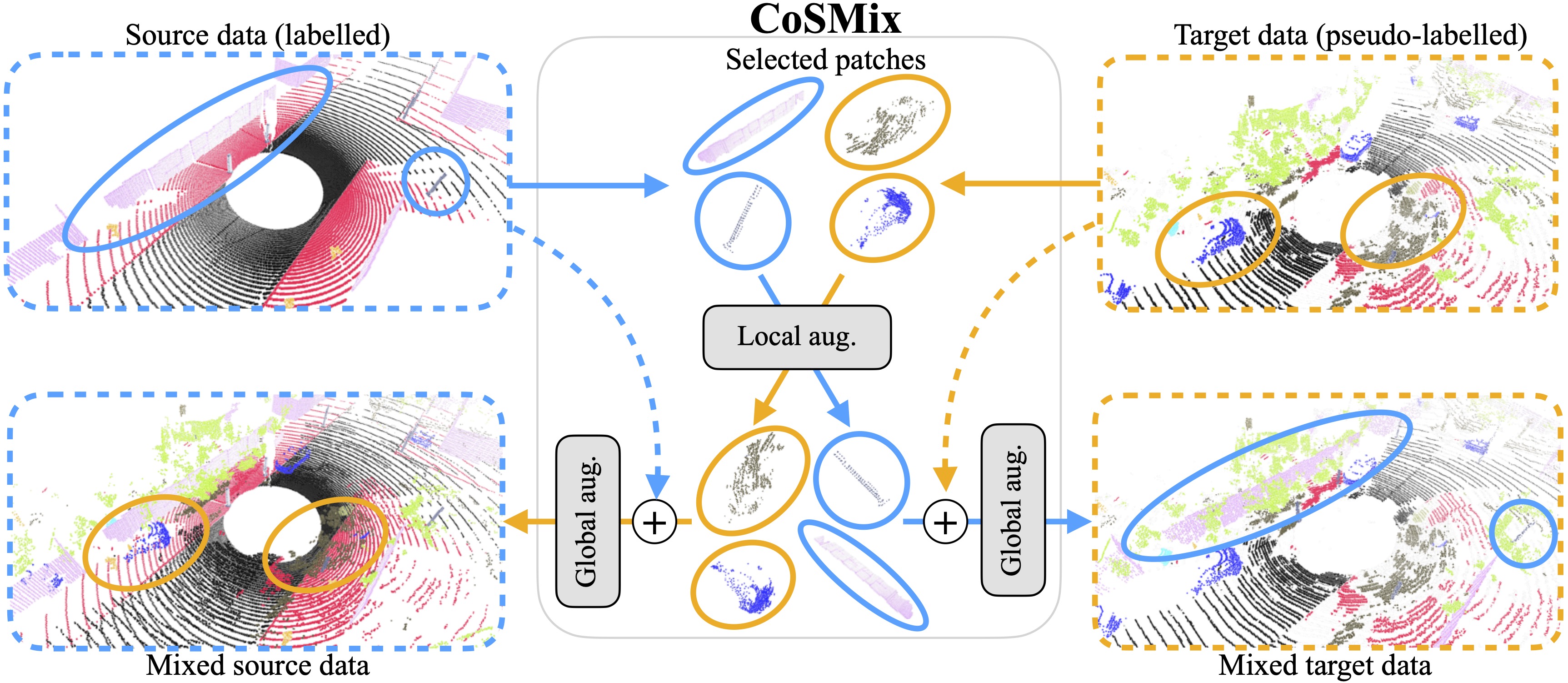

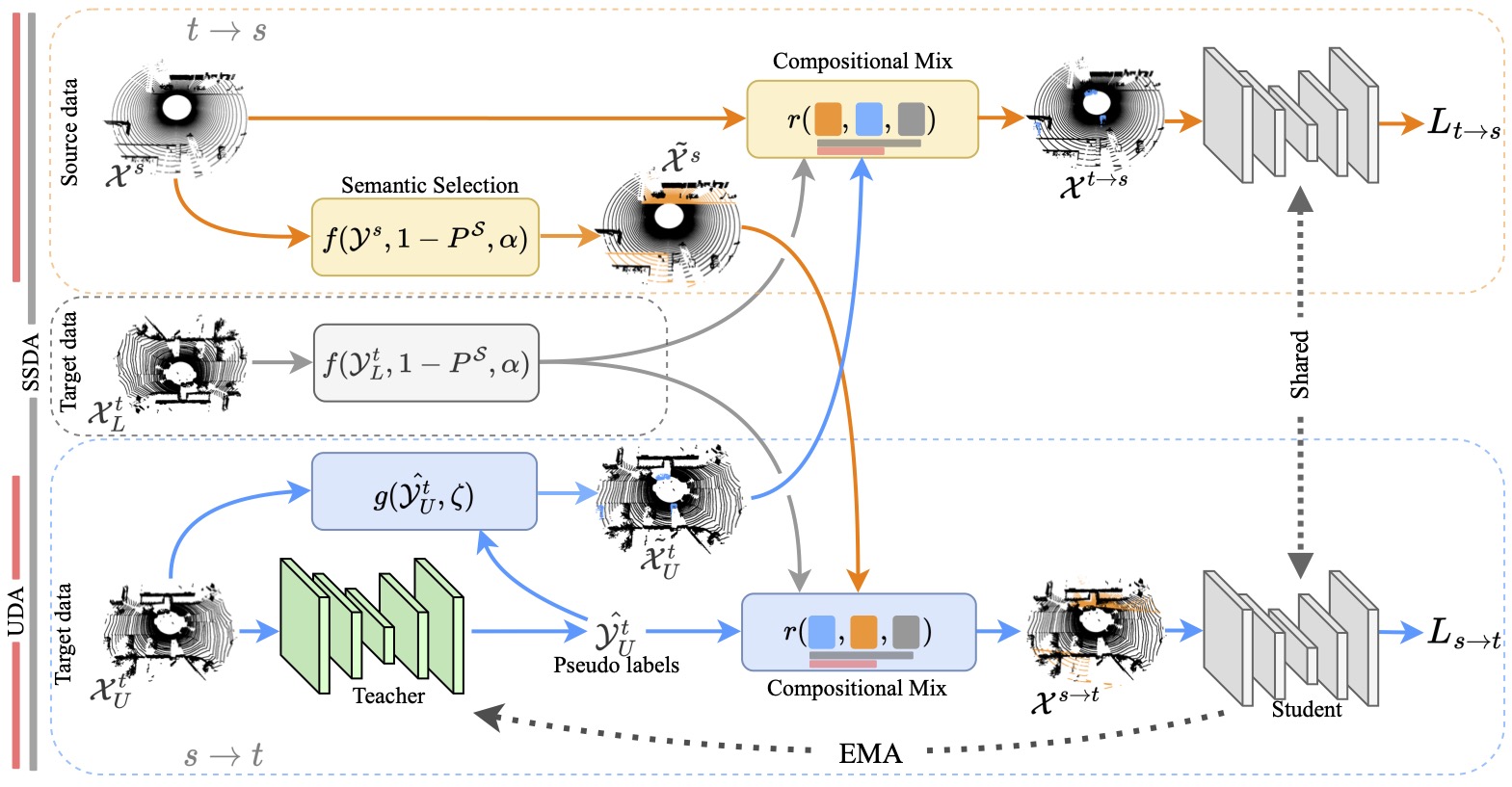

Several Unsupervised Domain Adaptation (UDA) methods for point cloud data have recently been proposed to improve model generalization across different sensors and environments. Meanwhile, researchers working on UDA problems in the image domain have shown that sample mixing can mitigate domain shift. We propose a new approach of sample mixing for point cloud UDA, namely Compositional Semantic Mix (CoSMix), the first UDA approach for point cloud segmentation based on sample mixing. CoSMix consists of a two-branch symmetric network that can process synthetic labelled data (source) and real-world unlabelled point clouds (target) concurrently. Each branch operates on one domain by mixing selected pieces of data from the other one, and by using the semantic information derived from source labels and target pseudo-labels. We further extend CoSMix to the one-shot semi-supervised DA (SSDA) setting. In this extension, (one-shot) target labels are easily integrated into our pipeline, providing additional noise-free guidance during adaptation.
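The two-branch mixing described above can be illustrated with a minimal sketch. It assumes each scan is an `(N, 3)` NumPy array of coordinates paired with an `(N,)` array of labels (ground-truth labels for source, pseudo-labels for target), and it pastes into one scan the points of the other scan that belong to a chosen set of classes. The function names and the fixed class lists are hypothetical; the class-selection strategy and the local augmentations used in the paper are not reproduced, so this is not the repository's implementation.

```python
import numpy as np


def compositional_mix(dst_points, dst_labels, src_points, src_labels, classes):
    """Paste the points of `src` whose label is in `classes` into `dst`.

    Simplified, hypothetical sketch: no class sampling, no augmentation.
    """
    keep = np.isin(src_labels, classes)  # mask of points from the selected classes
    mixed_points = np.concatenate([dst_points, src_points[keep]], axis=0)
    mixed_labels = np.concatenate([dst_labels, src_labels[keep]], axis=0)
    return mixed_points, mixed_labels


def two_branch_mix(source, target, source_classes, target_classes):
    """Build the inputs of the two symmetric branches.

    source: (points, labels) with ground-truth labels.
    target: (points, pseudo_labels) predicted by a teacher model.
    """
    # Target branch: target scan + selected source patches.
    to_target = compositional_mix(*target, *source, source_classes)
    # Source branch: source scan + selected target patches (with pseudo-labels).
    to_source = compositional_mix(*source, *target, target_classes)
    return to_source, to_target
```

For example, `two_branch_mix(src, tgt, source_classes=[1], target_classes=[3])` returns the source scan enriched with the target points pseudo-labelled as class 3, and the target scan enriched with the source points labelled as class 1.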

🔥 For more information follow the PAPER link!:fire:

Authors: Cristiano Saltori, Fabio Galasso, Giuseppe Fiameni, Nicu Sebe, Elisa Ricci, Fabio Poiesi

- 3/2024: CoSMix SSDA code has been RELEASED!

- 8/2023: CoSMix extension to one-shot SSDA has been accepted at T-PAMI!:fire: Paper link

- 12/2022: CoSMix leads the new SynLiDAR to SemanticKITTI benchmark! 🚀

- 7/2022: CoSMix code has been RELEASED!

- 7/2022: CoSMix is accepted to ECCV 2022!:fire: Our work is the first to use compositional mix between domains for adaptation in LiDAR segmentation!

The code has been tested with Docker (see the Docker container below) with Python 3.8, CUDA 10.2/11.1, pytorch 1.8.0 and pytorch-lightning 1.4.1. Other versions may require updating the code for compatibility.

In your virtual environment, follow the MinkowskiEngine installation instructions. This will install all the base packages.

Additionally, you need to install:

- open3d 0.13.0

- pytorch-lightning 1.4.1

- wandb

- tqdm

- pickle (part of the Python standard library, so no separate installation is needed)
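To compare an existing environment against the tested versions listed above, a small check based on the standard-library `importlib.metadata` module (available from Python 3.8) could look like the sketch below. The distribution names are assumed to be the usual PyPI names (`torch`, `pytorch-lightning`, `open3d`), and local build suffixes such as `+cu111` are ignored in the comparison.

```python
from importlib import metadata

# Versions taken from this README; distribution names are assumed PyPI names.
TESTED_VERSIONS = {
    "torch": "1.8.0",
    "pytorch-lightning": "1.4.1",
    "open3d": "0.13.0",
}


def check_versions(expected=TESTED_VERSIONS):
    """Return {dist: (expected, found_or_None, matches)} for each distribution."""
    report = {}
    for dist, wanted in expected.items():
        try:
            found = metadata.version(dist)
        except metadata.PackageNotFoundError:
            found = None
        # Drop a local version label such as "+cu111" before comparing.
        matches = found is not None and found.split("+")[0] == wanted
        report[dist] = (wanted, found, matches)
    return report


if __name__ == "__main__":
    for dist, (wanted, found, ok) in check_versions().items():
        print(f"{dist}: tested {wanted}, installed {found} -> {'ok' if ok else 'check'}")
```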

If you want to use Docker, you can find a ready-to-use container at crissalto/online-adaptation-mink:1.3; just make sure you have installed NVIDIA drivers compatible with CUDA 11.1.

Download the SynLiDAR dataset from here, then prepare the data folders as follows:

    ./
    ├──
    ├── ...
    └── path_to_data_shown_in_config/
        └── sequences/
            ├── 00/
            │   ├── velodyne/
            │   │   ├── 000000.bin
            │   │   ├── 000001.bin
            │   │   └── ...
            │   └── labels/
            │       ├── 000000.label
            │       ├── 000001.label
            │       └── ...
            └── 12/
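Before training, it can help to verify that every scan has a matching label file. The following is a minimal sketch using `pathlib`, assuming the `sequences/<id>/velodyne/*.bin` and `sequences/<id>/labels/*.label` layout shown above; `check_layout` is a hypothetical helper, not part of the repository, and the same check applies to the SemanticKITTI and SemanticPOSS layouts.

```python
from pathlib import Path


def check_layout(root, sequences):
    """Return {sequence: (num_scans, scan ids without a .label file)}.

    root is the folder that contains `sequences/`
    (path_to_data_shown_in_config in the tree above).
    """
    report = {}
    for seq in sequences:
        seq_dir = Path(root) / "sequences" / seq
        velodyne = seq_dir / "velodyne"
        scans = sorted(velodyne.glob("*.bin")) if velodyne.is_dir() else []
        missing = [
            scan.stem
            for scan in scans
            if not (seq_dir / "labels" / f"{scan.stem}.label").is_file()
        ]
        report[seq] = (len(scans), missing)
    return report
```

For SynLiDAR, sequences 00 to 12 can be checked with `check_layout("path_to_data_shown_in_config", [f"{i:02d}" for i in range(13)])`; an empty list of missing ids means every scan has its label file.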

To download SemanticKITTI follow the instructions here. Then, prepare the paths as follows:

    ./
    ├──
    ├── ...
    └── path_to_data_shown_in_config/
        └── sequences/
            ├── 00/
            │   ├── velodyne/
            │   │   ├── 000000.bin
            │   │   ├── 000001.bin
            │   │   └── ...
            │   ├── labels/
            │   │   ├── 000000.label
            │   │   ├── 000001.label
            │   │   └── ...
            │   ├── calib.txt
            │   ├── poses.txt
            │   └── times.txt
            └── 08/
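The `.bin` and `.label` files follow the SemanticKITTI point-cloud format: each point is stored as four `float32` values (x, y, z, remission), and each label is a `uint32` whose lower 16 bits hold the semantic class and whose upper 16 bits hold the instance id. Below is a minimal NumPy reader under that assumption; the function names are hypothetical, and the repository's own loaders and its mapping from raw class ids to training classes are not shown.

```python
import numpy as np


def load_scan(bin_path):
    """Return (xyz, remission) from a SemanticKITTI-style .bin file."""
    scan = np.fromfile(bin_path, dtype=np.float32).reshape(-1, 4)
    return scan[:, :3], scan[:, 3]


def load_labels(label_path):
    """Return (semantic, instance) ids from a SemanticKITTI-style .label file."""
    raw = np.fromfile(label_path, dtype=np.uint32)
    semantic = raw & 0xFFFF  # lower 16 bits: raw semantic class id
    instance = raw >> 16     # upper 16 bits: instance id
    return semantic, instance
```

A scan and its label file should contain the same number of points, so `len(load_scan(...)[0]) == len(load_labels(...)[0])` is a quick sanity check for each frame.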

To download SemanticPOSS follow the instructions here. Then, prepare the paths as follows:

    ./
    ├──
    ├── ...
    └── path_to_data_shown_in_config/
        └── sequences/
            ├── 00/
            │   ├── velodyne/
            │   │   ├── 000000.bin
            │   │   ├── 000001.bin
            │   │   └── ...
            │   ├── labels/
            │   │   ├── 000000.label
            │   │   ├── 000001.label
            │   │   └── ...
            │   ├── tag
            │   ├── calib.txt
            │   ├── poses.txt
            │   └── instances.txt
            └── 06/

After downloading the datasets you need, create soft links in the data directory:

    cd cosmix-uda
    mkdir data
    cd data
    ln -s PATH/TO/SEMANTICKITTI SemanticKITTI
    # do the same for the other datasets
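If you prefer to script this step, the same links can be created from Python with `pathlib`. This sketch assumes the repository root is `cosmix-uda`; the `SemanticKITTI` link name comes from the shell commands, while any other link names you pass (for SynLiDAR or SemanticPOSS) are hypothetical and must match the paths used in your configs. Existing entries are left untouched.

```python
from pathlib import Path


def link_datasets(repo_root, datasets):
    """Create repo_root/data/<name> -> <dataset path> symbolic links.

    datasets maps link names (e.g. "SemanticKITTI") to dataset folders.
    Returns the sorted names of the entries in the data directory.
    """
    data_dir = Path(repo_root) / "data"
    data_dir.mkdir(exist_ok=True)
    for name, target in datasets.items():
        link = data_dir / name
        if link.is_symlink() or link.exists():
            continue  # do not overwrite an existing link or folder
        link.symlink_to(Path(target).resolve(), target_is_directory=True)
    return sorted(entry.name for entry in data_dir.iterdir())
```

For example, `link_datasets("cosmix-uda", {"SemanticKITTI": "PATH/TO/SEMANTICKITTI"})` creates `cosmix-uda/data/SemanticKITTI`.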

We use SynLiDAR as the synthetic source dataset. The first stage of CoSMix is a warm-up stage in which the teacher is pre-trained on the source dataset. To warm up the segmentation model on SynLiDAR2SemanticKITTI run

python train_source.py --config_file configs/source/synlidar2semantickitti.yaml

while to warm up the segmentation model on SynLiDAR2SemanticPOSS run

python train_source.py --config_file configs/source/synlidar2semanticposs.yaml

NB: we provide source pre-trained models, so you can skip this step and move directly to adaptation! 🚀

NB: the code uses wandb for logging. Follow the instructions here and update config.wandb.project_name and config.wandb.entity_name in your config file.

You can download the pre-trained models for both SynLiDAR2SemanticKITTI and SynLiDAR2SemanticPOSS from here and decompress them in cosmix-uda/pretrained_models/.

To adapt with CoSMix-UDA on SynLiDAR2SemanticKITTI run

python adapt_cosmix_uda.py --config_file configs/adaptation/uda/synlidar2semantickitti_cosmix.yaml

while to adapt with CoSMix-UDA on SynLiDAR2SemanticPOSS run

python adapt_cosmix_uda.py --config_file configs/adaptation/uda/synlidar2semanticposs_cosmix.yaml
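During adaptation, a student model is trained on the mixed inputs while the pre-trained teacher provides target pseudo-labels. As a generic illustration of this mean-teacher pattern, and not the repository's code, the sketch below keeps only pseudo-labels whose softmax confidence reaches a threshold and updates the teacher as an exponential moving average (EMA) of the student. The threshold, momentum, ignore index and the dict-of-arrays parameter format are all assumptions; check the paper and the adaptation configs for the actual procedure and values.

```python
import numpy as np

IGNORE = -1  # hypothetical ignore index for discarded pseudo-labels


def softmax(logits):
    """Row-wise softmax over class logits of shape (N, C)."""
    z = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def select_pseudo_labels(teacher_logits, threshold=0.9):
    """Keep the teacher's argmax class only where its confidence >= threshold."""
    probs = softmax(teacher_logits)
    confidence = probs.max(axis=1)
    labels = probs.argmax(axis=1)
    labels[confidence < threshold] = IGNORE
    return labels


def ema_update(teacher, student, momentum=0.99):
    """In place: teacher <- momentum * teacher + (1 - momentum) * student."""
    for name, s_param in student.items():
        teacher[name] = momentum * teacher[name] + (1.0 - momentum) * s_param
    return teacher
```

A high momentum makes the teacher change slowly, which keeps its pseudo-labels more stable than those of the student being optimized.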

For CoSMix-SSDA, first fine-tune the pre-trained model by running

python finetune_ssda.py --config_file configs/finetuning/synlidar2semantickitti_custom.yaml

This fine-tunes the pre-trained model on the one-shot labelled samples from the target domain (see the paper for additional details).

To adapt with CoSMix-SSDA on SynLiDAR2SemanticKITTI run

python adapt_cosmix_ssda.py --config_file configs/adaptation/synlidar2semantickitti_cosmix.yaml

Repeat the same procedure for SemanticPOSS.

NB: remember to update the model paths in the configs to point to your pre-trained models, and to set the wandb information of your account.

To evaluate pre-trained models after warm-up, run

python eval.py --config_file configs/config-file-of-the-experiment.yaml --resume_path PATH-TO-EXPERIMENT

Add --eval_source to run the evaluation on source data, or --eval_target to run it on target data.

This iterates over all the checkpoints in PATH-TO-EXPERIMENT/checkpoints/ and evaluates each of them.
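The papers report segmentation results as per-class Intersection over Union (IoU) and its mean (mIoU). A minimal sketch of computing them from a confusion matrix, assuming integer class ids in `[0, num_classes)` and a hypothetical ignore index of `-1` for unlabelled points; this is not the repository's evaluation code.

```python
import numpy as np


def confusion_matrix(pred, gt, num_classes, ignore_index=-1):
    """Rows are ground-truth classes, columns are predicted classes."""
    pred, gt = np.asarray(pred), np.asarray(gt)
    valid = gt != ignore_index  # drop unlabelled points
    idx = gt[valid] * num_classes + pred[valid]
    counts = np.bincount(idx, minlength=num_classes ** 2)
    return counts.reshape(num_classes, num_classes)


def mean_iou(conf):
    """Per-class IoU = TP / (TP + FP + FN); classes never seen are NaN."""
    tp = np.diag(conf).astype(float)
    fp = conf.sum(axis=0) - tp  # predicted as c, but not c
    fn = conf.sum(axis=1) - tp  # actually c, but predicted otherwise
    denom = tp + fp + fn
    iou = np.where(denom > 0, tp / np.maximum(denom, 1), np.nan)
    return iou, np.nanmean(iou)
```

Accumulating one confusion matrix over the whole target split and computing IoU once at the end avoids biasing the result towards scans with few points.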

Similarly, after adaptation, use

python eval.py --config_file configs/config-file-of-the-experiment.yaml --resume_path PATH-TO-EXPERIMENT --is_student

Here, --is_student specifies that the model to be evaluated is a student model.

You can save predictions for future visualizations by adding --save_predictions.

If you use our work, please cite us:

    @article{saltori2023compositional,
      title={Compositional Semantic Mix for Domain Adaptation in Point Cloud Segmentation},
      author={Saltori, Cristiano and Galasso, Fabio and Fiameni, Giuseppe and Sebe, Nicu and Poiesi, Fabio and Ricci, Elisa},
      journal={IEEE Transactions on Pattern Analysis and Machine Intelligence},
      year={2023},
      publisher={IEEE}
    }

    @inproceedings{saltori2022cosmix,
      title={Cosmix: Compositional semantic mix for domain adaptation in 3d lidar segmentation},
      author={Saltori, Cristiano and Galasso, Fabio and Fiameni, Giuseppe and Sebe, Nicu and Ricci, Elisa and Poiesi, Fabio},
      booktitle={European Conference on Computer Vision},
      pages={586--602},
      year={2022},
      organization={Springer}
    }

This work was partially supported by OSRAM GmbH, by the MUR PNRR project FAIR (PE00000013) funded by the NextGenerationEU, by the EU project FEROX Grant Agreement no 101070440, by the PRIN PREVUE (Prot. 2017N2RK7K), the EU ISFP PROTECTOR (101034216) and the EU H2020 MARVEL (957337) projects, and it was carried out in the Vision and Learning joint laboratory of FBK and UNITN.

We thank the open-source project MinkowskiEngine.