- Create a cluster using the `./create-cluster.sh` script

- Apply the sysdig agents: `cd sysdig-agents && ./sysdig-agents-GKE-install.sh && cd ..`

- Run the hipsterapp script: `./hipsterapp.sh`

- Show the GCP console with the newly created cluster

- Go to the `Applications` tab and talk about how to deploy the Sysdig agent directly from there. Also talk about eBPF and how it is used to deploy for COS.

- Mention the different ways to deploy our agent: as an application, operator, Helm charts, and kubectl commands. We deployed this ahead of time using kubectl commands.

- Show the hipster app by going to the `Services` tab and clicking on the load balancer IP of the frontend service.

- Go to the PowerPoint and explain the workflow of the CI/CD pipeline. Also explain the 4 bullet points in the PowerPoint slide that cover the next two points below.

- The Jenkins pipeline is built only for the frontend microservice, so you can go to `src/frontend` and make changes there.

- Add a vulnerability to the frontend microservice under `src/frontend`: expose port 22 in the Dockerfile, add an env variable with a password, add a file with a private key, and use an old version of alpine (3.4). You can just uncomment the comments in the Dockerfile and comment out others as appropriate.

- Run `git commit -am 'vuln introduced'` and then `git push`

- Show the pipeline progress in Jenkins https://54.208.144.191/jenkins/job/hipster-frontend/

- Show the failure report.

- Show the scanning in GCR under sysdig-anthos-demo (you have a dev and prod repo) and highlight the difference between the vulnerability scanning

- You can make changes to `src/frontend/templates/header.html` and change the page title under the head section. Change the title to `Hipster Shop VERSION 2.0` under `<a href="/" class="navbar-brand d-flex align-items-center">`. This should already be done for you.

- Uncomment and comment to get the Dockerfile back in shape

- Run `git commit -am 'vuln removed'` and then `git push`

- Show the Jenkins pipeline succeeding

- Show the Hipster app with the new `Hipster Shop VERSION 2.0` title.

- Finish off by showing the Sysdig Scanning Policy that was in place. It's called `GoogleEventDemo`

- Show the Topology map at the bottom of the `K8s Golden Signals for Hipster` dashboard and show that things are going well.

- Run the command: `kubectl delete deployment checkoutservice`

- Show that the app broke by going to the Hipster app and trying to purchase something. This will also generate a 500 error for the capture file. Sometimes the load generator doesn't generate this in time.

- To see the performance degradation in Response Time and Error Rate, check the `K8s Golden Signals for Hipster` dashboard and change the time scale between 1 minute, 10 minutes and 10 seconds.

- This can be skipped depending on time -- Check the capture file and go to HTTP Errors and drill in. Show the connection problem to the given IP and port. Then run `kubectl get svc` to show that the frontend service can't talk to the checkout service. The error looks like `rpc error: code = Unavailable desc = all SubConns are in TransientFailure, latest connection error: connection error: desc = "transport: Error while dialing dial tcp 10.35.246.154:5050: i/o timeout" failed to complete the order`

- Run the command: `kubectl apply -f release/kubernetes-manifests.yaml` to bring things back to normal.

- Privilege Escalation: Launch Privileged Container -- use `kubectl apply -f privilegedContainer.yaml` which will trigger the policy

- Execution: Run a terminal shell in container -- use `kubectl exec -it nginx-privileged bash`

- Discovery: Launch Suspicious Network Tool in Container -- first create a bash history file because it's not there usually and run an nmap scan -- use `touch ~/.bash_history && nmap 10.35.244.69 -Pn -p 50051`

- Credential Access: Search Private Keys or Passwords -- use `grep -ri -e "BEGIN RSA PRIVATE" /app`

- Exfiltration: Interpreted procs outbound network activity -- in the nmap container run `cp /app/key/throwAway.pem my_file.txt && python /app/connect.py`; this will trigger the policy

- Defense Evasion: Delete Bash History -- run `shred -f ~/.bash_history`

- Check the capture file generated based on the Exfiltration event. (Sysdig Inspect sometimes shows `Unable to load data`; that's okay, you can still follow the instructions below.)

  a. Click `Sysdig Secure Notifications`, `Executed Commands`, `New SSH Connections`, and `Accessed Files`, then zoom around `New SSH Connections` and talk about how it happened right before the trigger.

  b. Drill into `New SSH Connections` and show the outbound connection to a public IP.

  c. Go back and drill into `Accessed Files`.

  d. Search using Find Text for the `throwAway` file and drill into I/O stream.

  e. Show how that file contains a private key.

  f. Go back to `Accessed Files` and search for `connect.py`.

  g. Drill into I/O stream.

  h. Talk about the scp operation that exfiltrated the private key to a hacker's machine.

- Talk about how we could have looked for private key files during the scanning process. Also talk about the kill container action that we could have taken in the very beginning.
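The last talking point, looking for private key files before anything is deployed, can be illustrated with a small pre-build check. This is only a sketch: `find_private_keys` is a hypothetical helper name, the demo directory and its files are made up, and it is not how Sysdig Secure image scanning is implemented.

```shell
#!/usr/bin/env bash
# Sketch: list files that contain a PEM private key header.
# Hypothetical helper; not the Sysdig Secure scanning implementation.
set -euo pipefail

# Print the path of every file under $1 that contains a private key marker.
find_private_keys() {
  local dir="$1"
  # -r recurse, -l print matching file names only, -E extended regex.
  # "|| true" keeps a non-zero grep exit (e.g. no match) from aborting under set -e.
  grep -rlE -e '-----BEGIN ([A-Z]+ )?PRIVATE KEY-----' "$dir" || true
}

# Demo on a throwaway directory: one fake key file and one harmless file.
demo_dir="$(mktemp -d)"
trap 'rm -rf "$demo_dir"' EXIT
printf '%s\n' '-----BEGIN RSA PRIVATE KEY-----' 'not-a-real-key' > "$demo_dir/throwAway.pem"
printf 'hello\n' > "$demo_dir/readme.txt"

found="$(find_private_keys "$demo_dir")"
echo "files with private keys: ${found:-none}"
```

Pointing `find_private_keys` at `src/frontend` before the `git push` would have flagged the key file that the demo later exfiltrates.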

Destroy the cluster using the destroy-cluster.sh script

- You can do kubectl run using `kubectl run -i --tty nmap --image=samgabrail/networktools -- bash`

- There are three files in this folder called `ScanningRule.json`, `CustomFalcoRules.yaml`, and `MITRE_SysdigSecure_Policies.json` that need to be deployed via the sdc-cli or the API to Sysdig Secure.

- The `samgabrail/networktools` container that is used in this demo is found on Docker Hub at https://hub.docker.com/r/samgabrail/networktools and the GitHub repo is `samgabrail/falco` at https://github.com/samgabrail/falco

The Notes below are the default notes for the Hipster App taken from their github repo at https://github.com/GoogleCloudPlatform/microservices-demo

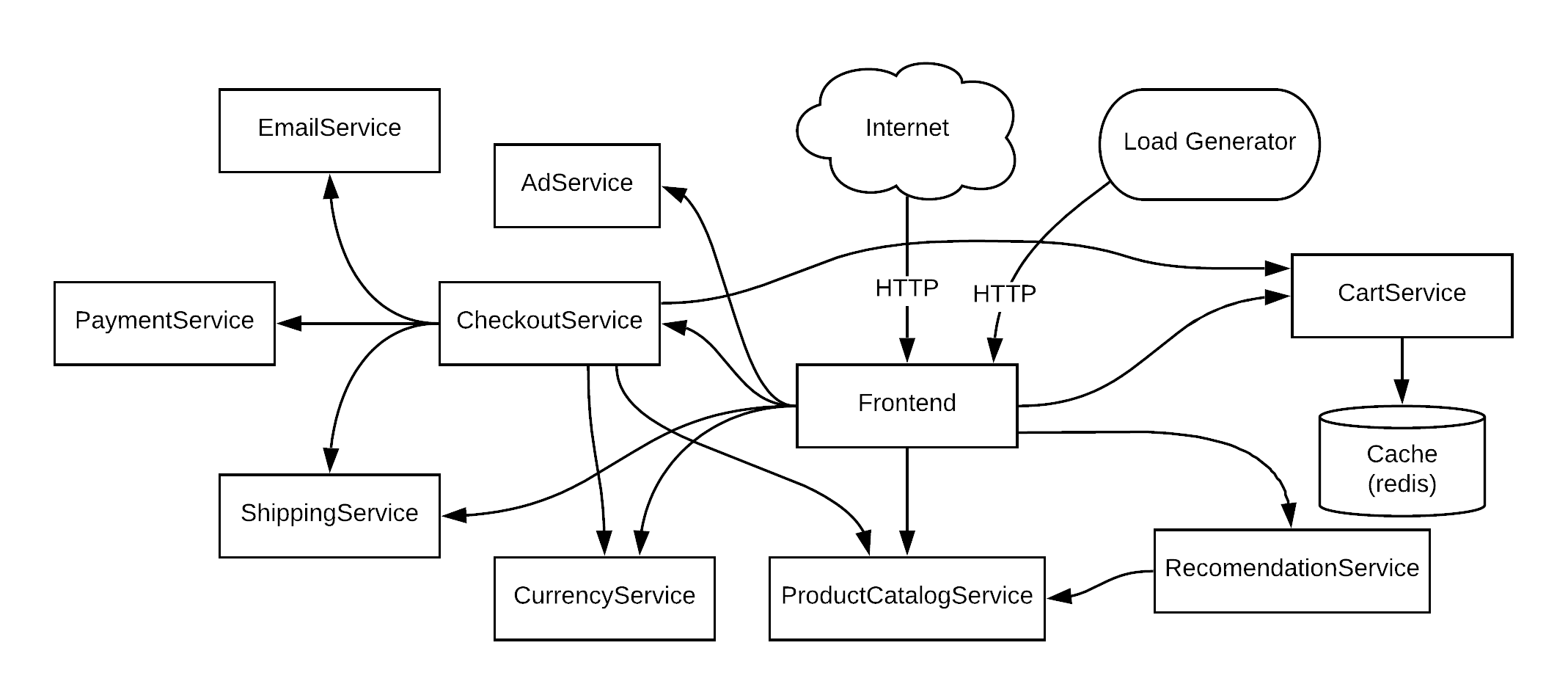

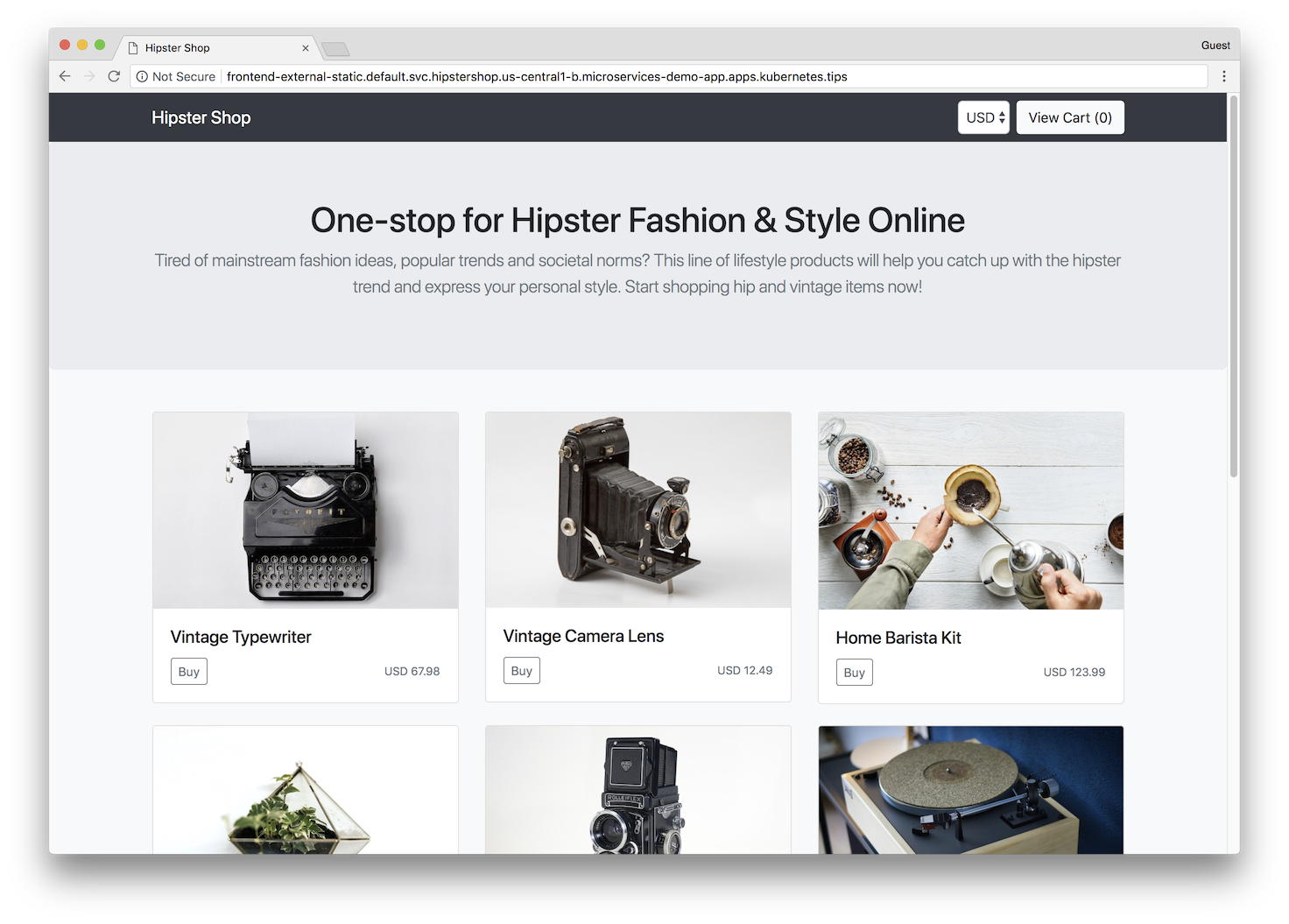

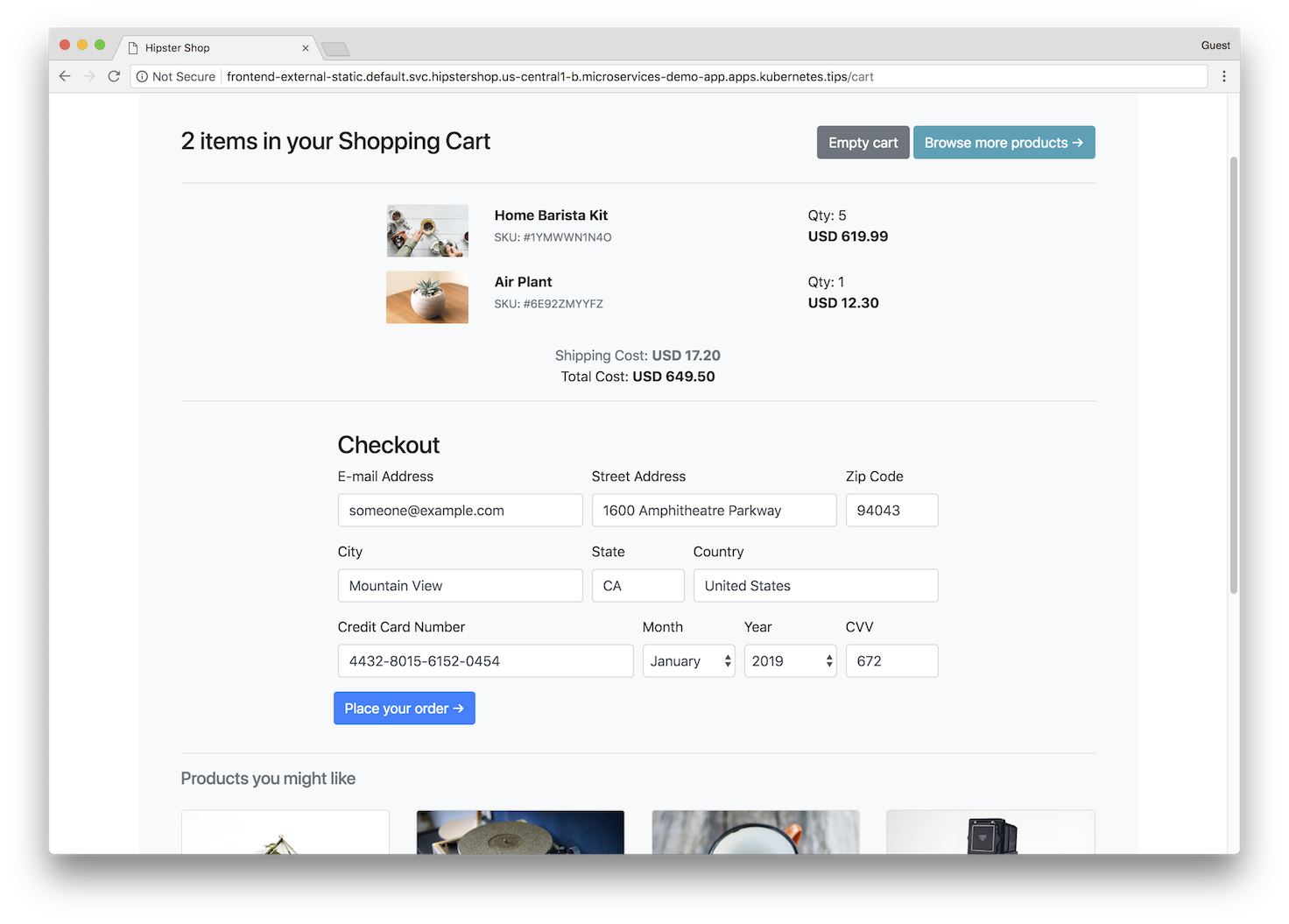

This project contains a 10-tier microservices application. The application is a web-based e-commerce app called “Hipster Shop” where users can browse items, add them to the cart, and purchase them.

Google uses this application to demonstrate use of technologies like Kubernetes/GKE, Istio, Stackdriver, gRPC and OpenCensus. This application works on any Kubernetes cluster (such as a local one), as well as Google Kubernetes Engine. It’s easy to deploy with little to no configuration.

If you’re using this demo, please ★Star this repository to show your interest!

👓Note to Googlers: Please fill out the form at go/microservices-demo if you are using this application.

| Home Page | Checkout Screen |
|---|---|
| | |

Hipster Shop is composed of many microservices written in different languages that talk to each other over gRPC.

Find Protocol Buffers Descriptions at the ./pb directory.

| Service | Language | Description |
|---|---|---|
| frontend | Go | Exposes an HTTP server to serve the website. Does not require signup/login and generates session IDs for all users automatically. |
| cartservice | C# | Stores the items in the user's shopping cart in Redis and retrieves it. |
| productcatalogservice | Go | Provides the list of products from a JSON file and ability to search products and get individual products. |
| currencyservice | Node.js | Converts one money amount to another currency. Uses real values fetched from European Central Bank. It's the highest QPS service. |
| paymentservice | Node.js | Charges the given credit card info (mock) with the given amount and returns a transaction ID. |
| shippingservice | Go | Gives shipping cost estimates based on the shopping cart. Ships items to the given address (mock). |
| emailservice | Python | Sends users an order confirmation email (mock). |
| checkoutservice | Go | Retrieves user cart, prepares order and orchestrates the payment, shipping and the email notification. |
| recommendationservice | Python | Recommends other products based on what's given in the cart. |
| adservice | Java | Provides text ads based on given context words. |
| loadgenerator | Python/Locust | Continuously sends requests imitating realistic user shopping flows to the frontend. |

- Kubernetes/GKE: The app is designed to run on Kubernetes (both locally on "Docker for Desktop", as well as on the cloud with GKE).

- gRPC: Microservices use a high volume of gRPC calls to communicate to each other.

- Istio: Application works on Istio service mesh.

- OpenCensus Tracing: Most services are instrumented using OpenCensus trace interceptors for gRPC/HTTP.

- Stackdriver APM: Many services are instrumented with Profiling, Tracing and Debugging. In addition to these, using Istio enables features like Request/Response Metrics and Context Graph out of the box. When the app is running outside of Google Cloud, this code path remains inactive.

- Skaffold: Application is deployed to Kubernetes with a single command using Skaffold.

- Synthetic Load Generation: The application demo comes with a background job that creates realistic usage patterns on the website using Locust load generator.

We offer three installation methods:

- Running locally with “Docker for Desktop” (~20 minutes): You will build and deploy microservices images to a single-node Kubernetes cluster running on your development machine.

- Running on Google Kubernetes Engine (GKE) (~30 minutes): You will build, upload and deploy the container images to a Kubernetes cluster on Google Cloud.

- Using pre-built container images (~10 minutes; you will still need to follow one of the steps above up until the `skaffold run` command): With this option, you will use pre-built container images that are available publicly, instead of building them yourself, which takes a long time.

💡 Recommended if you're planning to develop the application or giving it a try on your local cluster.

- Install tools to run a Kubernetes cluster locally:

  - kubectl (can be installed via `gcloud components install kubectl`)

  - Docker for Desktop (Mac/Windows): It provides Kubernetes support as noted here.

  - skaffold (ensure version ≥v0.20)

- Launch “Docker for Desktop”. Go to Preferences:

  - choose “Enable Kubernetes”,

  - set CPUs to at least 3, and Memory to at least 6.0 GiB

  - on the "Disk" tab, set at least 32 GB disk space

- Run `kubectl get nodes` to verify you're connected to “Kubernetes on Docker”.

- Run `skaffold run` (first time will be slow, it can take ~20 minutes). This will build and deploy the application. If you need to rebuild the images automatically as you refactor the code, run the `skaffold dev` command.

- Run `kubectl get pods` to verify the Pods are ready and running. The application frontend should be available at http://localhost:80 on your machine.
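The `kubectl get pods` check above can be scripted rather than read by eye. The sketch below assumes the default table output of `kubectl get pods` (NAME, READY, STATUS columns); `not_ready_pods` is a hypothetical helper and the sample table is made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: list pods that are not fully ready, from `kubectl get pods` output.
set -euo pipefail

# Read the `kubectl get pods` table on stdin and print the names of pods whose
# READY column is not n/n or whose STATUS is not Running.
not_ready_pods() {
  awk 'NR > 1 {
         split($2, ready, "/")
         if (ready[1] != ready[2] || $3 != "Running") print $1
       }'
}

# Made-up sample output, for illustration only.
sample='NAME                          READY   STATUS              RESTARTS   AGE
frontend-5c4745dfdb-x7k2p     1/1     Running             0          2m
cartservice-7c8b4c5d6-abcde   0/1     ContainerCreating   0          2m
checkoutservice-6f6f-zzzzz    1/1     Running             0          2m'

pending="$(printf '%s\n' "$sample" | not_ready_pods)"
echo "not ready: ${pending:-none}"
```

Against a live cluster the same function would be used as `kubectl get pods | not_ready_pods`. If blocking until everything is up is preferable, `kubectl wait --for=condition=Ready pods --all --timeout=300s` is a built-in alternative.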

💡 Recommended if you're using Google Cloud Platform and want to try it on a realistic cluster.

- Install tools specified in the previous section (Docker, kubectl, skaffold)

- Create a Google Kubernetes Engine cluster and make sure `kubectl` is pointing to the cluster. Run, in order: `gcloud services enable container.googleapis.com`, then `gcloud container clusters create demo --enable-autoupgrade --enable-autoscaling --min-nodes=3 --max-nodes=10 --num-nodes=5 --zone=us-central1-a`, then `kubectl get nodes`.

- Enable Google Container Registry (GCR) on your GCP project and configure the `docker` CLI to authenticate to GCR. Run `gcloud services enable containerregistry.googleapis.com`, then `gcloud auth configure-docker -q`.

- In the root of this repository, run `skaffold run --default-repo=gcr.io/[PROJECT_ID]`, where [PROJECT_ID] is your GCP project ID. This command:

  - builds the container images

  - pushes them to GCR

  - applies the `./kubernetes-manifests`, deploying the application to Kubernetes.

  Troubleshooting: If you get "No space left on device" error on Google Cloud Shell, you can build the images on Google Cloud Build: Enable the Cloud Build API, then run `skaffold run -p gcb --default-repo=gcr.io/[PROJECT_ID]` instead.

- Find the IP address of your application with `kubectl get service frontend-external`, then visit the application on your browser to confirm installation.

  Troubleshooting: A Kubernetes bug (will be fixed in 1.12) combined with a Skaffold bug causes the load balancer not to work even after getting an IP address. If you are seeing this, run `kubectl get service frontend-external -o=yaml | kubectl apply -f-` to trigger load balancer reconfiguration.
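On GKE the external IP of `frontend-external` can take a while to appear, so the lookup above is often wrapped in a retry loop. The following is a minimal sketch under stated assumptions: `wait_for_output` and `frontend_ip` are hypothetical helper names, the jsonpath expression is the same one this README uses for the Istio ingress gateway, and the demo uses a fake lookup with a documentation-range address so it runs without a cluster.

```shell
#!/usr/bin/env bash
# Sketch: poll until a command prints something, e.g. a load balancer IP.
set -euo pipefail

# Usage: wait_for_output ATTEMPTS DELAY_SECONDS COMMAND [ARGS...]
# Runs COMMAND until it prints non-empty output; fails after ATTEMPTS tries.
wait_for_output() {
  local attempts="$1" delay="$2" out i
  shift 2
  for ((i = 1; i <= attempts; i++)); do
    out="$("$@" 2>/dev/null || true)"
    if [ -n "$out" ]; then
      printf '%s\n' "$out"
      return 0
    fi
    sleep "$delay"
  done
  return 1
}

# Real lookup; needs a cluster, so it is defined here but not called.
frontend_ip() {
  kubectl get service frontend-external \
    -o jsonpath='{.status.loadBalancer.ingress[0].ip}'
}

# Fake lookup for the demo: prints an address only from the third call on.
calls_file="$(mktemp)"
trap 'rm -f "$calls_file"' EXIT
fake_frontend_ip() {
  echo call >> "$calls_file"
  if (( $(wc -l < "$calls_file") >= 3 )); then
    echo "203.0.113.10"
  fi
}

ip="$(wait_for_output 5 0 fake_frontend_ip)"
echo "frontend would be reachable at http://$ip"
```

With a real cluster, something like `wait_for_output 30 10 frontend_ip` would poll every 10 seconds for up to about five minutes; the attempt count and delay are arbitrary choices.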

💡 Recommended if you want to deploy the app faster in fewer steps to an existing cluster.

NOTE: If you need to create a Kubernetes cluster locally or on the cloud, follow "Option 1" or "Option 2" until you reach the `skaffold run` step.

This option offers you pre-built public container images that are easy to deploy by deploying the release manifest directly to an existing cluster.

Prerequisite: a running Kubernetes cluster (either local or on cloud).

- Clone this repository, and go to the repository directory

- Run `kubectl apply -f ./release/kubernetes-manifests.yaml` to deploy the app.

- Run `kubectl get pods` to see pods are in a Ready state.

- Find the IP address of your application with `kubectl get service/frontend-external`, then visit the application on your browser to confirm installation.

Note: If you followed the GKE deployment steps above, run `skaffold delete` first to delete what's deployed.

- Create a GKE cluster (described in "Option 2").

- Use Istio on GKE add-on to install Istio to your existing GKE cluster: `gcloud beta container clusters update demo --zone=us-central1-a --update-addons=Istio=ENABLED --istio-config=auth=MTLS_PERMISSIVE`

  NOTE: If you need to enable `MTLS_STRICT` mode, you will need to update several manifest files:

  - `kubernetes-manifests/frontend.yaml`: delete "livenessProbe" and "readinessProbe" fields.

  - `kubernetes-manifests/loadgenerator.yaml`: delete "initContainers" field.

- (Optional) Enable Stackdriver Tracing/Logging with Istio Stackdriver Adapter by following this guide.

- Install the automatic sidecar injection (annotate the `default` namespace with the label): `kubectl label namespace default istio-injection=enabled`

- Apply the manifests in `./istio-manifests` directory (this is required only once): `kubectl apply -f ./istio-manifests`

- Deploy the application with `skaffold run --default-repo=gcr.io/[PROJECT_ID]`.

- Run `kubectl get pods` to see pods are in a healthy and ready state.

- Find the IP address of your Istio gateway Ingress or Service, and visit the application. Run `INGRESS_HOST="$(kubectl -n istio-system get service istio-ingressgateway -o jsonpath='{.status.loadBalancer.ingress[0].ip}')"`, then `echo "$INGRESS_HOST"`, then `curl -v "http://$INGRESS_HOST"`.
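One way to confirm that automatic sidecar injection took effect is to check that every pod carries an `istio-proxy` container next to its application container. The sketch below assumes that container name and uses hypothetical helpers (`list_pod_containers`, `missing_sidecar`); the sample data is made up so the example runs without a cluster.

```shell
#!/usr/bin/env bash
# Sketch: find pods that did not get an istio-proxy sidecar.
set -euo pipefail

# Print "pod-name<TAB>container names" per pod; needs a cluster, so it is
# defined here but not called in the demo below.
list_pod_containers() {
  kubectl get pods -o \
    jsonpath='{range .items[*]}{.metadata.name}{"\t"}{.spec.containers[*].name}{"\n"}{end}'
}

# Read "name<TAB>containers" lines on stdin; print pods lacking istio-proxy.
missing_sidecar() {
  awk -F '\t' '{
    n = split($2, names, " ")
    found = 0
    for (i = 1; i <= n; i++) if (names[i] == "istio-proxy") found = 1
    if (!found) print $1
  }'
}

# Made-up sample data, for illustration only.
sample="$(printf 'frontend-abc\tserver istio-proxy\nloadgenerator-xyz\tmain\n')"
unmeshed="$(printf '%s\n' "$sample" | missing_sidecar)"
echo "pods without sidecar: ${unmeshed:-none}"
```

On a live cluster this would be `list_pod_containers | missing_sidecar`. Pods created before the namespace was labeled are not injected retroactively, so they would show up here until they are recreated.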

If you've deployed the application with the `skaffold run` command, you can run `skaffold delete` to clean up the deployed resources.

If you've deployed the application with `kubectl apply -f [...]`, you can run `kubectl delete -f [...]` with the same argument to clean up the deployed resources.

- Google Cloud Next'18 London – Keynote showing Stackdriver Incident Response Management

- Google Cloud Next'18 SF

  - Day 1 Keynote showing GKE On-Prem

  - Day 3 – Keynote showing Stackdriver APM (Tracing, Code Search, Profiler, Google Cloud Build)

- Introduction to Service Management with Istio

- KubeCon EU 2019 - Reinventing Networking: A Deep Dive into Istio's Multicluster Gateways - Steve Dake, Independent

This is not an official Google project.