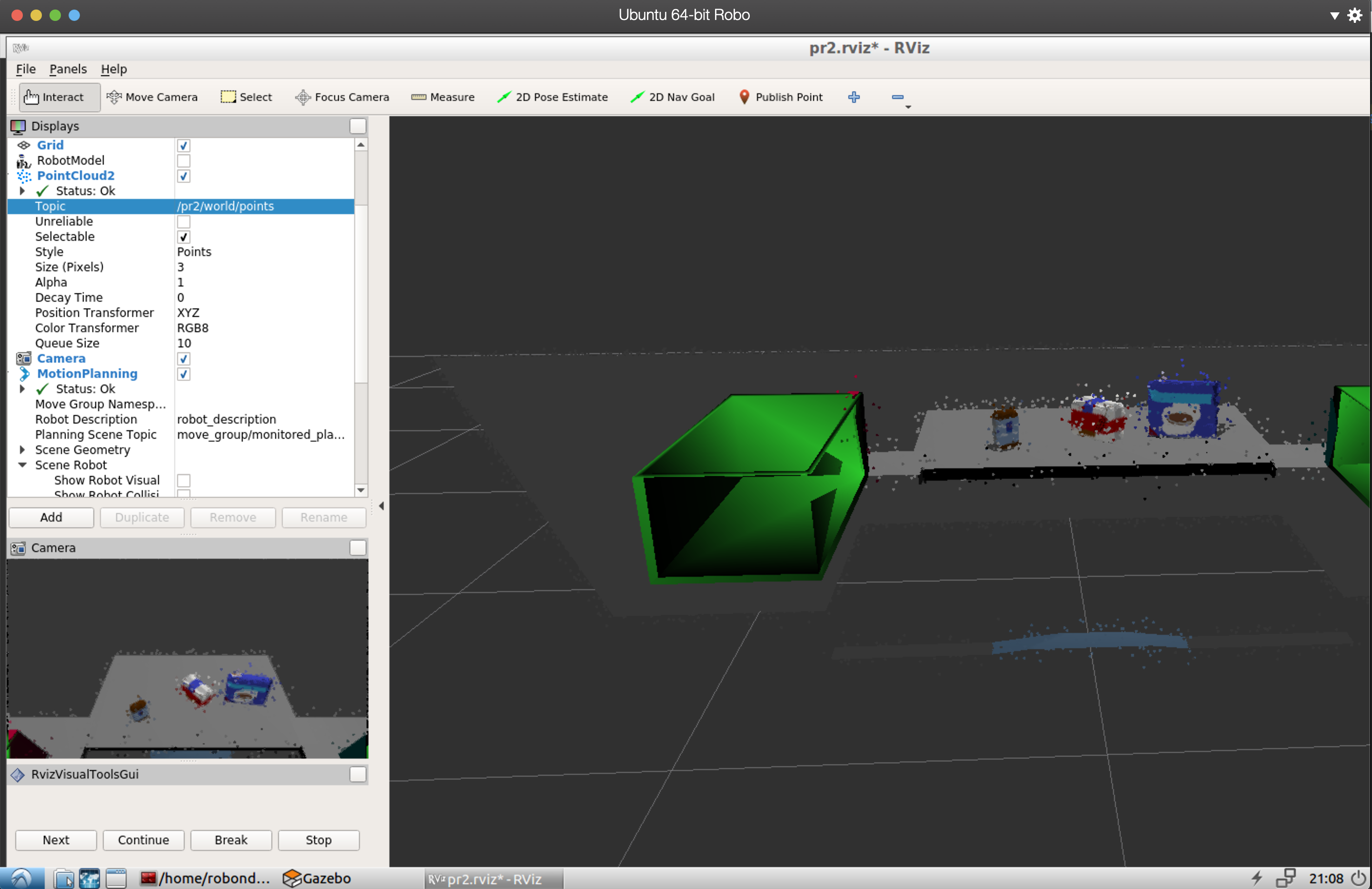

The raw image captured by the RGB-D camera is noisy, so I first applied a Statistical Outlier filter with a threshold scale factor of 0.02 to remove as many noise points as possible. The image below shows the filtered result.

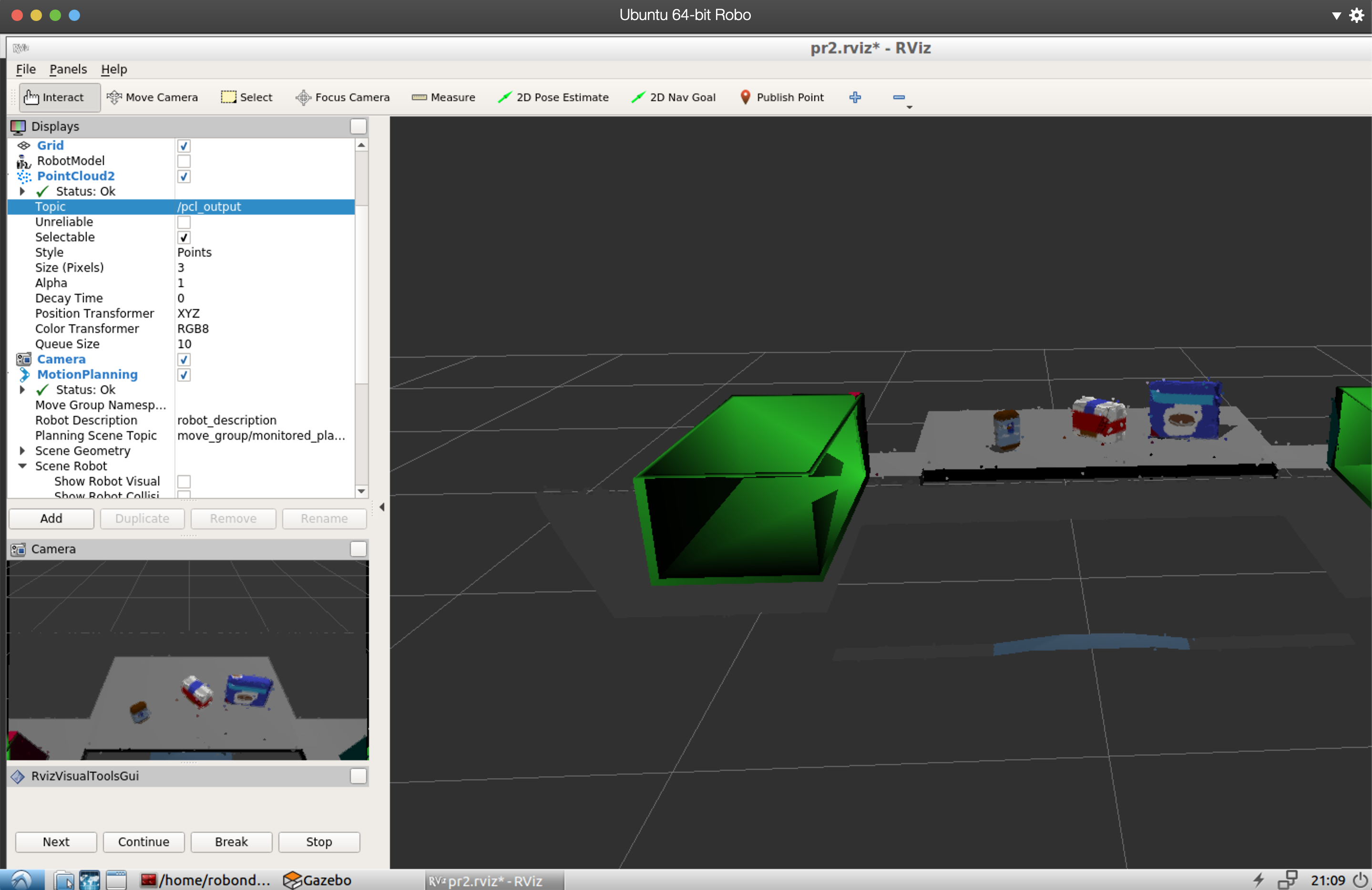

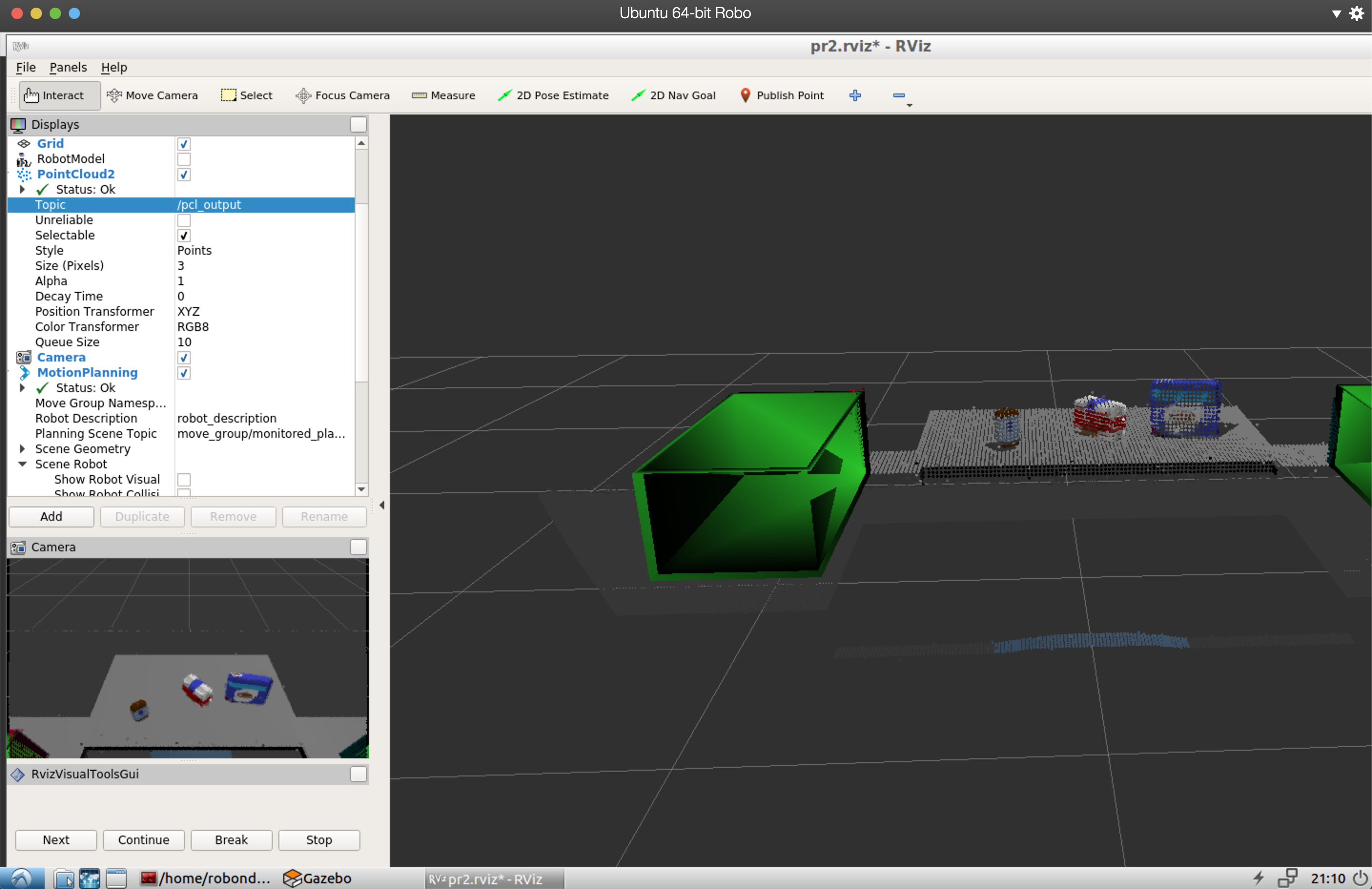
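The statistical outlier filtering described above can be sketched in plain Python. This is a minimal illustration of the idea, not the PCL implementation: the function name `statistical_outlier_filter`, the neighbour count `mean_k=3`, and the toy cloud are assumptions made for the example; only the 0.02 scale factor comes from the text.

```python
import math

def statistical_outlier_filter(points, mean_k=3, std_mul=0.02):
    """Keep points whose mean distance to their mean_k nearest neighbours
    is at most (global mean + std_mul * global standard deviation).

    mean_k is a hypothetical value; std_mul is the 0.02 scale factor.
    """
    mean_dists = []
    for i, p in enumerate(points):
        # Brute-force neighbour search: fine for a sketch, slow for real clouds.
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        nearest = dists[:mean_k]
        mean_dists.append(sum(nearest) / len(nearest))
    mu = sum(mean_dists) / len(mean_dists)
    sigma = math.sqrt(sum((d - mu) ** 2 for d in mean_dists) / len(mean_dists))
    limit = mu + std_mul * sigma
    return [p for p, d in zip(points, mean_dists) if d <= limit]

# Toy cloud: a dense 5 x 5 patch plus one stray point far away.
cloud = [(x * 0.01, y * 0.01, 0.0) for x in range(5) for y in range(5)]
cloud.append((1.0, 1.0, 1.0))
filtered = statistical_outlier_filter(cloud)
```

A small scale factor places the cut-off only slightly above the average neighbour distance, so the filter is aggressive: on a real cloud it also removes some legitimate but sparsely sampled points.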

Next, I applied a Voxel Grid Downsampling filter with a leaf size of 0.01 to produce a more sparsely sampled point cloud.
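As a rough illustration of what voxel grid downsampling does, the sketch below groups points into cubic cells of side `leaf_size` and replaces each group with its centroid. The function name and the toy points are assumptions for the example, and a library filter may handle cell boundaries differently.

```python
from collections import defaultdict

def voxel_grid_downsample(points, leaf_size=0.01):
    """Replace all points that fall in the same cubic voxel by their centroid."""
    voxels = defaultdict(list)
    for p in points:
        # Integer voxel index along each axis (floor division also handles negatives).
        key = tuple(int(c // leaf_size) for c in p)
        voxels[key].append(p)
    return [tuple(sum(axis) / len(pts) for axis in zip(*pts))
            for pts in voxels.values()]

# Two points share a voxel, the third sits in a different one.
cloud = [(0.001, 0.001, 0.001), (0.003, 0.003, 0.003), (0.055, 0.055, 0.055)]
downsampled = voxel_grid_downsample(cloud)
```

A larger leaf size merges more points per voxel, which speeds up later steps at the cost of detail.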

Then I used a Pass Through filter along the z axis to restrict the region of interest to the range [0.607, 1.1]. However, I soon realized that the camera also captured a portion of both drop boxes at the left and right corners, which is of no use for object perception. So I applied another Pass Through filter along the y axis to narrow the region down to only the objects on the table.
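The two pass-through steps can be sketched as a simple range check per axis. The helper name `pass_through` and the toy points are made up for illustration, and the y limits of [-0.5, 0.5] are an assumption since the text only gives the z range.

```python
def pass_through(points, axis, lower, upper):
    """Keep only points whose coordinate on the given axis lies in [lower, upper]."""
    idx = "xyz".index(axis)
    return [p for p in points if lower <= p[idx] <= upper]

cloud = [
    (0.5, 0.0, 0.8),   # on the table, inside both ranges
    (0.5, 0.0, 0.3),   # below the table
    (0.5, 0.9, 0.8),   # off to the side, e.g. part of a drop box
    (0.5, 0.0, 1.2),   # above the region of interest
]
roi = pass_through(cloud, "z", 0.607, 1.1)   # z range from the text
roi = pass_through(roi, "y", -0.5, 0.5)      # hypothetical y range
```

Chaining the filters this way keeps each axis limit independent, so one can be tuned without touching the other.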

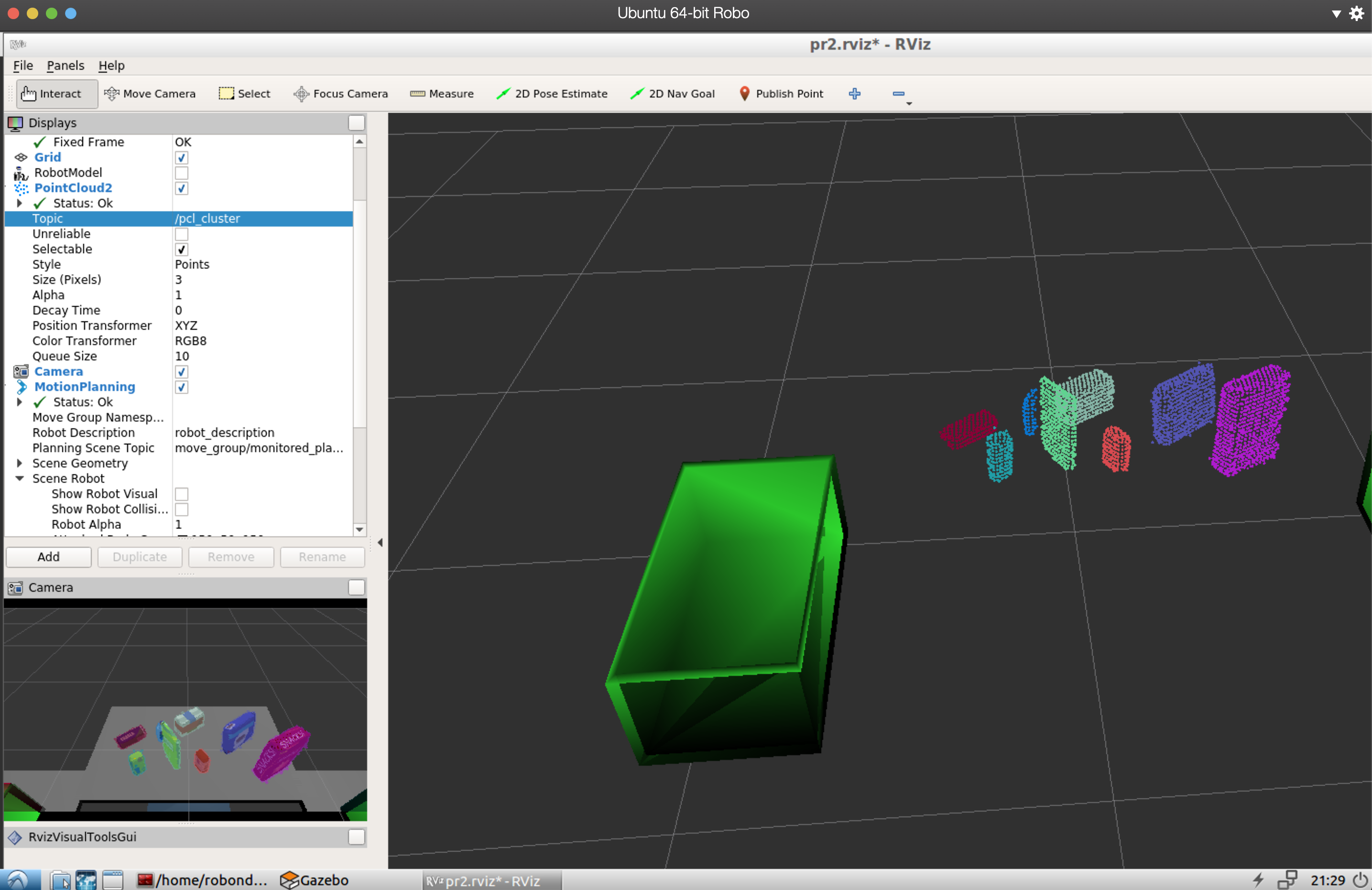

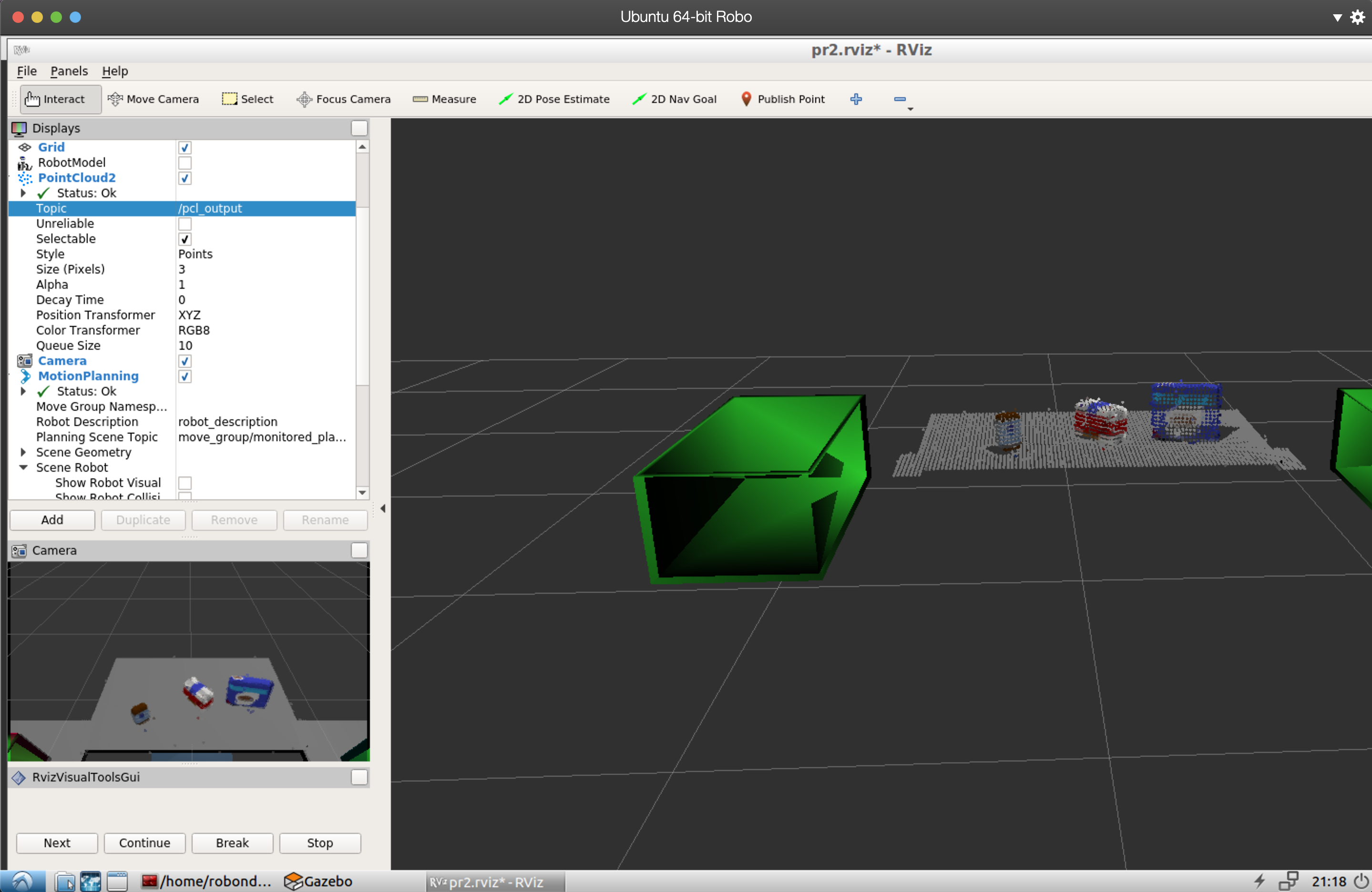

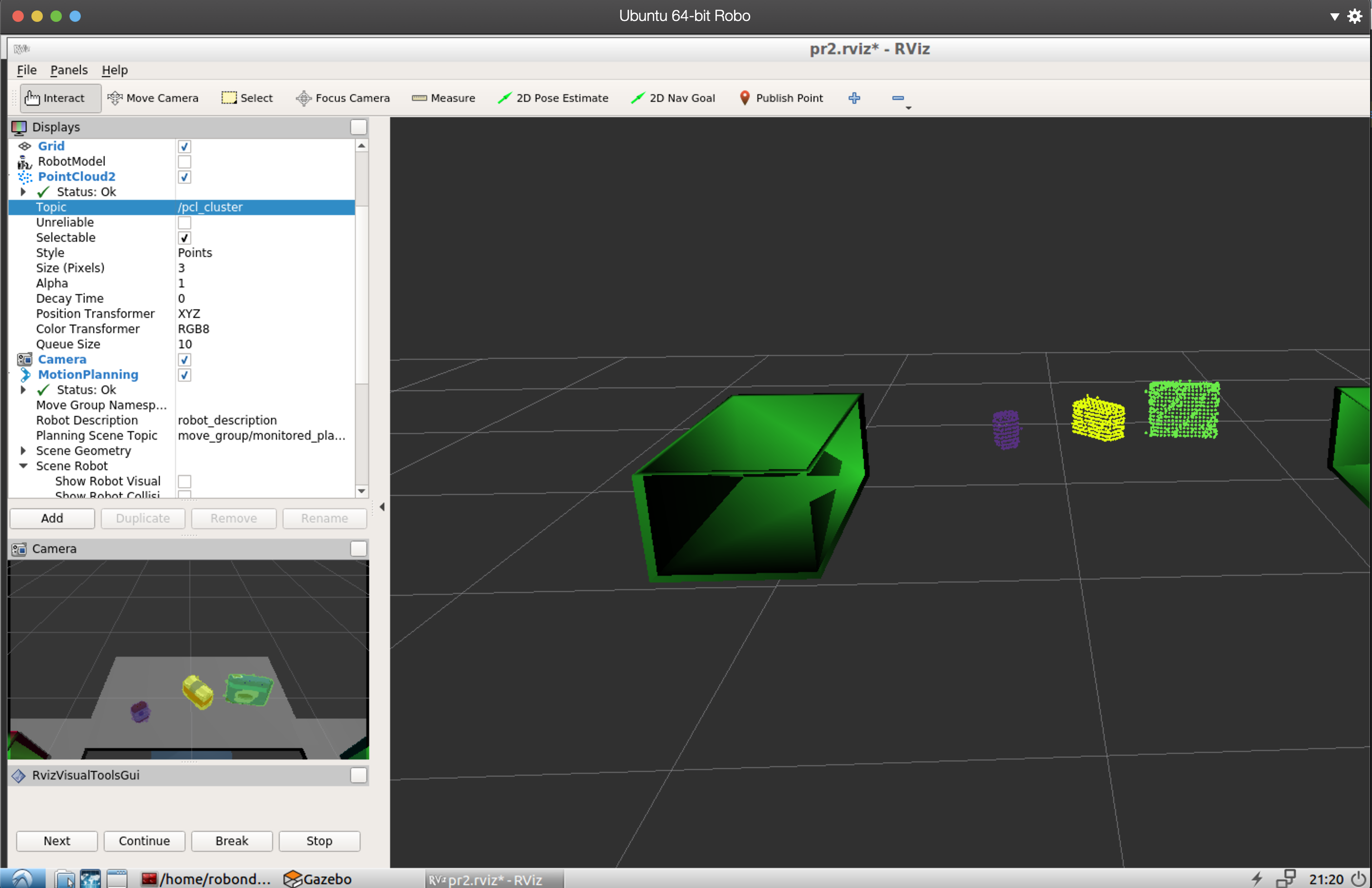

At this step, I set a distance threshold of 0.01 for RANSAC plane segmentation. For Euclidean Clustering, I chose a cluster tolerance of 0.02 with cluster sizes in the range [10, 3000]. The results were as follows:

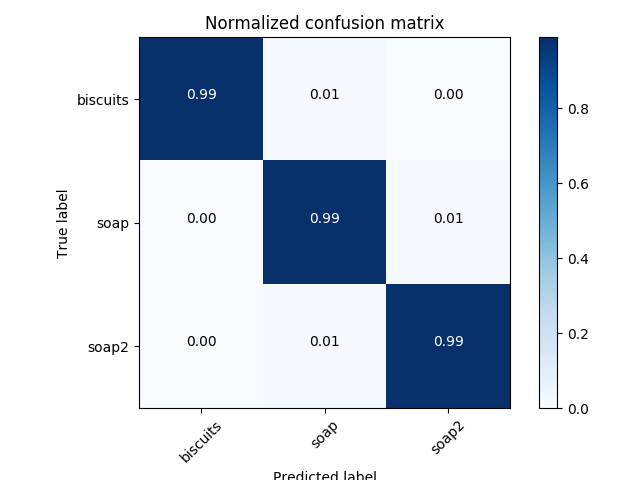

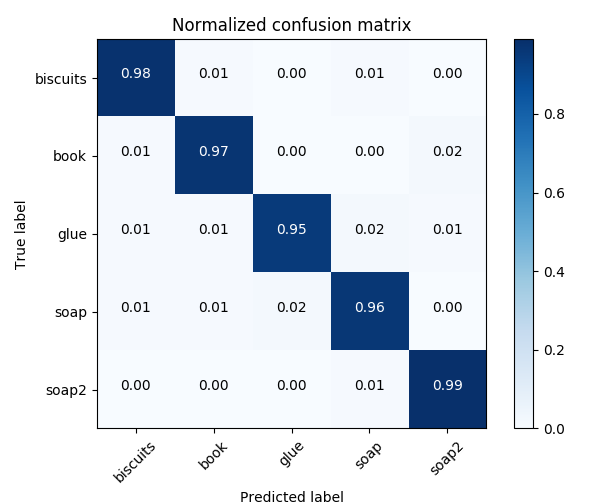

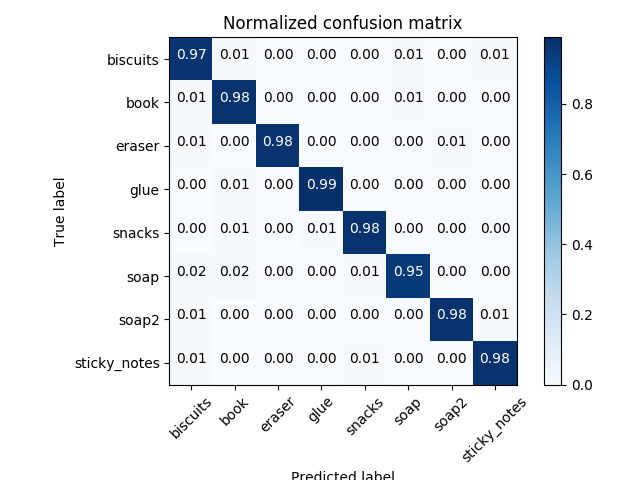
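The segmentation and clustering step above can be sketched in plain Python. This is a simplified illustration under stated assumptions, not the PCL code: the function names, the iteration count, the fixed random seed, and the toy scene are all hypothetical, while the 0.01 distance threshold, the 0.02 tolerance, and the [10, 3000] size range come from the text.

```python
import math
import random

def ransac_plane(points, dist_thresh=0.01, iterations=200, seed=0):
    """Return the indices of the points on the best plane found by RANSAC."""
    rng = random.Random(seed)  # fixed seed (an assumption) for repeatable runs
    best = []
    for _ in range(iterations):
        a, b, c = rng.sample(points, 3)
        u = [b[k] - a[k] for k in range(3)]
        v = [c[k] - a[k] for k in range(3)]
        # Plane normal = cross product of two edges of the sampled triangle.
        n = (u[1] * v[2] - u[2] * v[1],
             u[2] * v[0] - u[0] * v[2],
             u[0] * v[1] - u[1] * v[0])
        norm = math.sqrt(sum(x * x for x in n))
        if norm < 1e-12:
            continue  # the three samples were (nearly) collinear
        inliers = [i for i, p in enumerate(points)
                   if abs(sum(n[k] * (p[k] - a[k]) for k in range(3))) / norm
                   <= dist_thresh]
        if len(inliers) > len(best):
            best = inliers
    return set(best)

def euclidean_clusters(points, tolerance=0.02, min_size=10, max_size=3000):
    """Group points connected by hops of at most `tolerance`; drop groups
    whose size falls outside [min_size, max_size]."""
    unvisited = set(range(len(points)))
    clusters = []
    while unvisited:
        start = unvisited.pop()
        queue, cluster = [start], [start]
        while queue:
            cur = queue.pop()
            near = [j for j in unvisited
                    if math.dist(points[cur], points[j]) <= tolerance]
            unvisited.difference_update(near)
            queue.extend(near)
            cluster.extend(near)
        if min_size <= len(cluster) <= max_size:
            clusters.append(sorted(cluster))
    return clusters

# Toy scene: a flat 10 x 10 "table" at z = 0 and two small objects above it.
table = [(x * 0.05, y * 0.05, 0.0) for x in range(10) for y in range(10)]
obj_a = [(0.10 + 0.01 * i, 0.10, 0.10) for i in range(12)]
obj_b = [(0.30 + 0.01 * i, 0.30, 0.10) for i in range(15)]
cloud = table + obj_a + obj_b

table_idx = ransac_plane(cloud)
objects = [p for i, p in enumerate(cloud) if i not in table_idx]
clusters = euclidean_clusters(objects)
```

The plane inliers correspond to the table and the remaining points to the objects; clustering then splits those remaining points into one group per object. The neighbour search here is brute force, whereas a real pipeline would typically use a k-d tree.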

The trained SVM models produced the following normalized confusion matrices for the three worlds, respectively:

1. For all three tabletop setups (test*.world), perform object recognition, then read in the respective pick list (pick_list_*.yaml). Next, construct the messages that would comprise a valid PickPlace request and output them to .yaml format.

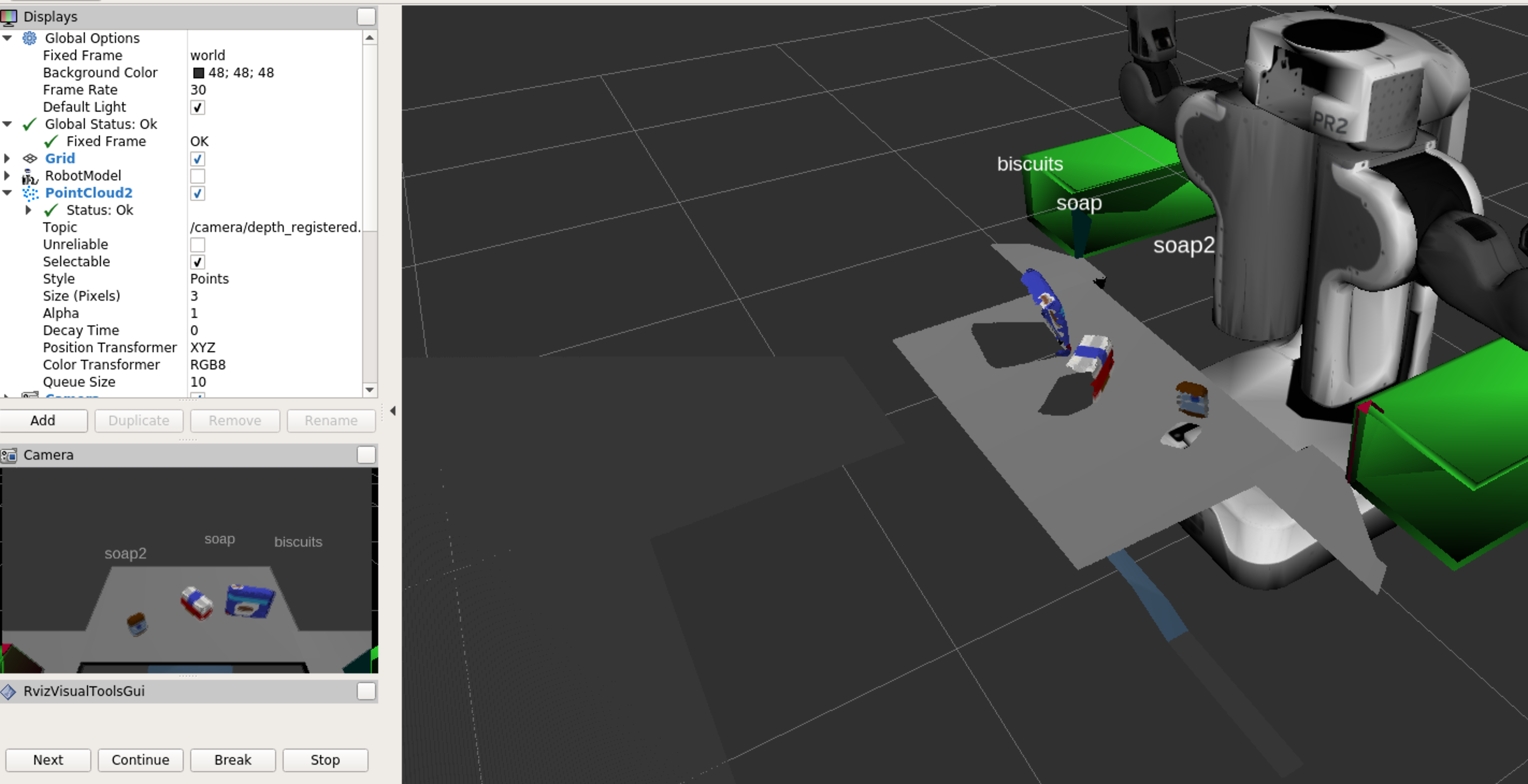

Here's output_1.yaml of test world 1 and the corresponding screen snapshot:

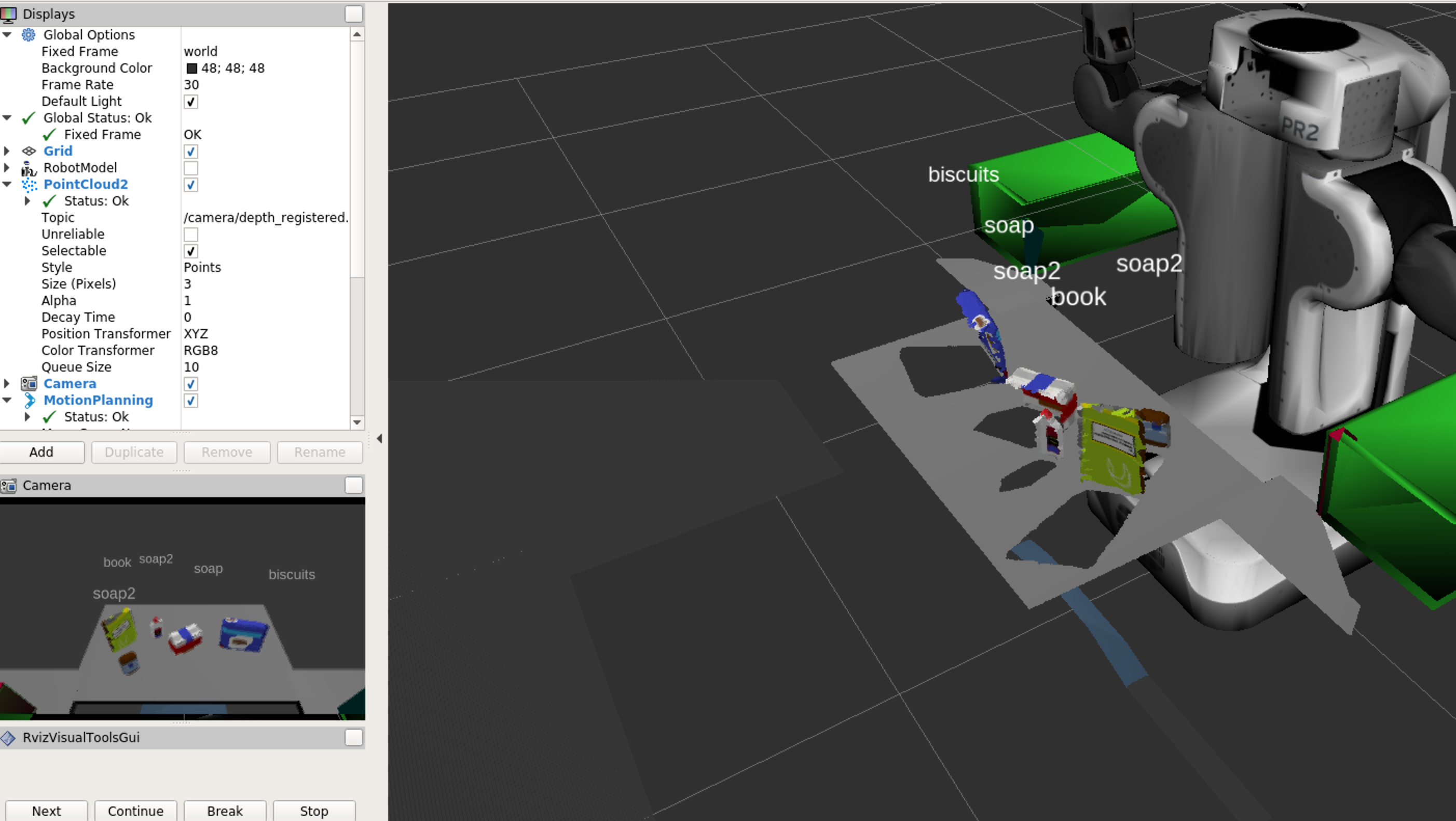

output_2.yaml of test world 2 and the corresponding screen snapshot:

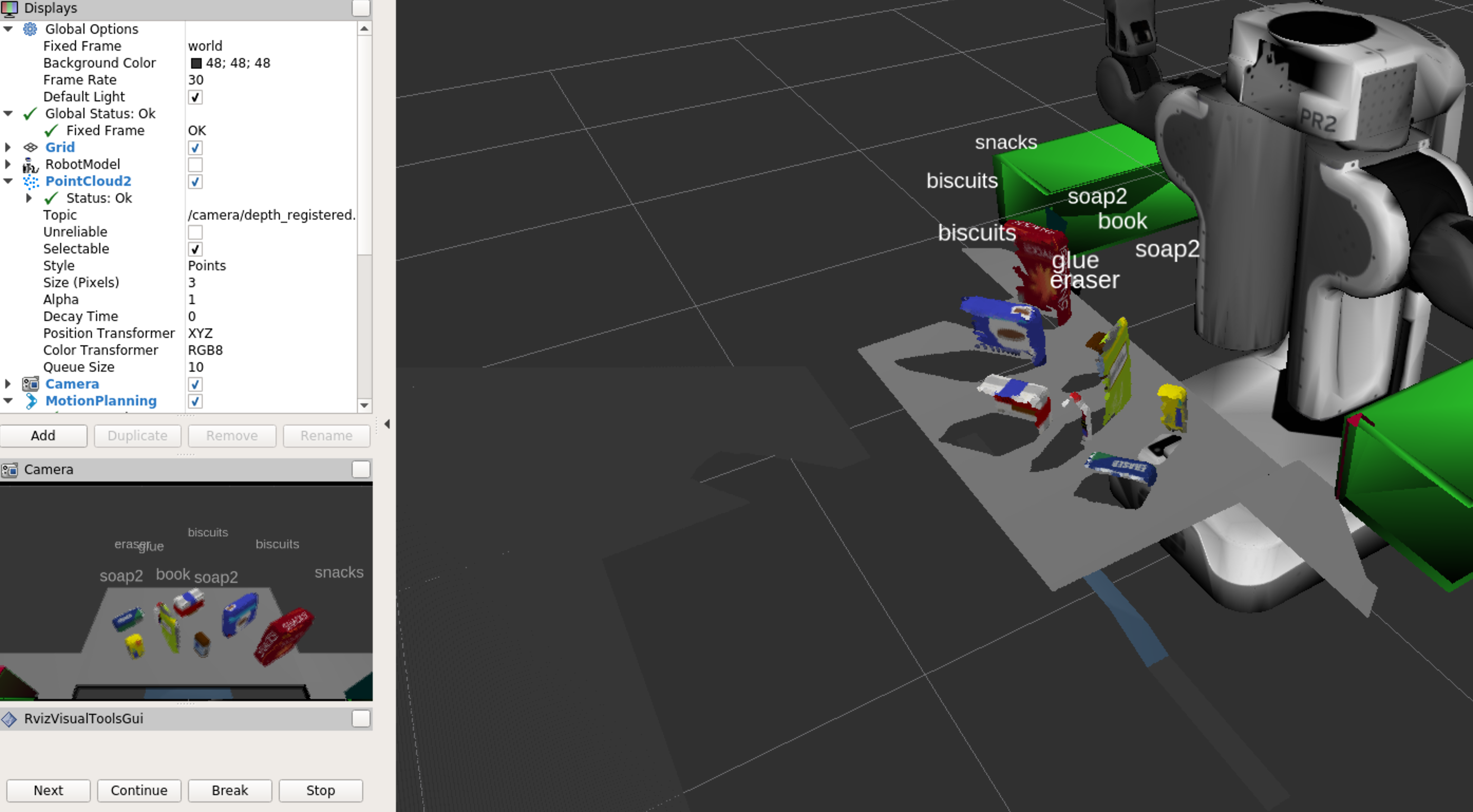

output_3.yaml of test world 3 and screen snapshot,

For all three test worlds, I generated 100 point cloud samples per object to train the linear SVM model. The average accuracy, especially for worlds 2 and 3, is around 96%, and as 'output_1/2/3.yaml' show, the models barely meet the passing requirement. In the future, I'd like to tune the SVM parameters, or try other approaches, to achieve a higher prediction score.
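To make the relationship between a normalized confusion matrix and the overall accuracy concrete, here is a small sketch. The counts are made up for illustration (three classes with 100 samples each, matching the sample count above) and are not the results of my trained models; the function names are likewise hypothetical.

```python
def normalize_confusion(matrix):
    """Divide each row by its sum, so entry [i][j] is the fraction of
    true class i that was predicted as class j."""
    normalized = []
    for row in matrix:
        total = sum(row)
        normalized.append([c / total if total else 0.0 for c in row])
    return normalized

def overall_accuracy(matrix):
    """Fraction of all samples that lie on the diagonal (correct predictions)."""
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    total = sum(sum(row) for row in matrix)
    return correct / total

# Illustrative counts only: rows are true classes, columns are predictions.
counts = [
    [97, 3, 0],
    [2, 95, 3],
    [1, 3, 96],
]
normalized = normalize_confusion(counts)
accuracy = overall_accuracy(counts)
```

With equal sample counts per class, the overall accuracy equals the mean of the diagonal of the normalized matrix, which is why the diagonal is the first thing to check when tuning the classifier.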

I would also like to try the challenge world later, and to complete the collision map so that the PR2 can perform pick and place.