Training & Speed Evaluation Tools for gymnax

In this repository we provide training pipelines for gymnax agents in JAX. You can also use the utilities to benchmark the speed of step transitions and to visualize trained agent checkpoints. Install all required dependencies via:

pip install -r requirements.txt

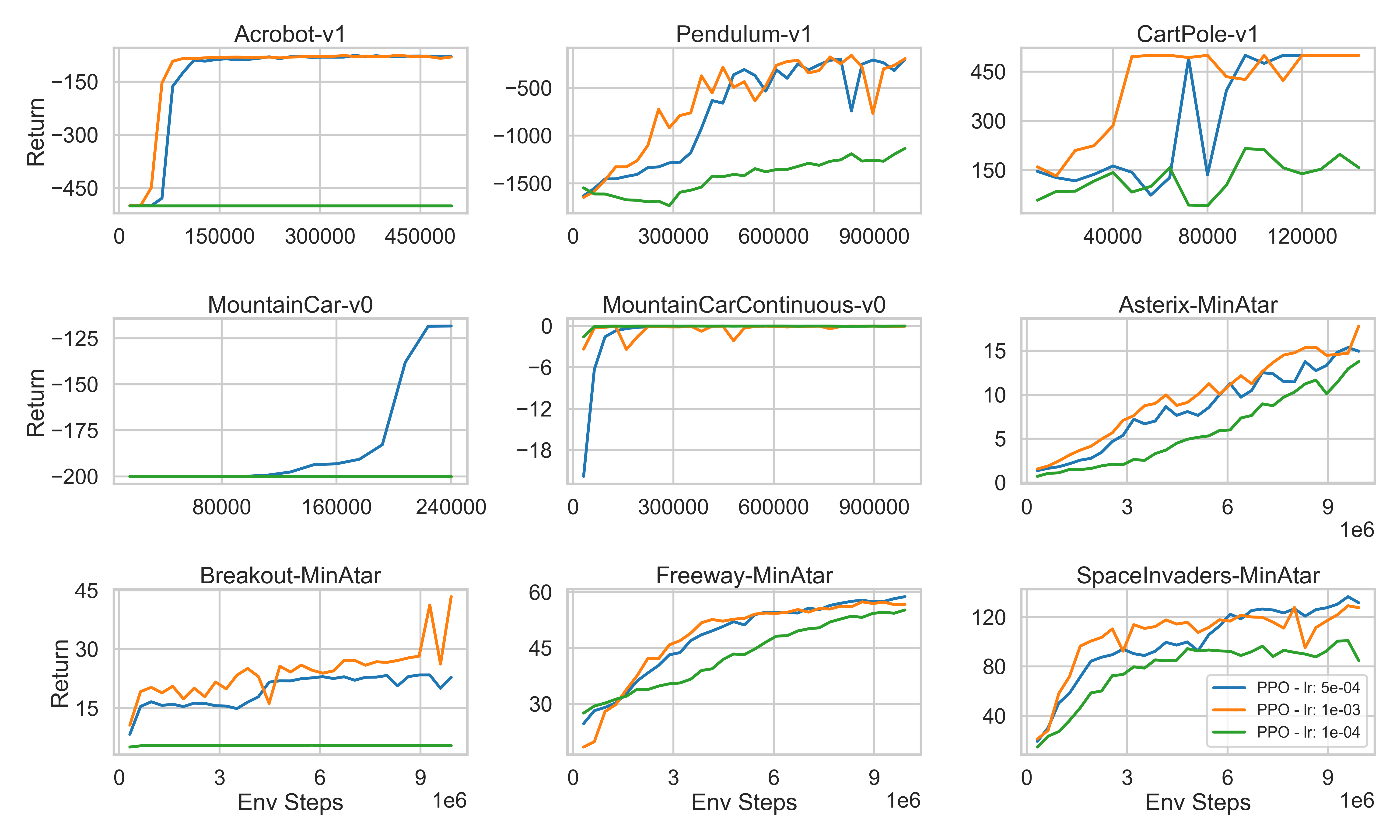

Accelerated Training of Agents with gymnax

We provide training routines and the corresponding checkpoints for both Evolution Strategies (mostly OpenES, using evosax) and PPO (using an adaptation of @bmazoure's implementation). You can train the agents as follows:

python train.py -config agents/<env_name>/ppo.yaml

python train.py -config agents/<env_name>/es.yaml

This will store checkpoints and training logs as a pkl file in the agent's directory (e.g. agents/<env_name>/ppo.pkl for a PPO run). Collect all training runs sequentially via:

bash exec.sh train
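For readers unfamiliar with OpenES, the core update can be sketched in a few lines of pure Python. This is a minimal sketch under stated assumptions (antithetic Gaussian perturbations, centered-rank fitness shaping, plain gradient ascent instead of an adaptive optimizer, and a toy objective). It does not use evosax and does not reproduce the settings in the `es.yaml` files; the names `centered_ranks`, `open_es` and `neg_sphere` are hypothetical.

```python
import random
from typing import Callable, List


def centered_ranks(fitness: List[float]) -> List[float]:
    """Fitness shaping: replace raw fitness by ranks scaled to [-0.5, 0.5]."""
    order = sorted(range(len(fitness)), key=lambda i: fitness[i])
    ranks = [0.0] * len(fitness)
    for rank, idx in enumerate(order):
        ranks[idx] = rank / (len(fitness) - 1) - 0.5
    return ranks


def open_es(
    fitness_fn: Callable[[List[float]], float],
    theta: List[float],
    pop_pairs: int = 10,
    sigma: float = 0.1,
    lr: float = 0.05,
    generations: int = 200,
    seed: int = 0,
) -> List[float]:
    """Maximize fitness_fn with an OpenES-style search-gradient ascent (plain SGD)."""
    rng = random.Random(seed)
    dim = len(theta)
    theta = list(theta)
    for _ in range(generations):
        # Antithetic sampling: every noise vector is evaluated as +eps and -eps.
        half = [[rng.gauss(0.0, 1.0) for _ in range(dim)] for _ in range(pop_pairs)]
        noise = half + [[-e for e in eps] for eps in half]
        fitness = [
            fitness_fn([t + sigma * e for t, e in zip(theta, eps)]) for eps in noise
        ]
        shaped = centered_ranks(fitness)
        # Search-gradient estimate: (1 / (N * sigma)) * sum_i shaped_i * eps_i
        n = len(noise)
        grad = [
            sum(shaped[i] * noise[i][d] for i in range(n)) / (n * sigma)
            for d in range(dim)
        ]
        # Gradient ascent step (hypothetical stand-in for an optimizer like Adam).
        theta = [t + lr * g for t, g in zip(theta, grad)]
    return theta


def neg_sphere(x: List[float]) -> float:
    """Toy objective with its maximum (0.0) at the origin."""
    return -sum(v * v for v in x)


if __name__ == "__main__":
    best = open_es(neg_sphere, [3.0, -2.0])
    print(best, neg_sphere(best))
```

In the actual training setup the parameter vector would presumably be the flattened policy-network weights and the fitness the episode return collected from gymnax rollouts; here a toy quadratic stands in for both.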

Visualization of gymnax Environment Rollouts

You can also generate GIF visualizations of the trained agent's behaviour as follows:

python visualize.py -env <env_name> -train <{es/ppo}>

Collect all visualizations sequentially via:

bash exec.sh visualize

Note that we do not support visualizations for most behavior suite (bsuite) environments, since they do not lend themselves to visual display (e.g. Markov reward processes).


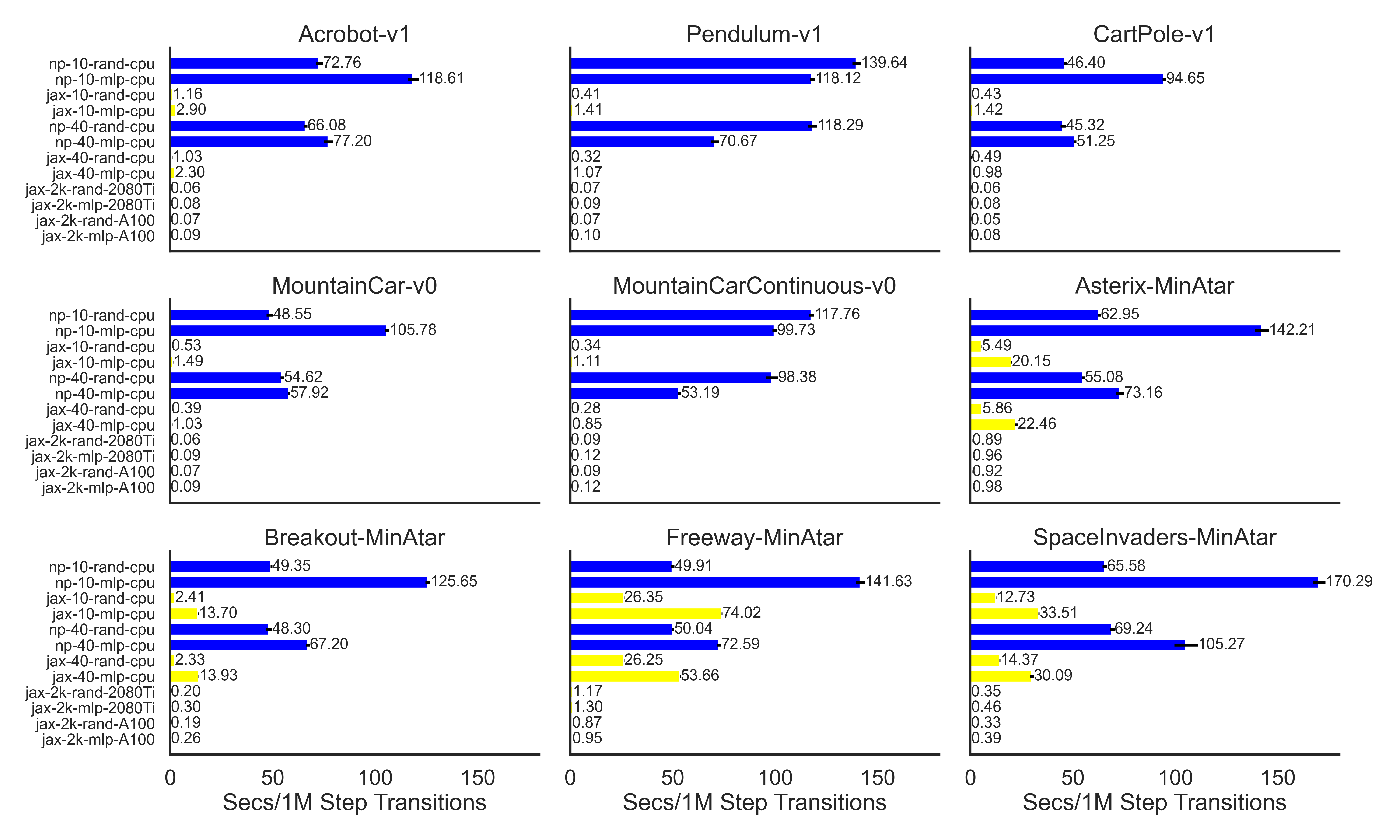

Speed Up Evaluation for gymnax Environments

Finally, we provide simple tools for benchmarking the speed of step transitions on different hardware (CPU, GPU, TPU). For a specific environment the speed estimates can be obtained as follows:

python speed.py -env <env_name> --use_gpu --use_network --num_envs 10

Collect all speed estimates sequentially via:

bash exec.sh speed
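The measurement idea (time a batch of steps, extrapolate to 1 million transitions, average over independent runs) can be sketched without any environment library. This is a minimal sketch, not the implementation of `speed.py`: it assumes that "1 million steps" counts transitions summed over all parallel environments, uses a random policy, and replaces the real environment with a hypothetical `toy_step` random walk. The names `toy_step` and `seconds_per_million_steps` are hypothetical.

```python
import random
import statistics
import time
from typing import Callable, List

# Hypothetical batched step signature: (states, actions) -> new states.
StepFn = Callable[[List[float], List[int]], List[float]]


def toy_step(states: List[float], actions: List[int]) -> List[float]:
    """Stand-in for a vectorized environment step: a 1D random walk per env."""
    return [s + (1.0 if a == 1 else -1.0) for s, a in zip(states, actions)]


def seconds_per_million_steps(
    step_fn: StepFn,
    num_envs: int,
    num_calls: int = 1_000,
    num_runs: int = 10,
    seed: int = 0,
) -> float:
    """Time batched steps under a random policy, extrapolate to 1M transitions,
    and return the mean estimate over `num_runs` independent runs."""
    rng = random.Random(seed)
    estimates = []
    for _ in range(num_runs):
        states = [0.0] * num_envs
        start = time.perf_counter()
        for _ in range(num_calls):
            # Random policy: sample one binary action per environment.
            actions = [rng.randrange(2) for _ in range(num_envs)]
            states = step_fn(states, actions)
        elapsed = time.perf_counter() - start
        # Each call advances every environment by one transition.
        transitions = num_calls * num_envs
        estimates.append(elapsed / transitions * 1_000_000)
    return statistics.mean(estimates)


if __name__ == "__main__":
    est = seconds_per_million_steps(toy_step, num_envs=10)
    print(f"{est:.2f} s per 1M steps")
```

When timing JAX code instead, it is common to run one warm-up call so that compilation is excluded from the measurement, and to call `block_until_ready()` on the outputs, since JAX dispatches work asynchronously.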

For each environment we estimate the number of seconds required to execute 1 million steps on various hardware, for both random (R) and neural network (N) policies. We report the mean over 10 independent runs:

| Environment Name | np CPU 10 Envs | jax CPU 10 Envs | np CPU 40 Envs | jax CPU 40 Envs | jax 2080Ti 2k Envs | jax A100 2k Envs |
|---|---|---|---|---|---|---|
| Acrobot-v1 | R: 72 N: 118 | R: 1.16 N: 2.90 | R: 66 N: 77.2 | R: 1.03 N: 2.3 | R: 0.06 N: 0.08 | R: 0.07 N: 0.09 |
| Pendulum-v1 | R: 139 N: 118 | R: 0.41 N: 1.41 | R: 118 N: 70 | R: 0.32 N: 1.07 | R: 0.07 N: 0.09 | R: 0.07 N: 0.10 |
| CartPole-v1 | R: 46 N: 94 | R: 0.43 N: 1.42 | R: 45 N: 51 | R: 0.49 N: 0.98 | R: 0.06 N: 0.08 | R: 0.05 N: 0.08 |
| MountainCar-v0 | R: 48 N: 105 | R: 0.53 N: 1.49 | R: 54 N: 57 | R: 0.39 N: 1.03 | R: 0.06 N: 0.09 | R: 0.07 N: 0.09 |
| MountainCarContinuous-v0 | R: 117 N: 99 | R: 0.34 N: 1.11 | R: 98 N: 53 | R: 0.28 N: 0.85 | R: 0.09 N: 0.12 | R: 0.09 N: 0.12 |
| Asterix-MinAtar | R: 62.95 N: 142.21 | R: 5.49 N: 20.15 | R: 55.08 N: 73.16 | R: 5.86 N: 22.46 | R: 0.89 N: 0.96 | R: 0.92 N: 0.98 |
| Breakout-MinAtar | R: 49.35 N: 125.65 | R: 2.41 N: 13.70 | R: 48.30 N: 67.20 | R: 2.33 N: 13.93 | R: 0.20 N: 0.30 | R: 0.19 N: 0.26 |
| Freeway-MinAtar | R: 49.91 N: 141.63 | R: 26.35 N: 74.02 | R: 50.04 N: 72.59 | R: 26.25 N: 53.66 | R: 1.17 N: 1.30 | R: 0.87 N: 0.95 |
| SpaceInvaders-MinAtar | R: 65.58 N: 170.29 | R: 12.73 N: 33.51 | R: 69.24 N: 105.27 | R: 14.37 N: 30.09 | R: 0.35 N: 0.46 | R: 0.33 N: 0.39 |
| Catch-bsuite | - | R: 0.99 N: 2.37 | - | R: 0.87 N: 1.82 | R: 0.17 N: 0.21 | R: 0.15 N: 0.21 |
| DeepSea-bsuite | - | R: 0.84 N: 1.97 | - | R: 1.04 N: 1.61 | R: 0.27 N: 0.33 | R: 0.22 N: 0.36 |
| MemoryChain-bsuite | - | R: 0.43 N: 1.46 | - | R: 0.37 N: 1.11 | R: 0.14 N: 0.21 | R: 0.13 N: 0.19 |
| UmbrellaChain-bsuite | - | R: 0.64 N: 1.82 | - | R: 0.48 N: 1.28 | R: 0.08 N: 0.11 | R: 0.08 N: 0.12 |
| DiscountingChain-bsuite | - | R: 0.33 N: 1.32 | - | R: 0.21 N: 0.88 | R: 0.06 N: 0.07 | R: 0.06 N: 0.08 |
| FourRooms-misc | - | R: 3.12 N: 5.43 | - | R: 2.81 N: 4.6 | R: 0.09 N: 0.10 | R: 0.07 N: 0.10 |
| MetaMaze-misc | - | R: 0.80 N: 2.24 | - | R: 0.76 N: 1.69 | R: 0.09 N: 0.11 | R: 0.09 N: 0.12 |
| PointRobot-misc | - | R: 0.84 N: 2.04 | - | R: 0.65 N: 1.31 | R: 0.08 N: 0.09 | R: 0.08 N: 0.10 |
| BernoulliBandit-misc | - | R: 0.54 N: 1.61 | - | R: 0.42 N: 0.97 | R: 0.07 N: 0.10 | R: 0.08 N: 0.11 |
| GaussianBandit-misc | - | R: 0.56 N: 1.49 | - | R: 0.58 N: 0.89 | R: 0.05 N: 0.08 | R: 0.07 N: 0.09 |
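To read the table as speedups, divide a baseline timing by each candidate timing. The short sketch below does this for the CartPole-v1 random-policy (R) entries copied from the table above; the helper name `speedups` is hypothetical.

```python
from typing import Dict

# Seconds per 1M steps for CartPole-v1 under the random policy (R),
# copied from the speed table.
CARTPOLE_RANDOM: Dict[str, float] = {
    "np CPU 10 Envs": 46.0,
    "jax CPU 10 Envs": 0.43,
    "jax 2080Ti 2k Envs": 0.06,
    "jax A100 2k Envs": 0.05,
}


def speedups(timings: Dict[str, float], baseline: str) -> Dict[str, float]:
    """Return how many times faster each setup is than `baseline`."""
    base = timings[baseline]
    return {name: base / secs for name, secs in timings.items()}


if __name__ == "__main__":
    for name, factor in speedups(CARTPOLE_RANDOM, "np CPU 10 Envs").items():
        print(f"{name}: {factor:.0f}x")
```

This prints roughly 107x for jax on CPU, 767x for the 2080Ti and 920x for the A100 relative to numpy on CPU with 10 environments. Keep in mind that the GPU columns also use a much larger batch (2k vs. 10 environments), so these ratios mix hardware and batch-size effects rather than isolating the hardware.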

TPU v3-8 comparisons will follow once I (or you?) find the time and resources 🤗