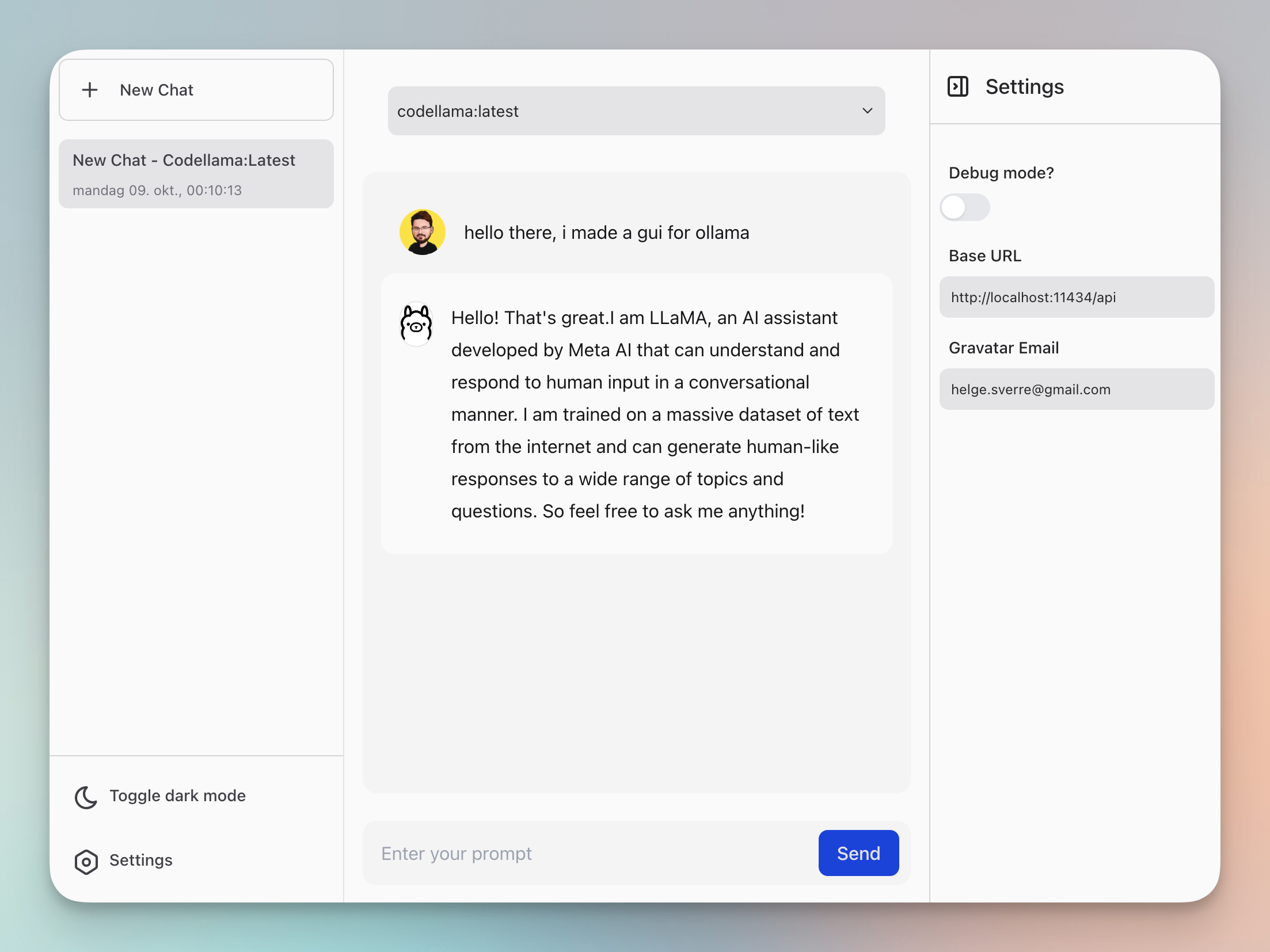

Ollama GUI is a web interface for ollama.ai, a tool that enables running Large Language Models (LLMs) on your local machine.

- Download and install the Ollama CLI.

- Download and install Yarn and Node.js.

ollama pull <model-name>

ollama serve

- Clone the repository and start the development server.

git clone https://github.com/HelgeSverre/ollama-gui.git

cd ollama-gui

yarn install

yarn dev

Or use the hosted web version by running Ollama with the OLLAMA_ORIGINS environment variable set to the hosted URL (see the Ollama docs):

OLLAMA_ORIGINS=https://ollama-gui.vercel.app ollama serve

To run Ollama GUI using Docker, follow these steps:

- Make sure you have Docker (or OrbStack) installed on your system.

- Clone the repository:

  git clone https://github.com/HelgeSverre/ollama-gui.git

  cd ollama-gui

- Build the Docker image:

  docker build -t ollama-gui .

- Run the Docker container:

  docker run -p 8080:8080 ollama-gui

- Access the application by opening a web browser and navigating to http://localhost:8080.

Note: Make sure that the Ollama CLI is running on your host machine, as the Docker container for Ollama GUI needs to communicate with it.
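To confirm that Ollama is actually reachable before opening the GUI, a small check along these lines may help. This is a minimal sketch, not part of Ollama GUI: `isOllamaReachable` and `FetchLike` are hypothetical names, and it assumes Ollama is listening on its default address, `http://localhost:11434`, and answers on the `/api/tags` endpoint (which lists locally installed models).

```typescript
// Assumption: Ollama is listening on its default address.
const DEFAULT_OLLAMA_URL = "http://localhost:11434";

// Minimal shape of the fetch function we rely on, so a stub can be injected.
type FetchLike = (url: string) => Promise<{ ok: boolean }>;

// Hypothetical helper: resolves to true when the Ollama API answers with a
// successful status, and to false on an error status or a network failure.
async function isOllamaReachable(
  baseUrl: string = DEFAULT_OLLAMA_URL,
  fetchFn: FetchLike = (url) => fetch(url),
): Promise<boolean> {
  try {
    const res = await fetchFn(`${baseUrl}/api/tags`);
    return res.ok;
  } catch {
    // Connection refused, DNS failure, blocked origin, and similar errors.
    return false;
  }
}
```

Calling `isOllamaReachable()` with no arguments uses the global `fetch` (available in browsers and in Node.js 18 or later). If it resolves to `false`, check that `ollama serve` is running on the host.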

For convenience and copy-pastability, here is a table of interesting models you might want to try out.

For a complete list of models Ollama supports, go to ollama.ai/library.

- Properly format newlines in the chat message (PHP-land has nl2br; basically want the same thing)

- Store chat history using IndexedDB locally

- Clean up the code; I made a mess of it for the sake of speed and getting something out the door.

- Add markdown parsing lib

- Allow browsing and installation of available models (library)

- Ensure mobile responsiveness (non-prioritized use-case atm.)

- Add file uploads with OCR and stuff.
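For the newline item in the list above, one possible approach is a small helper in the spirit of PHP's `nl2br`. This is only a sketch, not the project's actual implementation: `escapeHtml` and `nl2br` are hypothetical names, and it assumes the chat message is rendered as raw HTML, which is why the text is escaped first.

```typescript
// Hypothetical helper: escape characters that have special meaning in HTML,
// so message text cannot inject markup when rendered as raw HTML.
function escapeHtml(text: string): string {
  return text
    .replace(/&/g, "&amp;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;")
    .replace(/"/g, "&quot;")
    .replace(/'/g, "&#39;");
}

// Hypothetical helper, named after PHP's nl2br: escapes the text, then turns
// every line break (\r\n, \r, or \n) into a <br> tag followed by "\n".
function nl2br(text: string): string {
  return escapeHtml(text).replace(/\r\n|\r|\n/g, "<br>\n");
}
```

If the message is rendered as plain text rather than HTML, the CSS rule `white-space: pre-wrap` on the message element preserves line breaks without any string processing.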

- Ollama.ai - CLI tool for models.

- LangUI

- Vue.js

- Vite

- Tailwind CSS

- VueUse

- @tabler/icons-vue

Licensed under the MIT License. See the LICENSE.md file for details.