Note: This project has not been updated in a while. If you see something that could be improved as you work through the code, even slightly, feel free to send in a PR.

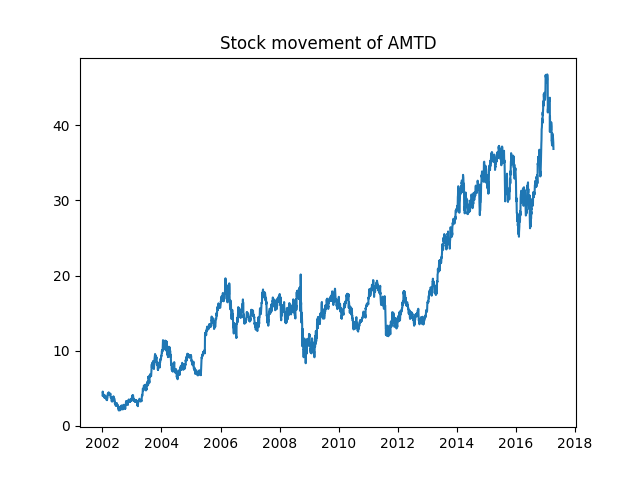

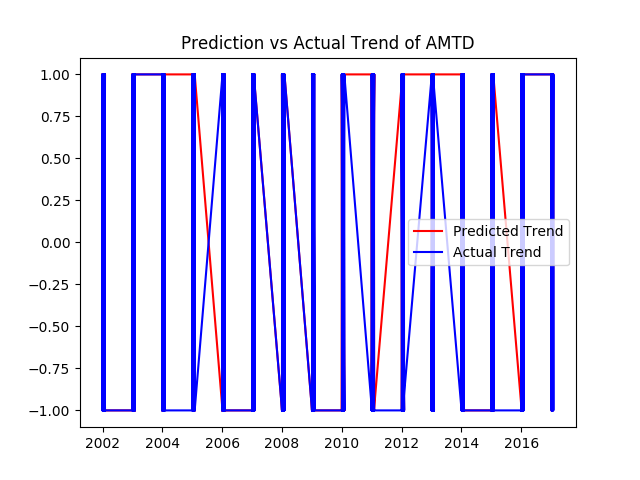

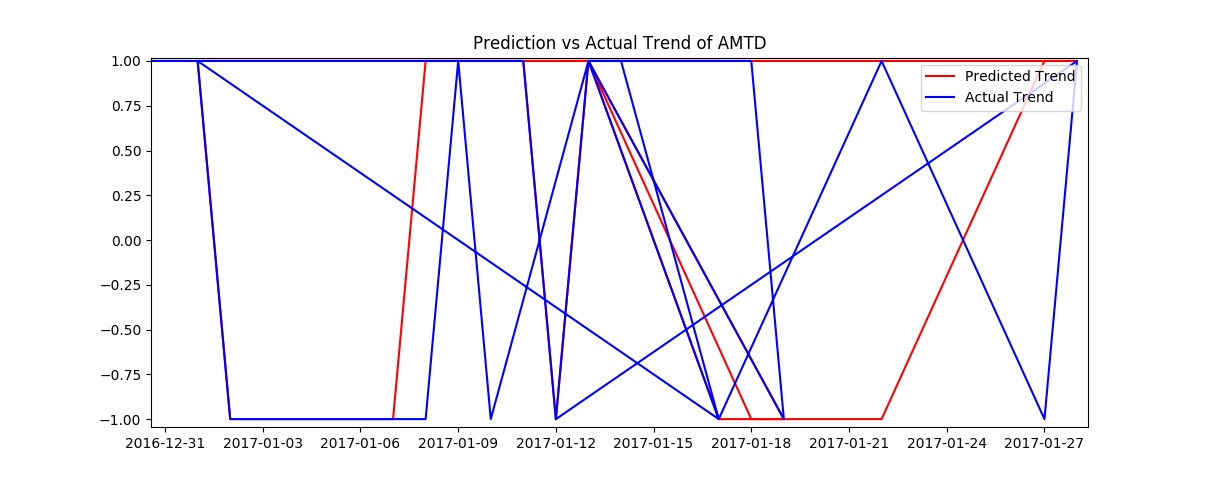

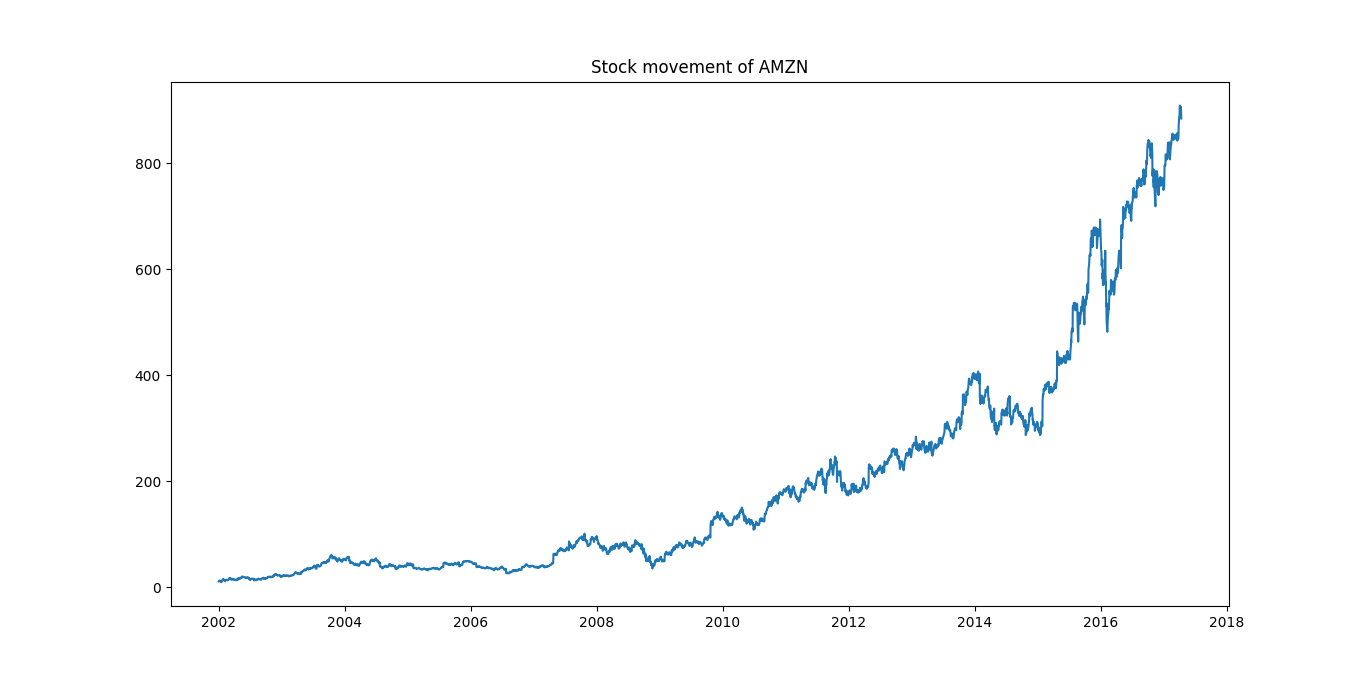

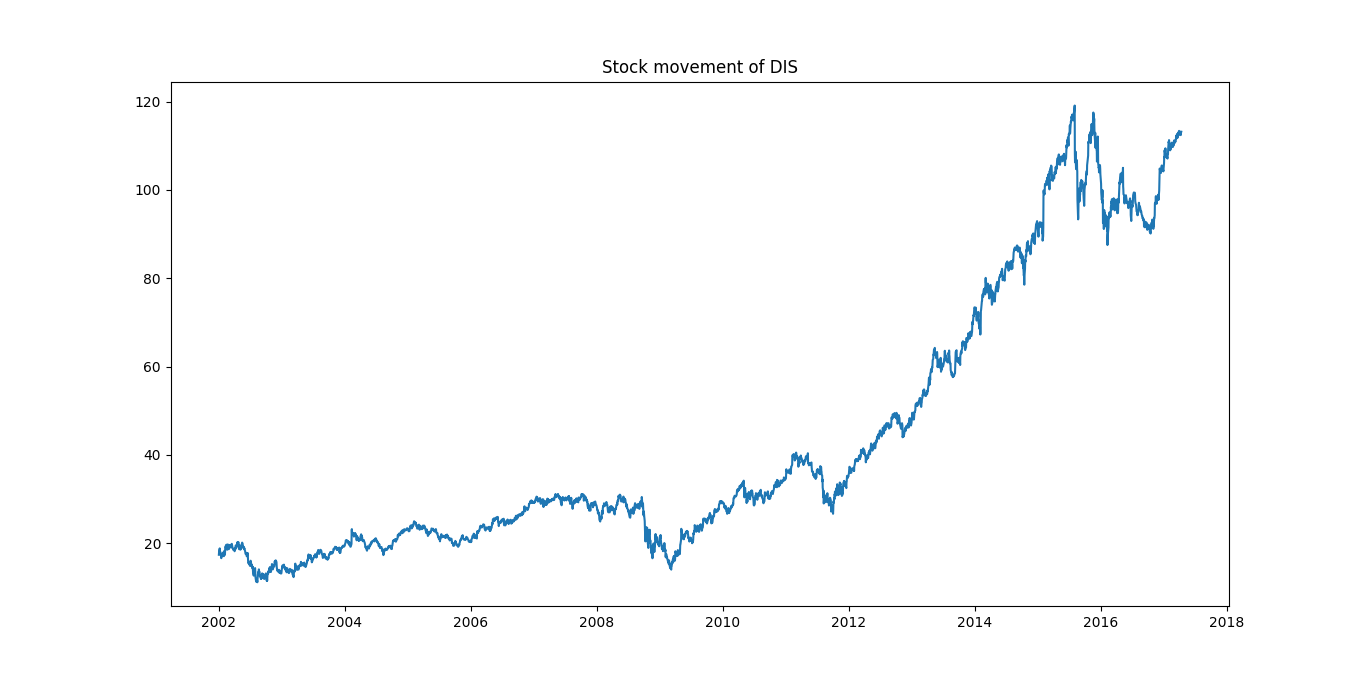

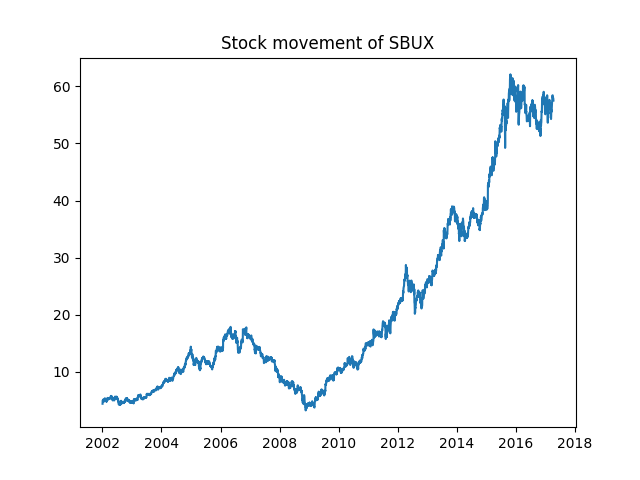

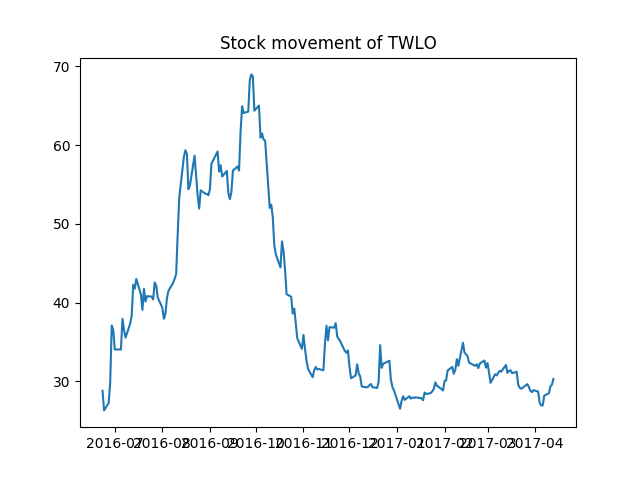

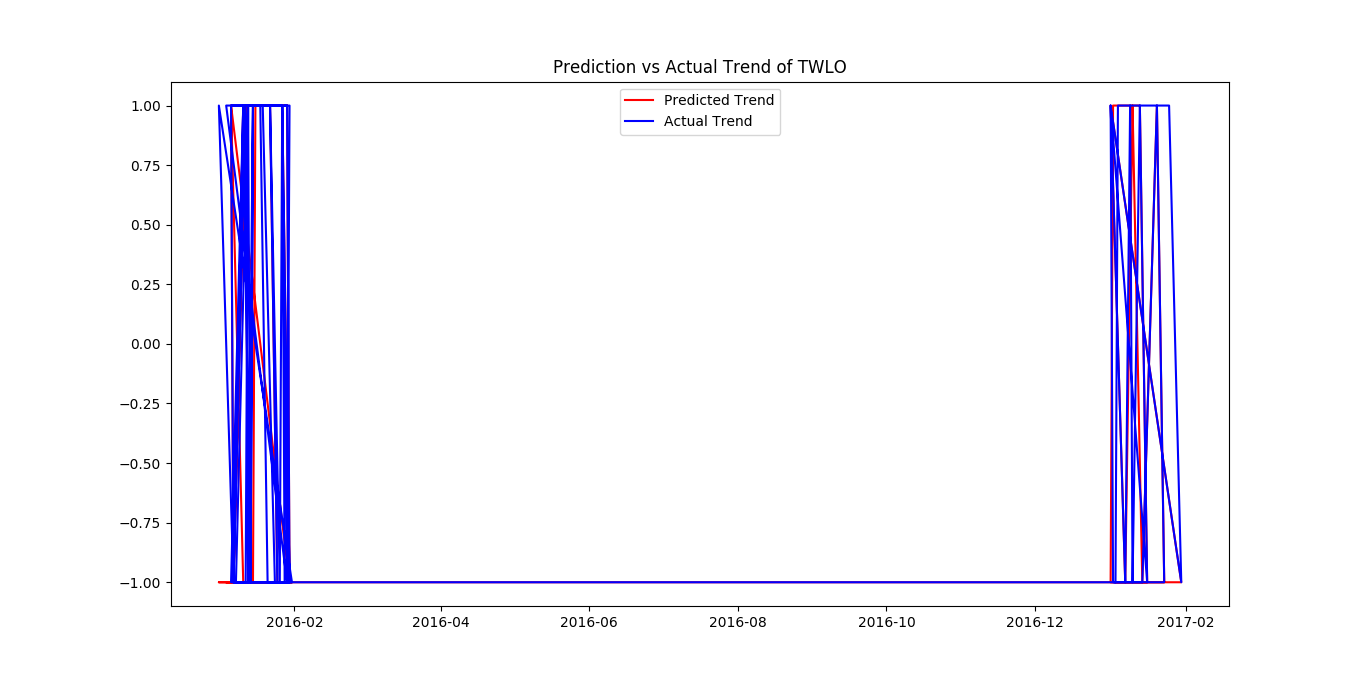

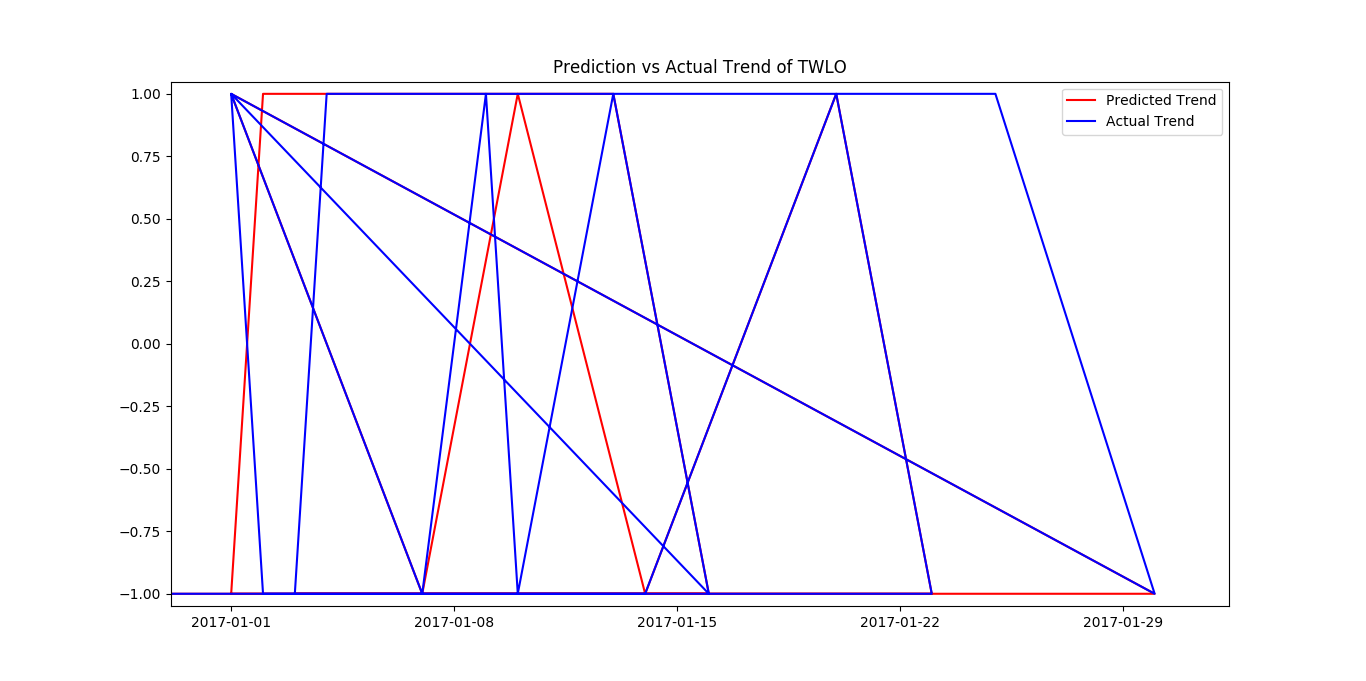

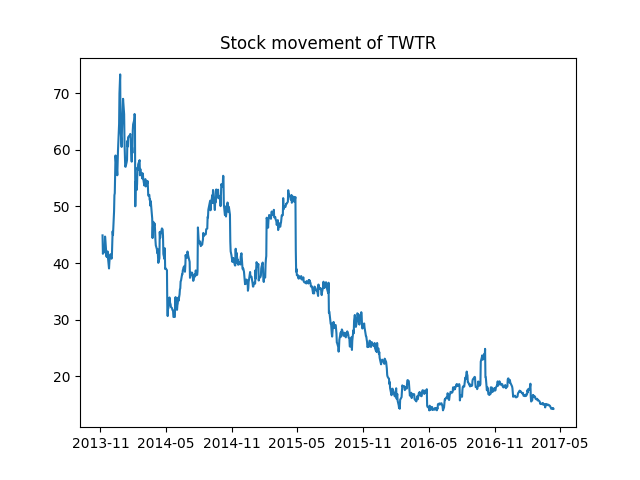

I applied the k-nearest neighbors algorithm and a non-linear regression approach to predict stock prices for a sample of six major companies listed on the NASDAQ stock exchange, to assist investors, management, decision makers, and users in making correct and informed investment decisions.

A more detailed paper on this can be found in `final.pdf`.

cd <this directory>

pip install -r requirements.txt

python knnAlgorithm.py

Open is the price of the stock at the beginning of the trading day (it need not be the closing price of the previous day).

High is the highest price of the stock on that trading day.

Low is the lowest price of the stock on that trading day.

Close is the price of the stock at closing time.

Volume indicates how many shares were traded.

Adjusted Close is the closing price of the stock, adjusted for corporate actions.

While stock prices are considered to be set mostly by traders, stock splits (when the company divides each existing share into two, halving the price per share) and dividends (payouts of company profits per share) also affect the price of a stock and should be accounted for.
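To make the effect of a split concrete, here is a minimal sketch that rescales pre-split closing prices so the series is comparable across the split date. The function name `split_adjust`, the prices, and the split position are all hypothetical, and the sketch handles splits only; a real Adjusted Close column also accounts for dividends.

```python
def split_adjust(closes, split_index, ratio):
    """Divide every price before split_index by ratio (assumed split handling only)."""
    return [price / ratio if i < split_index else price
            for i, price in enumerate(closes)]


# Hypothetical closing prices; a 2-for-1 split takes effect at index 2.
raw_closes = [100.0, 102.0, 51.5, 52.0]
adjusted = split_adjust(raw_closes, split_index=2, ratio=2.0)
print(adjusted)  # [50.0, 51.0, 51.5, 52.0]
```

Without the adjustment, the drop from 102.0 to 51.5 would look like a crash to a distance-based model, even though shareholders lost nothing.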

The model for kNN is the entire training dataset. When a prediction is required for an unseen data instance, the kNN algorithm searches the training dataset for the k most similar instances. The prediction attribute of those most similar instances is summarised and returned as the prediction for the unseen instance.
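The neighbor search described above can be sketched in a few lines. This is an illustration rather than the code in `knnAlgorithm.py`: the name `get_neighbors`, the row layout (features first, target last), and the sample data are assumptions, and it relies on `math.dist` from Python 3.8 or later.

```python
import math


def get_neighbors(training_set, query, k):
    """Return the k training rows closest to query (last element of a row is the target)."""
    scored = [(math.dist(row[:-1], query), row) for row in training_set]
    scored.sort(key=lambda pair: pair[0])  # nearest first
    return [row for _, row in scored[:k]]


# Hypothetical training rows: two features followed by a label.
train = [[1.0, 1.0, "a"], [2.0, 2.0, "a"], [8.0, 8.0, "b"], [9.0, 9.0, "b"]]
neighbors = get_neighbors(train, [1.2, 1.2], k=2)
print(neighbors)  # [[1.0, 1.0, 'a'], [2.0, 2.0, 'a']]
```

Because there is no training step, every prediction scans the whole training set, which is the cost of treating the dataset itself as the model.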

The similarity measure is dependent on the type of data. For real-valued data, the Euclidean distance can be used. For other types of data, such as categorical or binary data, Hamming distance can be used.
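As a small illustration of the two measures named above, the sketch below implements both by hand. The function names are chosen for this example and are not taken from the project code.

```python
import math


def euclidean_distance(a, b):
    """Straight-line distance between two equal-length real-valued vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def hamming_distance(a, b):
    """Number of positions at which two equal-length sequences differ."""
    return sum(x != y for x, y in zip(a, b))


print(euclidean_distance([0.0, 0.0], [3.0, 4.0]))  # 5.0
print(hamming_distance("10110", "10011"))          # 2
```

For price data such as Open, High, Low, and Close, Euclidean distance is the natural fit; features on very different scales (for example Volume) would usually be normalised first so that one column does not dominate the distance.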

In the case of regression problems, the average of the predicted attribute may be returned.

In case of classification, the most prevalent class may be returned.
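The two ways of summarising the neighbors can be sketched as follows. The function names and sample values are hypothetical; the inputs are assumed to be the target values already extracted from the k nearest neighbors.

```python
from collections import Counter
from statistics import mean


def regression_response(neighbor_targets):
    """Predict a number as the average of the neighbors' target values."""
    return mean(neighbor_targets)


def classification_response(neighbor_labels):
    """Predict a class as the most common label among the neighbors."""
    return Counter(neighbor_labels).most_common(1)[0][0]


print(regression_response([10.0, 12.0, 14.0]))        # 12.0
print(classification_response(["up", "down", "up"]))  # up
```

Choosing an odd k for a two-class problem such as up/down avoids tied votes.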

The kNN algorithm belongs to the family of instance-based, competitive learning and lazy learning algorithms.

Finally, kNN is powerful because it does not assume anything about the data, other than that a distance measure can be calculated consistently between any two instances. As such, it is called non-parametric or non-linear, as it does not assume a functional form.

- Handle data: Open the dataset from CSV and split into test/train datasets

- Similarity: Calculate the distance between two data instances

- Neighbors: Locate k most similar data instances

- Response: Generate a response from a set of data instances

- Accuracy: Summarize the accuracy of predictions

- Main: Tie it all together
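The steps above can be sketched end to end as shown below. This is a minimal illustration under stated assumptions, not the contents of `knnAlgorithm.py`: the function names, the in-memory CSV text, the column names, and the `up`/`down` labels are all hypothetical, and the data is invented so the example runs without any files.

```python
import csv
import io
import math
import random
from collections import Counter

# Hypothetical data standing in for a real price CSV.
CSV_TEXT = """feature_1,feature_2,label
1.0,1.1,down
1.2,0.9,down
0.8,1.0,down
1.1,1.3,down
8.0,8.2,up
8.3,7.9,up
7.8,8.1,up
8.1,8.4,up
"""


def load_dataset(text, split, seed=0):
    """Handle data: parse CSV text, shuffle, and split into train/test lists."""
    reader = csv.reader(io.StringIO(text))
    next(reader)  # skip the header row
    rows = [[float(v) for v in row[:-1]] + [row[-1]] for row in reader]
    random.Random(seed).shuffle(rows)
    cut = int(len(rows) * split)
    return rows[:cut], rows[cut:]


def get_neighbors(train, query, k):
    """Similarity + Neighbors: the k rows with the smallest Euclidean distance."""
    ranked = sorted(train, key=lambda row: math.dist(row[:-1], query))
    return ranked[:k]


def get_response(neighbors):
    """Response: majority vote over the neighbors' labels."""
    return Counter(row[-1] for row in neighbors).most_common(1)[0][0]


def get_accuracy(test, predictions):
    """Accuracy: percentage of test rows whose label was predicted correctly."""
    if not test:
        return 0.0
    correct = sum(row[-1] == pred for row, pred in zip(test, predictions))
    return 100.0 * correct / len(test)


# Main: tie it all together.
train, test = load_dataset(CSV_TEXT, split=0.75)
predictions = [get_response(get_neighbors(train, row[:-1], k=3)) for row in test]
accuracy = get_accuracy(test, predictions)
print(f"train={len(train)} test={len(test)} accuracy={accuracy:.1f}%")
```

Fixing the shuffle seed keeps the split reproducible between runs, which makes it easier to compare accuracy figures when changing k or the feature set.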

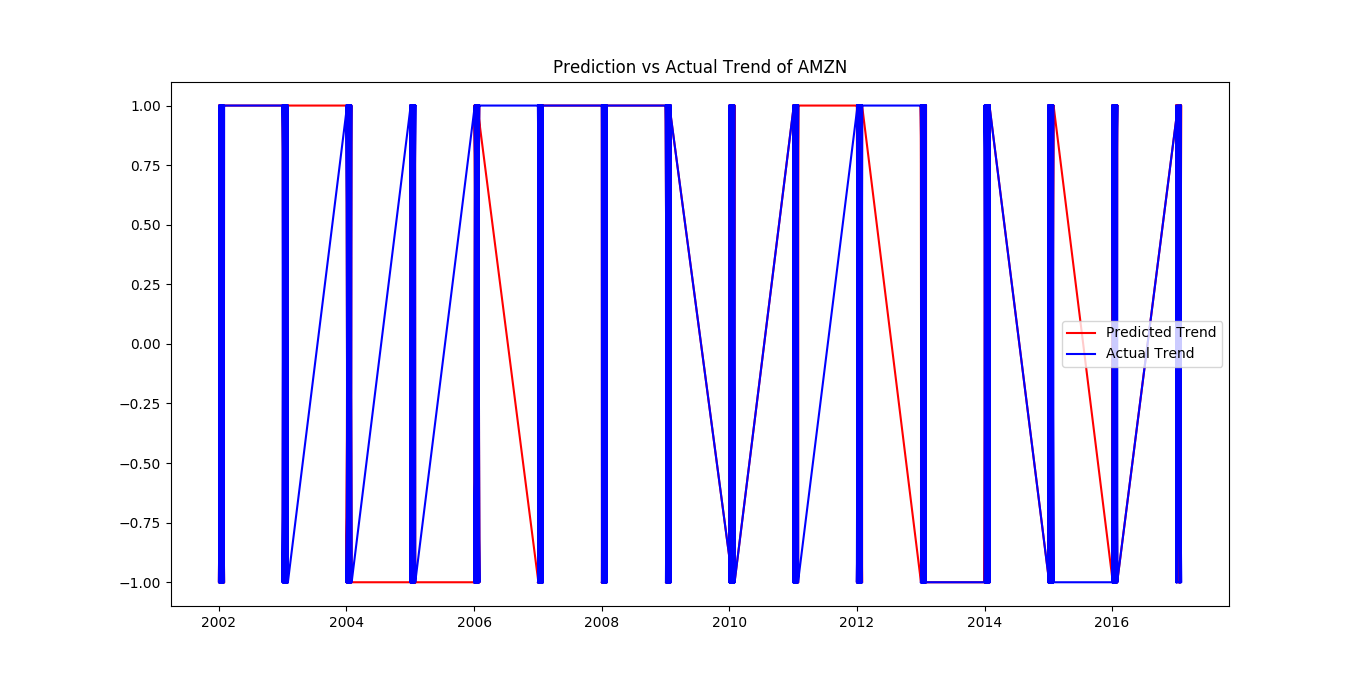

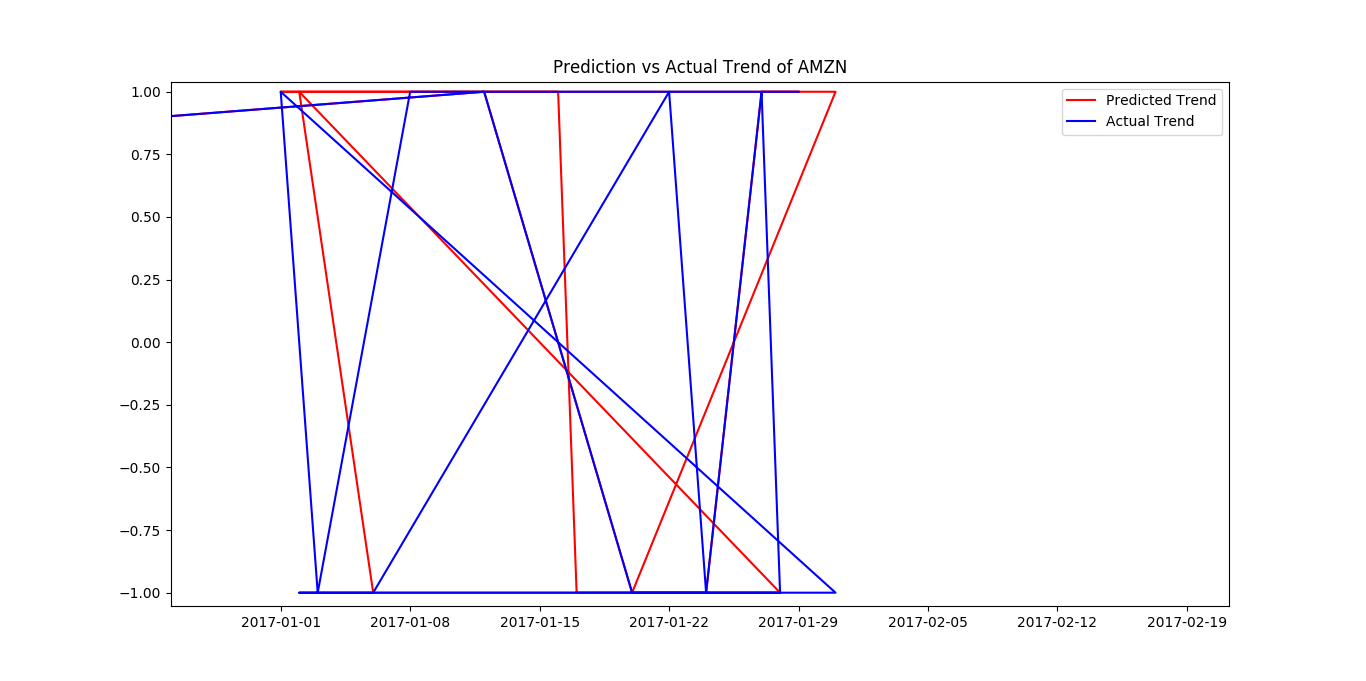

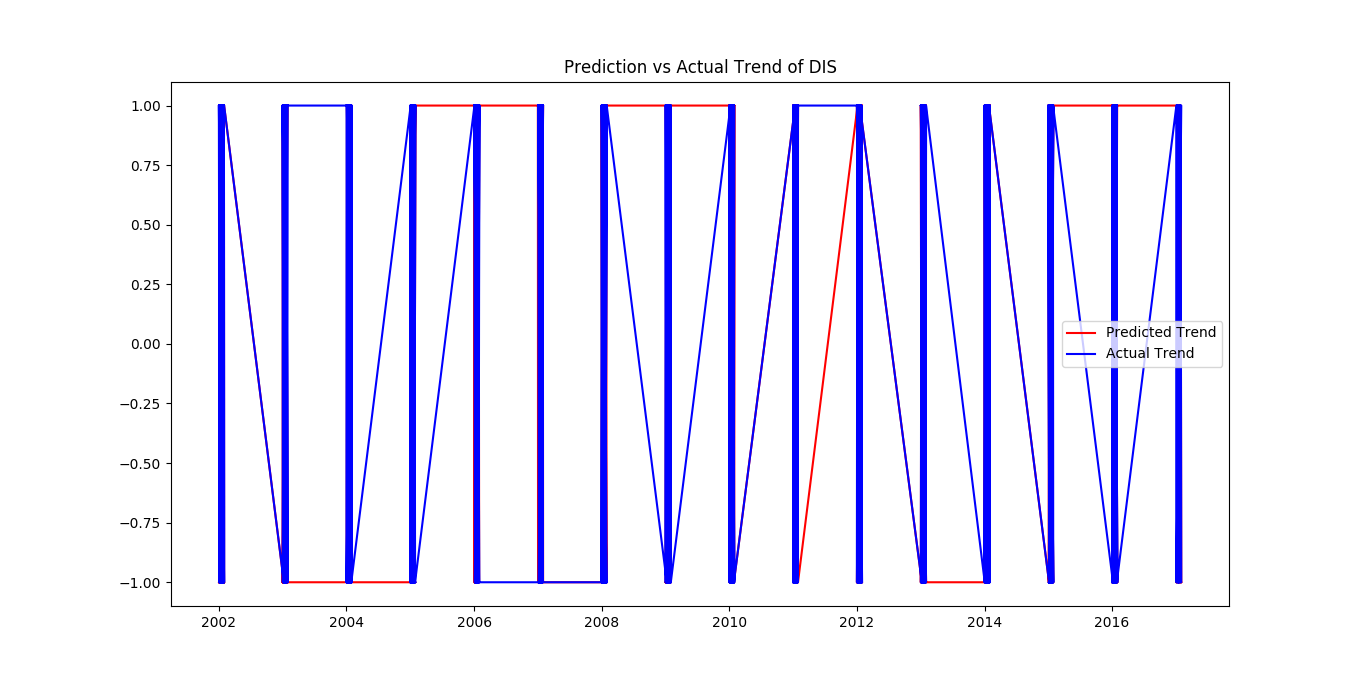

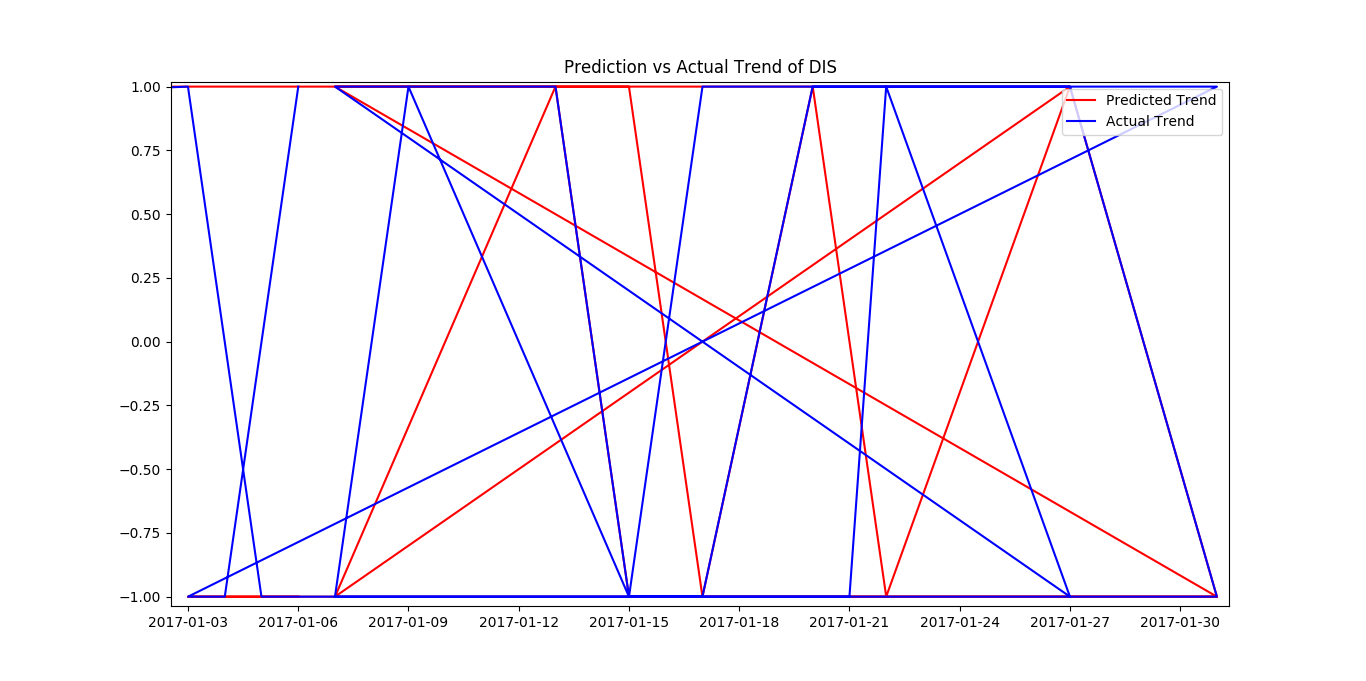

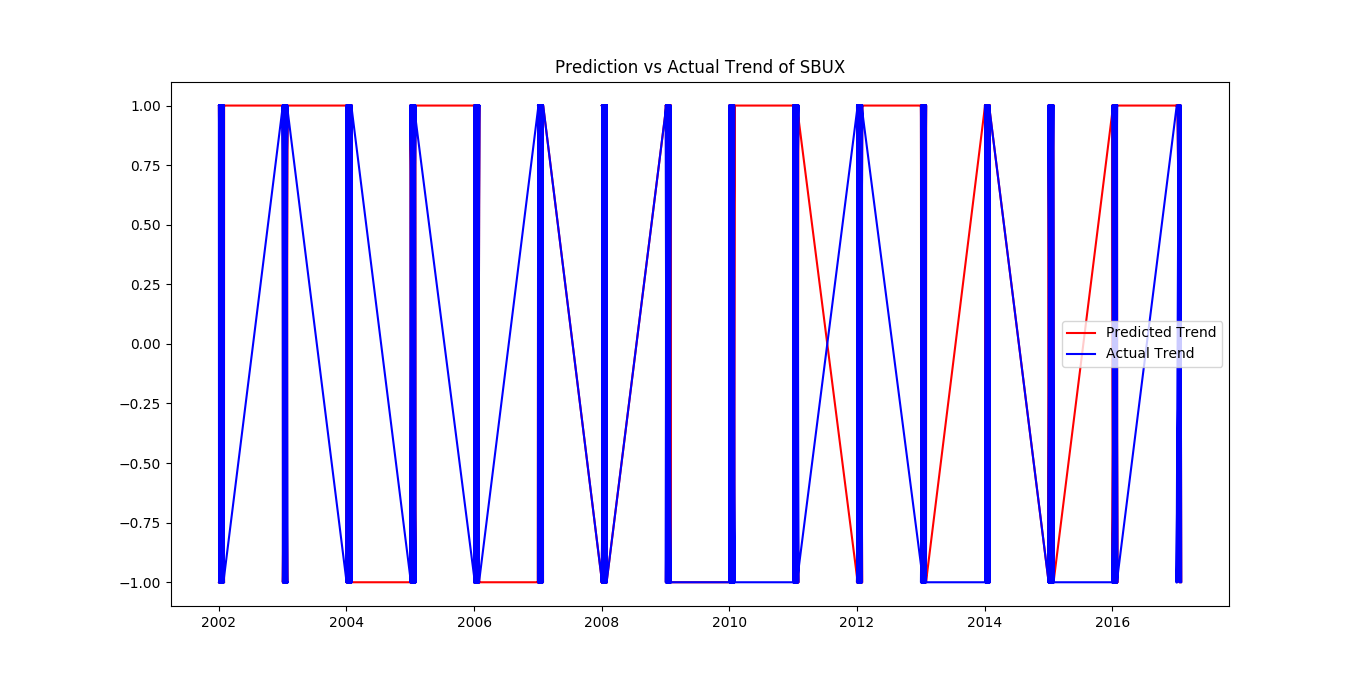

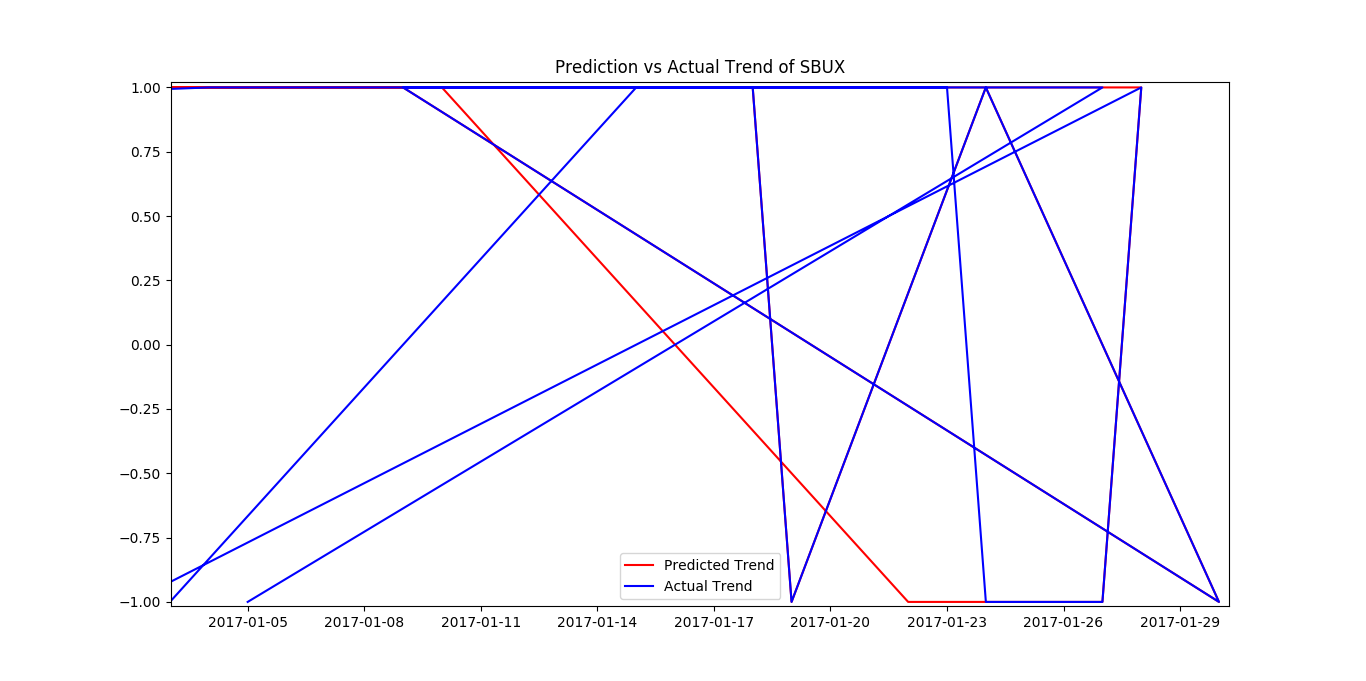

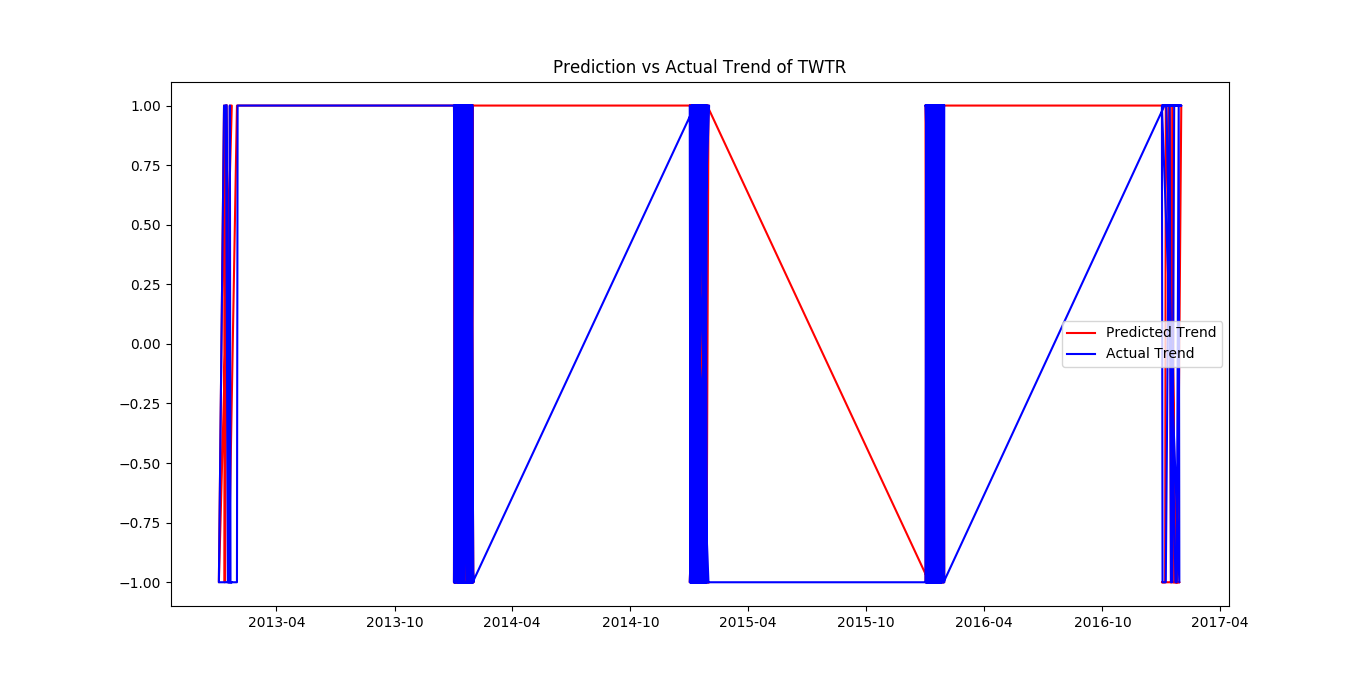

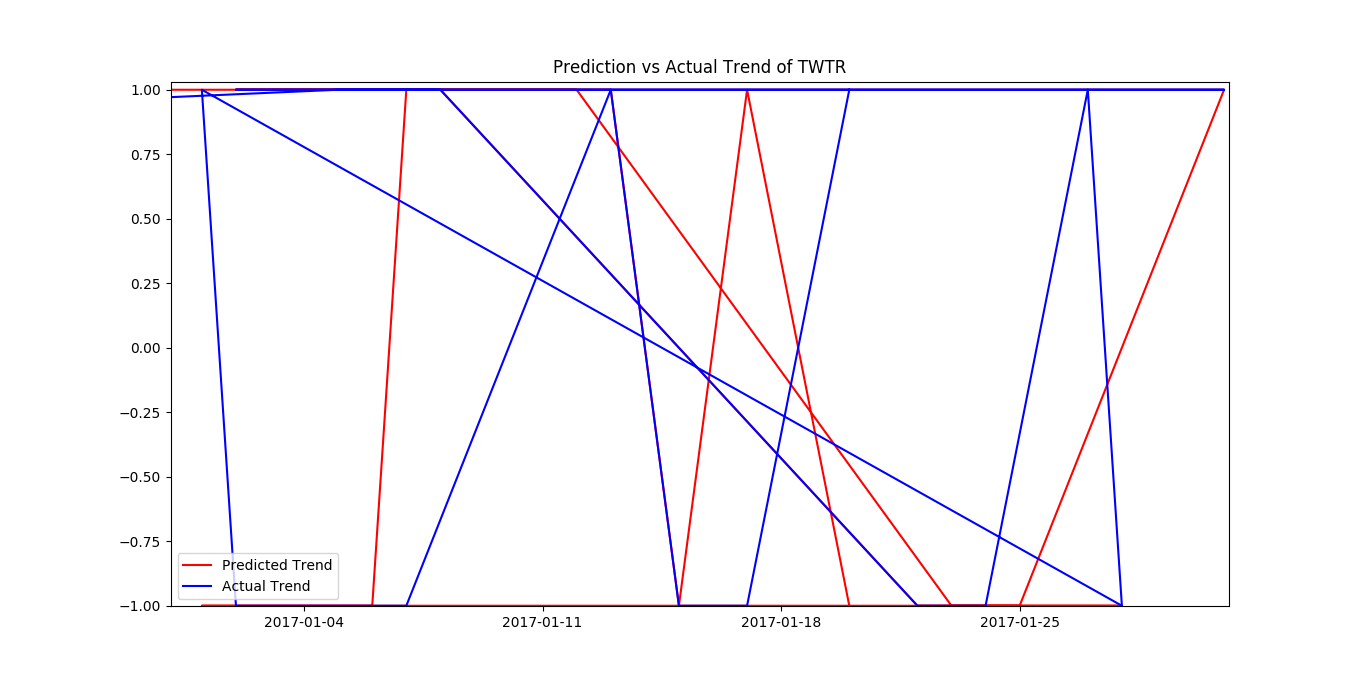

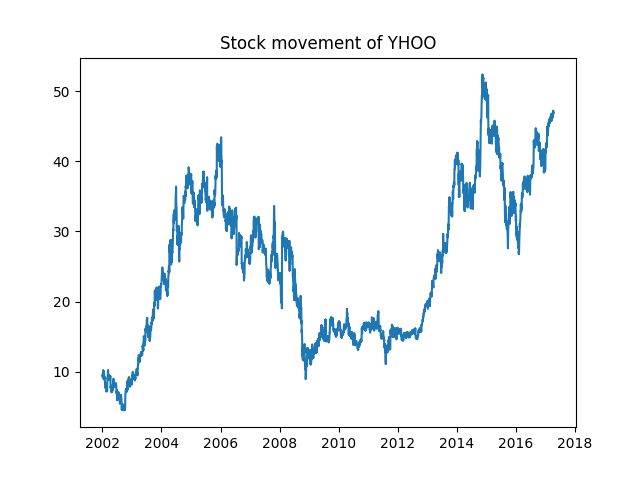

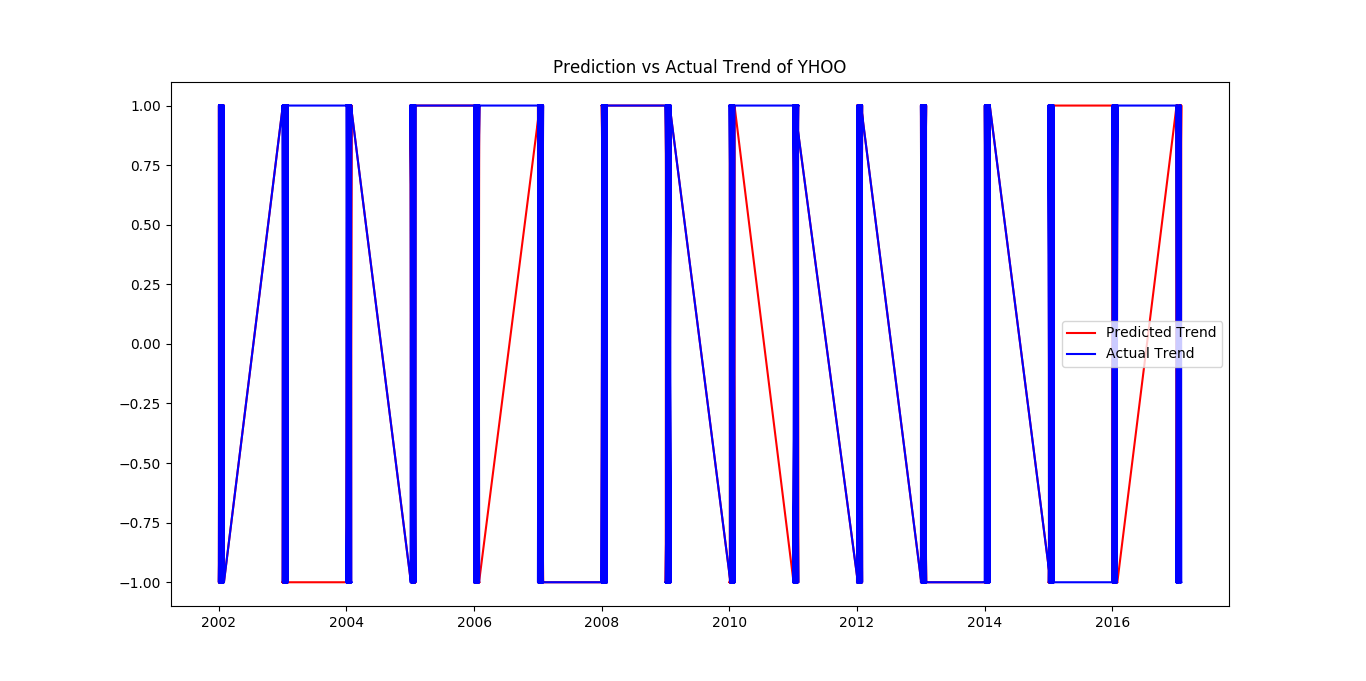

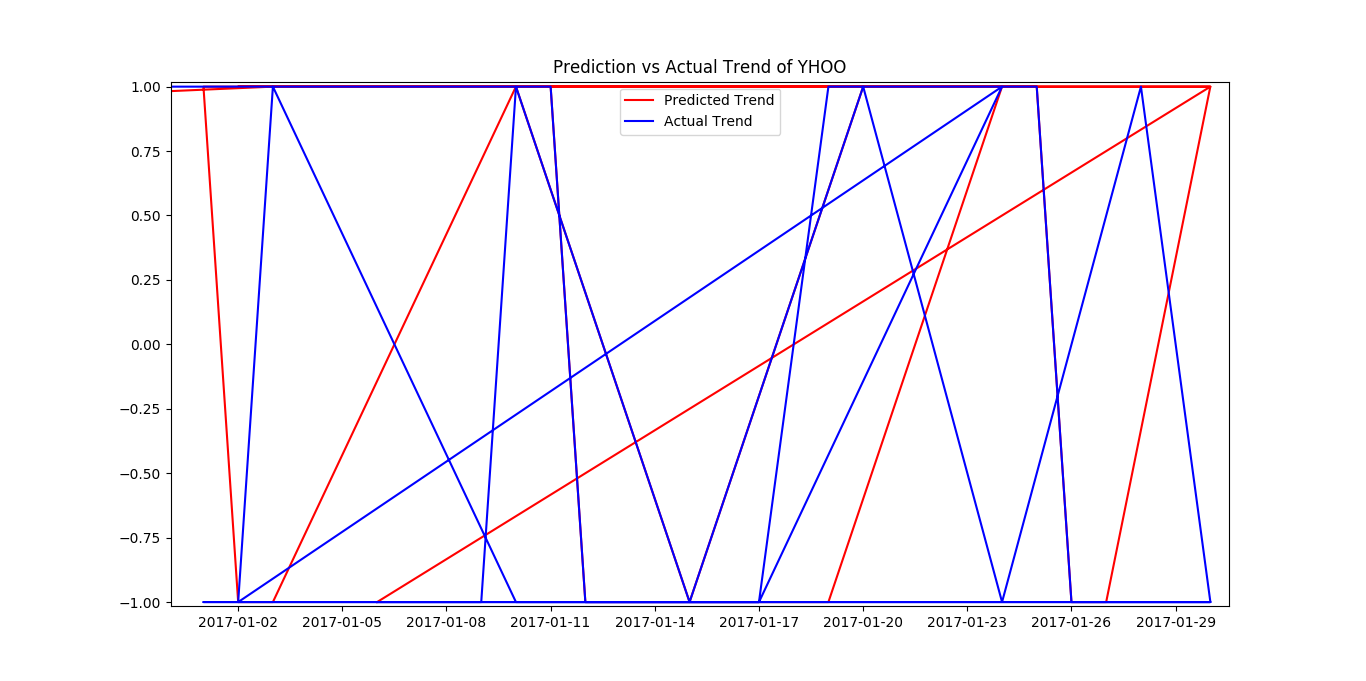

The following graphs show the results. We achieved 70% accuracy in the predictions. A module that predicts whether a given stock will rise tomorrow will be posted in the coming days. For now, enjoy the graphs and be glad that there now exists an ML algorithm that gives 70% certainty on whether you should buy a certain stock or not.