Various numerical analysis methods for solving a linear system of equations involving the Hilbert Matrix.

Basically, we simply want to solve for

Where

And

- In this implementation, we will fix the order of

$n$ to be 100.

The main metric used in this project is the Relative residual norm given by:

In this project, we implemented the following methods for aproximating the solution:

- LU decomposition

- Cholesky decomposition

- Jacobi Over-Relaxation (JOR)

- Successive Over-Relaxation (SOR)

- Gradient Ascent

- Conjugate Gradient

All methods are implemented from scratch.

Since LU is a relatively stable direct method for Symmetric Positive Definite (SDP) matrices, we can check the relative residual norm at each order

Overall, this is the best method for SDP matrices. However, due to the bad conditioning of

In order to prevent this, we can create a new aproximated matrix. We just need to add small values

Since

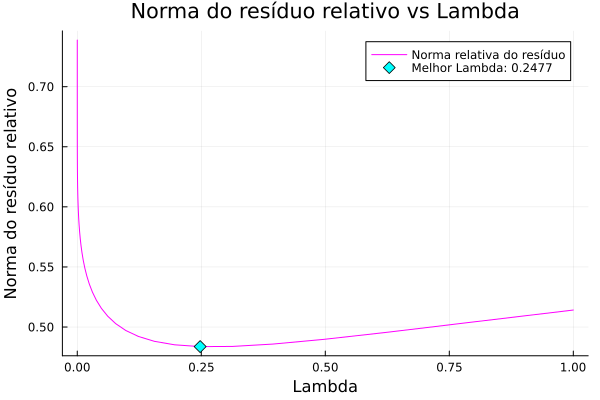

So we can simply iterate over various

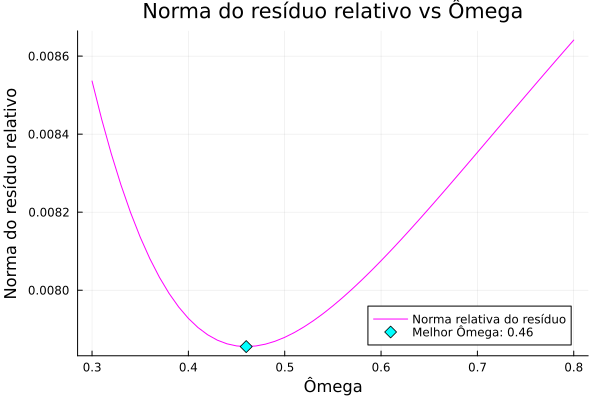

Same thing as JOR, simply iterate over all possible

A really good family of methods are the iterative methods around the gradient. Deriving from optimization, the method converges really fast to an acceptable tolerance, but struggles to reach a good score.

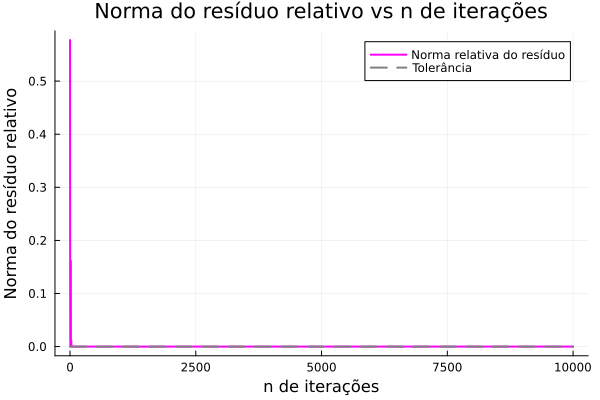

The theory AND the performace are INSANE, literaly SMASHED the other methods. In just 30 iterations it reached the desired tolerance of 1e-7. Absolute beast.