Data pipeline that extracts historical daily prices for all tickers currently trading on NASDAQ from the Yahoo Finance API (via the yfinance library) using Python, Airflow, MinIO, Spark, PostgreSQL, dbt, and Metabase, all running in Docker.

This project creates a pipeline that extracts all symbols from NASDAQ, downloads each symbol's daily historical prices from the Yahoo Finance API, and stores them in MinIO, a local datalake compatible with Amazon S3. Spark then loads the data from the datalake into PostgreSQL, where dbt builds models on top of it. All services run in Docker containers, so the pipeline can run on any machine or environment that has Docker installed.
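The README does not say where the symbol list comes from. A minimal sketch of the first step, assuming one of Nasdaq Trader's pipe-delimited symbol directory files (such as `nasdaqlisted.txt`), which has `Symbol` and `Test Issue` columns and ends with a `File Creation Time` line. The bucket `stock-market`, key `symbols/nasdaq.csv`, endpoint, and credentials are hypothetical placeholders:

```python
import csv
import io


def parse_nasdaq_symbols(text: str) -> list[str]:
    """Return tradable symbols from a pipe-delimited Nasdaq symbol directory.

    Assumes a header row with 'Symbol' and 'Test Issue' columns and a
    trailing 'File Creation Time: ...' footer (assumed input format).
    """
    reader = csv.DictReader(io.StringIO(text), delimiter="|")
    symbols = []
    for row in reader:
        symbol = (row.get("Symbol") or "").strip()
        if not symbol or symbol.startswith("File Creation Time"):
            continue  # skip empty symbols and the footer line
        if row.get("Test Issue") == "Y":
            continue  # skip test issues, which are not real listings
        symbols.append(symbol)
    return symbols


def symbols_to_csv(symbols: list[str]) -> bytes:
    """Serialize symbols as a one-column CSV, ready to upload to the datalake."""
    buf = io.StringIO()
    writer = csv.writer(buf)
    writer.writerow(["symbol"])
    writer.writerows([s] for s in symbols)
    return buf.getvalue().encode("utf-8")


def upload_to_minio(data: bytes, bucket: str = "stock-market",
                    key: str = "symbols/nasdaq.csv") -> None:
    """Upload bytes to MinIO; endpoint and credentials are placeholders."""
    from minio import Minio  # imported lazily: only needed for the upload

    client = Minio("localhost:9000", access_key="minioadmin",
                   secret_key="minioadmin", secure=False)
    if not client.bucket_exists(bucket):
        client.make_bucket(bucket)
    client.put_object(bucket, key, io.BytesIO(data), length=len(data),
                      content_type="text/csv")
```

Keeping parsing and serialization separate from the upload lets those parts be checked without a running MinIO instance.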

- Containerization - Docker, Docker Compose

- Datalake - MinIO

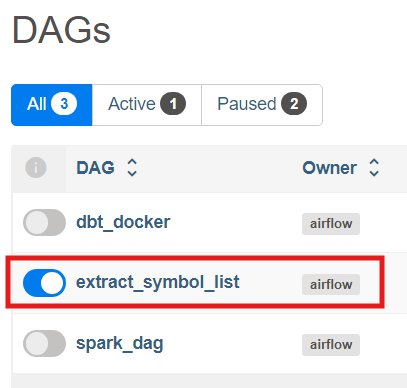

- Orchestration - Airflow

- Batch processing - Spark

- Database - PostgreSQL

- Transformation, modeling - dbt

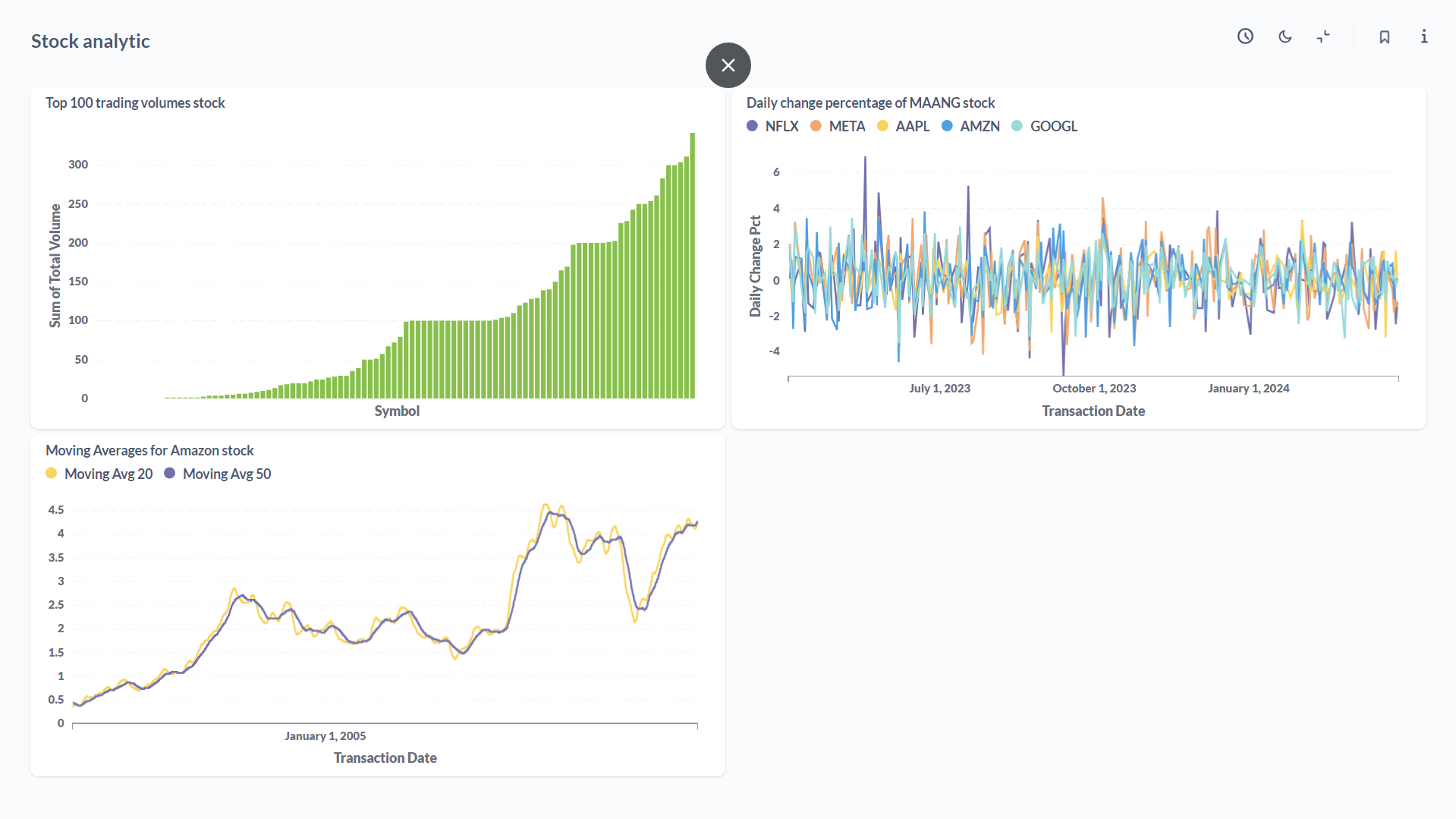

- Data Visualization - Metabase

- Language - Python

```mermaid
flowchart TD
    subgraph Airflow["Airflow"]
        NASDAQ["Extract NASDAQ Symbols"]
        YahooAPI["Extract Symbols' Historical Data"]
        MinIO[("MinIO")]
        Spark["Apache Spark"]
        Postgres[("PostgreSQL Database")]
        DBT["dbt Modeling"]
        Metabase["Metabase Dashboard"]
    end
    NASDAQ -.-> YahooAPI
    YahooAPI -.-> MinIO
    MinIO -->|Historical data| Spark
    Spark -->|Transformed data| Postgres
    Postgres -->|Data for modeling| DBT
    DBT --> Metabase
```

- Extract NASDAQ Symbols to MinIO

- Download historical data of symbols to MinIO

- Submit a Spark job to the Spark cluster to load and transform the data from MinIO into Postgres

- Create data models through dbt

- Create Metabase dashboard
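A minimal sketch of the second step (downloading each symbol's history), assuming yfinance's `Ticker.history(period="max", interval="1d")` and a hypothetical `prices/<symbol>.csv` key layout. The resulting bytes could then be uploaded with the MinIO client's `put_object`:

```python
import csv
import io


def object_key(symbol: str) -> str:
    """Hypothetical key layout: one CSV of daily prices per symbol."""
    return f"prices/{symbol}.csv"


def rows_to_csv(rows: list[dict]) -> bytes:
    """Serialize price rows (dicts sharing the same keys) to CSV bytes."""
    if not rows:
        return b""  # e.g. a symbol for which no history was returned
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=list(rows[0]))
    writer.writeheader()
    writer.writerows(rows)
    return buf.getvalue().encode("utf-8")


def fetch_daily_history(symbol: str) -> list[dict]:
    """Download a symbol's full daily history via yfinance (network call)."""
    import yfinance as yf  # imported lazily so the helpers above need no deps

    history = yf.Ticker(symbol).history(period="max", interval="1d")
    history = history.reset_index()  # turn the Date index into a column
    history["Symbol"] = symbol
    return history.to_dict(orient="records")
```

Fetching thousands of symbols one by one is slow and may be throttled, so batching or retries are worth considering in the Airflow task.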

- Docker

- Docker Compose

Every tool is installed through Docker Compose; the services are defined in docker-compose.yml.

There are also separate Docker Compose files for Airflow, MinIO, Spark, PostgreSQL, and dbt. You can run each one on its own with these commands:

```bash
docker compose -f airflow-docker-compose.yml up --build

docker compose -f minio-docker-compose.yml up

docker compose -f spark-docker-compose.yml up

docker compose -f postgres-docker-compose.yml up

docker compose -f dbt-docker-compose.yml up --build
```

Before installing, create the Docker network:

```bash
docker network create stock-market-network
```
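The network and the per-service stacks can also be brought up from one script. A minimal sketch using only `subprocess`; the start order simply follows the per-service commands listed earlier, and `-d` (detached) is added here so one stack does not block the next. Note that `-f` is a global `docker compose` option, so it goes before `up`:

```python
import subprocess
from typing import Callable

NETWORK = "stock-market-network"
# (compose file, whether to pass --build), in the order listed in this README
COMPOSE_FILES = [
    ("airflow-docker-compose.yml", True),
    ("minio-docker-compose.yml", False),
    ("spark-docker-compose.yml", False),
    ("postgres-docker-compose.yml", False),
    ("dbt-docker-compose.yml", True),
]


def compose_up_cmd(file: str, build: bool) -> list[str]:
    """Build the argv; -f belongs to `docker compose`, so it precedes `up`."""
    cmd = ["docker", "compose", "-f", file, "up", "-d"]
    if build:
        cmd.append("--build")
    return cmd


def bootstrap(run: Callable = subprocess.run) -> None:
    """Create the network if it is missing, then start every stack."""
    # `docker network inspect` exits non-zero when the network does not exist
    probe = run(["docker", "network", "inspect", NETWORK], capture_output=True)
    if probe.returncode != 0:
        run(["docker", "network", "create", NETWORK], check=True)
    for file, build in COMPOSE_FILES:
        run(compose_up_cmd(file, build), check=True)
```

The `run` parameter defaults to `subprocess.run`; passing a different callable makes the command sequence easy to inspect without Docker.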

Installation

```bash
docker compose up --build
```

Set up the tables in PostgreSQL:

```bash
psql -h localhost -U postgres -d stock_market < setup.sql
```
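If `psql` is not installed on the host, the same file can be run from Python. A minimal sketch, assuming psycopg2 and that postgres-docker-compose.yml sets the official postgres image's `POSTGRES_USER`, `POSTGRES_PASSWORD`, and `POSTGRES_DB` variables; the defaults mirror the `psql` command and are placeholders. Unlike `psql`, which commits each statement separately, this runs the whole file in one transaction and only handles plain SQL (no `psql` meta-commands):

```python
import os
from pathlib import Path
from typing import Mapping


def postgres_params(env: Mapping[str, str] = os.environ,
                    host: str = "localhost", port: int = 5432) -> dict:
    """Connection settings taken from the official postgres image's env vars."""
    return {
        "host": host,
        "port": port,
        "dbname": env.get("POSTGRES_DB", "stock_market"),
        "user": env.get("POSTGRES_USER", "postgres"),
        "password": env.get("POSTGRES_PASSWORD", ""),
    }


def run_setup_sql(path: str = "setup.sql") -> None:
    """Execute setup.sql against PostgreSQL (requires psycopg2)."""
    import psycopg2  # imported lazily; only needed when actually connecting

    sql = Path(path).read_text()
    conn = psycopg2.connect(**postgres_params())
    try:
        with conn:  # commits on success, rolls back on error
            with conn.cursor() as cur:
                cur.execute(sql)  # no query parameters, so several statements are allowed
    finally:
        conn.close()  # `with conn` ends the transaction but does not close it
```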

To submit Spark jobs from Airflow, you'll first need to create a Spark connection in Airflow:

```bash
docker compose run airflow-webserver airflow connections add 'spark_stock_market' --conn-type 'spark' --conn-host 'spark://spark-master-1:7077'
```
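A minimal sketch of a Spark job that loads the price CSVs from MinIO into PostgreSQL. It assumes the hadoop-aws and PostgreSQL JDBC jars are on the Spark classpath; the host names `minio` and `postgres`, the bucket, the `prices/*.csv` path, the table `stock_prices`, and all credentials are hypothetical placeholders, and any transformation beyond loading is left out:

```python
def s3a_conf(endpoint: str, access_key: str, secret_key: str) -> dict[str, str]:
    """Hadoop S3A settings that point Spark's s3a:// filesystem at MinIO."""
    return {
        "spark.hadoop.fs.s3a.endpoint": endpoint,
        "spark.hadoop.fs.s3a.access.key": access_key,
        "spark.hadoop.fs.s3a.secret.key": secret_key,
        # MinIO is addressed as http://host:9000/bucket, not bucket.host
        "spark.hadoop.fs.s3a.path.style.access": "true",
        "spark.hadoop.fs.s3a.impl": "org.apache.hadoop.fs.s3a.S3AFileSystem",
    }


def jdbc_url(host: str, port: int, database: str) -> str:
    """JDBC URL understood by the PostgreSQL driver (org.postgresql.Driver)."""
    return f"jdbc:postgresql://{host}:{port}/{database}"


def load_prices_to_postgres(bucket: str = "stock-market",
                            table: str = "stock_prices") -> None:
    """Read every price CSV from MinIO and append it to a Postgres table."""
    from pyspark.sql import SparkSession  # imported lazily; needs PySpark

    builder = SparkSession.builder.appName("load_stock_prices")
    for key, value in s3a_conf("http://minio:9000", "minioadmin",
                               "minioadmin").items():
        builder = builder.config(key, value)
    spark = builder.getOrCreate()

    prices = spark.read.csv(f"s3a://{bucket}/prices/*.csv",
                            header=True, inferSchema=True)
    prices.write.jdbc(
        url=jdbc_url("postgres", 5432, "stock_market"),
        table=table,
        mode="append",
        properties={"user": "postgres", "password": "postgres",
                    "driver": "org.postgresql.Driver"},
    )
    spark.stop()
```

From an Airflow DAG, a file like this could be submitted with `SparkSubmitOperator` (from the `apache-airflow-providers-apache-spark` package) by passing `conn_id="spark_stock_market"`, so the master URL comes from the connection created above.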

Set up the PostgreSQL connection in Metabase using the environment variables from postgres-docker-compose.yml:

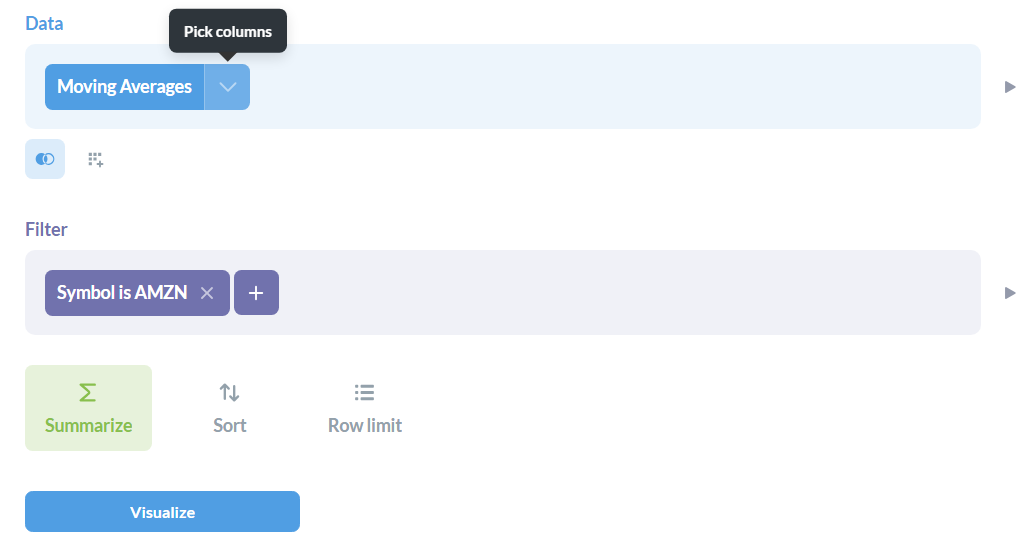

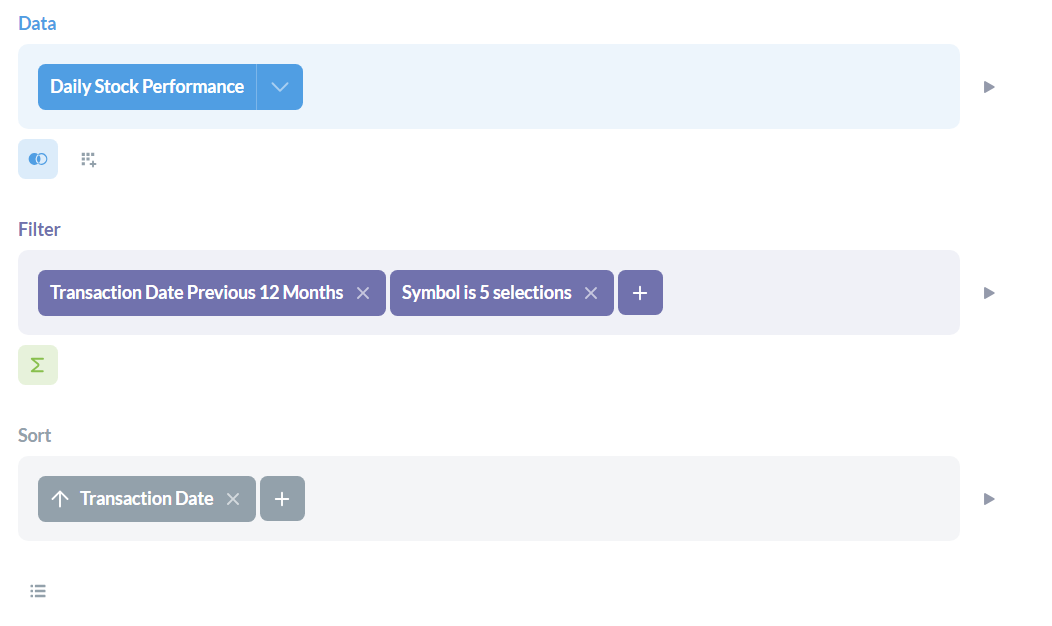

Create these questions (using the public_stock_market schema):

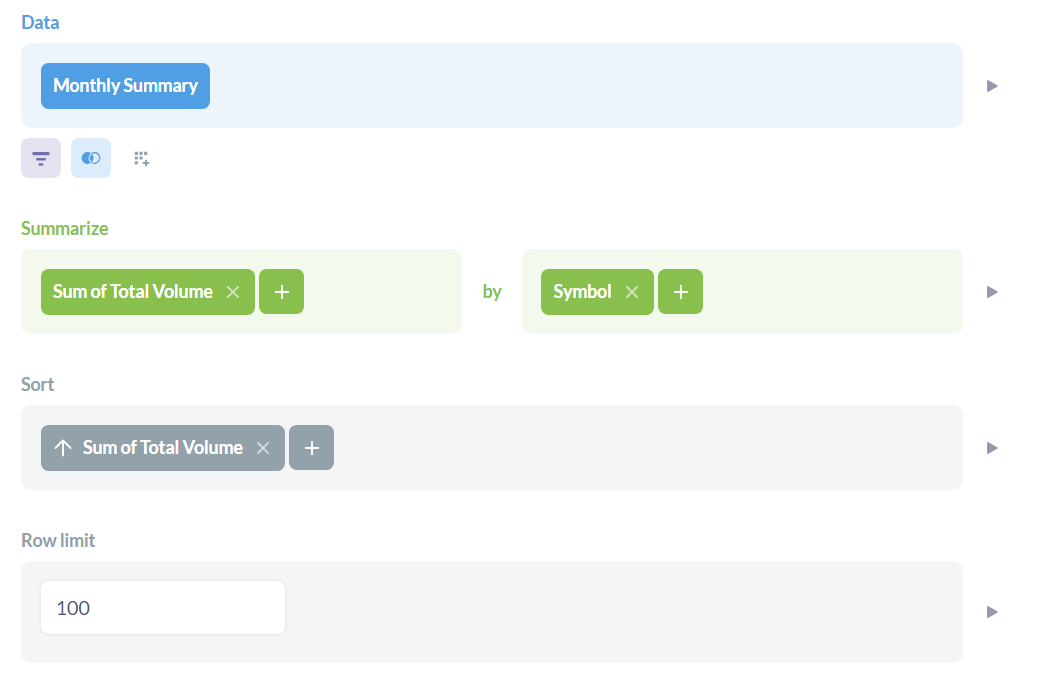

Top 100 trading volume symbols
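A hedged sketch of the SQL behind this question, written as a native query. It assumes a table named `stock_prices` with `symbol` and `volume` columns (hypothetical names; in PostgreSQL the table would sit in the public_stock_market schema) and ranks by total volume over all loaded days, since the window is not specified. It is exercised here against an in-memory SQLite table so it runs without the stack:

```python
import sqlite3

# Total traded volume per symbol over all loaded days, top 100.
# Table/column names are hypothetical; in PostgreSQL the table would be
# qualified as public_stock_market.stock_prices.
TOP_VOLUME_SQL = """
SELECT symbol, SUM(volume) AS total_volume
FROM stock_prices
GROUP BY symbol
ORDER BY total_volume DESC
LIMIT 100
"""


def top_volume_symbols(conn: sqlite3.Connection) -> list[tuple]:
    """Run the ranking query and return (symbol, total_volume) rows."""
    return conn.execute(TOP_VOLUME_SQL).fetchall()


if __name__ == "__main__":
    demo = sqlite3.connect(":memory:")
    demo.execute("CREATE TABLE stock_prices (symbol TEXT, date TEXT, volume INTEGER)")
    demo.executemany(
        "INSERT INTO stock_prices VALUES (?, ?, ?)",
        [("AAA", "2024-01-02", 10), ("AAA", "2024-01-03", 30), ("BBB", "2024-01-02", 25)],
    )
    print(top_volume_symbols(demo))  # [('AAA', 40), ('BBB', 25)]
```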