Meilisearch Python Async

Meilisearch Python Async is a Python async client for the Meilisearch API. Meilisearch also has an official Python client.

Which of the two clients to use comes down to your particular use case. The purpose for this async client is to allow for non-blocking calls when working in async frameworks such as FastAPI, or if your own code base you are working in is async. If this does not match your use case then the official client will be a better choice.

Installation

Using a virtual environmnet is recommended for installing this package. Once the virtual environment is created and activated install the package with:

pip install meilisearch-python-asyncRun Meilisearch

There are several ways to run Meilisearch. Pick the one that works best for your use case and then start the server.

As as example to use Docker:

docker pull getmeili/meilisearch:latest

docker run -it --rm -p 7700:7700 getmeili/meilisearch:latest ./meilisearch --master-key=masterKeyUseage

Add Documents

- Note: `client.index("books") creates an instance of an Index object but does not make a network call to send the data yet so it does not need to be awaited.

from meilisearch_python_async import Client

async with Client('http://127.0.0.1:7700', 'masterKey') as client:

index = client.index("books")

documents = [

{"id": 1, "title": "Ready Player One"},

{"id": 42, "title": "The Hitchhiker's Guide to the Galaxy"},

]

await index.add_documents(documents)The server will return an update id that can be used to get the status of the updates. To do this you would save the result response from adding the documets to a variable, this will be a UpdateId object, and use it to check the status of the updates.

update = await index.add_documents(documents)

status = await client.index('books').get_update_status(update.update_id)Basic Searching

search_result = await index.search("ready player")Base Search Results: SearchResults object with values

SearchResults(

hits = [

{

"id": 1,

"title": "Ready Player One",

},

],

offset = 0,

limit = 20,

nb_hits = 1,

exhaustive_nb_hits = bool,

facets_distributionn = None,

processing_time_ms = 1,

query = "ready player",

)Custom Search

Information about the parameters can be found in the search parameters section of the documentation.

index.search(

"guide",

attributes_to_highlight=["title"],

filters="book_id > 10"

)Custom Search Results: SearchResults object with values

SearchResults(

hits = [

{

"id": 42,

"title": "The Hitchhiker's Guide to the Galaxy",

"_formatted": {

"id": 42,

"title": "The Hitchhiker's Guide to the <em>Galaxy</em>"

}

},

],

offset = 0,

limit = 20,

nb_hits = 1,

exhaustive_nb_hits = bool,

facets_distributionn = None,

processing_time_ms = 5,

query = "galaxy",

)Benchmark

The following benchmarks compare this library to the official Meilisearch Python library. Note that all of the performance gains seen are achieved by taking advantage of asyncio. This means that if your code is not taking advantage of asyncio or blocking the event loop the gains here will not be seen and the performance between the two libraries will be very close to the same.

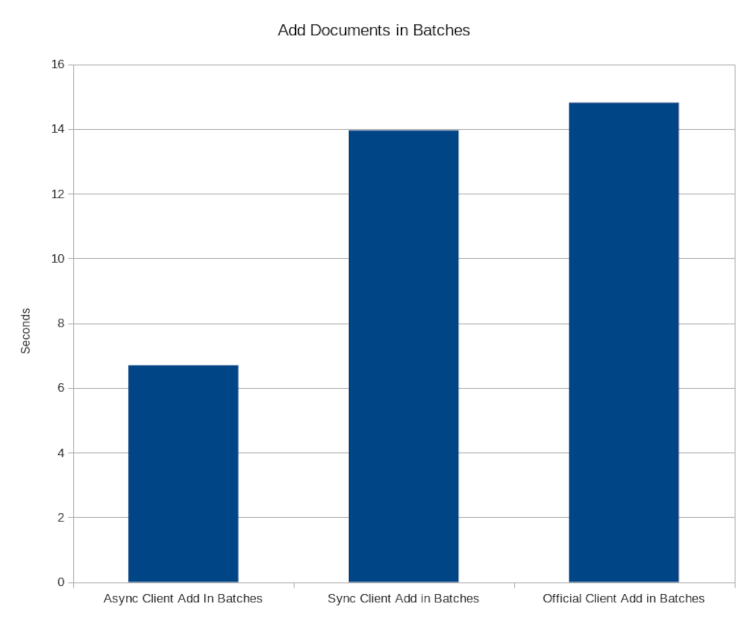

Add Documents in Batches

This test compares how long it takes to send 1 million documents in batches of 1 thousand to the Meilisearch server for indexing (lower is better). The time does not take into account how long Meilisearch takes to index the documents since that is outside of the library functionality.

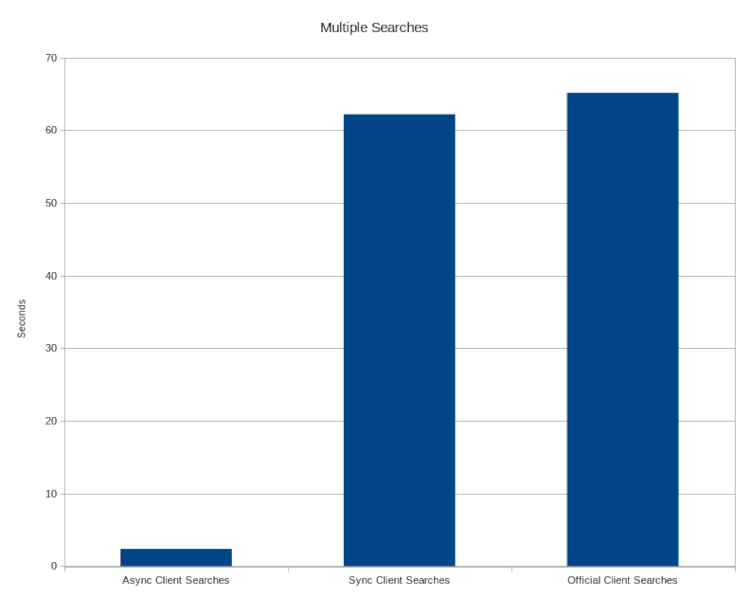

Muiltiple Searches

This test compares how long it takes to complete 1000 searches (lower is better)

Independent testing

Prashanth Rao did some independent testing and found this async client to be ~30% faster than the sync client for data ingestion. You can find a good write-up of the results how he tested them in his blog post.

Documentation

See our docs for the full documentation.

Contributing

Contributions to this project are welcome. If you are interested in contributing please see our contributing guide