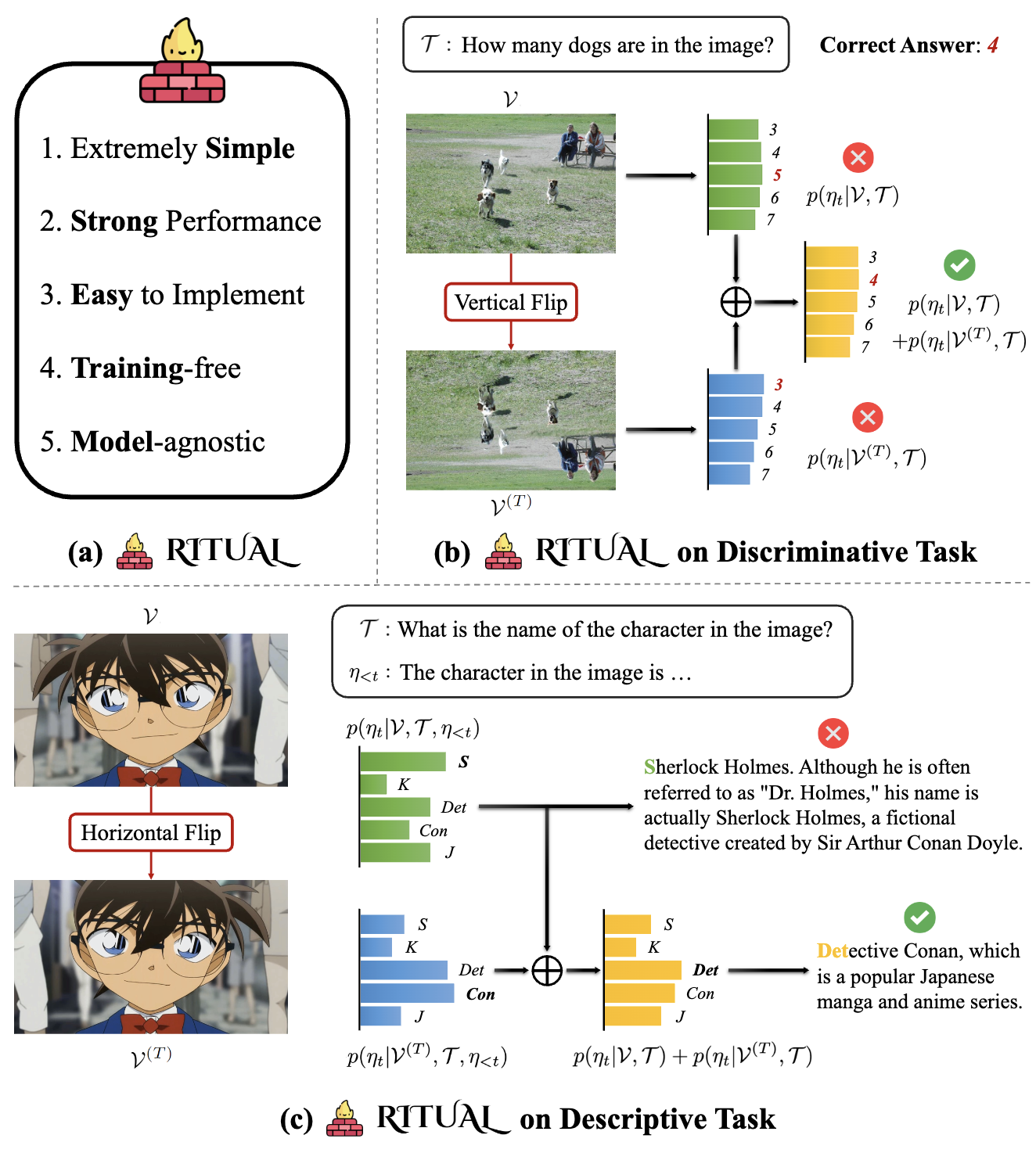

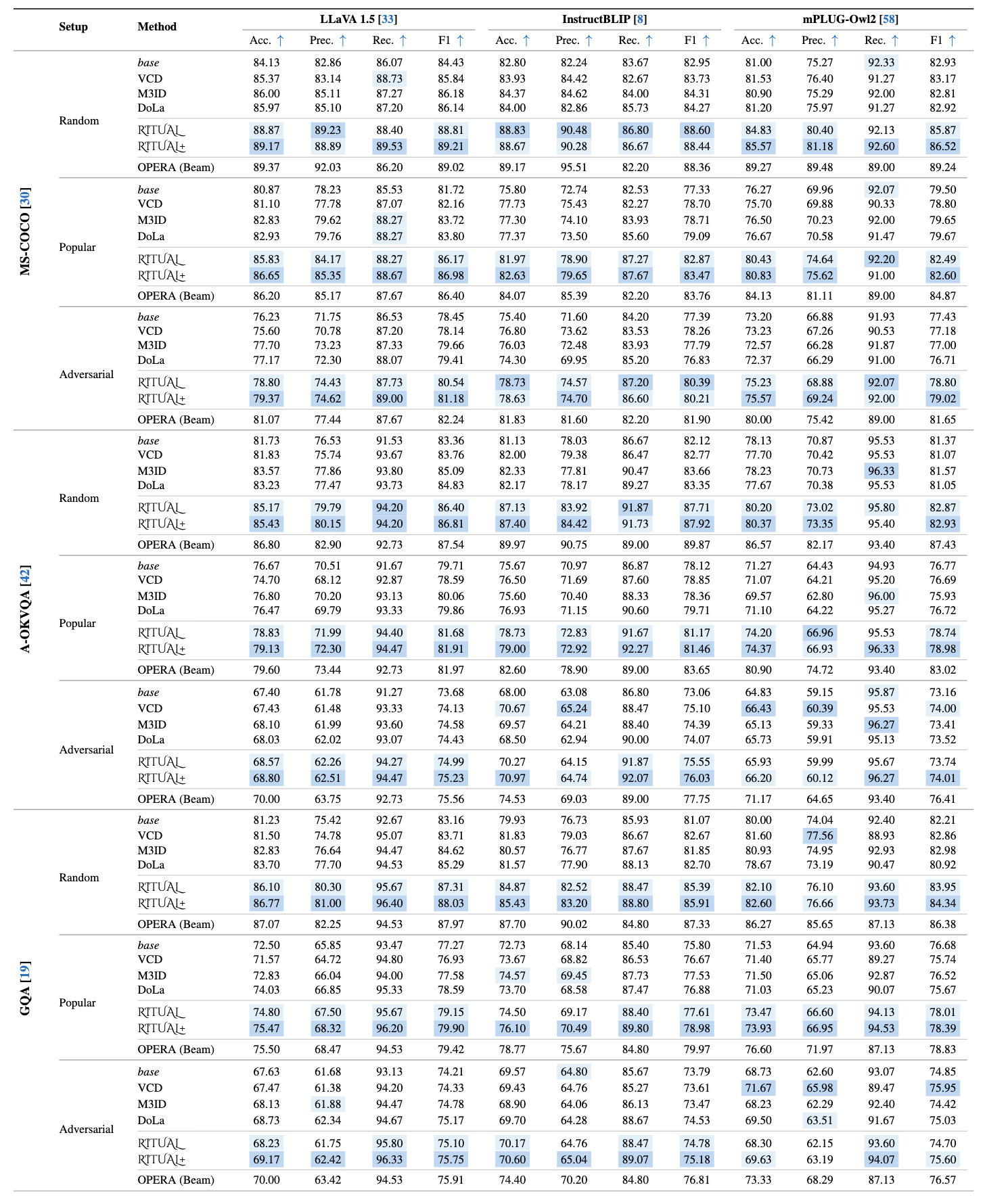

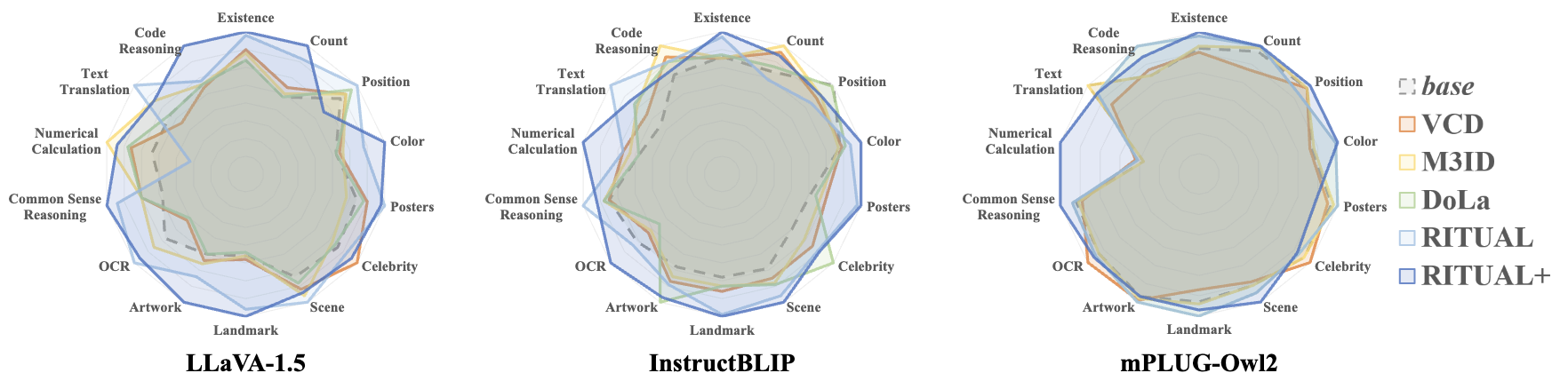

This repository contains the official PyTorch implementation of the paper: "RITUAL: Random Image Transformations as a Universal Anti-hallucination Lever in LVLMs".

- 2024.05.29: Build project page

- 2024.05.29: RITUAL Paper online

- 2024.05.28: Code Release

conda create -n RITUAL python=3.10

conda activate RITUAL

git clone https://github.com/sangminwoo/RITUAL.git

cd RITUAL

pip install -r requirements.txt

About model checkpoints preparation

- LLaVA-1.5: Download LLaVA-1.5 merged 7B

- InstructBLIP: Download InstructBLIP
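Before launching an evaluation, it can help to confirm that the environment and the downloaded checkpoint are where you expect them. The following is a minimal sketch, not part of this repository: the function name `preflight` and the example path are hypothetical, and it only assumes the Python 3.10 environment and PyTorch dependency described above.

```python
import importlib.util
import sys
from pathlib import Path


def preflight(model_path, required_python=(3, 10)):
    """Return a list of human-readable problems; an empty list means all checks passed."""
    problems = []

    # The install steps above create a Python 3.10 environment.
    if sys.version_info[:2] != tuple(required_python):
        found = ".".join(str(v) for v in sys.version_info[:2])
        wanted = ".".join(str(v) for v in required_python)
        problems.append(f"python: expected {wanted}, found {found}")

    # Check that PyTorch is installed without actually importing it.
    if importlib.util.find_spec("torch") is None:
        problems.append("torch: not importable; did `pip install -r requirements.txt` succeed?")

    # The checkpoint may be a directory or a single file, so only test for existence.
    if not Path(model_path).exists():
        problems.append(f"model_path: {model_path} does not exist")

    return problems


if __name__ == "__main__":
    # Hypothetical location; replace with wherever you saved the checkpoint.
    for problem in preflight("/path/to/checkpoint"):
        print("WARNING:", problem)
```

An empty result only means these basic checks passed; it does not validate the checkpoint contents.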

- POPE:

bash eval_bench/scripts/pope_eval.sh

- Need to specify "model", "model_path"

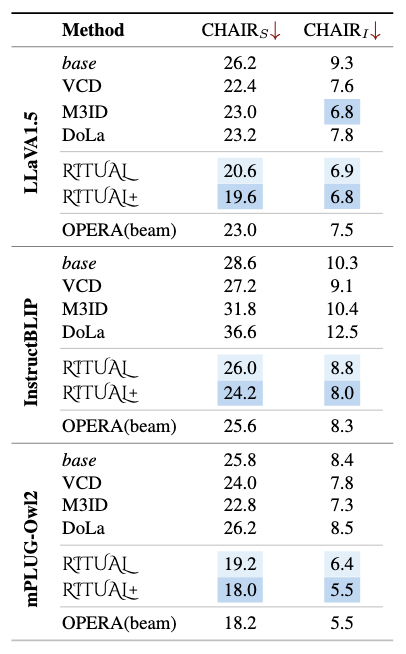

- CHAIR:

bash eval_bench/scripts/chair_eval.sh

- Need to specify "model", "model_path", "type"

- MME:

bash experiments/cd_scripts/mme_eval.sh

- Need to specify "model", "model_path"
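For orientation when reading results from the benchmarks above: POPE poses yes/no questions about object presence and is commonly reported as accuracy, precision, recall, F1, and the ratio of "yes" answers. The sketch below illustrates how such scores can be computed. It is not the repository's evaluation code; the function name `pope_metrics` and the rule of treating any answer that starts with "yes" as positive are assumptions made for illustration.

```python
def pope_metrics(predictions, labels):
    """Score yes/no answers against yes/no ground-truth labels.

    predictions: model answers as free text, e.g. "Yes, there is a dog."
    labels: ground-truth strings, each "yes" or "no"
    """
    tp = fp = tn = fn = 0
    for pred, label in zip(predictions, labels):
        # Assumption: an answer counts as "yes" only if it starts with "yes".
        pred_yes = pred.strip().lower().startswith("yes")
        label_yes = label.strip().lower() == "yes"
        if pred_yes and label_yes:
            tp += 1
        elif pred_yes and not label_yes:
            fp += 1
        elif not pred_yes and not label_yes:
            tn += 1
        else:
            fn += 1

    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    yes_ratio = (tp + fp) / total if total else 0.0

    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "yes_ratio": yes_ratio,
    }


if __name__ == "__main__":
    preds = ["Yes, there is a dog.", "no", "yes", "No."]
    gold = ["yes", "no", "no", "yes"]
    print(pope_metrics(preds, gold))
```

A yes ratio far from the share of "yes" labels in the data suggests the model is biased toward affirming or denying objects regardless of the image.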

About datasets preparation

- Please download and extract the MSCOCO 2014 dataset from this link to your data path for evaluation.

- For MME evaluation, see this link.

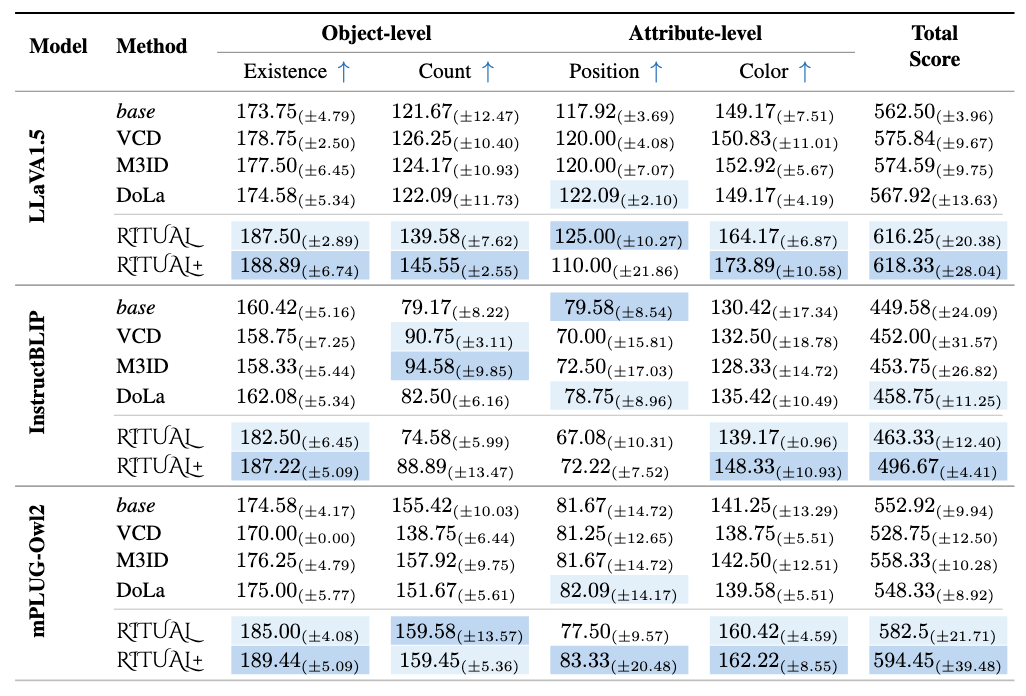

MME-Fullset

MME-Hallucination

This codebase borrows most notably from VCD, OPERA, and LLaVA. Many thanks to the authors for generously sharing their code!

If you find this repository helpful for your project, please consider citing our work :)

@article{woo2024ritual,
  title={RITUAL: Random Image Transformations as a Universal Anti-hallucination Lever in LVLMs},
  author={Woo, Sangmin and Jang, Jaehyuk and Kim, Donguk and Choi, Yubin and Kim, Changick},
  journal={arXiv preprint arXiv:2405.17821},
  year={2024},
}