Tally to Database Server: Data Loader Utility

Commandline utility to load data into Database Server from Tally software, intended for further use by

- MS Excel / Google Sheet (for tabular reports)

- Power BI / Google Data Studio (for dashboards)

Index

- Version

- Upcoming Features

- Requirements

- Download

- Utility

- Tally

- Node JS

- SQL Server

- MySQL Server

- MariaDB Server

- Tally XML Server

- Tally Prime

- Tally.ERP 9

- Database Creation

- Utility Installation

- Configuration Setup

- Database Connection

- Tally Options

- Steps

- Tutorial

- Tally Export Config

- Commandline Options

- Logs

- Reports

- Power BI Template

- Google BigQuery Database CSV loading

- Develop Further

- License

- Contact

- Credits

- Known Issues

- Frequently Asked Questions

- Release History

Version

Latest Version: 1.0.14

Updated on: 28-Feb-2022

*Note: I keep on fixing utility and adding fields into database. So you are requested to re-create existing databases and re-download utility folder *

Upcoming Features

Stagnant water is hazardous. Same applies to human brain. Improvement / Exploration is a one of key aspect of this project.

In-progress features:

- Direct data push to Google BigQuery (a robust offering by Google Cloud with plently of opportunity to explore in Always Free plan)

- Support for IBM DB2 database (they offer a free plan with a DB2 database & access to analytical tool)

Tentative Features:

- Graphical Interface for the utility (Electron JS powered App)

- Export to Excel (cannot promise, is a bit difficult to implement in Node.JS)

Requirements

Utility requires installation of following as a pre-requisite (along with download link)

- Windows 10

- Tally.ERP 9 / Tally Prime

- Node JS

- Database Server (supports any of below)

Free version of all the above Database Servers are available for download. Also all of them are available on popular cloud like Microsoft Azure / Google Cloud Platform / Amazon Web Services

Preferred versions:

- SQL Server - version 2019

- MySQL - version 8.x

- PostgreSQL - 11.x or above

Note: Utility and SQL Queries for reports are deviced considering latest version of the above Database Server. Running it in lower version might hamper few of the functionalities, as some SQL syntax were introduced in latest version

Download

Database Loader Utility is portable, and does not have a setup wizard like we find for software installation. Zip archive of utility can be downloaded from below link. Kindly use open-source & free software 7-zip file archiver to un-compress utility archive.

Download Database Loader Utility

Also, it is a commandline utility having no window interface (to keep it minimal and faster)

Tally XML Server

Tally has in-built XML Server capability, which can import/export data in/out of Tally. This utility sends export command to Tally along with report specification written in TDL (Tally Developer Language) in XML format. In response, Tally returns back the requested data (in CSV format), which is then imported into Database Server. This utility works for both Tally.ERP 9 and Tally Prime both. Kindly ensure that XML Server of Tally is enabled (one-time). Avoid running Tally Prime & ERP.9 both at same time, as by default, both of them would try to block port numbert 9000 for XML Server. If you still need to run both simulteneously, change the port number of Tally Prime, or you can also disable XML Server of any of the Tally instance.

Tally.ERP 9

- Gateway of Tally > Configure (F12) > Advanced Configuration

- Set Tally.ERP 9 is acting as Both

Tally Prime

- Help (F1) > Settings > Connectivity

- Client/Server configuration

- Set TallyPrime is acting as Both

Database Creation

Database first needs to be created and then Tables needs to be created in which data from Tally will be loaded, before running utility. File database-structure.sql contains SQL for creating tables of database. Just ensure to create database using any of GUI Database Manager. That database name should be updated in schema property of config.json. Open-source database editor available freely are

- SQL Server Management Studio (SQL Server)

- Azure Data Studio (SQL Server)

- pgAdmin (PostgreSQL Server)

- MySQL Workbench (MySQL Server)

- Heidi SQL (SQL Server / MySQL / MariaDB / PostgreSQL)

Note: Database structure creation SQL script for PostgreSQL is avilable inside platform/postgresql folder of project. In future, database technology-wise separate SQL Script will be available for individual technologies.

Utility support import into database server installed and hosted on

- Same PC where Tally is

- On any machine on LAN

- Virtual Private Server

- Cloud Database [ Microsoft Azure / Amazon Web Services (AWS) / Google Cloud Platform / Oracle Cloud ]

Configuration Setup

Utility contains a file config.json containing database connection and tally related settings.

Database Connection

Database Connection credentials needs to be set in the file in database section of config.json. A sample configuration file

SQL Server

"database": {

"technology": "mssql",

"server": "localhost",

"port": 1433,

"ssl": false,

"schema": "<database_name>",

"username": "sa",

"password": "<your_password>",

"loadmethod": "insert"

}MySQL / MariaDB Server

"database": {

"technology": "mysql",

"server": "localhost",

"port": 3306,

"ssl": false,

"schema": "<database_name>",

"username": "root",

"password": "<your_password>",

"loadmethod": "insert"

}PostgreSQL Server

"database": {

"technology": "postgres",

"server": "localhost",

"port": 5432,

"ssl": false,

"schema": "<database_name>",

"username": "postgres",

"password": "<your_password>",

"loadmethod": "insert"

}| Settings | Value |

|---|---|

| technology | mssql: Microsoft SQL Server mysql: MySQL Server or MariaDB Server postgres: PostgreSQL Server csv: Generate CSV dump for further import (below parameters of database connection are dummy when CSV setting is applied) |

| server | IP Address of PC on which Database Server is hosted (localhost = same machine) |

| port | Port number on which Database Server is listening mssql: Default port is 1433 mysql: Default port is 3306 postgres: Default port is 5432 |

| ssl | true: Secured (to be used only if Database Server is on Cloud) false: Unsecured [default] (to be used when Database Server is on same machine / within LAN / within VPN) Supported for mssql / postgres only |

| schema | Database name in which to insert data |

| username | Username mssql: Default user is sa mysql: Default user is root postgres: Default user is postgres |

| password | Password for corresponding user. It is set during installation of Database Server. Note: Trusted Login (password-less) of SQL Server not supported by this utility |

| loadmethod | insert: loads rows in database tables using SQL query with multiple rows. This is most compatible method which works everywhere (Compatibility: High / Performance: Slow ) file: loads rows in database table using file based loading method. This method works only when database server and utility is running on same machine. So this method is not compatible with Cloud databases (Compatibility: Low / Performance: Fast ) |

Kindly override configurations, as per respective Database Server setup

Note: Utility supports SQL Server connection via TCP/IP port only. This option is disabled by default, which needs to be enabled. Kindly refer FAQ where it has been elaborated in detail along with screenshots (applicable for Microsoft SQL Server only)

Tally Options

Few of the options of Tally may need modification, if default settings of Tally are specifically over-ridden (due to port clashes). A sample configuration of tally is demonstrated as below

"tally": {

"server": "localhost",

"port": 9000,

"master": true,

"transaction": true,

"fromdate" : "20190401",

"todate" : "20200331",

"company": ""

}| Setting | Value |

|---|---|

| server | IP Address or Computer Name on which Tally XML Server is running (localhost is default value equivalent of IP Address 127.0.0.1). Change this if you need to capture data from a Tally running on different PC on your LAN |

| port | By default Tally runs XML Server on port number 9000. Modify this if you have assigned different port number in Tally XML Server settings (typically done when you want run Tally.ERP 9 and Tally Prime both at a same time parallely, where you will be changing this port number) |

| master / transaction | true = Export master/transaction data from Tally (default) false = Skip master/transaction data |

| fromdate / todate | YYYYMMDD = Period from/to for export of transaction and opening balance (in 8 digit format) auto = This will export complete transactions (irrespective of selected Financial Year) from Tally by auto-detection of First & Last date of transaction |

| company | Name of the company from which to export data or leave it blank to export from Active company of Tally (this parameter is intended for use when user needs to export data from specific company irrespective of it is active or not. Setup a powershell script to run a loop when multiple companies needs to be targeted one-by-one) |

Steps

- Create database in Database Server along with tables inside it (use database-structure.sql to create tables) [ignore if already created]

- Ensure options are properly set in config.json

- Ensure Tally is running and target company from which to export data is Active

- Run the file run.bat

- Commandline window will open, attempt to import data and will get closed after import/error

- Check for import status in import-log.txt file and errors (if any) in error-log.txt file

Tutorial

YouTube tutorial video are availabe (link below)

SQL Server

MySQL Server

Tally Export Config

Certain times we may require to add or remove any of the fields from export (to add user defined fields created by TDL Developer in Tally customisations). So this export specification is defined in tally-export-config.yaml file in YAML format. This file is divided into Master and Transaction, containing multiple tables in it. To understand structure and nomenclature, an example of this is given below

master:

- name: mst_group

collection: Group

fields:

- name: guid

field: Guid

type: textname: mst_group (Database Table name)

collection: Group (Tally Collection name)

name: guid (Database Column name)

field: Guid (Tally field name)

type: text / logical / date / number / amount / quantity / rate / custom

amount: Credit = positive / Debit = negative

quantity: In Quantity = positive / Out Quantity = negative

rate: Rate type of data (is always positive)

custom: Any custom expression in TDL format

Commandline Options

Utility is completely driven by configuration specified in config.json file. In case if specific configuration(s) needs to be overriden without changing it in config file, it can be done using commandline switches as follows:

node ./dist/index.js [[--option 01] [value 01] [--option 02] [value 02] ...]option: Syntax for option is --parent-child , parent is the main config name followed by child is the sub-config name in config.json . (Refer example for further explanation)

value: Value of config for corresponsing option

Examples:

Scenario 01: We have created separate databases for individual clients & currently need to load data of client in database named airtel in SQL Server, with rest of the settings unchanged, then below is the command for desired output

node ./dist/index.js --database-schema airtelScenario 02: We need to set from & to date dynamically (without changing config file), lets say FY 2019-20 Q3, then below is the command for that

node ./dist/index.js --tally-fromdate 20191001 --tally-todate 20191231Scenario 03: You have a tally company named Reliance Industries, created database of it by name client_reliance and want to export FY 2019-20 Then below is the command for that

node ./dist/index.js --tally-fromdate 20191001 --tally-todate 20191231 --tally-company "Reliance Industries" --database-schema client_relianceScenario 04: You are using Amazon Web Services (AWS) as database server, and have multiple servers for each client group of companies with multiple separate database for each subsidiary company. You intend to sync data for FY 2020-21 from Tally into Jio company database residing in Reliance server hosted at Mumbai region data centre of AWS. Command will be

node ./dist/index.js --tally-fromdate 20200401 --tally-todate 20210331 --database-server database-1.reliance.in-mumbai-1.rds.amazonaws.com --database-schema jioLogs

Utility creates log of import specifying how many rows in each tables were loaded. This log can be found in import-log.txt file. If any error occurs, then details of error(s) are logged in error-log.txt file

Reports

Project hosts library of SQL Queries to generate some popularly used reports, required for preparing Dashboards in Microsoft Power BI and Google Data Studio. Due to minor difference in SQL syntax & functions of SQL Server and MySQL, SQL for same report is provided for both of these Server platforms.

Author actively supports Google BigQuery (fully cloud-based solution of Google), and even shared equivalent SQL query for BiQuery. BigQuery acts as a input for Google Data Studio Dashboards and also supports easy export of tabular output to Google Sheets. Interested users can sign-up for a free Google Cloud account and use BigQuery with free daily limits

Power BI Template

For further data analysis and dashboard preparation, users can load data into Power BI. Template files containing table structure and M language scripts to load data are already in-built into the Power BI template file. Below are the files available

- CSV File Loading: This template loads CSV files generated by the utility into Power BI data model. Just set TargetFolder parameter as documented in table

Download tally-powerbi-csv.pbix

| Parameter | Value |

|---|---|

| TargetFolder | Folder path of CSV files (e.g: D:\utility\csv\ ) |

| Host | URL of Tally XML Server (default: http://localhost:9000) |

| TargetCompany | Name of Target company with special characters escaped in XML (default: ##SVCurrentCompany represents active company) |

| FromDate | Period From in DD-MM-YYYY format (dependent on Region settings of your PC) |

| ToDate | Period To in DD-MM-YYYY format (dependent on Region settings of your PC) |

Note: XML Server loading method of template attempts to fetch Tally XML Server, whenever Refresh button is pressed. This process is CPU and memory intensive, consuming large amount of RAM. Power BI too consumes high RAM during this phase of data loading. So, both the applications running together may drain out RAM, choking other operations of PC. So it is recommended to use CSV loading method. Also ensure to close Tally after data pull operation, as it lacks functionality to dispose blocked memory after export of data.

Google BigQuery Database CSV loading

Utility supports pure CSV data dumping by setting technology parameter as csv in config.json. CSV files are generated and stored in csv folder. These files can be imported into BigQuery by creating & uploading these into a bucket in Cloud Storage. BigQuery can then import & create table from these files. Bash Shell Script file bigquery-bulk-load.sh has been provided in the project to automate import of files from Cloud Storage bucket to BigQuery dataset. Video tutorial for the same will be made available shortly

Develop Further

If you intend to develop and modify this utility further to next level for your use-case, then you can clone this project from Git and run the project as below

- Clone the project repository

- Install Visual Studio and open the project repository folder

- Install required npm packages by following command npm install

- Install global instance of typescript compiler available on Node Package Manager by following command npm install typescript -g

- Run the project in Visual Studio code (launch.json file already provided in .vscode folder to run it with required settings)

License

This project is under MIT license. You are free to use this utility for commercial & educational purpose.

Contact

Project developed & maintained by: Dhananjay Gokhale

For any query email to dhananjay1405@gmail.com or Whatsapp on (+91) 90284-63366

Credits

Bug fixes or enhancements from various contributors

- CA Venugopal Gella - Fixing of Tally Prime 2.0.1 export issue

Known Issues

- Data loading in Microsoft SQL Server (technology: mssql) via loadmethod as insert does not support pushing of unicode characters (i.e. Indian language characters & extended characters beyond ASCII table). Kindly use file method for this if at all required. You will notice question marks instead of these unicode characters in database. For rest of the technology, this limitation does not apply.

- When multiple companies are selected in Tally & specific company name is specified in config.json, it has been observed that in a rare case (especially on Windows Server), Tally fails to fetch data from that target company & internally produces an error that specified company is not loaded.

- It has been observed that sometimes when Tally remain running for several days on PC then in a rare case Tally fails to return back updated / latest data (especially on Windows Server) & you may have to restart Tally.

- If you have configured automatic sync of data via Windows Task Schedular, then make sure you don't log-off, but just disconnect as Tally is graphical based software.

Frequently Asked Question

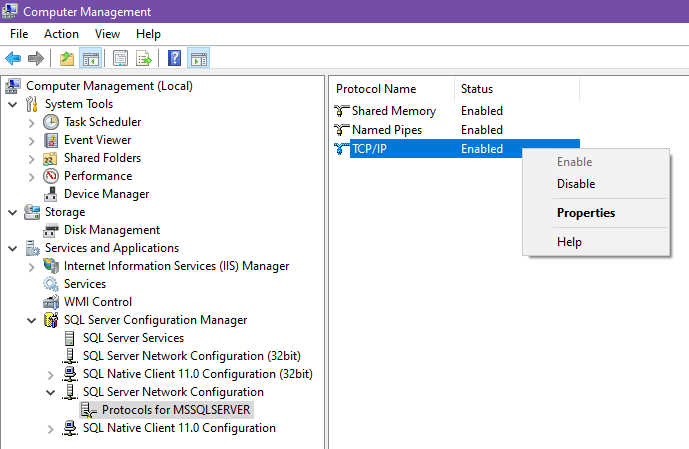

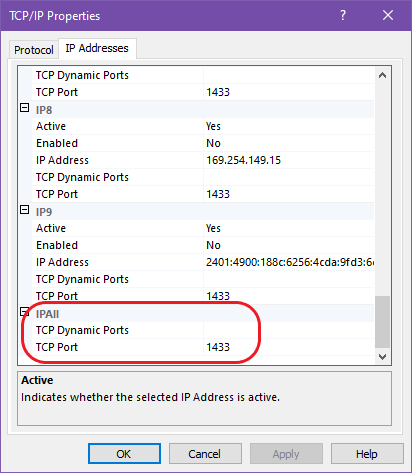

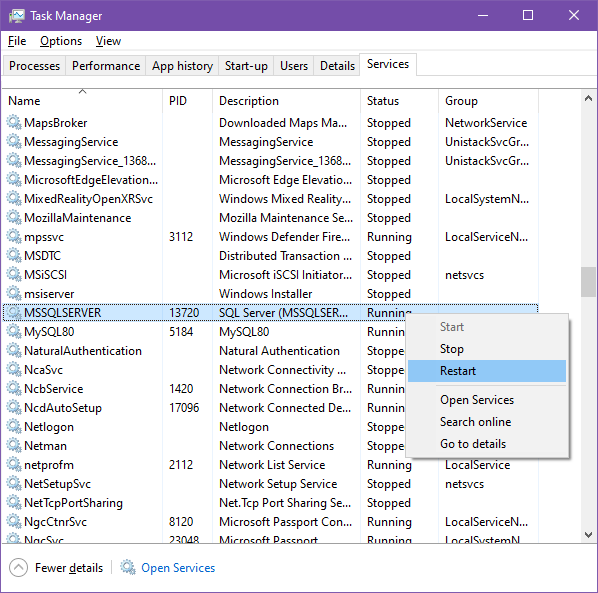

Ques: How to enable SQL Server connectivity via TCP/IP port for Microsoft SQL Server ?

Ans: Utility supports connection to MS SQL Server via TCP/IP port only. It does not support connecting through named instance like PC-NAME\SQLEXPRESS. This setting can be enabled using below steps:

- Launch Computer Management (just type it in Windows Search bar)

- Open: Services and Applications > SQL Server Configuration Manager > SQL Server Network Configuration > Protocols for MSSQLSERVER > TCP/IP

- Right Click and Enable it

- Double Click (or right click and open Properties) to open TCP/IP Properties window

- Goto IP Addresses tab > IPAll > and set

- Restart SQL Server: Task Manager > Services > MSSQLSERVER > right click > Restart

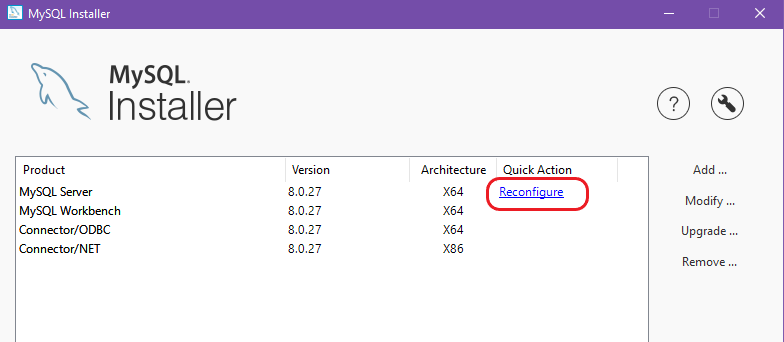

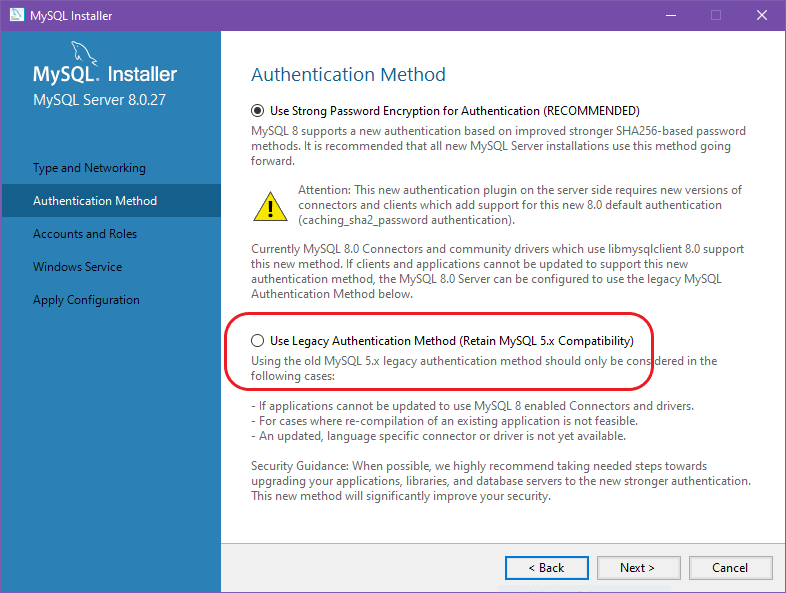

Ques: How to enable MySQL Server invalid authentication mode error ?

Ans: In MySQL 8.x new authentication method was introduce. Currently project's MySQL client driver does not support that method. So you will have to modify your MySQL Server settings to Legacy Authentication Method. For windows PC follow steps as below:

Release History

Version: 1.0.14 [28-Feb-2022]

Fixed:

- Commandline config --tally-fromdate and --tally-todate was not effecting from/to date while sending export request to Tally, now corrected

Version: 1.0.13 [21-Feb-2022]

Fixed:

- Due to undocumented breaking changes in Tally Prime 2.0.1 export functionality was affected, now restored with fresh code changes

- In few cases, utility was not getting list of open companies from Tally Prime properly, now fixed

- PostgreSQL SSL connection was being rejected for self-signed certificates, now fixed by setting ignore flag

Version: 1.0.12 [20-Nov-2021]

Added:

- Support of Postgre SQL Database Server Server.

- SSL (Secured Socket Layer) database connections for secure exchange of data especially for cloud database (PostgreSQL / SQL Server).

Fixed:

- Use of single quotes in INSERT statement, string type values, instead of double quotes, to bring uniformity of SQL Statements across multiple RDBMS platforms.

- CSV folder was used as parking space for intermediate process in database import method. Now the folder is deleted once data is imported successfully into database.

Version: 1.0.11 [07-Nov-2021]

Fixed:

- Introduced specific error message related to database and connectvity.

- Reduced repetation of call stack in error messages to once for improved error diagnostic.

Version: 1.0.10 [10-Oct-2021]

Added:

- 2 new fields in trn_voucher table. Party field was added for easy determination of Sundry Debtor / Creditor in any transaction and optimized aggregation.

Version: 1.0.9 [08-Aug-2021]

Added:

- 2 new fields of amount in forex and currency in trn_accounting table. For Indian currency transaction, this field amount will be same as amount field.

Fixed:

- Crashing of Tally in the case when compound unit of measurement was used. TDL extraction expression is fixed.

- Failure in fetching data from specific company, when target company name was specified in config.json . Mechanism was failing when company had special characters like dash, brackets in its name.

- Default date in SQL queries in demo report section, changed to FY 2020-21.

Version: 1.0.8 [01-Jul-2021]

Added:

- Rate column in stock item and inventory transaction table

- Added 2 new tables for opening batch & bill allocation (useful when company is split and there are pending bills as on split date)

- Introduced compatibility for field containing rate type of data in Tally. YAML export configuration now supports type rate

Version: 1.0.7 [23-Jun-2021]

Added:

- Table named mst_gst_effective_rate containing rate of GST applicable on different stock item on multiple dates

- Email and Bank details related fields into mst_ledger table

- Power BI template for importing CSV files into Power BI model, is made available

Fixed:

- Exporting date type fields with blank value from Tally was causing issue while import. So now empty date field will be treated as NULL for database. During CSV export, same fields will remain as blank

- UTF-8 BOM (Byte Order Mark) is emmited to CSV files, when choosing CSV export in config.json so Without BOM, any field containing Unicode value was not decoded properly by excel

Version: 1.0.6 [11-Jun-2021]

Fixed:

- Parent nature field of Tally containing value Primary, is converted to value as blank string, by custom TDL expression in YAML. In few tables this expression was missing, which is now fixed

Version: 1.0.5 [05-Jun-2021]

Added:

- YAML format tally tables & fields definition specification file tally-export-config.yaml, for easy expansion of User Defined Fields as utility now aims for easy export of fields created by TDL Developers customising Tally. TDL XML is created on-the-fly by reading this specification file.

- Timestamps in import-log.txt file, to know exactly when utility was run

- No of seconds it took for each table of Tally to export. This information might be helpful when user wants to skip any heavy tables of Tally from export, by removing it from tally-export-config.yaml specification file for quick export

Removed:

- XML folder containing specification of Tally tables and fields, as this XML is now automatically created on-the-fly. Also table-info.json file was removed, as tally-export-config.yaml already contains equivalent name of fields in database for corresponding Tally fields.

- In config.json file, batch mode of transaction export where we could specify it full or daily is removed, as whole logic is now revamped. Revamping of logic resulted in longer time to export data from Tally, but significant lower usage of RAM while export (as high RAM usage by Tally Prime was hindering other process in few cases)

Version: 1.0.4 [17-May-2021]

Added:

- Support for selecting specific company from which to export data (using Powershell script loop can be setup to automate this for multiple companies)

- Voucher Reference number field added

- 5 more tables added related to cost centre, bill reference, batch allocation

Version: 1.0.3 [24-Apr-2021]

Added:

- SQL Queries for generating reports in a folder named reports. Due to difference in SQL functions names and syntax nomenclature in MySQL and MS-SQL, query for same report is made available for both database technologies

- Option to simply generate CSV files dump and then exit utility, by setting technology as csv in config.json. This option is introduced with an aim of transferring these CSV dumps to PC when Database Server is not directly accessible via Network (LAN/Internet). Also, these files can be used for Google BigQuery schema tables loading for cloud-based reporting

Fixed:

- CSV file dump, adopted ISO date format of YYYY-MM-DD instead of YYYYMMDD for easy detection of dates by Database Server

- Database table trn_voucher field date was erronously assigned datetime data type instead of date now fixed

Version: 1.0.2 [12-Apr-2021]

Added:

- A configuration option batch has been added to handle cases where export of large number transaction rows from Tally in a single HTTP request results in freezing of Tally (due to huge amount of RAM usage). So, assigning value daily to this settings exports transactions (or vouchers) data day-by-day into CSV file and then pushes it to Database at once.

- New fields of Tally related to GST (HSN Code, type of supply, etc) have been added in stock item table

- 3 more flag type of fields added to voucher table to determine if voucher is of type accounting / inventory / order. These fields speed-up SQL Query for calculating of closing balance as on date

Fixed:

- port and server settings for Tally section in the file config.json were not effected if default value was overriden. This issue is now fixed

- In file based loading mode, first row of CSV file containing header was even treated as data row. So modified that query to skip 1 row from top

- SQL Server does not accept text enclosed in double quotes in SQL query for row insert by default. Due to this file based bulk loading of data failed for MS SQL Server . So modified SQL query for MS SQL Server where QUOTED_IDENTIFIER flag is set to OFF before the SQL statement

Version: 1.0.1 [06-Apr-2021]

Added:

- Header column in CSV files is introduced for easy viewing of CSV files from Excel to know exact error. Also these files can easily be imported, if database server is in protected PC with no external access

Fixed:

- \ character in text field generated invalid CSV files, interrupting database loading. Proper escaping of backslah is now fixed

- Character limit for PAN(10) & GST Number(15) field increased in database-structure.sql as older versions of Tally were found to be accepting extra characters

- Closing Stock values for trn_closingstock_ledger table were missing 0 if no amount was specified for corresponding date. So now fixed with 0 in amount

- Commandline process exits with code of 0 = Success / 1 = Error, so that any other dependent programs/scripts can utilise this exit code for troubleshooting

Version: 1.0.0 [26-Mar-2021]

- Utility released