AWS Cheat Sheet

Table of Contents

Storage

Compute

Networking & Content Delivery

Database

Application Integration

Security, Identity & Compliance

AWS Identity & Access Management (AWS IAM)

AWS Resource Access Manager (AWS RAM)

Cryptography & PKI

AWS Key Management Service (AWS KMS)

Management & Governance

Analytics

Developer Tools

Others

S3

- Object based storage (files)

- Files can be from 0 bytes to 5 TB

- Bucket web address:

https://s3-<Region name>.amazonaws.com/<bucketname> e.g. https://s3-eu-west-1.amazonaws.com/myuniquename

- Bucket name has to be unique across all regions

- Read after write consistency for PUTs of new objects

- Eventual consistency for overwrite PUTs and DELETEs

- Designed for -

- 99.99% availability for S3 Standard, Glacier, Glacier Deep Archive

- 99.9% for S3 - IA, Intelligent Tiering

- 99.5% for S3 One Zone - IA

- Amazon guarantees availability (SLA) -

- 99.9% for S3 Standard, Glacier, Glacier Deep Archive

- 99% for S3 - IA, S3 One Zone - IA, Intelligent Tiering
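
To put the availability figures above in perspective, this minimal sketch converts an availability percentage into the downtime it permits per year. The function name and the 365-day year are assumptions made for illustration only.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # assumption: non-leap year


def allowed_downtime_minutes(availability_percent: float) -> float:
    """Return the yearly downtime, in minutes, implied by an availability percentage."""
    unavailable_fraction = (100.0 - availability_percent) / 100.0
    return unavailable_fraction * MINUTES_PER_YEAR


if __name__ == "__main__":
    # Percentages taken from the lists above.
    for label, pct in [
        ("Designed, S3 Standard", 99.99),
        ("SLA, S3 Standard", 99.9),
        ("SLA, S3 - IA", 99.0),
    ]:
        print(f"{label}: about {allowed_downtime_minutes(pct):.0f} minutes per year")
```

Under these assumptions, 99.99% works out to roughly 53 minutes per year, while 99% allows about 3.65 days.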

- Amazon guarantees durability of 99.999999999% (11 * 9's) for all storage classes

- Replicated to >= 3 AZ (except S3 One Zone IA)

- S3 Standard - Frequently accessed

- S3 Infrequently Accessed (IA) - Provides rapid access when needed

- S3 Infrequently Accessed (IA) - Less storage cost but has data retrieval cost

- S3 One Zone IA - Data is stored in a single AZ + Retrieval charge

- S3 Intelligent Tiering - ML based - moves objects to different storage classes based on its learning about usage of the objects

- S3 Glacier - Archive + Retrieval time configurable from minutes to hours + Retrieval charge (Separate service integrated with S3)

- S3 Glacier Deep Archive - Retrieval time of 12 hrs + Retrieval charge

- S3 Reduced Redundancy - Deprecated. Sustains loss of data in a single facility

- Minimum storage period

- Standard - NA

- Intelligent Tiering - 30 days

- Standard IA - 30 days

- One Zone IA - 30 days

- Glacier - 90 days

- Glacier Deep Archive - 180 days
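
A minimal sketch of what the minimum storage periods above mean for billing, assuming an object deleted early is still charged for the full minimum period. The table mirrors the list above; the function name is hypothetical.

```python
# Minimum storage duration in days, copied from the list above (0 = no minimum).
MIN_STORAGE_DAYS = {
    "STANDARD": 0,
    "INTELLIGENT_TIERING": 30,
    "STANDARD_IA": 30,
    "ONEZONE_IA": 30,
    "GLACIER": 90,
    "DEEP_ARCHIVE": 180,
}


def billable_storage_days(storage_class: str, days_stored: int) -> int:
    """Return the number of days charged, never less than the class minimum."""
    if days_stored < 0:
        raise ValueError("days_stored must not be negative")
    return max(days_stored, MIN_STORAGE_DAYS[storage_class])
```

For example, an object deleted from Glacier after 10 days would still be billed for 90 days under this model.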

- Charged based on

- Storage

- No. of Requests

- Storage Management (Tiers)

- Data Transfer

- Transfer Acceleration

- Cross Region Replication

- Cross Region Replication for High Availability or Disaster Recovery

- Cross Region Replication requires versioning to be enabled in both source and destination

- Cross Region Replication is not going to replicate

- File versions created before enabling cross region replication

- Delete marker

- Version deletions

- Cross Region Replication is asynchronous

- Cross Region Replication can replicate to buckets in different account

- Transfer Acceleration for reduced upload time

- Transfer Acceleration takes advantage of CloudFront's globally distributed edge locations and then routes data to the S3 bucket through Amazon's internal backbone network

- The bucket access logs can be stored in another bucket which must be owned by the same AWS account in the same region. For user information CloudTrail Data Events need to be configured

- Enabling logging on a bucket from the management console also updates the ACL on the target bucket to grant write permission to the Log Delivery group

- Encryption at Rest -

- SSE-S3 (Amazon manages key)

- SSE-KMS (Amazon + User manages keys)

- SSE-C (User manages keys)

- Client Side Encryption (User manages keys and encrypt objects)

- SSE-S3

- Server side encryption

- Key managed by S3

- AES 256 in GCM mode

- Must set header -

"x-amz-server-side-encryption":"AES256"

- SSE-KMS

- Server side encryption

- Key managed by KMS

- More control on rotation of key

- Audit trail on how the key is used

- Must set header -

"x-amz-server-side-encryption":"aws:kms"

- Prefer SSE-KMS over SSE-S3 to comply with PCI-DSS, HIPAA/HITECH, and FedRAMP industry requirements

- SSE-C

- Server side encryption

- Key managed by customer outside AWS

- HTTPS is a must

- Key to be supplied in HTTP header of every request

- Client Side Encryption

- Key managed by customer outside AWS

- Clients encrypt / decrypt data
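
The server-side encryption options above differ mainly in the request headers sent with each upload. The sketch below builds those headers as plain dictionaries. The function name is hypothetical, and the KMS key ID header and the three SSE-C headers are the standard S3 header names rather than something stated in this sheet.

```python
import base64
import hashlib
from typing import Dict, Optional


def sse_request_headers(
    mode: str,
    kms_key_id: Optional[str] = None,
    customer_key: Optional[bytes] = None,
) -> Dict[str, str]:
    """Return the encryption headers for a PUT request in the given SSE mode."""
    if mode == "SSE-S3":
        return {"x-amz-server-side-encryption": "AES256"}
    if mode == "SSE-KMS":
        headers = {"x-amz-server-side-encryption": "aws:kms"}
        if kms_key_id is not None:
            # Optional: select a specific KMS key instead of the default one.
            headers["x-amz-server-side-encryption-aws-kms-key-id"] = kms_key_id
        return headers
    if mode == "SSE-C":
        if customer_key is None or len(customer_key) != 32:
            raise ValueError("SSE-C needs a 256-bit (32-byte) key")
        key_md5 = hashlib.md5(customer_key).digest()
        return {
            "x-amz-server-side-encryption-customer-algorithm": "AES256",
            "x-amz-server-side-encryption-customer-key": base64.b64encode(customer_key).decode("ascii"),
            "x-amz-server-side-encryption-customer-key-MD5": base64.b64encode(key_md5).decode("ascii"),
        }
    raise ValueError(f"unknown mode: {mode}")
```

Because SSE-C puts the key itself in the headers of every request, it only makes sense over HTTPS, which matches the requirement listed above.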

- When encryption is enabled on an existing file, a new version will be created (provided versioning is enabled)

- Prefer default encryption settings over S3 bucket policies to encrypt objects at rest

- S3 Bucket Policy can be used to enforce upload of only encrypted objects. The policy will deny any PUT request that does not have the appropriate header

x-amz-server-side-encryption

- S3 Bucket Policy can be used to provide public read access to all files in the bucket instead of providing public access to each individual file

- S3 evaluates and applies bucket policies before applying bucket encryption settings. Even if bucket encryption settings are enabled, PUT requests without encryption information will be rejected if there are bucket policies to reject such PUT requests
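
A bucket policy that enforces encrypted uploads, as described above, could look roughly like the following sketch. It builds the policy document as a Python dictionary. The bucket name, statement IDs, and function name are hypothetical, and the choice of aws:kms as the required value is an assumption.

```python
import json


def deny_unencrypted_uploads_policy(bucket_name: str, required_sse: str = "aws:kms") -> dict:
    """Build a bucket policy that rejects PUTs lacking the expected encryption header."""
    resource = f"arn:aws:s3:::{bucket_name}/*"
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                # Header present but with the wrong value.
                "Sid": "DenyWrongEncryptionHeader",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": resource,
                "Condition": {
                    "StringNotEquals": {"s3:x-amz-server-side-encryption": required_sse}
                },
            },
            {
                # Header missing altogether.
                "Sid": "DenyMissingEncryptionHeader",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:PutObject",
                "Resource": resource,
                "Condition": {"Null": {"s3:x-amz-server-side-encryption": "true"}},
            },
        ],
    }


if __name__ == "__main__":
    # "example-bucket" is a placeholder name.
    print(json.dumps(deny_unencrypted_uploads_policy("example-bucket"), indent=2))
```

Note that with such a policy in place, uploads that rely only on the bucket's default encryption (and send no header) would be rejected, which is the behavior the last bullet above describes.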

- With SSE-KMS enabled, the KMS limits might need to be increased to avoid throttling of a lot of small uploads

- Versioning, once enabled, cannot be disabled. It can only be suspended

- Versioning is enabled at the bucket level

- If versioning is enabled, S3 can be configured to require multifactor authentication for

- permanently deleting an object version

- suspending versioning on the bucket

- Only root account can enable MFA Delete using CLI

- In a bucket with versioning enabled, even if the file is deleted, the bucket cannot be deleted using AWS CLI until all the versions are deleted

- When each individual file is given public access separately, uploading a new version of an existing file doesn't automatically give public access to the latest version (unless an S3 bucket policy exists to give public access)

- Lifecycle management rules (movement to different storage type and expiration) can be set for current version and previous versions separately

- Lifecycle management rules can be used to delete incomplete multipart uploads after a configurable no. of days

- Files are stored as key-value pair. Key is the file name with the entire path and value is the file content as a sequence of bytes

- Files larger than 5 GB must be uploaded using multipart upload

- Files larger than 100 MB should be uploaded using multipart upload

- Multipart upload advantages -

- Retry is faster

- Run in parallel to improve performance and utilize network bandwidth
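
To illustrate how a multipart upload divides a file, the sketch below plans the byte ranges for each part. The 100 MB default part size is taken from the recommendation above. The 5 MiB minimum part size and the 10,000-part cap are S3 limits not stated in this sheet, and the function name is hypothetical.

```python
from typing import List, Tuple

MIB = 1024 * 1024
MIN_PART_SIZE = 5 * MIB  # S3 minimum for every part except the last
MAX_PARTS = 10_000       # S3 maximum number of parts per upload


def plan_parts(total_size: int, part_size: int = 100 * MIB) -> List[Tuple[int, int]]:
    """Return an (offset, length) pair for each part of a multipart upload."""
    if total_size <= 0:
        raise ValueError("total_size must be positive")
    if part_size < MIN_PART_SIZE:
        raise ValueError("part_size is below the 5 MiB minimum")
    # Grow the part size when needed so the plan stays within MAX_PARTS.
    smallest_allowed = -(-total_size // MAX_PARTS)  # ceiling division
    part_size = max(part_size, smallest_allowed)

    parts = []
    offset = 0
    while offset < total_size:
        length = min(part_size, total_size - offset)
        parts.append((offset, length))
        offset += length
    return parts
```

Each (offset, length) pair can be uploaded independently, which is what makes the parallelism and the cheaper retries listed above possible: only a failed part needs to be resent.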

- S3 static website URL:

<bucket-name>.s3-website-<aws-region>.amazonaws.com or <bucket-name>.s3-website.<aws-region>.amazonaws.com

- Pre-signed URL allows users to get temporary access to buckets and objects

- S3 Inventory allows producing reports about S3 objects daily or weekly in a different S3 bucket

- S3 Inventory reports format can be specified and the data can be queried using Athena

- Storage class needs to be specified during object upload

- S3 Analytics, when enabled, generates reports in a different S3 bucket to give insights about the object usage and this can be used to recommend when the object should be moved from one storage class to another

- To host a static website, the S3 bucket must have the same name as the domain (example.com) or subdomain (www.example.com). The www.example.com bucket can redirect to the example.com bucket

- S3 notification feature enables the user to receive notifications when certain events happen in a bucket. S3 supports the following destinations

- Amazon SNS

- Amazon SQS

- AWS Lambda

- Supports at least 3,500 requests per second to add data and 5,500 requests per second to retrieve data, per prefix

- Amazon S3 Object Lock blocks deletion of an object for the duration of a specified retention period (WORM)

- Object Lock mode - Governance mode (allows deletion by user with appropriate IAM permissions), Compliance mode (even root account cannot delete)

- Object Lock - Legal Hold creates Object Lock for an indefinite period until explicitly removed

- Amazon S3 uses a combination of Content-MD5 checksums and cyclic redundancy checks (CRCs) to detect data corruption

- Objects uploaded or transitioned to S3 Intelligent-Tiering are automatically stored in the frequent access tier. S3 Intelligent-Tiering works by monitoring access patterns and then moving the objects that have not been accessed in 30 consecutive days to the infrequent access tier. If the objects are accessed later, S3 Intelligent-Tiering moves the object back to the frequent access tier

- Amazon S3 supports the following lifecycle transitions between storage classes using a lifecycle configuration:

- You can transition from the STANDARD storage class to any other storage class.

- You can transition from any storage class to the GLACIER or DEEP_ARCHIVE storage classes.

- You can transition from the STANDARD_IA storage class to the INTELLIGENT_TIERING or ONEZONE_IA storage classes.

- You can transition from the INTELLIGENT_TIERING storage class to the ONEZONE_IA storage class.

- You can transition from the GLACIER storage class to the DEEP_ARCHIVE storage class.

- The following lifecycle transitions are not supported:

- You can't transition from any storage class to the STANDARD storage class.

- You can't transition from any storage class to the REDUCED_REDUNDANCY storage class.

- You can't transition from the INTELLIGENT_TIERING storage class to the STANDARD_IA storage class.

- You can't transition from the ONEZONE_IA storage class to the STANDARD_IA or INTELLIGENT_TIERING storage classes.

- You can transition from the GLACIER storage class to the DEEP_ARCHIVE storage class only.

- You can't transition from the DEEP_ARCHIVE storage class to any other storage class.

- Other restrictions -

- From the STANDARD storage classes to STANDARD_IA or ONEZONE_IA - Objects must be stored at least 30 days in the current storage class

- From the STANDARD_IA storage class to ONEZONE_IA - Objects must be stored at least 30 days in the STANDARD_IA storage class
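
The transition rules above can be summarized as a lookup table. The sketch below encodes them as read from this section, including the 30-day restrictions. REDUCED_REDUNDANCY is left out for brevity, and the names are hypothetical.

```python
# Allowed lifecycle transitions, as listed above (source class -> target classes).
ALLOWED_TRANSITIONS = {
    "STANDARD": {"STANDARD_IA", "INTELLIGENT_TIERING", "ONEZONE_IA", "GLACIER", "DEEP_ARCHIVE"},
    "STANDARD_IA": {"INTELLIGENT_TIERING", "ONEZONE_IA", "GLACIER", "DEEP_ARCHIVE"},
    "INTELLIGENT_TIERING": {"ONEZONE_IA", "GLACIER", "DEEP_ARCHIVE"},
    "ONEZONE_IA": {"GLACIER", "DEEP_ARCHIVE"},
    "GLACIER": {"DEEP_ARCHIVE"},
    "DEEP_ARCHIVE": set(),
}

# Minimum days an object must spend in the source class before these transitions.
MIN_DAYS_BEFORE_TRANSITION = {
    ("STANDARD", "STANDARD_IA"): 30,
    ("STANDARD", "ONEZONE_IA"): 30,
    ("STANDARD_IA", "ONEZONE_IA"): 30,
}


def can_transition(source: str, target: str, days_in_source: int = 0) -> bool:
    """Check a lifecycle transition against the rules listed above."""
    if target not in ALLOWED_TRANSITIONS.get(source, set()):
        return False
    return days_in_source >= MIN_DAYS_BEFORE_TRANSITION.get((source, target), 0)
```

Read this way, the storage classes form a one-directional "waterfall": objects can only move toward colder classes, never back toward STANDARD.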

- Glacier Deep Archive retrieval options:

- Standard - default tier and lets you access any of your archived objects within 12 hours

- Bulk - lets you retrieve large amounts, even petabytes of data inexpensively and typically completes within 48 hours

- S3 Query in-place options -

- S3 Select - Simple query

- Amazon Athena - complex joins, window functions

- Amazon Redshift Spectrum - exabytes of unstructured data

- S3 Same Region Replication (SRR)

- S3 Batch Operations - Operations across multiple objects

- Access logs are not automatically encrypted

Glacier

- Archive size from 1B to 40TB

- Retrieval Options (Different from retrieval policies)

- Expedited (1 - 5 mins retrieval)

- Standard (3 - 5 hours)

- Bulk (5 - 12 hours)

- Object (in S3) == archive (in Glacier)

- Bucket (in S3) == vault (in Glacier)

- Archive files can be up to 40 TB (note: more than S3)

- An archive can represent a single file or you may choose to combine several files to be uploaded as a single archive

- Each vault has ONE vault policy & ONE lock policy

- Vault Policy - similar to S3 bucket policy - restricts user access

- Lock Policy - immutable - once set cannot be changed

- WORM Policy - write once read many

- Forbid deleting an archive if it is less than 1 year (configurable) = regulatory compliance

- Multifactor authentication on file access

- Files retrieved from Glacier will be stored in Reduced Redundancy Storage or S3 Standard IA class for a specified number of days

- For faster retrieval from Glacier based on Retrieval Options, Capacity Units may need to be purchased

- Amazon S3 Glacier automatically encrypts data at rest using Advanced Encryption Standard (AES) 256-bit symmetric keys

- Glacier range retrieval (byte range) is charged as per the volume of data retrieved

- In a single Glacier upload, an archive of maximum 4GB (note 1GB less than S3) size can be uploaded

- Any Glacier upload above 100 MB should use multipart upload (note same as S3)

- A Glacier vault can be deleted only when all its content archives are deleted

- Glacier allows the user or application to be notified through SNS when the requested data becomes available

- Bucket access policy (for S3) or Vault access policy (for Glacier) are resource based policies (directly attached to a particular resource - vault/bucket in this case), whereas IAM policies are user based policies

- One Vault access policy can be attached to each Vault

- One Glacier retrieval policy per region

- S3 Glacier Select -

- Allows selecting a subset of rows and columns using SQL without retrieving the entire file

- Joins and subqueries not allowed

- Files can be compressed with GZIP or BZIP2

- Works with file format CSV, JSON, Parquet

- Works with all 3 retrieval options - Expedited, Standard & Bulk

- Glacier inventory (of available objects) is updated every 24 hours - no real time data

- The archives cannot be uploaded from the S3 Glacier management console directly

CloudFront

- Content Delivery Network (CDN) - reduced latency and reduced load on server

- Reasons for good performance -

- Cache content at edge location POP (Point of Presence)

- Regional edge caches with larger caches than POP and holding less popular contents between POP and origin server

- Data transfer over Amazon's backbone network between the origin server and the edge location

- Persistent connections with the origin server

- Default caching behavior -

- period - 24 hours

- Don't cache based on caching headers

- You can use the Cache-Control and Expires headers to control how long objects stay in the cache. Settings for Minimum TTL, Default TTL, and Maximum TTL also affect cache duration

- After a file expires in cache, subsequent edge location requests are forwarded to the origin server. The response from the origin -

- 304 status code (Not Modified), if the cache has the latest version

- 200 status code (OK) and the latest version of the file, if the cache doesn't have the latest version

- By default, CloudFront doesn't automatically compress contents

- To access the contents from own domain, say www.example.com

- Add a CNAME in CloudFront for www.example.com

- Add a CNAME in DNS to route traffic to the CloudFront for a query against www.example.com

- Choose a certificate to use that covers the alternate domain name. The list of certificates can include any of the following:

- Certificates provided by AWS Certificate Manager (ACM)

- Certificates that you purchased from a third-party certificate authority and uploaded to ACM

- Certificates that you purchased from a third-party certificate authority and uploaded to the IAM certificate store

- Origin server could be S3, EC2, ELB or any external server

- A distribution can have a maximum of 10 origin servers (soft limit)

- Video content -

- HTTP / HTTPS

- Apple HTTP Live Streaming (HLS)

- Microsoft Smooth Streaming

- RTMP

- Adobe Flash multimedia content

- HTTP / HTTPS

- Origin Access Identity (OAI) is a user used by CloudFront to access the S3 files

- S3 bucket policy gives access to the OAI, thus preventing users from bypassing CloudFront and accessing the S3 files directly

- CloudFront access logs can be stored in an S3 bucket - contains detailed information about every user request that CloudFront receives

- Supports SNI (Server Name Indication - a TLS extension). This allows CloudFront (also ELB) distributions to support multiple TLS certificates

- SNI enables to serve multiple websites with different domain and certificates from the same IP and port. The webserver (e.g. Apache web server) can be configured to have separate document root for each domain (certificate)

- Invalidation requests to remove something from the cache are chargeable - removes both from regional edge cache and POP

- Avoid invalidation request charges and unpredictable caching behavior using version numbers in file names or directory names for changes to take effect immediately (Only invalidation of index.html might be required)

- An invalidation request path that includes the * wildcard counts as one path even if it causes CloudFront to invalidate thousands of files

- CloudFront supports Field Level Encryption, so that the sensitive data can only be decrypted and viewed by a few specific components or services that have access to the private key

- To use Field Level Encryption, the specific fields and encryption public key need to be configured in CloudFront

- CloudFront uses algorithm RSA/ECB/OAEPWithSHA-256AndMGF1Padding for Field Level Encryption

- Use signed URLs for the following cases:

- RTMP distribution. Signed cookies aren't supported for RTMP distributions

- Restrict access to individual files, for example, an installation download for your application

- Users are using a client (for example, a custom HTTP client) that doesn't support cookies

- Use signed cookies for the following cases:

- Provide access to multiple restricted files, for example, all of the files for a video in HLS format or all of the files in the subscribers' area of a website

- You don't want to change your current URLs

- Charges are applicable for -

- Serving contents from edge locations

- Transferring data to your origin, which includes DELETE, OPTIONS, PATCH, POST, and PUT requests

- HTTPS requests

- Field level encryption

- Price class - collection of edge locations for the purpose of controlling cost

- Contents are served only from the edge locations of the selected price class

- Default price class includes all edge locations including the expensive ones

- If S3 is used as an origin server, the bucket name should be in all lowercase and cannot contain space

- For a given distribution, multiple origins can be configured including both S3 and HTTP servers (EC2 / external servers)

- The default cache behavior will cause CloudFront to get objects from one of the origins only. Hence a separate cache behavior needs to be configured for each origin specifying which URL path will be routed to which origin

- Cache behavior - CloudFront does not consider query strings or cookies when evaluating the path pattern

- Cache behavior - CloudFront caches responses to GET and HEAD requests and, optionally, OPTIONS requests. CloudFront does not cache responses to requests that use the other methods

- GET, HEAD, OPTIONS, PUT, POST, PATCH, DELETE: You can use CloudFront to get, add, update, and delete objects, and to get object headers. In addition, you can perform other POST operations such as submitting data from a web form

- Cache behavior - Cache Based on Selected Request Headers

- None (improves caching) – CloudFront doesn't cache your objects based on header values

- Whitelist – CloudFront caches your objects based only on the values of the specified headers. Use Whitelist Headers to choose the headers that you want CloudFront to base caching on

- All – CloudFront doesn't cache the objects that are associated with this cache behavior

- Cache behavior - CloudFront can cache different versions of your content based on the values of query string parameters. Options are:

- None (Improves Caching) - origin returns the same version of an object regardless of the values of query string parameters

- Forward all, cache based on whitelist - if your origin server returns different versions of your objects based on one or more query string parameters. Then specify the parameters that you want CloudFront to use as a basis for caching

- Forward all, cache based on all - if your origin server returns different versions of your objects for all query string parameters
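
The three query string options above can be pictured as different ways of deriving a cache key from a request URL. The sketch below is a conceptual model only, not CloudFront's actual implementation. The function name, mode names, and example host are hypothetical.

```python
from typing import Iterable
from urllib.parse import parse_qsl, urlencode, urlsplit


def cache_key(url: str, mode: str = "none", whitelist: Iterable[str] = ()) -> str:
    """Derive a simplified cache key from a URL under one of the three options."""
    parts = urlsplit(url)
    params = parse_qsl(parts.query, keep_blank_values=True)
    if mode == "none":
        kept = []  # query strings are ignored entirely
    elif mode == "whitelist":
        allowed = set(whitelist)
        kept = [(name, value) for name, value in params if name in allowed]
    elif mode == "all":
        kept = params  # every parameter distinguishes cached versions
    else:
        raise ValueError(f"unknown mode: {mode}")
    query = urlencode(kept)
    return parts.path + ("?" + query if query else "")
```

In this model, two requests that produce the same key would be served from the same cached object, so the fewer parameters that enter the key, the higher the cache hit ratio. That is why "None" is labelled as improving caching.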

- Supports specifying custom error page based on HTTP response code

- Supports WebSocket

- You cannot invalidate objects that are served by an RTMP distribution

- Using CloudFront can be more cost effective if your users access your objects frequently because, at higher usage, the price for CloudFront data transfer is lower than the price for Amazon S3 data transfer

- If distribution is configured to compress files, CloudFront determines whether the file is compressible:

- The file type must be one that CloudFront compresses

- The file size must be between 1,000 and 10,000,000 bytes

- The response must include a Content-Length header so CloudFront can determine whether the size of the file is in the range that CloudFront compresses

- The response must not include a Content-Encoding header

- If you configure CloudFront to compress content, CloudFront removes the ETag response header from the files that it compresses

- ETag header helps to determine whether the edge cache has the latest file. However, after compression the two versions are no longer identical. As a result, when a compressed file expires and CloudFront forwards another request to your origin, the origin always returns the file to CloudFront instead of an HTTP status code 304 (Not Modified)

- When configured for compression, CloudFront compresses files in each edge location. It doesn't compress files when

- the file is already in edge locations

- the file is expired but origin returns HTTP status code 304
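
The compressibility checks described above can be sketched as a single predicate over the origin's response headers. The size bounds come from the list above. The set of content types is a small illustrative sample rather than CloudFront's full list, and the function name is hypothetical.

```python
from typing import Mapping

# Illustrative sample only; CloudFront documents its own list of compressible types.
COMPRESSIBLE_TYPES = {"text/html", "text/css", "application/javascript", "application/json"}
MIN_SIZE = 1_000
MAX_SIZE = 10_000_000


def is_compressible(response_headers: Mapping[str, str]) -> bool:
    """Apply the four checks listed above to an origin response."""
    headers = {name.lower(): value for name, value in response_headers.items()}

    # 1. The file type must be one that is compressed.
    content_type = headers.get("content-type", "").split(";")[0].strip().lower()
    if content_type not in COMPRESSIBLE_TYPES:
        return False
    # 2. The response must not already carry a Content-Encoding header.
    if "content-encoding" in headers:
        return False
    # 3. A Content-Length header must be present...
    length = headers.get("content-length", "").strip()
    if not length.isdigit():
        return False
    # 4. ...and fall inside the supported size range.
    return MIN_SIZE <= int(length) <= MAX_SIZE
```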

- You might not find the imported certificate or ACM certificate if - (Note - IAM certificate store supports ECDSA):

- The imported certificate is using an algorithm other than 1024-bit RSA or 2048-bit RSA.

- The ACM certificate wasn't requested in the same AWS Region as the load balancer or CloudFront distribution

- To ensure that users can access content only through CloudFront, change the following settings in the CloudFront distributions:

- Origin Custom Headers - Configure CloudFront to forward custom headers to your origin (headers should be rotated periodically). Origin server to deny requests not containing correct header

- Viewer Protocol Policy - Configure your distribution to require viewers to use HTTPS to access CloudFront

- Origin Protocol Policy - Configure your distribution to require CloudFront to use the same protocol as viewers to forward requests to the origin (This ensures the headers will remain encrypted)

- If you want CloudFront to cache different versions of your objects based on the user device, configure CloudFront to forward the applicable headers to your custom origin:

- CloudFront-Is-Desktop-Viewer

- CloudFront-Is-Mobile-Viewer

- CloudFront-Is-SmartTV-Viewer

- CloudFront-Is-Tablet-Viewer

- To cache different versions of your objects based on the language specified in the request, program your application to include the language in the Accept-Language header, and configure CloudFront to forward the Accept-Language header to your origin

- To cache different versions of your objects based on the country that the request came from, configure CloudFront to forward the CloudFront-Viewer-Country header to your origin

- To cache different versions of your objects based on the protocol of the request, HTTP or HTTPS, configure CloudFront to forward the CloudFront-Forwarded-Proto header to your origin

- Caching based on query parameters and cookies is also possible with similar options. It is recommended to forward only those cookies or query parameters to the origin server for which the server returns different objects

- To create signed cookies or signed URLs

- identify an AWS account as a trusted signer

- create a CloudFront key pair for the trusted signer

- assign the trusted signer to the distribution or a specific cache behavior

- Web distributions can add a trusted signer to a specific cache behavior and thus use signed cookies or signed URLs for a specific set of files only. However, for RTMP distributions, it has to be for the entire distribution

- For signed URLs, CloudFront checks if the URL has expired only at the beginning of the download or play. If the URL expires after the download or streaming starts, the download or streaming will continue
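
A signed URL carries a policy statement naming the resource and its expiry time. The sketch below builds a canned policy in the compact JSON form that signed URLs use and models the expiry check described above. Signing the policy with the trusted signer's private key is deliberately omitted, and the function names and example URL are hypothetical.

```python
import json
import time
from typing import Optional


def canned_policy(resource_url: str, expires_epoch: int) -> str:
    """Return the canned policy as compact JSON (no whitespace)."""
    policy = {
        "Statement": [
            {
                "Resource": resource_url,
                "Condition": {"DateLessThan": {"AWS:EpochTime": expires_epoch}},
            }
        ]
    }
    return json.dumps(policy, separators=(",", ":"))


def is_expired_at_start(expires_epoch: int, now: Optional[int] = None) -> bool:
    """Model the check made when a download or playback begins."""
    current = int(time.time()) if now is None else now
    return current >= expires_epoch


if __name__ == "__main__":
    one_hour_from_now = int(time.time()) + 3600
    print(canned_policy("https://d111111abcdef8.cloudfront.net/video.mp4", one_hour_from_now))
```

Since the check happens only when the transfer begins, a short expiry window limits when a download can start, not how long it can run.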

- Geo restriction applies to an entire web distribution. If you need to apply one restriction to part of your content and a different restriction (or no restriction) to another part of your content, you must either create separate CloudFront web distributions or use a third-party geolocation service

- You can set up CloudFront with origin failover for scenarios that require high availability. To get started, create an origin group in which you designate a primary origin for CloudFront plus a second origin that CloudFront automatically switches to when the primary origin returns specific HTTP status code failure responses

- Access logs are not automatically encrypted

Snowball

- When to use - if it takes more than a week to transfer data over the network, prefer Snowball

- Snowball Edges have computational capabilities

- Can be Storage Optimized (24 vCPU) or Compute Optimized (52 vCPU) & optional GPU

- Allows processing on the go

- Use cases - Useful for IoT capture, machine learning, data migration, image collation etc.

- An 80 TB Snowball appliance has 72 TB usable capacity

- 100 TB Snowball Edge appliance has 83 TB of usable capacity

Snowmobile

- 100 PB in capacity

- When to use - Better than Snowball if data to be transferred is more than 10 PB
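
The "more than a week" and "more than 10 PB" rules of thumb above can be turned into a quick estimate. The sketch below assumes decimal units (1 TB = 10^12 bytes) and a link that is 80% utilized. Both figures and the function names are assumptions for illustration.

```python
def transfer_days(data_tb: float, bandwidth_mbps: float, utilization: float = 0.8) -> float:
    """Estimate the days needed to move data_tb terabytes over the network."""
    bits = data_tb * 1e12 * 8
    effective_bits_per_second = bandwidth_mbps * 1e6 * utilization
    return bits / effective_bits_per_second / 86_400


def suggest_transfer_method(data_tb: float, bandwidth_mbps: float) -> str:
    """Apply the rules of thumb from the Snowball and Snowmobile sections."""
    if data_tb > 10_000:  # more than 10 PB
        return "Snowmobile"
    if transfer_days(data_tb, bandwidth_mbps) > 7:  # more than a week
        return "Snowball"
    return "network"
```

For example, 100 TB over a 1 Gbps link at 80% utilization takes roughly 11.6 days, which points to Snowball under these assumptions.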

Storage Gateway

- Storage Gateway supports hybrid cloud by allowing on-premises resources to access cloud storage like EBS, S3 etc. through standard protocols

- By default, Storage Gateway uses Amazon S3-Managed Encryption Keys (SSE-S3) to server-side encrypt all data it stores in Amazon S3

- Storage Gateway types -

- File Gateway

- Volume Gateway

- Tape Gateway

- File Gateway -

- Configured S3 buckets accessible through NFS & SMB protocol

- Bucket access using IAM roles for each File Gateway

- Most recently used data is cached in File Gateway

- Can be mounted on many servers

- Volume Gateway -

- Block storage using iSCSI protocol backed by S3

- EBS snapshots stored in S3 buckets

- Cached Volume - Recently used data cached

- Stored Volume - Entire dataset on premises with scheduled backups in S3

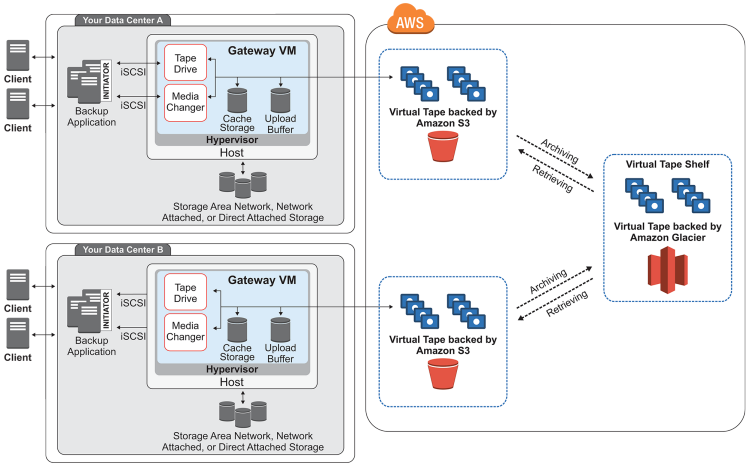

- Tape Gateway -

- Backup from on-premises tape to S3 Glacier using iSCSI protocol

- VTL - Virtual Tape Library - S3

- VTS - Virtual Tape Shelf - Glacier or Glacier Deep Archive

Athena

- Serverless service (built on Presto)

- Allows running analytics directly on data in S3

- Supports data formats CSV, JSON, ORC, Avro, Parquet

- Uses SQL to query the files

- Good for analyzing VPC Flow Logs, ELB Logs etc. at scale by creating a table and linking it with the S3 bucket holding the logs

IAM

- Global service

- Users for individuals

- User Groups for grouping users with similar permission requirements

- Roles are for machines or internal AWS resources. One IAM Role for ONE application

- You can create an identity broker that sits between your corporate users and your AWS resources to manage the authentication and authorization process without needing to recreate all your users as IAM users in AWS. The identity broker application has permissions to access the AWS Security Token Service (STS) to request temporary security credentials

- Login to EC2 operating system can be done using:

- Asymmetric key pair

- Local operating system users

- Active Directory

- Session Manager of AWS Systems Manager

- Two types of policies

- Resource policies - Attached to the individual resources

- Capability policies - Attached to IAM users, groups or roles

- Resource policies and capability policies are cumulative in nature: an individual user's effective permissions are the union of a resource's policies and the capability permissions granted directly or through group membership. However, permissions granted by a resource policy can be explicitly denied in the capability policy

- IAM policies can be used to restrict access to a specific source IP address range, or during specific days and times of the day, as well as based on other conditions
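
The way resource and capability policies combine can be illustrated with a toy evaluator: allows from both sides are pooled, and any matching explicit deny wins. This is a deliberately simplified model (exact string matches, one optional source IP condition), not the real IAM evaluation logic. All names and ARNs are hypothetical.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import Iterable, Optional


@dataclass(frozen=True)
class Statement:
    effect: str                        # "Allow" or "Deny"
    action: str                        # exact match only in this toy model
    resource: str                      # exact match only in this toy model
    source_cidr: Optional[str] = None  # optional source IP condition


def applies(stmt: Statement, action: str, resource: str, source_ip: str) -> bool:
    """True when the statement matches the request, including its IP condition."""
    if stmt.action != action or stmt.resource != resource:
        return False
    if stmt.source_cidr is None:
        return True
    return ip_address(source_ip) in ip_network(stmt.source_cidr)


def is_allowed(statements: Iterable[Statement], action: str, resource: str, source_ip: str) -> bool:
    """Union of allows, overridden by any matching explicit deny."""
    matching = [s for s in statements if applies(s, action, resource, source_ip)]
    if any(s.effect == "Deny" for s in matching):
        return False
    return any(s.effect == "Allow" for s in matching)


OBJECT_ARN = "arn:aws:s3:::example-bucket/report.csv"  # placeholder
resource_policy = [Statement("Allow", "s3:GetObject", OBJECT_ARN)]
capability_policy = [Statement("Allow", "s3:PutObject", OBJECT_ARN, source_cidr="203.0.113.0/24")]
combined = resource_policy + capability_policy
```

Here reads are allowed from anywhere through the resource policy, while writes are allowed only from the 203.0.113.0/24 range through the capability policy. Adding a Deny statement for s3:GetObject to either list would block reads regardless of the Allow.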

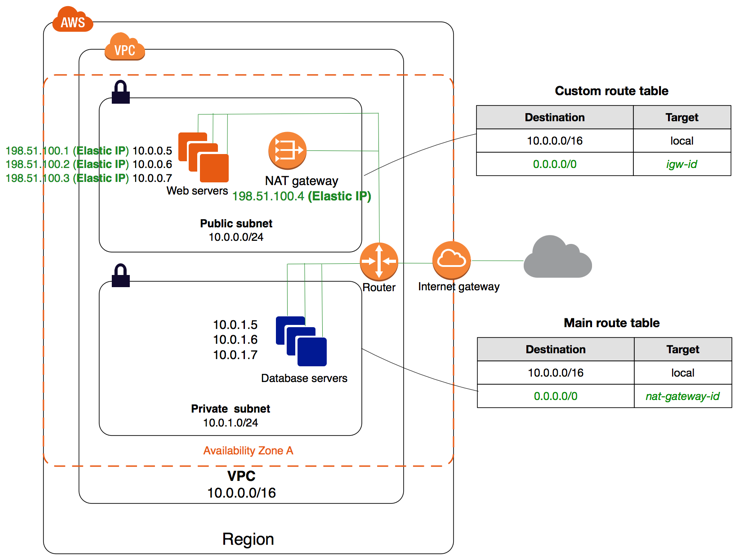

EC2

- Security Groups are for network security

- Security Groups are locked down to a region / VPC combination

- Security Groups can refer to other Security Groups. For e.g. to ensure that a web server in an EC2 instance serves requests only from an ALB, assign a security group to EC2 to open port 80 for traffic from the security group of the ALB

- One security group can be attached to multiple EC2 instances

- Multiple security groups can be assigned to a single EC2 instance

- If connection to application times out, it could be a security group issue. If connection is refused, it's an application issue

- Security Group - All inbound traffic is blocked by default

- Security Group - All outbound traffic is authorized by default

- Security Groups are stateful - if the inbound traffic on a port is allowed, the related outbound traffic is automatically allowed

- Change in security group takes effect immediately

- Security groups cannot blacklist an IP or port. Everything is blocked by default, we need to specifically open ports
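
The ALB example above, where one security group admits traffic only from another, can be expressed as an ingress rule that names a source group instead of an IP range. The sketch below builds such a rule as a plain dictionary in the shape boto3 uses for IpPermissions. The group ID and function name are hypothetical.

```python
from typing import Any, Dict


def ingress_from_security_group(port: int, source_group_id: str) -> Dict[str, Any]:
    """Build an ingress rule admitting TCP traffic on one port from another security group."""
    return {
        "IpProtocol": "tcp",
        "FromPort": port,
        "ToPort": port,
        # Reference a security group rather than a CIDR range.
        "UserIdGroupPairs": [{"GroupId": source_group_id}],
    }


# Placeholder ID standing in for the ALB's security group.
ALB_SECURITY_GROUP_ID = "sg-0123456789abcdef0"
web_rule = ingress_from_security_group(80, ALB_SECURITY_GROUP_ID)
```

With boto3, a rule of this shape can be passed as IpPermissions=[web_rule] to authorize_security_group_ingress on an EC2 client, together with the GroupId of the web server's own security group. Because security groups are stateful, no matching outbound rule is needed for the responses.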

- Elastic IP gives a fixed public IP to an EC2 instance across restarts

- One AWS account can have 5 Elastic IPs (soft limit)

- Prefer load balancer over Elastic IP for high availability

- EC2 User Data script runs once (with root privileges) at the instance's first start

- EC2 Launch types -

- On-demand instances - short workload, predictable pricing

- Reserved instances - long workload (>= 1 year) - up to 75% discount

- Convertible reserved instances - long workload with flexible instance types - up to 54% discount

- Scheduled reserved instances - reserved for specific time window

- Spot instances - short workload, cheap, can lose instances, good for batch jobs, big data analytics etc. - up to 90% discount

- Dedicated instances - no other customer will share the hardware, but instances from same AWS account can share hardware, no control on instance placement

- Dedicated hosts - the entire server is reserved, provides more control on instance placement, more visibility into sockets and cores, good for "bring your own licenses (BYOL)", complicated regulatory needs - 3 years period reservation

- Billing by second with a minimum of 60 seconds

- Spot Price - If your Spot instance is terminated or stopped by Amazon EC2 in the first instance hour, you will not be charged for that usage. However, if you terminate the instance yourself, you will be charged to the nearest second. If the Spot instance is terminated or stopped by Amazon EC2 in any subsequent hour, you will be charged for your usage to the nearest second. If you are running on Windows or Red Hat Enterprise Linux (RHEL) and you terminate the instance yourself, you will be charged for an entire hour

- Spot Price - You will pay the price per instance-hour set at the beginning of each instance-hour for the entire hour, billed to the nearest second
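
The Spot billing rules above can be condensed into a small function. This sketch is one reading of those rules: "an entire hour" is interpreted as rounding up to whole hours, and the general 60-second minimum is assumed to apply to Spot usage as well. The names are hypothetical.

```python
SECONDS_PER_HOUR = 3600
MINIMUM_BILLED_SECONDS = 60


def spot_billable_seconds(run_seconds: int, stopped_by_ec2: bool, hourly_os: bool = False) -> int:
    """Return the seconds billed for one Spot instance run.

    hourly_os marks Windows or RHEL instances, which the text treats differently.
    """
    if run_seconds < 0:
        raise ValueError("run_seconds must not be negative")
    if stopped_by_ec2 and run_seconds < SECONDS_PER_HOUR:
        # Interrupted by EC2 within the first instance hour: no charge.
        return 0
    if hourly_os and not stopped_by_ec2:
        # User-terminated Windows/RHEL instance: round up to whole hours.
        full_hours = -(-run_seconds // SECONDS_PER_HOUR)  # ceiling division
        return max(full_hours, 1) * SECONDS_PER_HOUR
    # Otherwise billed to the nearest second, subject to the minimum.
    return max(run_seconds, MINIMUM_BILLED_SECONDS)
```

For example, a Linux Spot instance interrupted by EC2 after 30 minutes costs nothing under this model, while the same instance terminated by its owner is billed for 1,800 seconds.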

- T2/T3 are burstable instances. Spikes are handled using burst credits that are accumulated over time. If burst credits are all consumed, performance will suffer

- M instance types are balanced

- Instance Type

- R - More RAM (use cases - in-memory caches)

- C - More CPU (use cases - compute / databases)

- M - Medium (use cases - general / webapp)

- I - More I/O - instance storage (use cases - databases)

- G - More GPU - (use cases - video rendering / machine learning)

- T2/T3 - burstable instances (up to a capacity / unlimited)

- http://169.254.169.254/latest/user-data/ gives user data scripts

- http://169.254.169.254/latest/meta-data/ gives meta data

- http://169.254.169.254/latest/meta-data/public-ipv4/ gives public IP

- http://169.254.169.254/latest/meta-data/local-ipv4/ gives local IP

- Three types of placement groups

- Clustered Placement Group -

- Grouping of instances within a single AZ, single rack

- Use cases - recommended for applications that need low network latency and high network throughput

- Only certain specific instance types can be launched in this placement group

- cannot span multiple AZ

- Partitioned Placement Group –

- spreads instances across logical partitions

- use cases - large distributed and replicated workloads, such as Hadoop, Cassandra, and Kafka

- each partition within a placement group has its own set of racks. Each rack has its own network and power source

- allows a maximum of 7 partitions per AZ

- partitions can be distributed across AZ

- provides visibility as to which instance belongs to which partition through metadata

- not supported for Dedicated Hosts

- dedicated Instances can have a maximum of two partitions

- Spread Placement Group -

- instances are placed in distinct hardware

- each instance is placed on a distinct rack, with each rack having its own network and power source

- use cases - recommended for applications that have a small number of critical instances that should be kept separate from each other

- can span multiple AZ

- allows max 7 instances per AZ

- not supported for Dedicated Instances or Dedicated Hosts

- The instances within a placement group should be homogeneous

- Placement groups can't be merged

- You can move an existing instance to a placement group, move an instance from one placement group to another, or remove an instance from a placement group. Before you begin, the instance must be in the stopped state

- Instance store backed EC2 instances can only be rebooted and terminated. They cannot be stopped unlike EBS-backed instances

- With instance store, the entire image is downloaded from S3 before booting and hence the boot time is usually around 5 mins

- With EBS-backed instances, only the part needed for booting is first downloaded from the EBS Snapshot and hence the boot time is shorter around 1 min

- When an EBS-backed instance is stopped, all data on any attached instance store volumes is lost

- When an Amazon EBS-backed instance is stopped, you're not charged for instance usage; however, you're still charged for volume storage

- EC2-Classic is the original version of EC2 where the elastic IP would get disassociated when the instance stopped

- With EC2-VPC, the Elastic IP does not get disassociated when stopped

- When EC2 instance is stopped, it may get moved to a different underlying host

- EC2 instance states that are billed

- running

- stopping (only when the instance is preparing to hibernate - NOT when the instance is being stopped)

- terminated (only for reserved instances that are still in their contracted term)

- Select Auto-assign Public IP option so that the launched EC2 instance has a public IP from Amazon's public IP pool

- A custom AMI can be created with pre-installed software packages, security patches etc. instead of writing user data scripts, so that the boot time is less during autoscaling

- AMIs are built for a specific region, but can be copied across regions

- AWS AMI Virtualization types -

- Paravirtual (PV)

- Hardware Virtual Machine (HVM) - Amazon recommends

- All AMIs are categorized as either

- backed by Amazon EBS or

- backed by instance store

- AMIs with encrypted volumes cannot be made public. It can be shared with specific accounts along with the KMS CMK

- During the AMI-creation process, Amazon EC2 creates snapshots of your instance's root volume and any other EBS volumes attached to your instance. You're charged for the snapshots until you deregister the AMI and delete the snapshots

- If any volumes attached to the instance are encrypted, the new AMI only launches successfully on instances that support Amazon EBS encryption

- Encrypting during the CopyImage action applies only to Amazon EBS-backed AMIs. Because an instance store-backed AMI does not rely on snapshots, you cannot use copying to change its encryption status

- AWS does not copy launch permissions, user-defined tags, or Amazon S3 bucket permissions from the source AMI to the new AMI

- You can't copy an AMI that was obtained from the AWS Marketplace, regardless of whether you obtained it directly or it was shared with you. Instead, launch an EC2 instance using the AWS Marketplace AMI and then create an AMI from the instance

- If you copy an AMI that has been shared with your account, you are the owner of the target AMI in your account. The owner of the source AMI remains unchanged

- To copy an AMI that was shared with you from another account, the owner of the source AMI must grant you read permissions for the storage that backs the AMI, either the associated EBS snapshot (for an Amazon EBS-backed AMI) or an associated S3 bucket (for an instance store-backed AMI). If the shared AMI has encrypted snapshots, the owner must share the key or keys with you as well

- If you specify encryption parameters while copying an AMI, you can encrypt or re-encrypt its backing snapshots

- To coordinate Availability Zones across accounts, you must use the AZ ID, which is a unique and consistent identifier for an Availability Zone, because the us-east-1a AZ of one AWS account may not be the same physical location as us-east-1a of another AWS account

- When an instance is terminated, Amazon Elastic Compute Cloud (Amazon EC2) uses the value of the DeleteOnTermination attribute for each attached EBS volume to determine whether to preserve or delete the volume when the instance is terminated

- By default, the DeleteOnTermination attribute for the root volume of an instance is set to true, but it is set to false for all other volume types

- Using the console, you can change the DeleteOnTermination attribute when you launch an instance. To change this attribute for a running instance, you must use the command line
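
As a rough illustration of changing DeleteOnTermination outside the console, the sketch below builds the block device mapping that the EC2 ModifyInstanceAttribute call accepts. It assumes boto3 is installed and credentials are configured; the helper names and the device name used in the test are hypothetical.

```python
def delete_on_termination_mapping(device_name: str, delete: bool) -> list:
    """Build the BlockDeviceMappings value for one attached EBS volume."""
    return [{"DeviceName": device_name, "Ebs": {"DeleteOnTermination": delete}}]


def set_delete_on_termination(instance_id: str, device_name: str, delete: bool) -> None:
    """Apply the flag to an existing instance (needs boto3 and AWS credentials)."""
    import boto3  # imported lazily so the helper above works without boto3

    boto3.client("ec2").modify_instance_attribute(
        InstanceId=instance_id,
        BlockDeviceMappings=delete_on_termination_mapping(device_name, delete),
    )
```

Passing `delete=False` for the root device would keep the root volume after termination.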

- Underlying Hypervisors for EC2 - Xen, Nitro

- You must stop your Amazon EBS–backed instance before you can change its instance type

- While changing instance type, instance store backed instances must be migrated to the new instance

- When you stop and start an instance, be aware of the following:

- We move the instance to new hardware; however, the instance ID does not change.

- If your instance has a public IPv4 address, we release the address and give it a new public IPv4 address

- The instance retains its private IPv4 addresses, any Elastic IP addresses, and any IPv6 addresses

- If your instance is in an Auto Scaling group, the Amazon EC2 Auto Scaling service marks the stopped instance as unhealthy, and may terminate it and launch a replacement instance. To prevent this, you can suspend the scaling processes for the group while you're resizing your instance

- If your instance is in a cluster placement group and, after changing the instance type, the instance start fails, try the following: stop all the instances in the cluster placement group, change the instance type for the affected instance, and then restart all the instances in the cluster placement group

- The public IPv4 address of an instance does not change on reboot

- The VM is not returned to AWS on reboot

- For Amazon EC2 Linux instances using the cloud-init service, when a new instance from a standard AWS AMI is launched, the public key of the Amazon EC2 key pair is appended to the initial operating system user’s ~/.ssh/authorized_keys file

- For Amazon EC2 Windows instances using the ec2config service, when a new instance from a standard AWS AMI is launched, the ec2config service sets a new random Administrator password for the instance and encrypts it using the corresponding Amazon EC2 key pair’s public key

- You can set up the operating system authentication mechanism you want, which might include X.509 certificate authentication, Microsoft Active Directory, or local operating system accounts

- For spot instance,

- The Termination Notice will be available 2 minutes before termination

- The Termination Notice is accessible to code running on the instance via the instance’s metadata at http://169.254.169.254/latest/meta-data/spot/termination-time

- The spot/termination-time metadata field will become available when the instance has been marked for termination

- The spot/termination-time metadata field will contain the time when a shutdown signal will be sent to the instance’s operating system

- The Spot Instance Request’s bid status will be set to marked-for-termination

- The bid status is accessible via the DescribeSpotInstanceRequests API for use by programs that manage Spot bids and instances

- Amazon recommends that interested applications poll for the termination notice at five-second intervals
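
The polling described above could look roughly like the following sketch. It assumes the metadata service answers plain unauthenticated GETs and returns HTTP 404 while the field is absent; instances that enforce session tokens for metadata access would need an extra token request that is not shown. The function names are hypothetical.

```python
import time
import urllib.error
import urllib.request

TERMINATION_URL = "http://169.254.169.254/latest/meta-data/spot/termination-time"


def fetch_termination_time(url: str = TERMINATION_URL, timeout: float = 2.0):
    """Return the termination timestamp string, or None if the field is absent."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.read().decode().strip()
    except urllib.error.HTTPError as err:
        if err.code == 404:  # assumed: field missing means not marked for termination
            return None
        raise


def wait_for_termination_notice(fetch=fetch_termination_time, interval=5.0,
                                sleep=time.sleep, max_polls=None):
    """Poll every `interval` seconds; return the notice, or None if max_polls runs out."""
    polls = 0
    while max_polls is None or polls < max_polls:
        notice = fetch()
        if notice:
            return notice
        polls += 1
        sleep(interval)
    return None
```

The `fetch` and `sleep` parameters are injectable so the loop can be exercised off-instance with a fake metadata source.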

- If you get an InstanceLimitExceeded error when you try to launch a new instance or restart a stopped instance, you have reached the limit on the number of instances that you can launch in a region. Possible solutions

- request an instance limit increase on a per-region basis

- Launch the instance in a different region

- If you get an InsufficientInstanceCapacity error when you try to launch an instance or restart a stopped instance, it indicates AWS does not currently have enough available On-Demand capacity to service your request. Possible solutions

- Wait a few minutes and then submit your request again; capacity can shift frequently

- Submit a new request with a reduced number of instances

- If you're launching an instance, submit a new request without specifying an Availability Zone

- If you're launching an instance, submit a new request using a different instance type

- If you are launching instances into a cluster placement group, you can get an insufficient capacity error

- The following are a few reasons why an instance might immediately terminate:

- You've reached your EBS volume limit

- An EBS snapshot is corrupt

- The root EBS volume is encrypted and you do not have permissions to access the KMS key for decryption.

- The instance store-backed AMI that you used to launch the instance is missing a required part (an image.part.xx file)

- If the reason is Client.VolumeLimitExceeded: Volume limit exceeded, you have reached your EBS volume limit

- If the reason is Client.InternalError: Client error on launch, that typically indicates that the root volume is encrypted and that you do not have permissions to access the KMS key for decryption

- Make sure the private key (pem file) on your linux machine has 400 permissions, else you will get Unprotected Private Key File error
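
For illustration, the same permission change can be made from Python; this is a minimal sketch equivalent to running chmod 400 on the key file, with a hypothetical helper name.

```python
import os
import stat


def restrict_private_key(path: str) -> int:
    """Set owner-read-only (0400) on a key file and return the resulting mode bits."""
    os.chmod(path, stat.S_IRUSR)  # same effect as `chmod 400 path`
    return stat.S_IMODE(os.stat(path).st_mode)
```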

- Make sure the username for the OS is given correctly when logging in via SSH, else you will get a Host key not found error

- Possible reasons for ‘connection timeout’ to EC2 instance via SSH :

- Security Group is not configured correctly

- CPU load of the instance is high

- EC2 shutdown behavior (Behavior when shutdown signal is sent from inside the OS by running the shutdown command) -

- Stopped (Default) - The instance will be stopped on receiving the shutdown signal

- Terminated - The instance will be terminated on receiving the shutdown signal

- With termination protection turned on, the instance cannot be terminated from the console until termination protection is turned off

- Even with termination protection on, if the instance has its shutdown behavior set to terminate, a shutdown initiated from the OS will terminate the instance

- AWS Support offers four support plans: Basic, Developer, Business, and Enterprise

- Reserved instances (both standard & Convertible) can be Zonal (restricted to a single availability zone for capacity reservation) or Regional (having instance size and availability zone flexibility)

- If your applications benefit from high packet-per-second performance and/or low latency networking, Enhanced Networking will provide significantly improved performance, consistency of performance and scalability. There is no additional fee for Enhanced Networking. To take advantage of Enhanced Networking you need to launch the appropriate AMI on a supported instance type in a VPC

EFS

- Supports Network File System version 4 (NFSv4)

- Read after write consistency

- Data is stored across multiple AZ's within a region

- No pre-provisioning required

- To use EFS

- install amazon-efs-utils

- mount the EFS at the appropriate location

- A security group needs to be attached with EFS allowing NFS(2049) inbound traffic from the security groups of the connecting EC2 instances

- To access EFS file systems from on-premises, you must have an AWS Direct Connect or AWS VPN connection between your on-premises datacenter and your Amazon VPC

- Amazon EFS offers a Standard and an Infrequent Access storage class

- Moving files to EFS IA starts by enabling EFS Lifecycle Management and choosing an age-off policy

- EFS Standard is designed to provide single-digit millisecond latencies on average, and EFS IA is designed to provide double-digit millisecond latencies on average

- Performance mode -

- General Purpose - default. Appropriate for most file systems

- Max I/O - optimized for applications where tens, hundreds, or thousands of EC2 instances are accessing the file system

- All file systems deliver a consistent baseline performance of 50 MB/s per TB of Standard class storage, all file systems (regardless of size) can burst to 100 MB/s, and file systems with more than 1TB of Standard class storage can burst to 100 MB/s per TB
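
The throughput figures above can be turned into a small calculation. This is a sketch using only the numbers quoted in this note (50 MB/s per TB baseline, 100 MB/s minimum burst, 100 MB/s per TB burst above 1 TB); the function name is hypothetical.

```python
def efs_bursting_throughput(standard_tb: float) -> tuple:
    """Return (baseline_mb_s, burst_mb_s) for a given amount of Standard storage in TB."""
    baseline = 50.0 * standard_tb
    # Every file system can burst to 100 MB/s; above 1 TB the burst scales with size.
    burst = max(100.0, 100.0 * standard_tb)
    return baseline, burst
```

For example, a file system holding 4 TB of Standard storage would have a 200 MB/s baseline and a 400 MB/s burst under these figures.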

- Due to the distributed storage, it experiences higher latency than EBS

- AWS DataSync is an online data transfer service that makes it faster and simpler to move data between on-premises storage and Amazon EFS

ELB

- ELB types -

- Classic Load Balancer (V1 - old generation) - Lower cost than ALB, but less flexibility

- Application Load Balancer (V2 - new generation) - Layer 7 - application aware

- Network Load Balancer (V2 - new generation) - Layer 4 - extreme performance

- ELB provides health check for instances

- ALB can handle multiple applications where each application has a target group and load for that application is balanced across instances within the particular target group

- ALB supports HTTP/HTTPS & Websocket protocols

- ALB - The client's IP address, the port the client used to connect to the load balancer, and the protocol are inserted in the HTTP headers X-Forwarded-For, X-Forwarded-Port and X-Forwarded-Proto respectively
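
A backend behind the load balancer might read these headers as in the sketch below. The helper name and the returned dictionary shape are hypothetical; the only assumption about the header format is that X-Forwarded-For can hold a comma-separated list whose leftmost entry is the original client.

```python
def client_details(headers: dict) -> dict:
    """Extract the forwarded client IP, port and protocol from request headers."""
    # Header names are case-insensitive, so normalise them first.
    lowered = {k.lower(): v for k, v in headers.items()}
    forwarded_for = lowered.get("x-forwarded-for", "")
    # With several proxies the value is a comma-separated list; take the leftmost.
    client_ip = forwarded_for.split(",")[0].strip() or None
    port = lowered.get("x-forwarded-port")
    return {
        "ip": client_ip,
        "port": int(port) if port else None,
        "proto": lowered.get("x-forwarded-proto"),
    }
```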

- ALB can route based on the hostname in the URL and on the path in the URL

- ALB supports SNI (Server Name Indication). This allows ALB to support multiple TLS certificates

- Network Load Balancers are mostly used for extreme performance and should not be the default load balancer

- Network Load Balancers have less latency ~100 ms (vs 400 ms for ALB)

- Load Balancers have static host name. DO NOT resolve & use underlying IP

- LBs can scale but not instantaneously – contact AWS for a “warm-up”

- ELBs do not have a predefined IPv4 address. We resolve to them using a DNS name

- 504 error means the gateway has timed out and it is an application issue and NOT a load balancer issue

- Sticky session - required if the ec2 instance is writing a file to the local disk. Traffic will not go to other ec2 instances for the session (uses cookie and the validity duration of the cookie is configured at the time of enabling sticky session)

- If one AZ does not receive any traffic, check

- if Cross zone load balancing is enabled

- if the AZ is added in the load balancer config

- Path Patterns - Allows to route traffic based on the URL patterns

- The VPC and subnets need to be specified during configuration

- Elastic Load Balancing provides access logs that capture detailed information about requests sent to your load balancer

- Elastic Load Balancing captures the access logs and stores them in the Amazon S3 bucket (that you specify) as compressed and encrypted files

- Each access log file is automatically encrypted before it is stored in your S3 bucket and decrypted when you access it

- ALBs are priced per http request, Classic ELBs are priced by bandwidth consumption. Also ALBs are charged per routing rule. For a very high volume of small requests, ALBs can be much more expensive than an ELB

- Access logs are automatically encrypted

- Connection draining enables the load balancer to complete in-flight requests made to instances that are de-registering or unhealthy.

Auto Scaling

- Auto scaling group is configured to register new instances to a target group of ELB

- IAM role attached to the ASG will get assigned to the instances

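- If an instance gets terminated, the ASG will launch a replacement

- If an instance is marked as unhealthy by the load balancer (and ELB health checks are enabled for the group), the ASG will terminate it and launch a replacement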

- ASG can scale based on CloudWatch alarms

- ASG can scale based on custom metric sent by applications to CloudWatch

- If all subnets in different availability zones are selected, the ASG will distribute the instances across multiple AZ

- During the configured warm up period the EC2 instance will not contribute to the auto scaling metrics

- Scaling out is increasing the number of instances and scaling up is increasing the resources

- The cooldown period helps to ensure that the Auto Scaling group doesn't launch or terminate additional instances before the previous scaling activity takes effect

- The default cooldown period is 300 seconds

- Cooldown period is applicable only for simple scaling policy

- Launch Configuration specifies the properties of the launched EC2 instances such as AMI etc.

- Launch configuration cannot be changed once created

- Scaling Policy -

- Target tracking scaling — Increase or decrease the current capacity of the group based on a target value for a specific metric. E.g. CPU Utilization or any other metric that will increase or decrease proportionally with the no. of instances

- Step scaling — Increase or decrease the current capacity of the group based on a set of scaling adjustments, known as step adjustments, that vary based on the size of the alarm breach. The configuration defines the desired number of instances for a range of value for the given metric. There could be multiple such steps defined

- Simple scaling — Increase or decrease the current capacity of the group based on a single scaling adjustment

- When there are multiple policies in force at the same time, there's a chance that each policy could instruct the Auto Scaling group to scale out (or in) at the same time. When these situations occur, Amazon EC2 Auto Scaling chooses the policy that provides the largest capacity for both scale out and scale in
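
To make step adjustments and the "largest capacity wins" rule concrete, here is a minimal sketch. The step table layout (bounds relative to the alarm threshold) and both function names are illustrative assumptions, not the service's API.

```python
def step_adjustment(metric: float, threshold: float, steps: list) -> int:
    """Return the capacity change for a breach of size `metric - threshold`.

    `steps` is a list of (lower_bound, upper_bound, adjustment) tuples relative
    to the threshold; an upper_bound of None means unbounded.
    """
    breach = metric - threshold
    for lower, upper, adjustment in steps:
        if breach >= lower and (upper is None or breach < upper):
            return adjustment
    return 0  # no step matches, e.g. the metric is below the threshold


def resolve_policies(current: int, proposed_changes: list) -> int:
    """Pick the resulting capacity when several policies fire at once (largest wins)."""
    return max(current + change for change in proposed_changes)
```

With steps of +1, +2 and +4 for breaches of 0-10, 10-20 and 20+, a metric 15 above the threshold adds two instances; if two policies propose -3 and -1 at the same time, the group ends up with the larger capacity, that is the -1 change.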

- Default termination policy -

- Determine which Availability Zone(s) have the most instances, and at least one instance that is not protected from scale in

- Determine which instance to terminate so as to align the remaining instances to the allocation strategy for the On-Demand or Spot Instance that is terminating and your current selection of instance types

- Determine whether any of the instances use the oldest launch template

- Determine whether any of the instances use the oldest launch configuration

- Instances are closest to the next billing hour
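
The selection order above can be approximated in code. The following is a simplified sketch, not the service's actual algorithm: the allocation-strategy step is skipped, launch templates and launch configurations are collapsed into one hypothetical `config_version` number (lower means older), and all field names are invented for the example.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Instance:
    instance_id: str
    az: str
    config_version: int           # lower = older launch template/configuration
    minutes_to_billing_hour: int
    protected: bool = False       # protected from scale in


def pick_instance_to_terminate(instances: list):
    """Return the instance a simplified default policy would terminate, or None."""
    candidates = [i for i in instances if not i.protected]
    if not candidates:
        return None
    # 1. Prefer the AZ with the most instances that has an unprotected instance.
    az_sizes = Counter(i.az for i in instances)
    busiest = max(az_sizes[i.az] for i in candidates)
    candidates = [i for i in candidates if az_sizes[i.az] == busiest]
    # 2. Allocation-strategy alignment for mixed fleets is not modelled here.
    # 3-4. Prefer instances on the oldest launch template/configuration.
    oldest = min(i.config_version for i in candidates)
    candidates = [i for i in candidates if i.config_version == oldest]
    # 5. Finally take the one closest to its next billing hour.
    return min(candidates, key=lambda i: i.minutes_to_billing_hour)
```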

- You can launch and automatically scale a fleet of On-Demand Instances and Spot Instances within a single Auto Scaling group. In addition to receiving discounts for using Spot Instances, if you specify instance types for which you have matching Reserved Instances, your discounted rate of the regular On-Demand Instance pricing also applies. The only difference between On-Demand Instances and Reserved Instances is that you must purchase the Reserved Instances in advance. All of these factors combined help you to optimize your cost savings for Amazon EC2 instances, while making sure that you obtain the desired scale and performance for your application

- You enhance availability by deploying your application across multiple instance types running in multiple Availability Zones. You must specify a minimum of two instance types, but it is a best practice to choose a few instance types to avoid trying to launch instances from instance pools with insufficient capacity. If the Auto Scaling group's request for Spot Instances cannot be fulfilled in one Spot Instance pool, it keeps trying in other Spot Instance pools rather than launching On-Demand Instances, so that you can leverage the cost savings of Spot Instances

- Amazon EC2 Auto Scaling provides two types of allocation strategies that can be used for Spot Instances:

- capacity-optimized - The capacity-optimized strategy automatically launches Spot Instances into the most available pools by looking at real-time capacity data and predicting which are the most available. By offering the possibility of fewer interruptions, the capacity-optimized strategy can lower the overall cost of your workload

- lowest-price - Amazon EC2 Auto Scaling allocates your instances from the number (N) of Spot Instance pools that you specify and from the pools with the lowest price per unit at the time of fulfillment

- An Auto Scaling group is associated with one launch configuration at a time, and you can't modify a launch configuration after you've created it. To change the launch configuration for an Auto Scaling group, use an existing launch configuration as the basis for a new launch configuration. Then, update the Auto Scaling group to use the new launch configuration. After you change the launch configuration for an Auto Scaling group, any new instances are launched using the new configuration options, but existing instances are not affected

EBS

- An EBS volume is a network drive

- An EC2 machine by default loses its root volume when terminated

- It's locked to an AZ. To move a volume to a different AZ, a snapshot needs to be created

- EBS Volume types:

- General Purpose SSD (GP2) - General purpose SSD volume

- Provisioned IOPS SSD (IO1) - Highest-performance SSD volume for mission-critical low-latency or high throughput workloads. Good for Databases.

- Throughput Optimized HDD (ST1) - Low cost HDD volume designed for frequently accessed, throughput intensive workloads. Good for big data and datawarehouses

- Cold HDD (SC1) - Lowest cost HDD volume designed for less frequently accessed workloads. Good for file servers

- EBS Magnetic HDD (Standard) - Previous generation HDD. For workloads where data is infrequently accessed

- io1 can be provisioned from 100 IOPS up to 64,000 IOPS per volume on Nitro system instance families and up to 32,000 on other instance families. The maximum ratio of provisioned IOPS to requested volume size (in GiB) is 50:1. Therefore, with a 10 GiB volume, the maximum provisioned IOPS is 500
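
The 50:1 ratio and the per-volume caps combine as in this small sketch, which uses only the figures quoted above; the function name is hypothetical.

```python
def max_io1_iops(size_gib: int, nitro: bool = True) -> int:
    """Largest IOPS value that can be provisioned for an io1 volume of this size."""
    per_volume_cap = 64_000 if nitro else 32_000
    return min(50 * size_gib, per_volume_cap)  # 50:1 IOPS-to-GiB ratio
```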

- The use of io1 is justified only when there is a requirement for sustained IOPS

- st1 bursts up to 250 MB/s per TB, with a baseline throughput of 40 MB/s per TB and a maximum throughput of 500 MB/s per volume

- st1 is good for MapReduce, Kafka, log processing, data warehouse, and ETL workloads

- sc1 bursts up to 80 MB/s per TB, with a baseline throughput of 12 MB/s per TB and a maximum throughput of 250 MB/s per volume
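
As a worked example of the st1 and sc1 figures, the sketch below scales baseline and burst throughput with volume size and caps both at the per-volume maximum. The table and function name are illustrative; the numbers are the ones quoted in this note.

```python
HDD_PROFILES = {
    # type: (baseline MB/s per TB, burst MB/s per TB, max MB/s per volume)
    "st1": (40, 250, 500),
    "sc1": (12, 80, 250),
}


def hdd_throughput(volume_type: str, size_tb: float) -> tuple:
    """Return (baseline, burst) throughput in MB/s, both capped at the volume maximum."""
    base_per_tb, burst_per_tb, cap = HDD_PROFILES[volume_type]
    return min(base_per_tb * size_tb, cap), min(burst_per_tb * size_tb, cap)
```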

- When your workload consists of large, sequential I/Os, we recommend that you configure the read-ahead setting to 1 MiB

- Some instance types can drive more I/O throughput than what you can provision for a single EBS volume. You can join multiple gp2, io1, st1, or sc1 volumes together in a RAID 0 configuration to use the available bandwidth for these instances

- A factor that can impact your performance is if your application isn’t sending enough I/O requests. This can be monitored by looking at your volume’s queue depth. The queue depth is the number of pending I/O requests from your application to your volume. For maximum consistency, a Provisioned IOPS volume must maintain an average queue depth (rounded to the nearest whole number) of one for every 1000 provisioned IOPS in a minute. For example, for a volume provisioned with 3000 IOPS, the queue depth average must be 3
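
The queue depth rule of thumb reduces to a one-line calculation; a minimal sketch with a hypothetical function name:

```python
def target_queue_depth(provisioned_iops: int) -> int:
    """Average queue depth to aim for: one per 1000 provisioned IOPS, rounded."""
    return int(provisioned_iops / 1000 + 0.5)  # round half up to a whole number
```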

- RAID 0

- Striping in multiple disk volumes

- When I/O performance is more important than fault tolerance

- Loss of a single volume results in complete data loss

- RAID 1

- When fault tolerance is more important than I/O performance

- Even in the absence of RAID 1, EBS is already replicated within AZ

- Max IOPS/Volume

- io1 - 64,000 (based on 16K I/O size)

- gp2 - 16,000 (based on 16K I/O size)

- st1 - 500 (based on 1 MB I/O size)

- sc1 - 250 (based on 1 MB I/O size)

- IOPS/GiB

- io1

- You can provision up to 50 IOPS per GiB

- Min 100 IOPS

- gp2

- Baseline performance is 3 IOPS per GiB

- Minimum of 100 IOPS

- General Purpose (SSD) volumes under 1000 GiB can burst up to 3000 IOPS
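
For gp2, the baseline and burst figures above can be sketched as follows, assuming the 16,000 IOPS per-volume maximum listed earlier; the function name is hypothetical.

```python
def gp2_iops(size_gib: int) -> tuple:
    """Return (baseline_iops, burst_iops) for a gp2 volume."""
    baseline = min(max(3 * size_gib, 100), 16_000)
    # Volumes under 1000 GiB can burst to 3000 IOPS; larger ones already have
    # a baseline of 3000 or more, so their burst equals the baseline.
    burst = 3_000 if size_gib < 1000 else baseline
    return baseline, burst
```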

- SSD is good for short random access. HDD is good for heavy sequential access

- SSD provides high IOPS (no. of read-write operations per second). HDD provides high throughput (amount of data read/written per second)

- The size and IOPS (only for IO1) can be increased

- Increasing the size of the volume does not automatically increase the size of the partition

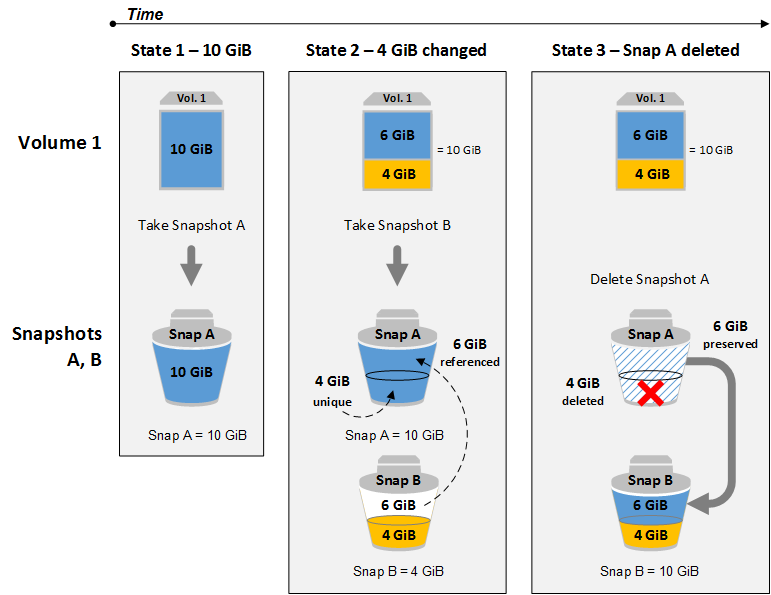

- EBS volumes can be backed up using snapshots

- Snapshots are also used for resizing a volume down, changing the volume type and encrypting a volume

- Snapshots occupy only the size of data

- Snapshots exist on S3

- Snapshots are incremental

- It is sufficient to keep only the latest snapshot

- You can share your unencrypted snapshots with specific AWS accounts, or you can share them with the entire AWS community by making them public

- You can share an encrypted snapshot only with specific AWS accounts. For others to use your shared, encrypted snapshot, you must also share the CMK key that was used to encrypt it

- Snapshots of encrypted volumes are always encrypted

- Volumes restored from encrypted snapshots are encrypted automatically

- To take a snapshot of the root device, the instance needs to be stopped or volume needs to be detached (it is not required but recommended)

- Copying an unencrypted snapshot allows encryption

- EBS Encryption leverages keys from KMS (AES-256)

- All the data in flight moving between the instance and an encrypted volume is encrypted

- Encryption of a root volume involves following steps,

- Take a snapshot

- Copy the snapshot and choose the encryption option (once encrypted, it cannot be decrypted by making another copy)

- Create an Image (AMI) from the encrypted snapshot

- EBS backups use IO and hence backups should be taken during off-peak hours

- Each EBS volume is automatically replicated within its own AZ to protect from component failure and provide high availability and durability

- EC2 instance and its volume are going to be in the same AZ

- Migrating EBS to a different AZ or Region involves the following steps

- Create a snapshot

- Create an AMI

- Copy the AMI to a different Region

- Launch an EC2 instance in a different Region with the AMI

- The size and type of the EBS volumes can be changed without even stopping the EC2 instance

- AMI can be created directly from the volume as well

- AMI root device storage can be

- Instance Store (Ephemeral Stores)

- EBS Backed Volumes

- Instance store volumes are created from a template stored in Amazon S3

- Instance stores are attached to the host where the EC2 is running, whereas EBS volumes are network volumes. However, in 90% of the use cases the difference in latency with the two types of stores does not make any difference

- Instance stores survive reboot, but NOT termination

- gp2 Throughput (in MiB/sec) = (Volume size in GiB) * (IOPS per GiB) * (I/O size in KiB) / 1024. The I/O size is 256KiB (earlier 16KiB).

- gp2 - Max Throughput 250 MiB/sec (volumes >= 334 GiB won't increase throughput)

- io1 Throughput (in MiB/sec) = (Provisioned IOPS) * (I/O size in KiB) / 1024. If the IOPS provisioned is 500, the volume can achieve 500 * 256KiB = 125 MiB of writes per second

- io1 - Max Throughput 500 MiB/sec (at 32,000 IOPS) and 1000 MiB/sec (at 64,000 IOPS)
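
The throughput arithmetic can be checked with a short sketch: IOPS multiplied by the I/O size gives KiB per second, which is divided by 1024 to get MiB per second and then capped at the volume-type maximum. The function name is hypothetical.

```python
def throughput_mib_s(iops: int, io_size_kib: int, cap_mib_s: float) -> float:
    """Throughput = IOPS * I/O size, converted from KiB/s to MiB/s and capped."""
    return min(iops * io_size_kib / 1024, cap_mib_s)
```

A 334 GiB gp2 volume at 3 IOPS per GiB works out to 1002 IOPS * 256 KiB, just over 250 MiB/s, which is why larger gp2 volumes gain no further throughput.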

- EBS Optimized Instances - With small additional fee, customers can launch certain Amazon EC2 instance types as EBS-optimized instances. EBS-optimized instances enable EC2 instances to fully use the IOPS provisioned on an EBS volume. Contention between Amazon EBS I/O and other traffic from the EC2 instance is minimized

- Amazon Data Lifecycle Manager (Amazon DLM) automates the creation, deletion and retention of EBS snapshots

- Improving I/O performance

- Use RAID 0

- Increase size of EC2 instance

- Use appropriate volume types

- Enhanced Networking feature can provide higher I/O performance and lower CPU utilization to the EC2 instance. However, HVM AMI instead of PV AMI is required

- EBS can provide the lowest latency store to a single EC2 instance. EBS latency is lower than S3 even with a VPC endpoint

- You can configure your AWS account to enforce the encryption of your EBS volumes and snapshots. Activating encryption by default has two effects:

- AWS encrypts new EBS volumes on launch

- AWS encrypts new copies of unencrypted snapshots

- Encryption by default is a Region-specific setting. If you enable it for a Region, you cannot disable it for individual volumes or snapshots in that Region

- Newly created EBS resources are encrypted by your account's default customer master key (CMK) unless you specify a customer managed CMK in the EC2 settings or at launch

- EBS encrypts your volume with a data key using the industry-standard AES-256 algorithm. Your data key is stored on-disk with your encrypted data, but not before EBS encrypts it with your CMK; it never appears on disk in plaintext

- When you have access to both an encrypted and unencrypted volume, you can freely transfer data between them. EC2 carries out the encryption and decryption operations transparently

- To create snapshots for Amazon EBS volumes that are configured in a RAID array, there must be no data I/O to or from the EBS volumes that comprise the RAID array. These same precautions and steps should be followed whenever you create a snapshot of an EBS volume that serves as the root device for an EC2 instance

- Although snapshots are incremental, snapshots are designed in such a way that retaining the last snapshot is sufficient to recover the complete volume data. Deleting the old snapshots may not reduce the occupied storage. Snapshots copy only the data that have changed since the last snapshot was taken and points to the unchanged data of the last snapshot
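
A toy model may help show why the latest snapshot alone is enough and why deleting an older one frees only part of its storage. This sketch is purely illustrative and is not how EBS is implemented: a volume is a dict of block index to bytes, a snapshot maps each block index to an id in a shared block store, and all names are hypothetical.

```python
def take_snapshot(volume: dict, previous: dict, store: dict) -> dict:
    """Snapshot `volume`, storing only blocks that changed since `previous`."""
    snapshot = {}
    for index, data in volume.items():
        prev_id = previous.get(index)
        if prev_id is not None and store.get(prev_id) == data:
            snapshot[index] = prev_id            # unchanged: point at existing block
        else:
            new_id = max(store, default=-1) + 1  # changed or new: store a copy
            store[new_id] = data
            snapshot[index] = new_id
    return snapshot


def delete_snapshot(name: str, snapshots: dict, store: dict) -> None:
    """Delete a snapshot, freeing only blocks no remaining snapshot references."""
    removed = snapshots.pop(name)
    still_used = {bid for snap in snapshots.values() for bid in snap.values()}
    for bid in set(removed.values()) - still_used:
        del store[bid]


def restore(snapshot: dict, store: dict) -> dict:
    """Rebuild the full volume contents from a single snapshot."""
    return {index: store[bid] for index, bid in snapshot.items()}
```

In this model, deleting the first snapshot after a second one was taken removes only the blocks that were overwritten in between; the second snapshot still restores the complete volume.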

- Volumes can be resized

- increase in size

- increase in IOPS (for io1)

- change in volume type

- After resizing, the volume needs to be repartitioned

- After resizing, the volume will remain in the "optimizing" state for some time. The volume remains usable, but performance may be reduced

- EBS volumes restored from snapshots need to be pre-warmed for optimal performance (the fio or dd command reads the entire volume and thus helps in pre-warming)

- EBS Volume migration to a different region

- Take a snapshot

- Copy snapshot to a different region

- Restore volume from the snapshot

- EBS Volume migration to a different AZ

- Take a snapshot

- Restore volume from the snapshot in a different AZ

- High wait time of SSD can be resolved by provisioning more IOPS in io1

- You can stripe multiple volumes together to achieve up to 75,000 IOPS or 1,750 MiB/s when attached to larger EC2 instances. However, performance for st1 and sc1 scales linearly with volume size so there may not be as much of a benefit to stripe these volumes together

- Note that you can't delete a snapshot of the root device of an EBS volume used by a registered AMI. You must first deregister the AMI before you can delete the snapshot

- To take a consistent snapshot, unmount the volume, initiate the snapshot and mount it back (The ongoing reads and writes do not affect the snapshot once it is started)

CloudWatch

- CloudWatch is for monitoring performance, whereas CloudTrail is for auditing API calls

- CloudWatch with EC2 will monitor events every 5 min by default - basic monitoring

- With detailed monitoring, the interval will be 1 min. Use detailed monitoring for prompt scaling actions in ASG

- CloudWatch alarms can be created to trigger notifications when a certain metric reaches a certain value

- We can create a CloudWatch Events rule that triggers on an AWS API call recorded by AWS CloudTrail

- Enabling CloudWatch logs for EC2

- Assign appropriate CloudWatch access policy to the IAM role

- Install CloudWatch agent (awslogsd) in EC2

- Since CloudWatch does not have access to the operating system inside the instance, some metrics are missing by default, including disk and memory utilization

- CloudWatch can collect metrics and logs from services, resources and applications on AWS as well on-premise services

- CloudWatch Alarms can be created to

- send SNS notifications

- do EC2 autoscaling when a certain metric satisfies a configured condition

- do EC2 actions (Against EC2 metrics only)

- Recover - Recover the instance on different hardware

- Stop

- Terminate

- Reboot
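
A minimal sketch of an alarm that uses the EC2 recover action follows. It only builds a parameter dictionary in the shape accepted by the CloudWatch `PutMetricAlarm` API (for example via boto3's `put_metric_alarm(**params)`); nothing is sent to AWS. The alarm name, period, evaluation count and instance ID are illustrative assumptions.

```python
def recover_alarm_params(instance_id: str, region: str) -> dict:
    """Build PutMetricAlarm parameters that recover an instance on system failure."""
    return {
        "AlarmName": f"recover-{instance_id}",        # hypothetical naming scheme
        "Namespace": "AWS/EC2",
        "MetricName": "StatusCheckFailed_System",     # underlying hardware check
        "Dimensions": [{"Name": "InstanceId", "Value": instance_id}],
        "Statistic": "Maximum",
        "Period": 60,                                 # seconds (assumed value)
        "EvaluationPeriods": 2,                       # assumed value
        "Threshold": 1.0,
        "ComparisonOperator": "GreaterThanOrEqualToThreshold",
        # EC2 actions are referenced through "automate" ARNs; the last segment
        # can be recover, stop, terminate or reboot.
        "AlarmActions": [f"arn:aws:automate:{region}:ec2:recover"],
    }


params = recover_alarm_params("i-0123456789abcdef0", "eu-west-1")
```

Swapping the last ARN segment for `stop`, `terminate` or `reboot` selects one of the other EC2 actions listed above.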

- CloudWatch Events allows users to react in real time (for example by triggering a Lambda function) when a system change happens. CloudTrail cannot do this because CloudTrail delivers logs at intervals of around 15 minutes

- CloudWatch Events doesn't get triggered on read events
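
The sketch below shows what an event pattern for a rule on a CloudTrail-recorded API call might look like, together with a deliberately simplified matcher. The pattern keys follow the documented event structure; the choice of `TerminateInstances` is arbitrary, and `matches` is a hypothetical stand-in for the service's own matching logic, not a reimplementation of it.

```python
PATTERN = {
    "source": ["aws.ec2"],
    "detail-type": ["AWS API Call via CloudTrail"],
    "detail": {
        "eventSource": ["ec2.amazonaws.com"],
        "eventName": ["TerminateInstances"],  # a write call, chosen arbitrarily
    },
}


def matches(pattern: dict, event: dict) -> bool:
    """Simplified matching: nested dicts recurse, lists mean 'any of these values'."""
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True
```

A rule with this pattern would typically have a Lambda function as its target.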

- EC2 status check results (CloudWatch alarm states themselves are OK, ALARM and INSUFFICIENT_DATA)

- impaired - checks failed

- insufficient data - checks in progress

- ok - all checks passed

- Amazon CloudWatch does not aggregate data across Regions. Therefore, metrics are completely separate between Regions

- For EC2, AWS provides the following CloudWatch metrics

- CPU - CPU Utilization + Burst Credit Usage / Balance

- Network - Network In / Out

- Disk - Read / Write for Ops / Bytes (only for instance store)

- Status Check

- Instance status - checks the EC2 VM

- System status - checks the underlying hardware

- For custom metrics (API - PutMetricData)

- Standard resolution - 1 min interval

- High resolution - 1 sec interval
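
As a sketch, a custom metric datum for `PutMetricData` could be assembled as below. The `StorageResolution` field takes 1 for high resolution and 60 for standard resolution; the metric name, unit and helper function are assumptions for illustration, and the dictionary would be passed inside the `MetricData` list of a real call.

```python
def metric_datum(name: str, value: float, high_resolution: bool = False) -> dict:
    """Build one entry for the MetricData list of a PutMetricData request."""
    return {
        "MetricName": name,
        "Value": value,
        "Unit": "Count",  # assumed unit for this example
        # 1 = high resolution (1 second), 60 = standard resolution (1 minute)
        "StorageResolution": 1 if high_resolution else 60,
    }


standard = metric_datum("QueueDepth", 12)
high_res = metric_datum("QueueDepth", 12, high_resolution=True)
```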

- CloudWatch dashboards are global. Dashboards can include graphs from different regions

- A Metric Filter can be used to find specific log messages based on a pattern and create a metric from the number of occurrences of those messages
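
Conceptually, a metric filter turns matching log lines into a count. The local simulation below illustrates the idea with a plain substring match; the real filter pattern syntax is richer than this, and the log lines and function name are made up for the example.

```python
from typing import Iterable


def count_matches(log_lines: Iterable[str], term: str) -> int:
    """Count log lines containing the term, like a metric with value 1 per match."""
    return sum(1 for line in log_lines if term in line)


sample_logs = [
    "2020-01-01T00:00:00Z INFO service started",
    "2020-01-01T00:00:05Z ERROR database timeout",
    "2020-01-01T00:00:09Z ERROR database timeout",
]
error_count = count_matches(sample_logs, "ERROR")
```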

- Using AWS CLI, we can tail CloudWatch logs

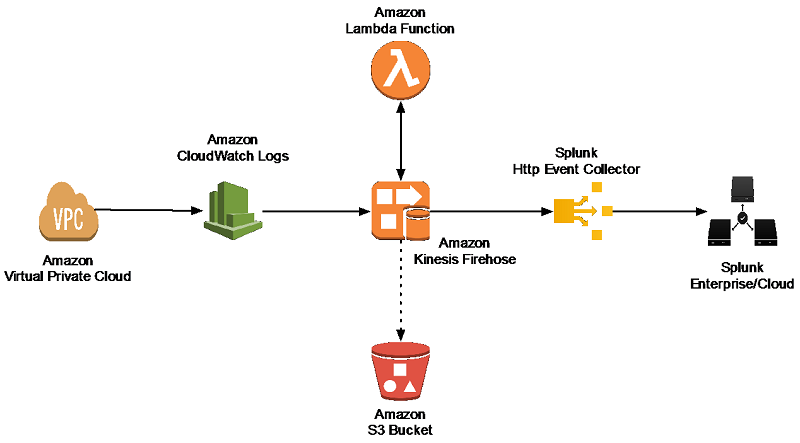

- You can use subscriptions to get access to a real-time feed of log events from CloudWatch Logs and have it delivered to other services such as an Amazon Kinesis stream, an Amazon Kinesis Data Firehose stream, or AWS Lambda for custom processing, analysis, or loading to other systems. To begin subscribing to log events, create the receiving resource, such as a Kinesis stream, where the events will be delivered. A subscription filter defines the filter pattern that selects which log events get delivered to your AWS resource, as well as where to send the matching log events
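
When Lambda is the subscription target, the log events arrive base64-encoded and gzip-compressed under `event["awslogs"]["data"]`. A minimal handler sketch that unpacks this payload is shown below; the handler name and what it returns are assumptions, and real processing (forwarding, indexing and so on) would replace the final line.

```python
import base64
import gzip
import json


def decode_subscription_event(event: dict) -> dict:
    """Decode the CloudWatch Logs subscription payload delivered to Lambda."""
    compressed = base64.b64decode(event["awslogs"]["data"])
    return json.loads(gzip.decompress(compressed))


def handler(event, context):
    payload = decode_subscription_event(event)
    # Each entry in logEvents carries an id, a timestamp and the raw message.
    return [log_event["message"] for log_event in payload["logEvents"]]
```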

- CloudWatch logs can go to

- S3 for archival (one time batch)

- Stream to Elasticsearch

- Stream to Lambda (Lambda provides blueprints for streaming log events to Splunk or other services)

- Logs are not automatically encrypted. Need to be enabled at log group level

CloudTrail

- By default, CloudTrail keeps account activity details for up to 90 days

- These events are limited to management events with create, modify, and delete API calls and account activity. For a complete record of account activity, including all management events, data events, and read-only activity, you’ll need to configure a CloudTrail trail

- By setting up a CloudTrail trail you can deliver your CloudTrail events to Amazon S3, Amazon CloudWatch Logs, and Amazon CloudWatch Events

- You can create up to five trails in an AWS region. A trail that applies to all regions exists in each region and is counted as one trail in each region

- By default, CloudTrail log files are encrypted using S3 Server Side Encryption (SSE) and placed into your S3 bucket

- CloudTrail integration with CloudWatch Logs enables you to receive SNS notifications of account activity captured by CloudTrail. For example, you can create CloudWatch alarms to monitor API calls that create, modify and delete Security Groups and Network ACLs

- Logs are automatically encrypted
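
To make the Security Group and Network ACL example concrete, the sketch below filters CloudTrail-style records by `eventName` and builds a JSON-style metric filter pattern for the same event names. The event names are real EC2 API actions; the sample records, the helper names and the selection of names are assumptions for illustration.

```python
WATCHED_EVENTS = [
    "AuthorizeSecurityGroupIngress",
    "RevokeSecurityGroupIngress",
    "CreateSecurityGroup",
    "DeleteSecurityGroup",
    "CreateNetworkAcl",
    "DeleteNetworkAcl",
]


def security_changes(records: list) -> list:
    """Keep only records whose eventName is one of the watched API calls."""
    watched = set(WATCHED_EVENTS)
    return [r for r in records if r.get("eventName") in watched]


def metric_filter_pattern(event_names: list) -> str:
    """Build a CloudWatch Logs JSON filter pattern matching any of the names."""
    clauses = " || ".join(f"($.eventName = {name})" for name in event_names)
    return "{ " + clauses + " }"


# Hypothetical, heavily trimmed CloudTrail records
sample_records = [
    {"eventSource": "ec2.amazonaws.com", "eventName": "DeleteSecurityGroup"},
    {"eventSource": "ec2.amazonaws.com", "eventName": "DescribeInstances"},
]
changes = security_changes(sample_records)
pattern = metric_filter_pattern(WATCHED_EVENTS[:2])
```

An alarm on the metric produced by such a filter can then notify an SNS topic.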

CloudFormation

- AWS CloudFormation templates are JSON or YAML-formatted text files that are comprised of five types of elements:

- An optional list of template parameters (input values supplied at stack creation time)

- An optional list of output values (e.g. the complete URL to a web application)

- An optional list of data tables used to lookup static configuration values (e.g., AMI names)

- The list of AWS resources and their configuration values (mandatory)

- A template file format version number
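
The five element types map onto the top-level keys `Parameters`, `Outputs`, `Mappings`, `Resources` and `AWSTemplateFormatVersion`. The sketch below assembles a small JSON template with all five; the resource, parameter and mapping names and the AMI ID are placeholders invented for the example.

```python
import json

template = {
    "AWSTemplateFormatVersion": "2010-09-09",
    "Parameters": {
        "EnvName": {"Type": "String", "Default": "dev"},
    },
    "Mappings": {
        # Static lookup table; the AMI ID is a placeholder, not a real image
        "RegionMap": {"eu-west-1": {"AMI": "ami-00000000"}},
    },
    "Resources": {
        "WebServer": {
            "Type": "AWS::EC2::Instance",
            "Properties": {
                "InstanceType": "t3.micro",
                "ImageId": {"Fn::FindInMap": ["RegionMap", {"Ref": "AWS::Region"}, "AMI"]},
                "Tags": [{"Key": "Env", "Value": {"Ref": "EnvName"}}],
            },
        },
    },
    "Outputs": {
        "PublicDns": {"Value": {"Fn::GetAtt": ["WebServer", "PublicDnsName"]}},
    },
}

template_body = json.dumps(template, indent=2)
```

Only `Resources` is mandatory; the other sections can be dropped from the dictionary without invalidating the template structure.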

Route 53

- In AWS, the most common records are:

- A: hostname to IPv4

- AAAA: hostname to IPv6

- CNAME: hostname to hostname

- Alias: hostname to AWS resource.

- Prefer Alias over CNAME for AWS resources (for performance reasons)

- Route53 has advanced features such as:

- Load balancing (through DNS – also called client load balancing)

- Health checks (although limited…)

- Routing policy: simple, failover, geolocation, geoproximity, latency, weighted, multivalue answer

- IPv4 - 32 bit, IPv6 - 128 bit

- Simple Routing - Multiple IP addresses against a single A record. Route 53 returns all of them in random order

- Weighted Routing - A separate A record for each IP with a percentage weight. A separate health check can be associated with each IP or A record. SNS notification can be sent if a health check fails. If a health check fails, the server is removed from Route 53, until the health check passes
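
The weighted behaviour can be simulated locally: each healthy record is chosen with probability equal to its weight divided by the total weight of the healthy records. This is a simplified model, not Route 53's implementation; the record layout, the documentation-range IPs and the `None` result when nothing is healthy are assumptions, and the real service has additional fallback rules that are not modelled here.

```python
import random
from typing import List, Optional


def pick_record(records: List[dict], rng: random.Random) -> Optional[str]:
    """Pick one IP among healthy records, proportionally to their weights."""
    healthy = [r for r in records if r["healthy"] and r["weight"] > 0]
    if not healthy:
        return None  # simplification: the real service applies fallback rules
    weights = [r["weight"] for r in healthy]
    return rng.choices(healthy, weights=weights, k=1)[0]["ip"]


records = [
    {"ip": "192.0.2.1", "weight": 70, "healthy": True},
    {"ip": "192.0.2.2", "weight": 30, "healthy": True},
    {"ip": "192.0.2.3", "weight": 50, "healthy": False},  # failed health check
]
answer = pick_record(records, random.Random(0))
```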

- Latency Based Routing - A separate A record for each IP, each associated with an AWS Region. A separate health check can be associated with each IP or A record. Routing happens to the server with lowest latency

- Failover Routing - 2 separate A records - one for primary and one for secondary. A health check can be associated with each. If the primary goes down, all traffic will be routed to the secondary

- Geolocation Based Routing - A separate A record for each IP. Each A record is mapped to a location and the routing happens to a specific server depending on which location the DNS query originated. Good for scenarios where different website will have different language labels based on location

- Multivalue Answer - Simple routing with health checks of each IP

- Geoproximity - Must use Route 53 Traffic Flow. Routes traffic based on geographic location of users and resources. This can be further influenced with biases

- You configure active-active failover using any routing policy (or combination of routing policies) other than failover, and you configure active-passive failover using the failover routing policy

- Amazon Route 53 supports DNSSEC signing for public hosted zones (this support was added in late 2020; it was not available before that)

RDS

- Databases supported -

- Postgres

- Oracle

- MySQL

- MariaDB

- Microsoft SQL Server

- Aurora

- Up to 5 Read Replicas (Async Replication - within AZ, cross AZ or cross Region)

- Read replicas of read replicas are possible

- Each read replica will have its own DNS endpoint

- Read replica can be created in a separate region as well

- If a read replica is promoted to its own database, the replication will stop

- Read replica cannot be enabled unless the automatic backups are also enabled

- Oracle does not support Read replica

- Read replicas themselves can be Multi-AZ for disaster recovery

- Read Replicas can help in Disaster Recovery by cross-region read-replica

- Each Read Replica will have its own read endpoint

- Read Replicas can be used to run BI / Analytics reports

- A failover in a Multi-AZ deployment can be forced by rebooting the DB

- Multi-AZ cannot be cross-region

- Multi-AZ failover happens in the following cases

- Primary DB instance fails

- Availability zone outage

- DB instance server type changed

- DB instance OS undergoing software patching

- Manual failover using reboot with failover

- With Multi-AZ there is less impact on primary for backup & maintenance as the backup happens from standby and maintenance patches are first applied on standby and then the standby is promoted to become the primary

- Primary database and the Multi-AZ Standby will have a common DNS name

- Two ways of improving performance

- Read replicas

- ElastiCache

- Replication for Disaster Recovery is synchronous (across AZ - Automatic failover - DNS endpoint remains same) - Multi AZ

- Replicas can be promoted to their own DB

- Automated backups:

- Daily full snapshot of the database

- Capture transaction logs in real time

- Ability to restore to any point in time

- 7 days retention (can be increased to 35 days)

- The backup data is stored in S3

- Backups are taken during the specified window. The application may experience elevated latency during backup

- Restoring DB from automatic backup or snapshots always creates a new RDS instance with a new DNS endpoint

- DB Snapshots:

- Manually triggered by the user

- Retention of backup for as long as we want

- Can be taken on the Multi AZ standby and thus minimizing impact on the master

- Incremental after the first which is full

- Can copy and share snapshots

- Steps to encrypt unencrypted DB - Snapshot => copy snapshot as encrypted => create DB from snapshot
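
The encryption procedure can be written out as an ordered list of RDS API actions with their main parameters. `CreateDBSnapshot`, `CopyDBSnapshot` and `RestoreDBInstanceFromDBSnapshot` are real RDS actions; the identifier naming scheme, the helper function and the key alias are assumptions, and the sketch makes no AWS call.

```python
from typing import Dict, List, Tuple


def encrypt_db_plan(db_id: str, kms_key_id: str) -> List[Tuple[str, Dict[str, str]]]:
    """Return the API actions that produce an encrypted copy of an unencrypted DB."""
    plain_snapshot = f"{db_id}-plain-snap"          # hypothetical identifiers
    encrypted_snapshot = f"{db_id}-encrypted-snap"
    return [
        ("CreateDBSnapshot", {
            "DBInstanceIdentifier": db_id,
            "DBSnapshotIdentifier": plain_snapshot,
        }),
        # Supplying a KMS key on the copy is what makes the new snapshot encrypted.
        ("CopyDBSnapshot", {
            "SourceDBSnapshotIdentifier": plain_snapshot,
            "TargetDBSnapshotIdentifier": encrypted_snapshot,
            "KmsKeyId": kms_key_id,
        }),
        ("RestoreDBInstanceFromDBSnapshot", {
            "DBInstanceIdentifier": f"{db_id}-encrypted",
            "DBSnapshotIdentifier": encrypted_snapshot,
        }),
    ]


plan = encrypt_db_plan("orders-db", "alias/hypothetical-key")
```

Because the restore creates a new instance with a new endpoint, applications need to be pointed at the encrypted instance afterwards.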

- Encryption at rest capability with AWS KMS - AES-256 encryption

- To enforce SSL:

- PostgreSQL: rds.force_ssl=1 in the AWS RDS Console (Parameter Groups)

- MySQL: Within the DB: `GRANT USAGE ON *.* TO 'mysqluser'@'%' REQUIRE SSL;`

- To connect using SSL:

- Provide the SSL trust certificate (can be downloaded from AWS)

- Provide SSL options when connecting to database
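
For PostgreSQL, the two connection steps roughly correspond to the libpq keywords `sslrootcert` and `sslmode`. The sketch below only builds a connection string; the host name, database, user and certificate path are hypothetical, and the string would be handed to whichever PostgreSQL client library is in use.

```python
def postgres_ssl_dsn(host: str, dbname: str, user: str, ca_bundle_path: str) -> str:
    """Build a libpq-style connection string that verifies the server certificate."""
    options = {
        "host": host,
        "port": "5432",
        "dbname": dbname,
        "user": user,
        "sslmode": "verify-full",       # verify the certificate and the host name
        "sslrootcert": ca_bundle_path,  # trust certificate downloaded from AWS
    }
    return " ".join(f"{key}={value}" for key, value in options.items())


dsn = postgres_ssl_dsn(
    "mydb.example.eu-west-1.rds.amazonaws.com",  # hypothetical endpoint
    "appdb",
    "appuser",
    "/path/to/rds-ca-bundle.pem",                # hypothetical path
)
```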

- RDS, in general, is not serverless (except Aurora Serverless which is serverless)

- We cannot access the RDS virtual machines. Patching the RDS operating system is Amazon's responsibility

- CloudWatch Metrics (Gathered from the Hypervisor) -

- DatabaseConnections

- SwapUsage

- ReadIOPS / WriteIOPS

- ReadLatency / WriteLatency

- ReadThroughput / WriteThroughput

- DiskQueueDepth

- FreeStorageSpace

- Enhanced Monitoring metrics are useful when it is required to see how different processes or threads on a DB instance use the CPU

- IAM users can be used to manage DB (only for MySQL/Aurora)

- Key RDS APIs

- DescribeDBInstances - Lists DB instances including read replicas and also provides DB version

- CreateDBSnapshot

- DescribeEvents

- RebootDBInstance

- RDS Performance Insights

- By Waits - find the resource that is the bottleneck (CPU, IO, lock etc.)

- By SQL Statements - find the SQL statement that is the problem

- By Hosts - find the server that is using the DB most

- By Users - find the user that is using the DB most

DynamoDB

- Supports both document and key-value data model

- Stored on SSD storage

- Spread across 3 geographically distributed data centers

- Supports both Eventually Consistent Reads (Default) & Strongly Consistent Reads

- Serverless service

- Amazon DynamoDB Accelerator (DAX) is a fully managed, highly available, in-memory cache that can reduce Amazon DynamoDB response times from milliseconds to microseconds

- DynamoDB auto scaling modifies provisioned throughput settings only when the actual workload stays elevated (or depressed) for a sustained period of several minutes

- To enable DynamoDB auto scaling for the ProductCatalog table, you create a scaling policy. This policy specifies the following:

- The table or global secondary index that you want to manage

- Which capacity type to manage (read capacity or write capacity)

- The upper and lower boundaries for the provisioned throughput settings

- Your target utilization
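
The four items of the scaling policy map onto two Application Auto Scaling requests: registering a scalable target (resource, capacity type, boundaries) and attaching a target tracking policy (target utilization). The sketch below builds both parameter sets for read capacity on the ProductCatalog table; the policy name, the helper function and the numeric values are assumptions, and nothing is sent to AWS.

```python
from typing import Tuple


def read_autoscaling_config(table: str, min_rcu: int, max_rcu: int,
                            target_utilization: float) -> Tuple[dict, dict]:
    """Build parameters for RegisterScalableTarget and PutScalingPolicy."""
    common = {
        "ServiceNamespace": "dynamodb",
        "ResourceId": f"table/{table}",                           # what to manage
        "ScalableDimension": "dynamodb:table:ReadCapacityUnits",  # capacity type
    }
    scalable_target = {**common, "MinCapacity": min_rcu, "MaxCapacity": max_rcu}
    scaling_policy = {
        **common,
        "PolicyName": f"{table}-read-target-tracking",  # hypothetical name
        "PolicyType": "TargetTrackingScaling",
        "TargetTrackingScalingPolicyConfiguration": {
            "TargetValue": target_utilization,  # percent of provisioned capacity
            "PredefinedMetricSpecification": {
                "PredefinedMetricType": "DynamoDBReadCapacityUtilization",
            },
        },
    }
    return scalable_target, scaling_policy


target, policy = read_autoscaling_config("ProductCatalog", 5, 100, 70.0)
```

Write capacity follows the same shape with the `WriteCapacityUnits` dimension and the `DynamoDBWriteCapacityUtilization` metric type.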

Redshift

- Amazon's data warehouse solution

- Single node (160 GB) or multi node (leader node and compute nodes - up to 128 compute nodes)

- Column based data store, column based compression techniques and multiple other compression techniques

- No indexes or materialized views

- Massively parallel processing

- Redshift attempts to maintain 3 copies of data (the original and replica on the compute nodes and a backup in S3)

- Available in only 1 AZ

- Backup retention period is 1 day by default which can be extended to 35 days

- Can asynchronously replicate to S3 in a different region for disaster recovery