There are several options for deploying HDI Kafka clusters. Other than the Azure portal, CLI, PowerShell, and the Azure REST API, the template-based options are as follows:

(*) Terraform did not support Private Link and outbound network setup at the time this document was prepared.

For now we have no ready template for this configuration. If you want to deploy a Kafka cluster with Private Link and no public IPs, there are some key preparation points to follow. Private Link configuration is only available when the HDInsight resource manager (RM) behavior is set to Outbound.

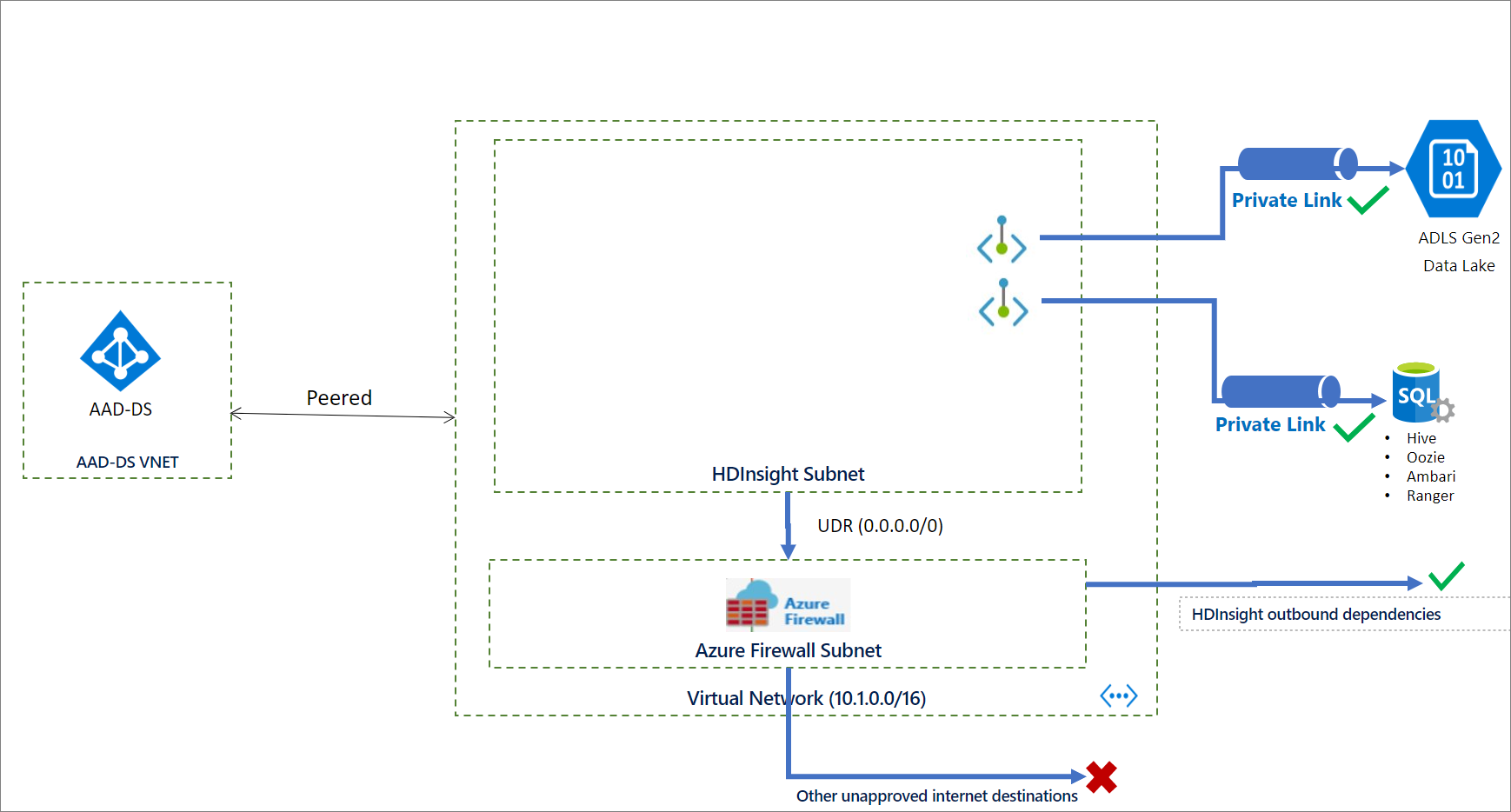

Blocking inbound traffic and restricting outbound traffic requires some preparation steps, including preparing the virtual network with the necessary subnets.

- Create a logical SQL server with 3 Azure SQL databases for the external Ambari, Hive, and Oozie metastores. ⭐

  - For the SQL server, you need to whitelist the HDI management service IPs. ⭐

  - Allow access from all Azure services.

- Create and provide the storage account upfront.

  - Keep the storage account public 😟

  - If you set the storage type to Data Lake Gen2 (hierarchical), you also need to create a user-assigned managed identity for access. ⭐

  - Assign the Storage Blob Data Owner role to the created managed identity on the storage account from the Access Control menu. ⭐

  - Whitelist the HDI management service IPs. ⭐

- Create a subnet for HDInsight with an NSG.

  - Whitelist the HDI management service IPs for outbound. ⭐

- Create private links for the logical SQL server and the storage account into the HDInsight dedicated subnet. ⭐

- This may sound weird, but disable private link service network policies for the HDInsight subnet 😮.

      az network vnet subnet update --name <your HDInsight Subnet> --resource-group <your HDInsight vnets resource group> --vnet-name <your HDInsight vnet> --disable-private-link-service-network-policies true

Because HDInsight Private Link clusters have Standard load balancers instead of Basic ones, we need to configure a NAT solution or a firewall to route the outbound traffic to the HDInsight resource manager IPs.

- Create a Firewall in a dedicated firewall subnet.

- Create a route table.

- Configure the firewall application and network rules and the route table according to this guidance.

- Associate the routing table with the HDInsight Subnet.

The network and service architecture will be like below before provisioning:

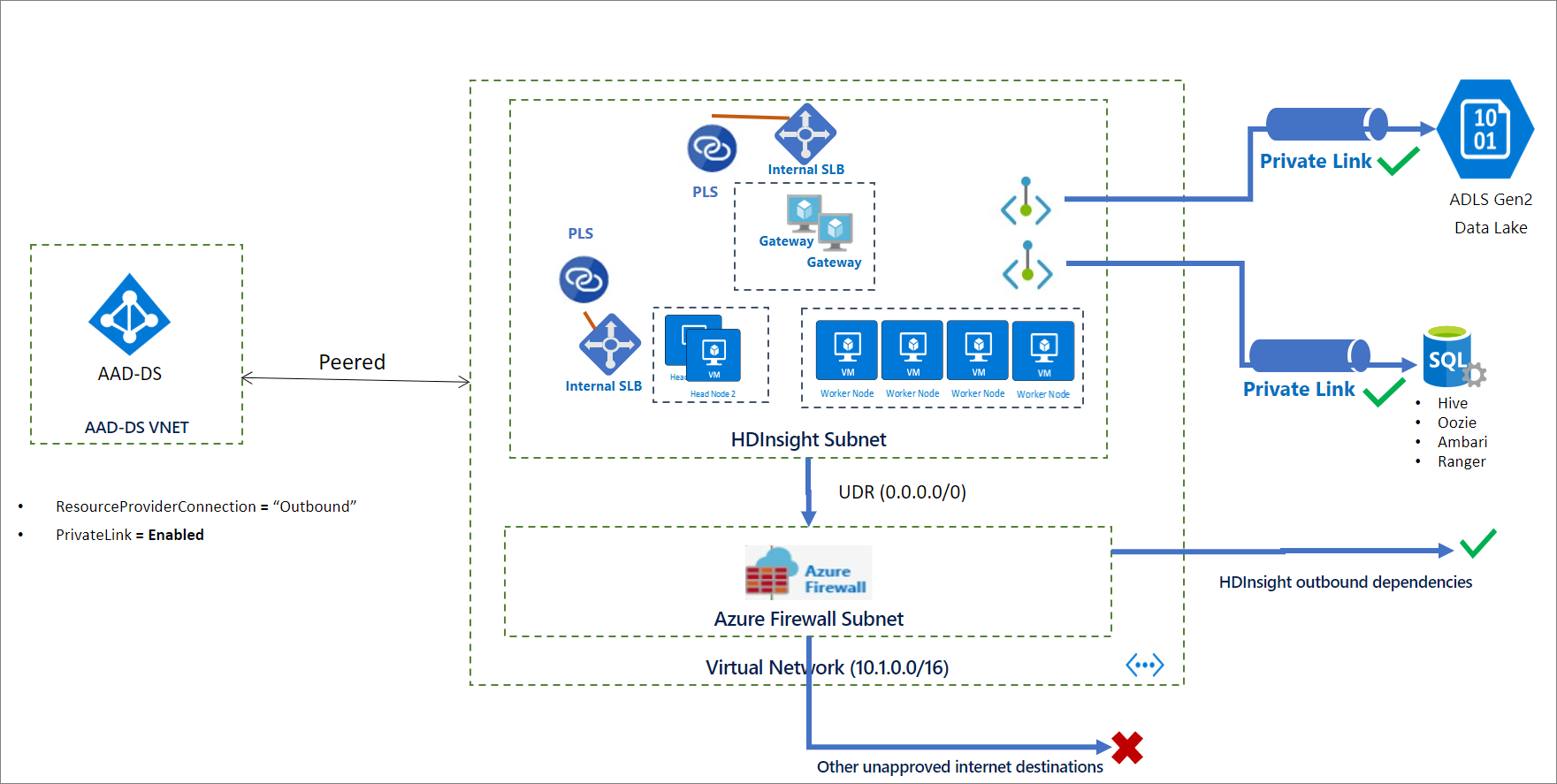

During provisioning:

- Remove public IP addresses by setting `resourceProviderConnection` to `outbound`. ⭐

- Enable Azure Private Link and use private endpoints by setting `privateLink` to `enabled`.
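As a minimal sketch of how these two settings relate, the Python snippet below builds a `networkProperties` fragment and rejects the one combination this document rules out (Private Link without an outbound connection). The function name `build_network_properties` is hypothetical, and the `"Outbound"`/`"Enabled"` casing assumes the ARM template schema; check the casing against the deployment tool you actually use.

```python
import json

VALID_CONNECTIONS = {"Inbound", "Outbound"}
VALID_PRIVATE_LINK = {"Enabled", "Disabled"}


def build_network_properties(resource_provider_connection: str, private_link: str) -> dict:
    """Return a networkProperties fragment, refusing unsupported combinations."""
    if resource_provider_connection not in VALID_CONNECTIONS:
        raise ValueError(f"unknown resourceProviderConnection: {resource_provider_connection}")
    if private_link not in VALID_PRIVATE_LINK:
        raise ValueError(f"unknown privateLink: {private_link}")
    # Private Link is only available when the connection is outbound.
    if private_link == "Enabled" and resource_provider_connection != "Outbound":
        raise ValueError("privateLink=Enabled requires resourceProviderConnection=Outbound")
    return {
        "networkProperties": {
            "resourceProviderConnection": resource_provider_connection,
            "privateLink": private_link,
        }
    }


if __name__ == "__main__":
    # Illustrative values only: outbound connection with Private Link enabled.
    print(json.dumps(build_network_properties("Outbound", "Enabled"), indent=2))
```

Validating the pair before deployment is a design choice for this sketch; it surfaces the dependency early instead of waiting for the deployment to fail.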

After provisioning the architecture will be like below:

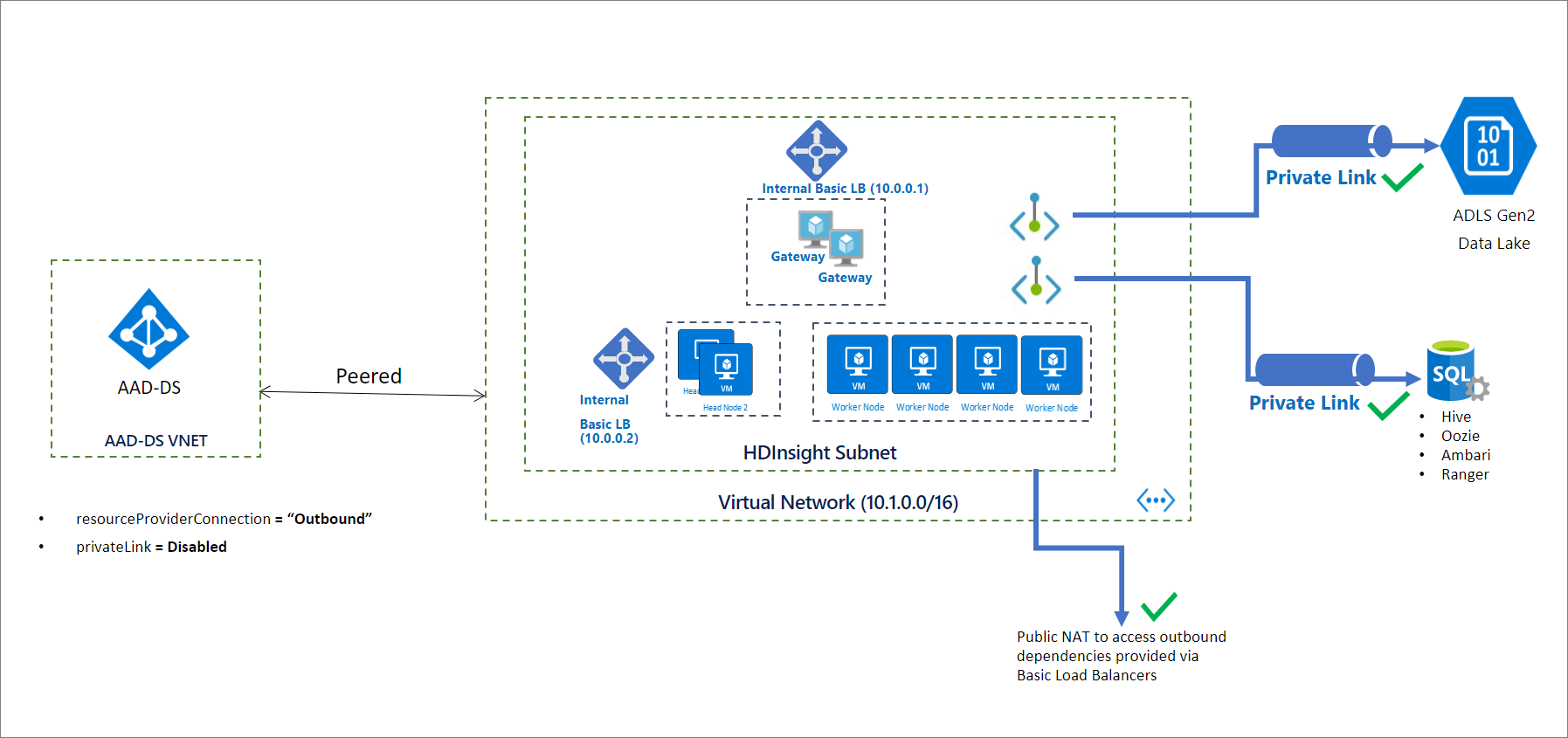

If you only aim to limit outbound connections without Private Link, you can perform only the steps that are marked with ⭐. In that case the cluster is still deployed with Basic load balancers, which provide NAT by default, so you won't need a firewall definition. Your network architecture will be as below:

For additional information on how to control network traffic, you can use this guidance.

If you want to use Kafka with VNet peering, you need to change the Kafka broker setting to advertise IP addresses instead of FQDNs.
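To picture what that broker change amounts to, here is a hedged Python sketch that rewrites `server.properties` text so `advertised.listeners` points at an IP address. The function name `advertise_ip`, the `PLAINTEXT` protocol, port 9092, and the sample host name are assumptions for illustration. On HDInsight the change is normally made through the Kafka configuration in Ambari, not by editing the file by hand.

```python
def advertise_ip(properties_text: str, ip_address: str, port: int = 9092) -> str:
    """Return server.properties text whose advertised.listeners points at an IP."""
    kept = [
        line
        for line in properties_text.splitlines()
        # Drop any existing advertised.listeners entry; commented lines are kept.
        if not line.strip().startswith("advertised.listeners")
    ]
    kept.append(f"advertised.listeners=PLAINTEXT://{ip_address}:{port}")
    return "\n".join(kept) + "\n"


if __name__ == "__main__":
    # Hypothetical broker configuration that advertises an FQDN.
    original = (
        "broker.id=1001\n"
        "advertised.listeners=PLAINTEXT://wn0-kafka.example.internal:9092\n"
    )
    print(advertise_ip(original, "10.0.0.12"))
```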

Reference guide for the steps below: https://docs.microsoft.com/en-us/azure/hdinsight/kafka/apache-kafka-get-started#connect-to-the-cluster

- SSH to the cluster:

      ssh sshuser@<yourclustername>-ssh.azurehdinsight.net

- Set the configuration for the session. Install jq, a command-line JSON processor:

      sudo apt -y install jq

- Store the password in an environment variable for the session, for use in the commands that follow:

      export password='<youradminpassword>'

- Extract the correctly cased cluster name:

      export clusterName=$(curl -u admin:$password -sS -G "http://headnodehost:8080/api/v1/clusters" | jq -r '.items[].Clusters.cluster_name')

- Set an environment variable with the ZooKeeper host information:

      export KAFKAZKHOSTS=$(curl -sS -u admin:$password -G https://$clusterName.azurehdinsight.net/api/v1/clusters/$clusterName/services/ZOOKEEPER/components/ZOOKEEPER_SERVER | jq -r '["\(.host_components[].HostRoles.host_name):2181"] | join(",")' | cut -d',' -f1,2); echo $KAFKAZKHOSTS

- Set an environment variable with the Apache Kafka broker host information:

      export KAFKABROKERS=$(curl -sS -u admin:$password -G https://$clusterName.azurehdinsight.net/api/v1/clusters/$clusterName/services/KAFKA/components/KAFKA_BROKER | jq -r '["\(.host_components[].HostRoles.host_name):9092"] | join(",")' | cut -d',' -f1,2); echo $KAFKABROKERS

- Create a topic. (You can also have topics created automatically for test or reliability purposes. To do that, go to Ambari, Kafka, Configs, set `auto.create.topics.enable` to `true` in the Advanced Kafka broker settings, then restart all.)

      /usr/hdp/current/kafka-broker/bin/kafka-topics.sh --create --replication-factor 3 --partitions 4 --topic test --zookeeper $KAFKAZKHOSTS

- List topics:

      /usr/hdp/current/kafka-broker/bin/kafka-topics.sh --list --zookeeper $KAFKAZKHOSTS

- Write records to the topic:

      /usr/hdp/current/kafka-broker/bin/kafka-console-producer.sh --broker-list $KAFKABROKERS --topic test

- Read records from the topic:

      /usr/hdp/current/kafka-broker/bin/kafka-console-consumer.sh --bootstrap-server $KAFKABROKERS --topic test --from-beginning
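The `KAFKAZKHOSTS` and `KAFKABROKERS` steps above rely on a `jq` filter followed by `cut -d',' -f1,2`. For readers who would rather do the same transformation from a Python client, the sketch below mirrors it: it takes the JSON body returned by the Ambari endpoint, appends a port to each host name, and keeps the first two entries. The function name `host_list` and the sample host names are hypothetical; only the `host_components[].HostRoles.host_name` path comes from the commands above.

```python
import json


def host_list(ambari_response: str, port: int, limit: int = 2) -> str:
    """Mirror the jq + cut pipeline: 'host:port' entries, first `limit` only."""
    payload = json.loads(ambari_response)
    hosts = [
        f"{component['HostRoles']['host_name']}:{port}"
        for component in payload["host_components"]
    ]
    # cut -d',' -f1,2 keeps only the first two comma-separated fields.
    return ",".join(hosts[:limit])


if __name__ == "__main__":
    # Made-up response body with three broker hosts.
    sample = json.dumps({
        "host_components": [
            {"HostRoles": {"host_name": "wn0-kafka.example.internal"}},
            {"HostRoles": {"host_name": "wn1-kafka.example.internal"}},
            {"HostRoles": {"host_name": "wn2-kafka.example.internal"}},
        ]
    })
    print(host_list(sample, 9092))
```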

You can also use the sample Python consumer and producer applications below from a VM client:

- Producer: KafkaProducer.ipynb

- Consumer: KafkaConsumer.ipynb

Besides the many other possible Kafka Connect cluster architectures, you can create a standalone or distributed Kafka Connect cluster on edge nodes of the cluster. To test that configuration, you can follow this lab.

HDInsight Kafka clusters do not support autoscale, because automatic downscaling does not fit the way brokers and partitions are set up.

You should rebalance Kafka partitions and replicas after scaling operations.

HDInsight cluster nodes are spread across different fault domains to prevent loss if a specific fault domain has an issue.

Kafka as an application is not rack aware and, in the case of Azure, not fault domain aware. To make Kafka topics as highly available across fault domains as the nodes are, you need to run the partition rebalance tool when a new cluster is created, when a new topic or partition is created, and when the cluster is scaled up.
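To illustrate what "fault domain aware" placement means, the sketch below builds a reassignment plan in the JSON shape accepted by `kafka-reassign-partitions.sh`, putting each replica of a partition in a different fault domain. This is a simplified round-robin illustration, not the algorithm used by the HDInsight partition rebalance tool. The function name `spread_replicas`, the broker IDs, and the broker-to-fault-domain mapping are all made up.

```python
import json


def spread_replicas(topic, partition_count, replication_factor, brokers_by_domain):
    """Build a reassignment plan with one replica per fault domain."""
    domains = sorted(brokers_by_domain)
    if replication_factor > len(domains):
        raise ValueError("replication factor exceeds the number of fault domains")
    partitions = []
    for partition in range(partition_count):
        replicas = []
        for offset in range(replication_factor):
            # Rotate the starting domain per partition so first replicas vary.
            domain = domains[(partition + offset) % len(domains)]
            brokers = brokers_by_domain[domain]
            replicas.append(brokers[(partition // len(domains)) % len(brokers)])
        partitions.append({"topic": topic, "partition": partition, "replicas": replicas})
    return {"version": 1, "partitions": partitions}


if __name__ == "__main__":
    # Hypothetical layout: fault domain -> broker IDs located in it.
    layout = {0: [1001, 1002], 1: [1003, 1004], 2: [1005, 1006]}
    print(json.dumps(spread_replicas("test", 4, 3, layout), indent=2))
```

With this layout and a replication factor of 3, losing any single fault domain leaves two replicas of every partition available.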

There is no built-in multi-region configuration for HDI Kafka. You can use MirrorMaker to replicate the cluster.

https://docs.microsoft.com/en-us/azure/hdinsight/kafka/apache-kafka-high-availability

- Upgrading HDI and Kafka version: HDInsight does not support in-place upgrades where an existing cluster is upgraded to a newer component version. You must create a new cluster with the desired component and platform version and then migrate your applications to use the new cluster. For more detail read here.

- Patching HDI: Running clusters aren't auto-patched. Customers must use script actions or other mechanisms to patch a running cluster. For more detail read here.

- Network Security: We described the network security options above.

- Authentication: Enterprise Security Package from HDInsight provides Active Directory-based authentication, multi-user support, and role-based access control. The Active Directory integration is achieved through the use of Azure Active Directory Domain Services. With these capabilities, you can create an HDInsight cluster joined to an Active Directory domain, then configure a list of employees from the enterprise who can authenticate to the cluster. For example, the admin can configure Apache Ranger to set access control policies for Hive. This functionality ensures row-level and column-level filtering (data masking) and filters sensitive data from unauthorized users.

User syncing for Apache Ranger and Ambari access has to be done separately, since these services don't use the same metastore. The guidance can be found here.

- Auditing: The HDInsight admin can view and report all access to the HDInsight cluster resources and data. The admin can view and report changes to the access control policies. To access Apache Ranger and Ambari audit logs, and SSH access logs, enable Azure Monitor and view the tables that provide auditing records.

- Encryption: HDInsight supports data encryption at rest with both platform-managed and customer-managed keys. Encryption of data in transit is handled with both TLS and IPSec. See Encryption in transit for Azure HDInsight for more information.

Important: Using private endpoints for Azure Key Vault is not supported by HDInsight. If you're using Azure Key Vault for CMK encryption at rest, the Azure Key Vault endpoint must be accessible from within the HDInsight subnet with no private endpoint.

More details on security can be found here: https://docs.microsoft.com/en-us/azure/hdinsight/domain-joined/hdinsight-security-overview

For ACL integration with ADLS, you can follow the guidance below: https://azure.microsoft.com/en-us/blog/azure-hdinsight-integration-with-data-lake-storage-gen-2-preview-acl-and-security-update/