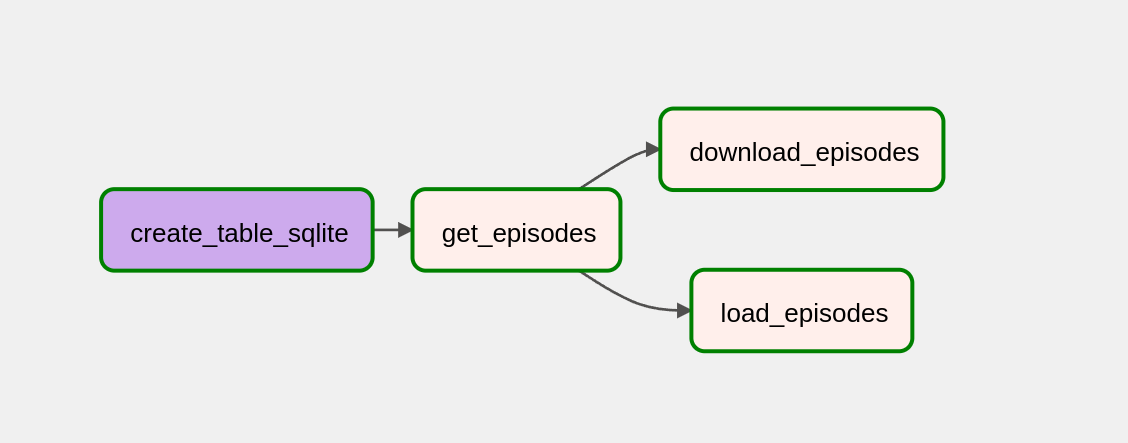

This Airflow DAG, podcast_summary2, extracts, transforms, and loads podcast episodes from the Marketplace podcast feed and stores them in a SQLite database. It also downloads the audio file of each episode to a specified directory.

- Airflow

- requests library

- xmltodict library

- SQLite

- dag_id: podcast_summary2

- description: podcasts

- start_date: March 15, 2023

- schedule_interval: Daily

- catchup: False
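
As a rough illustration, the settings above could be expressed in code along the following lines. This is a minimal sketch, not the DAG's actual source: `DAG_ARGS` and `build_dag` are hypothetical names, the `"@daily"` preset is assumed to be what "Daily" refers to, and the TaskFlow `@dag` decorator from Airflow 2.x is assumed. Newer Airflow releases name the scheduling parameter `schedule` instead of `schedule_interval`.

```python
from datetime import datetime

# Settings taken from the DAG configuration listed above.
# "@daily" is Airflow's preset for a once-a-day schedule (assumed here).
DAG_ARGS = {
    "dag_id": "podcast_summary2",
    "description": "podcasts",
    "start_date": datetime(2023, 3, 15),
    "schedule_interval": "@daily",
    "catchup": False,
}


def build_dag():
    """Hypothetical helper: declare the DAG with the TaskFlow decorator."""
    # Imported lazily so the settings can be inspected without Airflow installed.
    from airflow.decorators import dag

    @dag(**DAG_ARGS)
    def podcast_summary2():
        pass  # the tasks described in this README would be declared here

    return podcast_summary2()
```

In a DAG file, the result of `build_dag()` would typically be assigned to a module-level variable so that the scheduler can discover it.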

This task creates a table named episodes in the SQLite database, with columns link, title, filename, published, and description.
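
The table described above might be created with SQL like the following. This sketch uses Python's built-in `sqlite3` module rather than an Airflow operator so that it runs on its own. The column types and the choice of link as primary key are assumptions, as is the helper name `create_episodes_table`.

```python
import sqlite3

# Column names come from the description above; the TEXT types and the
# PRIMARY KEY on link are assumptions made for this sketch.
CREATE_EPISODES_SQL = """
CREATE TABLE IF NOT EXISTS episodes (
    link TEXT PRIMARY KEY,
    title TEXT,
    filename TEXT,
    published TEXT,
    description TEXT
);
"""


def create_episodes_table(conn: sqlite3.Connection) -> None:
    """Create the episodes table if it does not exist yet."""
    conn.execute(CREATE_EPISODES_SQL)
    conn.commit()
```

Because of `IF NOT EXISTS`, running the statement on every DAG run is harmless.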

This task retrieves the XML data from the Marketplace podcast feed, and parses it to extract the podcast episodes. The episodes are returned as a list.
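
A minimal sketch of this step, using the requests and xmltodict libraries listed above. The function names are hypothetical, the feed URL is left as a parameter, and the `rss` / `channel` / `item` layout is the standard RSS 2.0 structure, which the feed is assumed to follow.

```python
def fetch_feed(feed_url: str) -> dict:
    """Download the feed and parse the XML into nested dictionaries."""
    # Third-party libraries, imported lazily so the parsing helper below
    # can be used without them.
    import requests
    import xmltodict

    response = requests.get(feed_url, timeout=30)
    response.raise_for_status()
    return xmltodict.parse(response.text)


def extract_episodes(feed: dict) -> list:
    """Return the feed's episodes as a list of dictionaries."""
    items = feed["rss"]["channel"].get("item", [])
    # xmltodict yields a single dict, not a list, when only one <item> exists.
    if isinstance(items, dict):
        items = [items]
    return list(items)
```

Keeping the parsing separate from the HTTP request makes the extraction logic easy to check against a hand-built dictionary.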

This task loads the new episodes into the SQLite database. It checks if an episode already exists in the database by comparing its link, and only inserts new episodes. The task also generates the filename of the episode and inserts it into the filename column.
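
The deduplication logic can be sketched as follows. This is an illustration under stated assumptions rather than the DAG's actual code: it uses the built-in `sqlite3` module directly, the helper names are hypothetical, the episode keys (`link`, `title`, `pubDate`, `description`) are the standard RSS field names, and deriving the filename from the last segment of the link is an assumed rule.

```python
import sqlite3


def episode_filename(link: str) -> str:
    """Assumed naming rule: last path segment of the link plus '.mp3'."""
    return f"{link.rstrip('/').split('/')[-1]}.mp3"


def load_new_episodes(conn: sqlite3.Connection, episodes: list) -> list:
    """Insert episodes whose link is not stored yet; return the new rows."""
    stored = {row[0] for row in conn.execute("SELECT link FROM episodes")}
    new_rows = []
    for ep in episodes:
        if ep["link"] in stored:
            continue  # already in the database, skip it
        new_rows.append(
            (ep["link"], ep["title"], episode_filename(ep["link"]),
             ep["pubDate"], ep["description"])
        )
        stored.add(ep["link"])  # guards against duplicates within one batch
    conn.executemany(
        "INSERT INTO episodes (link, title, filename, published, description) "
        "VALUES (?, ?, ?, ?, ?)",
        new_rows,
    )
    conn.commit()
    return new_rows
```

Returning only the newly inserted rows lets a later step work on just those episodes.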

This task downloads the audio files of the podcast episodes to the specified directory. It iterates over the list of episodes, and downloads the audio file for each episode if it does not already exist in the specified directory.
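
A minimal sketch of the skip-if-present download loop. The function names and the episode keys `filename` and `audio_url` are hypothetical. In a real feed the audio URL would usually come from each item's enclosure element.

```python
from pathlib import Path
from typing import Callable, Iterable


def http_get_bytes(url: str) -> bytes:
    """Fetch a URL and return the response body as bytes."""
    import requests  # third-party; imported lazily

    response = requests.get(url, timeout=60)
    response.raise_for_status()
    return response.content


def download_episodes(
    episodes: Iterable[dict],
    target_dir: str,
    fetch: Callable[[str], bytes] = http_get_bytes,
) -> list:
    """Download audio files that are not yet present in target_dir."""
    target = Path(target_dir).expanduser()  # resolves a leading "~"
    target.mkdir(parents=True, exist_ok=True)
    downloaded = []
    for ep in episodes:
        audio_path = target / ep["filename"]
        if audio_path.exists():
            continue  # file already downloaded on an earlier run
        audio_path.write_bytes(fetch(ep["audio_url"]))
        downloaded.append(audio_path)
    return downloaded
```

The `fetch` parameter exists only so the loop can be exercised without network access; by default it performs a normal HTTP GET.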

This DAG assumes that a SQLite database connection named podcasts has been created in Airflow, and that the path of the database file is ~/airflow/dags/episodes.db. The downloaded audio files will be stored in ~/airflow/dags/episodes/. You may need to modify the file paths in the code to match your environment.