requirements.txt is included in each stage directory. The copies are identical, so you can use any of them.

If you use an Anaconda virtual environment, create and activate it:

    conda create -n sasv python=3.9 cudatoolkit=11.3
    conda activate sasv

Then install all dependency packages:

    pip3 install -r requirements.txt

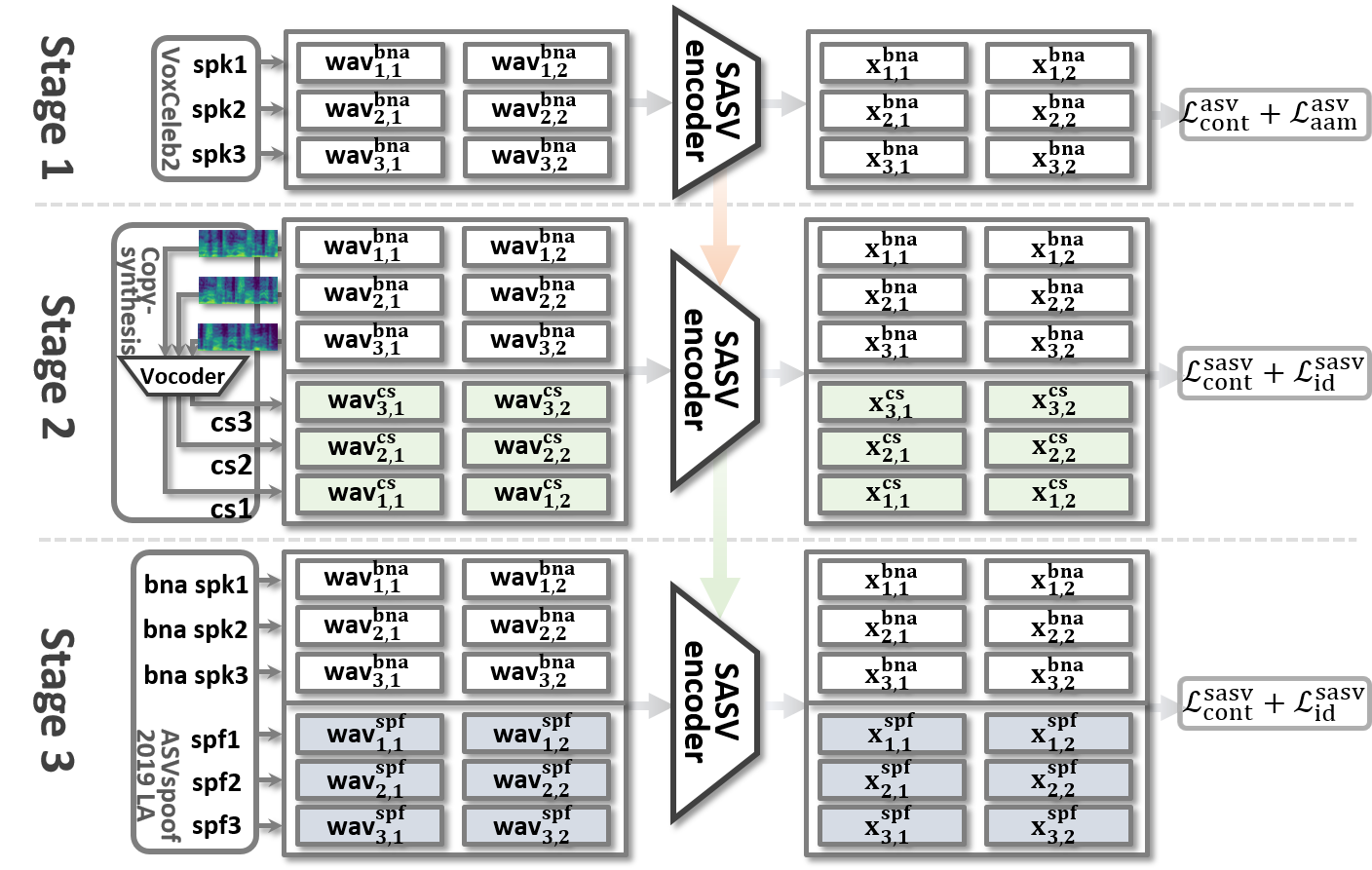

In Stage 1, the model learns to discriminate between target and bona fide non-target speakers using the VoxCeleb2 database, which contains data collected from thousands of bona fide speakers. In this repository, we provide the pre-trained weights of the following models:

- ECAPA-TDNN: ecapa_tdnn.model (64MB)

- MFA-Conformer: mfa_conformer.model (89MB)

- SKA-TDNN: ska_tdnn.model (123MB)

| Model | Params | SASV-EER (%) | SV-EER (%) | SPF-EER (%) |
|---|---|---|---|---|
| ECAPA-TDNN | 16.7M | 20.66 | 0.74 | 27.30 |
| MFA-Conformer | 20.9M | 20.22 | 0.41 | 26.52 |
| SKA-TDNN | 29.4M | 16.74 | 0.38 | 22.38 |
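The three metrics in the table above differ only in which trials count as negatives. The sketch below assumes the SASV 2022 challenge convention, which this README does not spell out: SV-EER scores target trials against bona fide non-target trials, SPF-EER scores them against spoofed trials, and SASV-EER scores them against both. The function names and the toy scores are illustrative and are not part of this repository.

```python
def compute_eer(positive_scores, negative_scores):
    """Approximate the equal error rate (in %) by sweeping a threshold.

    A trial is accepted when its score is >= the threshold. The EER is taken
    as the mean of FAR and FRR at the threshold where the two are closest.
    """
    thresholds = sorted(set(positive_scores) | set(negative_scores))
    best_gap, eer = float("inf"), None
    for t in thresholds:
        # False rejection rate: positives that fall below the threshold.
        frr = sum(s < t for s in positive_scores) / len(positive_scores)
        # False acceptance rate: negatives that reach the threshold.
        far = sum(s >= t for s in negative_scores) / len(negative_scores)
        if abs(far - frr) < best_gap:
            best_gap, eer = abs(far - frr), (far + frr) / 2
    return 100.0 * eer


def sasv_metrics(trials):
    """trials: list of (score, key), key in {"target", "nontarget", "spoof"}."""
    tgt = [s for s, k in trials if k == "target"]
    non = [s for s, k in trials if k == "nontarget"]
    spf = [s for s, k in trials if k == "spoof"]
    return {
        "SASV-EER": compute_eer(tgt, non + spf),  # both kinds of negatives
        "SV-EER": compute_eer(tgt, non),          # bona fide non-target only
        "SPF-EER": compute_eer(tgt, spf),         # spoofed only
    }


# Toy scores (made up for illustration): well separated from non-targets,
# but overlapping with spoofed trials.
toy_trials = [
    (0.9, "target"), (0.8, "target"), (0.7, "target"), (0.4, "target"),
    (0.3, "nontarget"), (0.2, "nontarget"), (0.1, "nontarget"), (0.05, "nontarget"),
    (0.85, "spoof"), (0.75, "spoof"), (0.5, "spoof"), (0.1, "spoof"),
]
print(sasv_metrics(toy_trials))
```

The toy data mirrors the pattern in the table: a model trained only on bona fide speech can have a low SV-EER while its SPF-EER, and therefore its SASV-EER, stays high.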

You can evaluate the pre-trained weights using the following commands:

    cd stage3

    python trainSASVNet.py \
        --eval \
        --test_list ./protocols/ASVspoof2019.LA.asv.eval.gi.trl.txt \
        --test_path /path/to/dataset/ASVSpoof/ASVSpoof2019/LA/ASVspoof2019_LA_eval/wav \
        --model ECAPA_TDNN \
        --initial_model /path/to/weight/ecapa_tdnn.model

    python trainSASVNet.py \
        --eval \
        --test_list ./protocols/ASVspoof2019.LA.asv.eval.gi.trl.txt \
        --test_path /path/to/dataset/ASVSpoof/ASVSpoof2019/LA/ASVspoof2019_LA_eval/wav \
        --model MFA_Conformer \
        --initial_model /path/to/weight/mfa_conformer.model

    python trainSASVNet.py \
        --eval \
        --test_list ./protocols/ASVspoof2019.LA.asv.eval.gi.trl.txt \
        --test_path /path/to/dataset/ASVSpoof/ASVSpoof2019/LA/ASVspoof2019_LA_eval/wav \
        --model SKA_TDNN \
        --initial_model /path/to/weight/ska_tdnn.model

In Stage 2, we augment the model with the ability to discriminate between bona fide and spoofed inputs by using large-scale data generated through an oracle speech synthesis system, referred to as copy synthesis.

This repository supports copy-synthesis training using copy-synthesized data from either the VoxCeleb2 dev set or the ASVspoof2019 LA train or train+dev sets.
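One way to picture the Stage 2 data is as a training list in which every bona fide utterance appears alongside its copy-synthesized counterpart, with the latter labelled as spoofed. The sketch below is illustrative only: the `_cs` file-name suffix, the tuple layout, the example paths, and the function name `build_stage2_list` are all assumptions and do not reflect this repository's actual data loader or directory layout.

```python
from pathlib import PurePosixPath


def build_stage2_list(bona_fide_files, cs_suffix="_cs"):
    """Pair each bona fide utterance with a copy-synthesized version.

    bona_fide_files: iterable of (speaker_id, relative_wav_path).
    Returns a list of (speaker_id, path, is_spoof) tuples. The path of the
    copy-synthesized file is derived by appending `cs_suffix` to the file
    stem, which is a hypothetical naming scheme.
    """
    entries = []
    for speaker_id, wav in bona_fide_files:
        path = PurePosixPath(wav)
        cs_path = path.with_name(path.stem + cs_suffix + path.suffix)
        entries.append((speaker_id, str(path), False))    # bona fide original
        entries.append((speaker_id, str(cs_path), True))  # copy-synthesized, treated as spoofed
    return entries


# Placeholder speaker ID and path, not real dataset entries.
example = build_stage2_list([("spk0001", "spk0001/utt0001.wav")])
for entry in example:
    print(entry)
```

Because both entries keep the same speaker label, a list like this lets one training pass cover speaker identity and the bona fide versus spoofed distinction at once.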

Although Stages 1 and 2 teach the model to discriminate bona fide non-target and spoofed non-target inputs, a domain mismatch with the evaluation protocol remains. Furthermore, artefacts from the acoustic model have yet to be learned. Hence, in Stage 3, we fine-tune the model using the in-domain bona fide and spoofed data contained within the ASVspoof2019 LA dataset.

| # | Stage 1: ASV-based pre-training | Stage 2: copy-synthesis training | Stage 3: in-domain fine-tuning | SASV-EER (%): SKA-TDNN, train | SASV-EER (%): SKA-TDNN, train+dev | SASV-EER (%): MFA-Conformer, train | SASV-EER (%): MFA-Conformer, train+dev |
|---|---|---|---|---|---|---|---|
| 1 | - | - | ASVspoof2019(bna+spf) | 9.55 | 5.94 | 11.47 | 7.67 |
| 2 | VoxCeleb2(bna) | - | - | - | 16.74 | - | 20.22 |
| 3 | VoxCeleb2(bna) | - | ASVspoof2019(bna+spf) | 2.67 | 1.25 | 2.13 | 1.51 |
| 4 | - | VoxCeleb2(bna+cs) | - | - | 13.11 | - | 14.27 |
| 5 | - | VoxCeleb2(bna+cs) | ASVspoof2019(bna+spf) | 2.47 | 1.93 | 1.91 | 1.35 |
| 6 | VoxCeleb2(bna) | VoxCeleb2(bna+cs) | - | - | 10.24 | - | 12.33 |
| 7 | VoxCeleb2(bna) | VoxCeleb2(bna+cs) | ASVspoof2019(bna+spf) | 1.83 | 1.56 | 1.19 | 1.06 |
| 8 | - | ASVspoof2019(bna+cs) | - | 13.10 | 10.49 | 13.63 | 12.48 |
| 9 | - | ASVspoof2019(bna+cs) | ASVspoof2019(bna+spf) | 9.57 | 6.17 | 13.46 | 10.11 |
| 10 | VoxCeleb2(bna) | ASVspoof2019(bna+cs) | - | 5.62 | 4.93 | 9.31 | 8.32 |
| 11 | VoxCeleb2(bna) | ASVspoof2019(bna+cs) | ASVspoof2019(bna+spf) | 2.48 | 1.44 | 2.72 | 1.76 |
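To make the configurations easier to compare, the sketch below copies the train+dev SASV-EER values for the rows that include Stage 3 fine-tuning and computes each row's relative reduction over fine-tuning alone (row 1). The dictionary layout and function names are illustrative; the numbers are taken from the table above.

```python
# SASV-EER (%) in the train+dev columns for rows that include Stage 3.
# Keys are the table's row numbers; values are (SKA-TDNN, MFA-Conformer).
results = {
    1: (5.94, 7.67),
    3: (1.25, 1.51),
    5: (1.93, 1.35),
    7: (1.56, 1.06),
    9: (6.17, 10.11),
    11: (1.44, 1.76),
}


def relative_reduction(baseline, value):
    """Relative error reduction in percent (positive means lower than baseline)."""
    return 100.0 * (baseline - value) / baseline


def best_row(table, column):
    """Return the row number with the lowest SASV-EER in the given column."""
    return min(table, key=lambda row: table[row][column])


base_ska, base_mfa = results[1]
for row, (ska, mfa) in results.items():
    print(
        f"row {row:>2}: SKA-TDNN {relative_reduction(base_ska, ska):6.1f}%  "
        f"MFA-Conformer {relative_reduction(base_mfa, mfa):6.1f}%"
    )

print("best SKA-TDNN row:", best_row(results, 0))
print("best MFA-Conformer row:", best_row(results, 1))
```

On these numbers, the lowest train+dev SASV-EER is row 3 for SKA-TDNN (1.25%) and row 7 for MFA-Conformer (1.06%).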

You can download each set of pre-trained weights from the links above.

If you use this repository, please cite the following papers:

    @inproceedings{chung2020in,
      title={In defence of metric learning for speaker recognition},
      author={Chung, Joon Son and Huh, Jaesung and Mun, Seongkyu and Lee, Minjae and Heo, Hee Soo and Choe, Soyeon and Ham, Chiheon and Jung, Sunghwan and Lee, Bong-Jin and Han, Icksang},
      booktitle={Proc. Interspeech},
      year={2020}
    }

    @inproceedings{jung2022pushing,
      title={Pushing the limits of raw waveform speaker recognition},
      author={Jung, Jee-weon and Kim, You Jin and Heo, Hee-Soo and Lee, Bong-Jin and Kwon, Youngki and Chung, Joon Son},
      booktitle={Proc. Interspeech},
      year={2022}
    }

    @inproceedings{mun2022frequency,
      title={Frequency and Multi-Scale Selective Kernel Attention for Speaker Verification},
      author={Mun, Sung Hwan and Jung, Jee-weon and Han, Min Hyun and Kim, Nam Soo},
      booktitle={Proc. IEEE SLT},
      year={2022}
    }