Human Activity Recognition (HAR) using the smartphones dataset and an LSTM RNN. The task is to classify the type of movement into one of six categories:

- WALKING,

- WALKING_UPSTAIRS,

- WALKING_DOWNSTAIRS,

- SITTING,

- STANDING,

- LAYING.

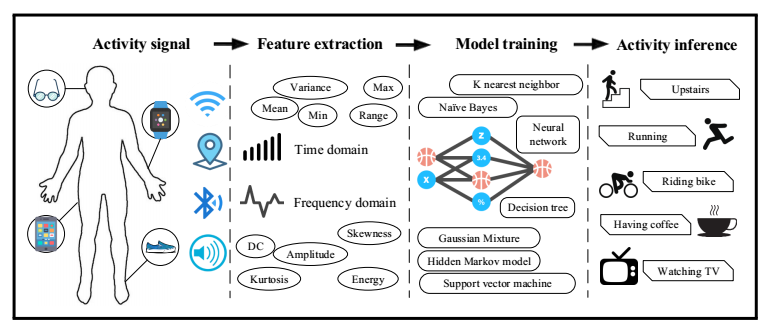

Compared to classical Machine Learning approaches, Recurrent Neural Networks (RNNs) with Long Short-Term Memory (LSTM) cells require little or no feature engineering. Remember, our classical Machine Learning models needed a lot of feature engineering to reach the accuracy they did (96% at most). With an RNN-LSTM, the raw data can be fed directly into the neural network, which acts like a black box, without any feature engineering whatsoever. Other research on activity recognition relies on a huge amount of feature engineering: signal processing approaches combined with classical Machine Learning/Data Science techniques. The approach here is very simple in terms of how much the data was preprocessed: almost not at all. All we need to take care of is how we design our Deep Learning models.

We will use the TensorFlow and Keras libraries to demonstrate the usage of an LSTM, a type of Artificial Neural Network that can process sequential (time series) data.

Please watch the YouTube video linked below to see how the data was recorded.

[Watch video]

I will be using a special type of Recurrent Neural Network called an LSTM on the dataset, so that it learns (as a cellphone attached to the waist) to recognise what type of activity the user is doing. A few very important points about the dataset are as follows:

- The sensor signals are preprocessed by applying noise filters and then sampled in fixed-width sliding windows of 2.56 seconds each with 50% overlap, i.e., each window has 128 readings.

- From each window, a feature vector was obtained by calculating variables from the time and frequency domains. In our dataset, each datapoint represents one window of readings.

- The acceleration signal was separated into body and gravity acceleration signals (tBodyAcc-XYZ and tGravityAcc-XYZ) using a low-pass filter with a corner frequency of 0.3 Hz.

- After that, the body linear acceleration and angular velocity were differentiated with respect to time to obtain jerk signals (tBodyAccJerk-XYZ and tBodyGyroJerk-XYZ).

- The magnitudes of these 3-dimensional signals were calculated using the Euclidean norm. These magnitudes are represented as features with names like tBodyAccMag, tGravityAccMag, tBodyAccJerkMag, tBodyGyroMag and tBodyGyroJerkMag.

- Finally, frequency-domain signals were obtained from some of the available signals by applying an FFT (Fast Fourier Transform). These signals are labeled with the prefix 'f', just as the original time-domain signals carry the prefix 't'. Examples are fBodyAcc-XYZ, fBodyGyroMag, etc.

- These are the signals that we have so far:

  - tBodyAcc-XYZ

  - tGravityAcc-XYZ

  - tBodyAccJerk-XYZ

  - tBodyGyro-XYZ

  - tBodyGyroJerk-XYZ

  - tBodyAccMag

  - tGravityAccMag

  - tBodyAccJerkMag

  - tBodyGyroMag

  - tBodyGyroJerkMag

  - fBodyAcc-XYZ

  - fBodyAccJerk-XYZ

  - fBodyGyro-XYZ

  - fBodyAccMag

  - fBodyAccJerkMag

  - fBodyGyroMag

  - fBodyGyroJerkMag

- In the dataset, the Y_labels are represented by numeric identifiers from 1 to 6:

  - WALKING as 1

  - WALKING_UPSTAIRS as 2

  - WALKING_DOWNSTAIRS as 3

  - SITTING as 4

  - STANDING as 5

  - LAYING as 6
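The preprocessing steps listed above can be sketched in NumPy on a synthetic signal. This is only an illustration under stated assumptions, not the dataset authors' pipeline: the 50 Hz sampling rate is inferred from 128 readings per 2.56 s window, the first-order filter is a simple stand-in for whichever low-pass filter was actually used, and the helper names (`low_pass`, `sliding_windows`) and the synthetic acceleration are hypothetical.

```python
import numpy as np

FS = 50.0            # sampling rate in Hz, inferred from 128 readings / 2.56 s
WINDOW = 128         # readings per window
STEP = WINDOW // 2   # 50% overlap between consecutive windows
CUTOFF_HZ = 0.3      # corner frequency quoted in the text


def low_pass(signal, cutoff_hz=CUTOFF_HZ, fs=FS):
    """First-order IIR low-pass along axis 0 (a stand-in, assumed filter)."""
    dt = 1.0 / fs
    rc = 1.0 / (2.0 * np.pi * cutoff_hz)
    alpha = dt / (rc + dt)
    out = np.empty_like(signal, dtype=float)
    out[0] = signal[0]
    for i in range(1, len(signal)):
        out[i] = out[i - 1] + alpha * (signal[i] - out[i - 1])
    return out


def sliding_windows(signal, window=WINDOW, step=STEP):
    """Split a (samples, channels) array into overlapping fixed-width windows."""
    starts = range(0, len(signal) - window + 1, step)
    return np.stack([signal[s:s + window] for s in starts])


# Synthetic total acceleration: constant gravity on z, 2 Hz body motion on x.
t = np.arange(500) / FS                               # 10 s of samples
total_acc = np.zeros((t.size, 3))
total_acc[:, 0] = 0.5 * np.sin(2 * np.pi * 2.0 * t)
total_acc[:, 2] = 1.0

gravity_acc = low_pass(total_acc)                     # like tGravityAcc-XYZ
body_acc = total_acc - gravity_acc                    # like tBodyAcc-XYZ
body_acc_jerk = np.gradient(body_acc, 1.0 / FS, axis=0)  # like tBodyAccJerk-XYZ
body_acc_mag = np.linalg.norm(body_acc, axis=1)       # like tBodyAccMag

windows = sliding_windows(body_acc)                   # (n_windows, 128, 3)
f_body_acc = np.abs(np.fft.rfft(windows, axis=1))     # like fBodyAcc-XYZ

print(windows.shape, f_body_acc.shape)  # (6, 128, 3) (6, 65, 3)
```

Each window shares its second half with the first half of the next one, which is what the 50% overlap means in practice. An LSTM would consume the time-domain windows directly, one 128-step sequence per datapoint.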

For details about what RNNs are, please read the wonderful article by Andrej Karpathy titled "The Unreasonable Effectiveness of Recurrent Neural Networks". As explained in that article, an RNN takes a sequence of input vectors, processes them, and outputs other vectors. It can be roughly pictured as in the image below, where each rectangle has a vectorial depth and other hidden quirks. In our case, the "many to one" architecture is used: we accept a time series of feature vectors (one vector per time step) and convert it into a single probability vector at the output for classification. Note that a "one to one" architecture would be a standard feedforward neural network.
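To make the "many to one" idea concrete, here is a minimal NumPy sketch of a plain (non-LSTM) recurrent forward pass. It is not the Keras model used for training. The weights are random and untrained, and the function name `rnn_many_to_one`, the 6 input channels and the hidden size of 16 are assumptions chosen only for illustration.

```python
import numpy as np

LABELS = ["WALKING", "WALKING_UPSTAIRS", "WALKING_DOWNSTAIRS",
          "SITTING", "STANDING", "LAYING"]


def softmax(z):
    """Numerically stable softmax over a 1-D vector."""
    e = np.exp(z - np.max(z))
    return e / e.sum()


def rnn_many_to_one(x_seq, W_xh, W_hh, b_h, W_hy, b_y):
    """Read one feature vector per time step, emit one probability vector."""
    h = np.zeros(W_hh.shape[0])
    for x_t in x_seq:                      # many inputs ...
        h = np.tanh(W_xh @ x_t + W_hh @ h + b_h)
    return softmax(W_hy @ h + b_y)         # ... one output


rng = np.random.default_rng(0)
n_steps, n_features, n_hidden = 128, 6, 16   # assumed sizes, for illustration

x_seq = rng.normal(size=(n_steps, n_features))   # one window of fake readings
W_xh = rng.normal(scale=0.1, size=(n_hidden, n_features))
W_hh = rng.normal(scale=0.1, size=(n_hidden, n_hidden))
b_h = np.zeros(n_hidden)
W_hy = rng.normal(scale=0.1, size=(len(LABELS), n_hidden))
b_y = np.zeros(len(LABELS))

probs = rnn_many_to_one(x_seq, W_xh, W_hh, b_h, W_hy, b_y)
label_id = int(np.argmax(probs)) + 1     # dataset identifiers run from 1 to 6
print(label_id, LABELS[label_id - 1])
```

Only the hidden state after the last time step reaches the output layer, which is what distinguishes "many to one" from architectures that emit a vector at every step.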

An LSTM is an improved RNN. It is more complex but easier to train, because it largely avoids what is called the vanishing gradient problem. I recommend this article to learn more about LSTMs.
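As a rough illustration of what "more complex" means, the following NumPy sketch implements a single step of a standard LSTM cell and runs it over one 128-step window. It is a hand-written approximation for reading, not the Keras layer itself. The gate ordering inside the stacked weight matrices, the function name `lstm_step` and all sizes are assumptions made for this example.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def lstm_step(x_t, h_prev, c_prev, W, U, b):
    """One LSTM time step. Shapes: W (4H, D), U (4H, H), b (4H,)."""
    n = h_prev.size
    z = W @ x_t + U @ h_prev + b
    i = sigmoid(z[0 * n:1 * n])     # input gate
    f = sigmoid(z[1 * n:2 * n])     # forget gate
    o = sigmoid(z[2 * n:3 * n])     # output gate
    g = np.tanh(z[3 * n:4 * n])     # candidate cell values
    c = f * c_prev + i * g          # additive cell-state update
    h = o * np.tanh(c)              # hidden state exposed to the next layer
    return h, c


rng = np.random.default_rng(0)
n_steps, n_features, n_hidden = 128, 6, 16   # assumed sizes, for illustration

W = rng.normal(scale=0.1, size=(4 * n_hidden, n_features))
U = rng.normal(scale=0.1, size=(4 * n_hidden, n_hidden))
b = np.zeros(4 * n_hidden)

h = np.zeros(n_hidden)
c = np.zeros(n_hidden)
for x_t in rng.normal(size=(n_steps, n_features)):
    h, c = lstm_step(x_t, h, c, W, U, b)

print(h.shape, c.shape)
```

The cell state `c` is carried forward through an element-wise, additive update scaled by the forget gate, rather than being pushed through a weight matrix and a squashing nonlinearity at every step. That is the usual explanation for why gradients vanish less readily than in a plain RNN.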