3Surrey Institute for People-Centred Artificial Intelligence, UK

4KAUST, Saudi Arabia 5Meta, London

Under review in TPAMI

- (June, 2023) We released the MUPPET training and inference code for the ActivityNetv1.3 dataset.

- (March, 2023) MUPPET is available on arXiv.

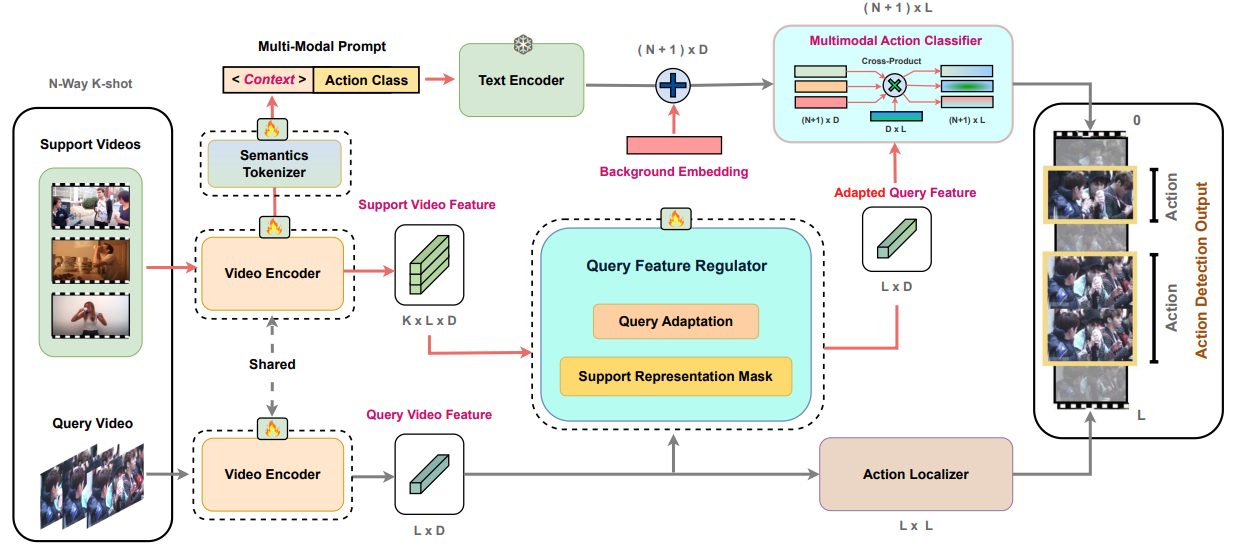

- First prompt-guided framework for Any-Shot (Few-Shot/Multi-Modal Few-Shot/Zero-Shot) Temporal Action Detection task.

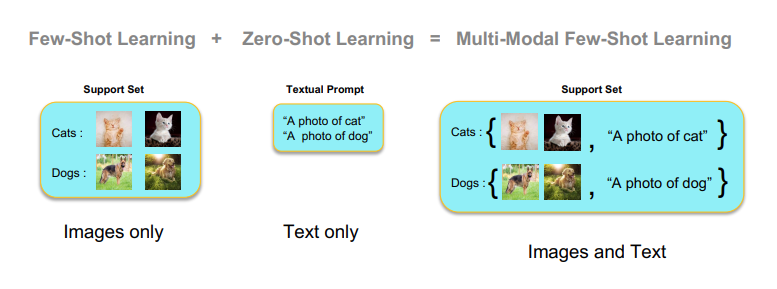

- Introduced a new setting of Multi-Modal Few-Shot Temporal Action Detection.

- Adapter-based approach for episodic adaptation of few-shot video examples.

- First approach to show that multi-modal few-shot can be implemented using textual inversion of video examples.

Few-shot (FS) and zero-shot (ZS) learning are two different approaches for scaling temporal action detection (TAD) to new classes. The former adapts a pretrained vision model to a new task represented by as few as a single video per class, whilst the latter requires no training examples by exploiting a semantic description of the new class. In this work, we introduce a new multi-modality few-shot (MMFS) TAD problem, which can be considered as a marriage of FS-TAD and ZS-TAD by leveraging few-shot support videos and new class names jointly. To tackle this problem, we further introduce a novel MUlti-modality PromPt mETa-learning (MUPPET) method. This is enabled by efficiently bridging pretrained vision and language models whilst maximally reusing already learned capacity. Concretely, we construct multi-modal prompts by mapping support videos into the textual token space of a vision-language model using a meta-learned adapter-equipped visual semantics tokenizer. To tackle large intra-class variation, we further design a query feature regulation scheme. Extensive experiments on ActivityNetv1.3 and THUMOS14 demonstrate that MUPPET outperforms state-of-the-art alternative methods, often by a large margin. We also show that MUPPET can be easily extended to tackle the few-shot object detection problem, where it again achieves state-of-the-art performance on the MS-COCO dataset.

- Python 3.7

- PyTorch == 1.9.0 (please make sure your PyTorch version is at least 1.8)

- 8 x NVIDIA 3090 GPU

- Hugging-Face Transformers

- Detectron

It is suggested to create a Conda environment and install the following requirements:

pip3 install -r requirements.txt

We have used the implementation of MaskFormer for Representation Masking.

git clone https://github.com/sauradip/MUPPET.git

cd MUPPET

git clone https://github.com/facebookresearch/MaskFormer

Follow the Installation instructions to install Detectron and other modules within this same environment if possible. After this step, copy the files from /MUPPET/extra_files into /MUPPET/MaskFormer/mask_former/modeling/transformer/.
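The copy step above can be scripted. The sketch below assumes the layout described in this section (MaskFormer cloned inside the MUPPET checkout, with an `extra_files` directory at the repository root). The function name `copy_extra_files` is illustrative and not part of the repository.

```python
import shutil
from pathlib import Path


def copy_extra_files(repo_root):
    """Copy every file in <repo_root>/extra_files into the MaskFormer transformer package.

    Returns the list of destination paths that were written.
    """
    root = Path(repo_root)
    src_dir = root / "extra_files"
    dst_dir = root / "MaskFormer" / "mask_former" / "modeling" / "transformer"

    if not src_dir.is_dir():
        raise FileNotFoundError(f"missing source directory: {src_dir}")
    if not dst_dir.is_dir():
        # Fail early: a missing target usually means MaskFormer was not cloned inside MUPPET.
        raise FileNotFoundError(f"missing target directory: {dst_dir}")

    copied = []
    for src in sorted(src_dir.iterdir()):
        if src.is_file():
            # copy2 preserves timestamps; files with the same name are overwritten.
            copied.append(Path(shutil.copy2(src, dst_dir / src.name)))
    return copied
```

From the directory that contains the checkout, `copy_extra_files("MUPPET")` would perform the copy and return the written paths.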

Download the video features and update the video paths and output paths in the config/anet.yaml file. For now, only the ActivityNetv1.3 dataset config is available; we plan to release the code for the THUMOS14 dataset soon.

| Dataset | Feature Backbone | Pre-Training | Link |
|---|---|---|---|
| ActivityNet | ViT-B/16-CLIP | CLIP | Google Drive |
| THUMOS | ViT-B/16-CLIP | CLIP | Google Drive |
| ActivityNet | I3D | Kinetics-400 | Google Drive |
| THUMOS | I3D | Kinetics-400 | Google Drive |
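Once the features are downloaded and the paths are entered in config/anet.yaml, it can help to confirm that every path actually exists before launching training. The following is a minimal sketch: it takes an already-loaded config (a nested dict, for example from `yaml.safe_load`) and makes no assumption about the key names used in the file. Treating any string value that contains a path separator as a path is a heuristic, and `find_missing_paths` is a hypothetical helper, not part of the repository.

```python
import os


def find_missing_paths(cfg, prefix=""):
    """Walk a nested config and return (key_path, value) pairs for path-like
    strings that do not exist on disk.

    Heuristic (an assumption of this sketch): a string counts as a path if it
    contains "/" or the platform's path separator.
    """
    missing = []
    if isinstance(cfg, dict):
        items = cfg.items()
    elif isinstance(cfg, (list, tuple)):
        items = enumerate(cfg)
    else:
        items = []

    for key, value in items:
        key_path = f"{prefix}[{key!r}]"
        if isinstance(value, (dict, list, tuple)):
            # Recurse into nested sections and sequences.
            missing.extend(find_missing_paths(value, key_path))
        elif isinstance(value, str) and ("/" in value or os.sep in value):
            if not os.path.exists(value):
                missing.append((key_path, value))
    return missing
```

Output directories that are only created at run time will also be reported, so an entry in the result is a prompt to double-check rather than a definite error.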

We followed the pre-processing of AFSD to pre-process the video data. For the AdaptFormer, we selected the Kinetics pre-trained weights available at this link. We use these as initial weights and learn only a few adapter parameters for the downstream few-shot episodes.

Currently we support the training splits provided by QAT, as there is no publicly available split apart from a random split.

Model training happens in two phases. Before starting, set up all weights and paths in the config/anet.yaml file.

To pre-train MUPPET on the base-class split, first set the parameters ['fewshot']['mode'] = 0, ['fewshot']['shot'] = 1/2/3/4/5, and ['fewshot']['nway'] = 1/2/3/4/5 in the config/anet.yaml file. Then run the following command.

python stale_pretrain_base.py

After training on the base split, the model is ready to transfer knowledge to novel classes. However, the decoder head still needs to be adapted using the few support (few-shot) samples, which requires a second training stage.

To adapt MUPPET on the novel-class split (meta-training), first set the parameters ['fewshot']['mode'] = 2, ['fewshot']['shot'] = 1/2/3/4/5, and ['fewshot']['nway'] = 1/2/3/4/5 in the config/anet.yaml file. Then run the following command.

python stale_inference_meta_pretrain.py

During this stage, the adapter is expected to learn the parameters specific to the training episodes.
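Both training stages are selected through the same three keys. The helper below is a minimal sketch of switching them programmatically. It assumes the config loads into a nested dict with a top-level `fewshot` entry, as the key paths in the steps above suggest, and that `shot` and `nway` take values from 1 to 5 as listed there. `set_fewshot` is a hypothetical name; the repository does not ship such a helper.

```python
import copy


def set_fewshot(cfg, mode, shot, nway):
    """Return a copy of cfg with the few-shot keys updated.

    mode: 0 selects base-class pre-training, 2 the novel-class stage (per this README).
    shot / nway: the README lists 1 through 5 for both.
    """
    if shot not in range(1, 6):
        raise ValueError(f"shot must be in 1..5, got {shot}")
    if nway not in range(1, 6):
        raise ValueError(f"nway must be in 1..5, got {nway}")

    new_cfg = copy.deepcopy(cfg)  # leave the caller's dict untouched
    fewshot = new_cfg.setdefault("fewshot", {})
    fewshot["mode"] = mode
    fewshot["shot"] = shot
    fewshot["nway"] = nway
    return new_cfg


# Example: 5-way 1-shot, first for base pre-training, then for the novel-class stage.
base_cfg = set_fewshot({"fewshot": {}}, mode=0, shot=1, nway=5)
novel_cfg = set_fewshot(base_cfg, mode=2, shot=1, nway=5)
```

To apply this to the real file, one option is to load it with `yaml.safe_load`, pass the dict through `set_fewshot`, and write it back with `yaml.safe_dump`. This assumes PyYAML is installed, and dumping will drop any comments in the file.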

Checkpoints are saved automatically to the output directory if it is properly set up in the config/anet.yaml file. For inference, set the parameters ['fewshot']['mode'] = 3, ['fewshot']['shot'] = 1/2/3/4/5, and ['fewshot']['nway'] = 1/2/3/4/5 in the config/anet.yaml file. Then run the following command.

python stale_inference_meta_pretrain.py

This is the same .py file as for meta-training, but with the few-shot mode changed. This step shows a lot of variation because random classes are picked as queries and intra-class variation causes mAP to vary, so a high number of few-shot episodes should be used. This command saves the video output and runs post-processing.
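The episode-to-episode variation mentioned above comes from how N-way K-shot episodes are drawn. The sketch below illustrates generic episodic sampling and score averaging. It is not the sampler used in this repository, and the names `sample_episode` and `summarize`, as well as the toy video IDs, are assumptions made for illustration.

```python
import random
import statistics


def sample_episode(videos_by_class, nway, shot, rng):
    """Draw one N-way K-shot episode.

    Picks `nway` classes at random, then `shot` support videos per class;
    one further video per class is held out as the query.
    """
    eligible = [c for c, vids in videos_by_class.items() if len(vids) > shot]
    if len(eligible) < nway:
        raise ValueError("not enough classes with shot + 1 videos")
    episode = {}
    for cls in rng.sample(eligible, nway):
        picked = rng.sample(videos_by_class[cls], shot + 1)
        episode[cls] = {"support": picked[:shot], "query": picked[shot]}
    return episode


def summarize(scores):
    """Mean and standard error of per-episode scores (e.g. mAP)."""
    mean = statistics.mean(scores)
    stderr = statistics.stdev(scores) / len(scores) ** 0.5 if len(scores) > 1 else 0.0
    return mean, stderr


# Toy data: 4 classes with 3 videos each (IDs are placeholders).
toy = {f"class_{i}": [f"v{i}_{j}" for j in range(3)] for i in range(4)}
rng = random.Random(0)  # fixed seed so the drawn episodes are reproducible
episodes = [sample_episode(toy, nway=2, shot=1, rng=rng) for _ in range(100)]
```

Because the standard error shrinks roughly with the square root of the number of episodes, averaging over many episodes gives a more stable estimate than any single one.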

To evaluate our MUPPET model, run the following command.

python eval.py

- Fix the learnable-prompt issue in Hugging-Face Transformers

- Fix the NaN bug during Model-Training

- Support for THUMOS14 dataset

- Enable multi-gpu training

Our source code is based on the implementations of STALE, DenseCLIP, MaskFormer and CoOp. We thank the authors for open-sourcing their code.

If you find this project useful for your research, please use the following BibTeX entry.

@article{nag2022multi,

title={Multi-Modal Few-Shot Temporal Action Detection via Vision-Language Meta-Adaptation},

author={Nag, Sauradip and Xu, Mengmeng and Zhu, Xiatian and Perez-Rua, Juan-Manuel and Ghanem, Bernard and Song, Yi-Zhe and Xiang, Tao},

journal={arXiv preprint arXiv:2211.14905},

year={2022}

}

@article{nag2021few,

title={Few-shot temporal action localization with query adaptive transformer},

author={Nag, Sauradip and Zhu, Xiatian and Xiang, Tao},

journal={arXiv preprint arXiv:2110.10552},

year={2021}

}

@inproceedings{nag2022zero,

title={Zero-shot temporal action detection via vision-language prompting},

author={Nag, Sauradip and Zhu, Xiatian and Song, Yi-Zhe and Xiang, Tao},

booktitle={Computer Vision--ECCV 2022: 17th European Conference, Tel Aviv, Israel, October 23--27, 2022, Proceedings, Part III},

pages={681--697},

year={2022},

organization={Springer}

}