This is a set of tutorials for the CMS Machine Learning Hands-on Advanced Tutorial Session (HATS). They are intended to show you how to build machine learning models in Python (Keras/TensorFlow) and use them in your ROOT-based analyses. We will build event-level classifiers for differentiating VBF Higgs boson events from standard model background events, both in the four-muon final state, and jet-level classifiers for differentiating boosted W boson jets from QCD jets.

- 0-setup-libraries.ipynb: setting up libraries using CMSSW
- 1-datasets-uproot.ipynb: reading/writing datasets from ROOT files with uproot
- 2-plotting.ipynb: plotting with pyROOT and matplotlib
- 3-dense.ipynb: building, training, and evaluating a fully connected (dense) neural network in Keras
- 3.1-dense-pytorch.ipynb: the same as 3-dense.ipynb, but using PyTorch rather than Keras/TensorFlow
- 3.2-dense-bayesian-optimization.ipynb: the same as 3-dense.ipynb, but using Bayesian optimization of the model's hyperparameters
- 4-preprocessing.ipynb: preprocessing CMS open data to build jet images (optional)
- 5-conv2d.ipynb: building, training, and evaluating a 2D convolutional neural network in Keras
- 7-vae-mnist.ipynb: building, training, and evaluating a variational autoencoder in Keras
- 8-gan-mnist.ipynb: building, training, and evaluating a generative adversarial network in Keras

We will be using the Vanderbilt JupyterHub. Point your browser to:

https://jupyter.accre.vanderbilt.edu/

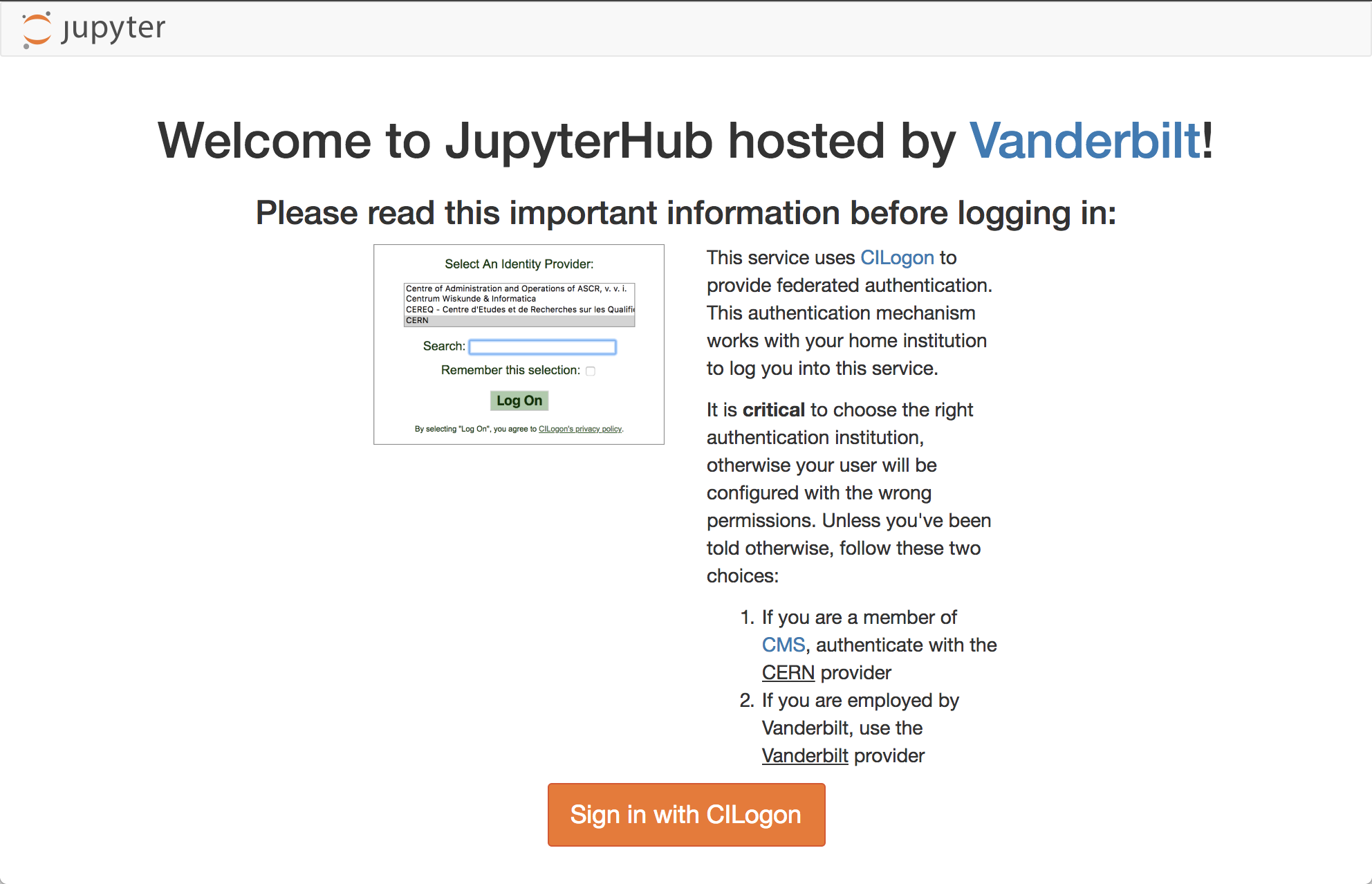

If this is the first time using this JupyterHub, you should see:

Click the "Sign in with Jupyter ACCRE" button. On the following page, select CERN as your identity provider and click the "Log On" button. Then enter your CERN credentials or use your CERN grid certificate to authenticate. Click "Spawn" to start a "Default ACCRE Image v5" image with "8 Cores, 8 GB RAM, 1 day timeout."

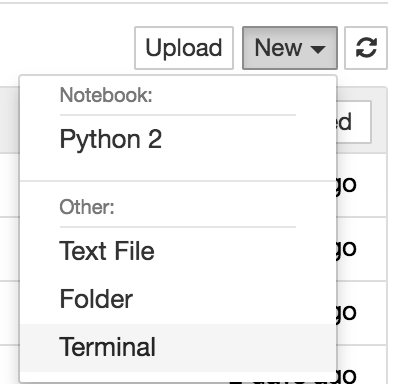

Now you should see the JupyterHub home directory. Click on "New" then "Terminal" in the top right to launch a new terminal.

To download the tutorials, type:

git clone https://github.com/FNALLPC/machine-learning-hats machine-learning-hats-2021

Now, in your directory tab, there should be a new directory called machine-learning-hats-2021. All of the tutorials and exercises are in there. Start by clicking on 0-setup-libraries.ipynb and running it. Please note that the first cell may take up to 20 minutes to run.

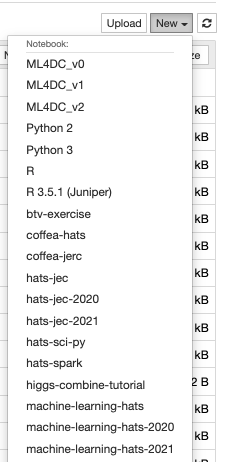

Now close this notebook by clicking on File->Close and Halt. Click the "New" button again. You should see a new kernel called machine-learning-hats-2021. If you do not, refresh the page and look again.
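To confirm that the selected kernel actually provides the libraries used in the tutorials, you could run a cell like the following minimal sketch. The module names are an assumption based on the tools named in the tutorial list (uproot, TensorFlow, PyTorch, matplotlib, ROOT); adjust the tuple to match what the notebooks you plan to run actually import.

```python
import importlib.util

# Assumed top-level module names for the libraries mentioned in the tutorial
# list; edit this tuple to match the imports in the notebooks you will run.
EXPECTED_MODULES = ("uproot", "tensorflow", "torch", "matplotlib", "ROOT")


def check_modules(names=EXPECTED_MODULES):
    """Return a dict mapping each module name to True if it can be imported."""
    # find_spec locates a top-level module without executing it,
    # so the check is quick and has no side effects.
    return {name: importlib.util.find_spec(name) is not None for name in names}


if __name__ == "__main__":
    for name, found in check_modules().items():
        print(f"{name:12s} {'found' if found else 'MISSING'}")
```

If a module shows up as MISSING, the wrong kernel is probably selected, or 0-setup-libraries.ipynb did not finish successfully.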

- Please remember to close and halt your notebooks when you're done with them. While it's possible to have multiple notebooks open, this can cause the server to run out of memory and make kernels unstable. The best practice is to close the notebooks you aren't using. The notebook icon is green while it is in use and gray when it is shut down.

- Remember to shut down your server when you are completely finished: go to Control Panel --> Stop My Server.

If the tutorials are updated after you have cloned them, open a terminal, just as you did when you first cloned the repository. Move (cd) to the repository directory if you are not already there, then run git pull origin master. This pulls the latest changes from GitHub. If you have already edited a notebook that the update also changes, your edits will conflict with the update, so you may want to stash those changes or copy your modified notebook first.

To revert the changes made to all of your notebooks you can use the command:

git checkout .

If you would like to save your changes first, you can copy your modified notebook to a new name and then run the checkout command.

Alternatively, to stash your uncommitted changes, use the command:

git stash

You can then return those changes with the command:

git stash pop

Be aware that git stash pop may produce merge conflicts that need to be resolved before the notebook can be run again.
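If you prefer the copy-first approach described above, the following sketch lists the notebooks that git reports as modified and copies each one to a new name before you run git checkout . or git pull. It assumes git is on your PATH and that repo_dir is the repository root; the "-backup" suffix and the helper names are hypothetical choices for illustration.

```python
import shutil
import subprocess
from pathlib import Path


def modified_notebooks(porcelain_text):
    """Parse `git status --porcelain` output and return modified .ipynb paths."""
    paths = []
    for line in porcelain_text.splitlines():
        # Each line is "XY PATH": two status characters, a space, then the path.
        # Git quotes paths containing spaces or unusual characters; this sketch
        # does not unquote them (the tutorial notebook names contain none).
        status, path = line[:2], line[3:]
        # "M" in either status column means modified (staged or unstaged).
        if "M" in status and path.endswith(".ipynb"):
            paths.append(path)
    return paths


def backup_notebook(path, suffix="-backup"):
    """Copy e.g. 3-dense.ipynb to 3-dense-backup.ipynb and return the new path.

    An existing file with the backup name is overwritten.
    """
    src = Path(path)
    dst = src.with_name(src.stem + suffix + src.suffix)
    shutil.copy2(src, dst)
    return dst


def backup_modified_notebooks(repo_dir="."):
    """Back up every modified notebook in repo_dir (assumed to be the repo root)."""
    out = subprocess.run(
        ["git", "status", "--porcelain"],
        cwd=repo_dir, capture_output=True, text=True, check=True,
    ).stdout
    # Porcelain paths are relative to the repository root.
    root = Path(repo_dir)
    return [backup_notebook(root / nb) for nb in modified_notebooks(out)]
```

Calling backup_modified_notebooks() from the repository directory returns the paths of the copies it made. After that, git checkout . or git pull origin master can overwrite the originals without losing your edits.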

Running the tutorials on the FNAL LPC cluster instead of the JupyterHub is also possible, but with a little less user support. Open your ssh connection with port forwarding using the following command:

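ssh -L localhost:8888:localhost:8888 <username>@cmslpc-sl7.fnal.gov

Replace <username> with your LPC username. The -L option forwards port 8888 on your local machine to port 8888 on the LPC node, which is where Jupyter will listen. Then cd to the directory of your choice where you will clone the repository as before: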

git clone https://github.com/FNALLPC/machine-learning-hats

In order to open Jupyter with all the appropriate libraries, you will need either a conda environment with Jupyter installed in your nobackup area (where you have more space) or a CMSSW environment set up. To install and activate a conda environment, run the following from inside the cloned repository directory (conda env create reads environment.yml from there):

wget https://repo.anaconda.com/miniconda/Miniconda3-latest-Linux-x86_64.sh -O $HOME/nobackup/miniconda3.sh

bash $HOME/nobackup/miniconda3.sh -b -f -u -p $HOME/nobackup/miniconda3

source $HOME/nobackup/miniconda3/etc/profile.d/conda.sh

conda env create -f environment.yml --name machine-learning-hats-2021

conda activate machine-learning-hats-2021

Once the conda environment is active, start Jupyter (this is the Jupyter that was installed as part of the conda environment):

jupyter notebook --no-browser --port=8888 --ip 127.0.0.1

If everything worked, the last line of the output should be a URL of the form:

http://127.0.0.1:8888/?token=<long string of numbers and letters>

Copy this URL into your browser. You can now work through the rest of the exercises as normal, except that you will have to change the kernel to the default python3 one (it has all the necessary libraries because you are using the Jupyter that is part of your conda installation).
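The ssh tunnel only reaches the port you forwarded, so the port in the Jupyter URL must match it. If port 8888 is already taken on the shared login node, Jupyter falls back to another port, which the tunnel will not reach. The sketch below pulls the first token URL out of captured Jupyter output and checks its port. The regular expression is an assumption based on the URL form shown above, and it also accepts a path such as /tree before "?token=" because some Jupyter versions print one.

```python
import re

# Matches e.g. http://127.0.0.1:8888/?token=abc123; an optional path such as
# /tree or /lab before "?token=" is also accepted. Only 127.0.0.1 URLs match,
# because the command above starts Jupyter with --ip 127.0.0.1.
TOKEN_URL = re.compile(r"http://127\.0\.0\.1:(\d+)/[^\s?]*\?token=([0-9A-Za-z]+)")


def find_token_url(log_text, forwarded_port=8888):
    """Return (url, port, token) for the first token URL in log_text.

    forwarded_port is the destination port in the ssh -L spec (8888 above).
    Raises ValueError if no URL is found or if its port does not match.
    """
    match = TOKEN_URL.search(log_text)
    if match is None:
        raise ValueError("no token URL found in the Jupyter output")
    port, token = int(match.group(1)), match.group(2)
    if port != forwarded_port:
        # Jupyter moved to a different port, so the tunnel will not reach it.
        raise ValueError(
            f"Jupyter is on port {port}, but port {forwarded_port} was forwarded"
        )
    return match.group(0), port, token
```

If the port does not match, you can stop Jupyter and restart it with a different --port value, then reconnect with the same number in both places of the ssh -L option.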

In the future, whenever you want to use Jupyter to run these exercises, first reactivate the environment with:

source $HOME/nobackup/miniconda3/etc/profile.d/conda.sh; conda activate machine-learning-hats-2021

The Indico page is: https://indico.cern.ch/event/1042663/

The Mattermost for live support is: https://mattermost.web.cern.ch/cms-exp/channels/hatslpc-2021