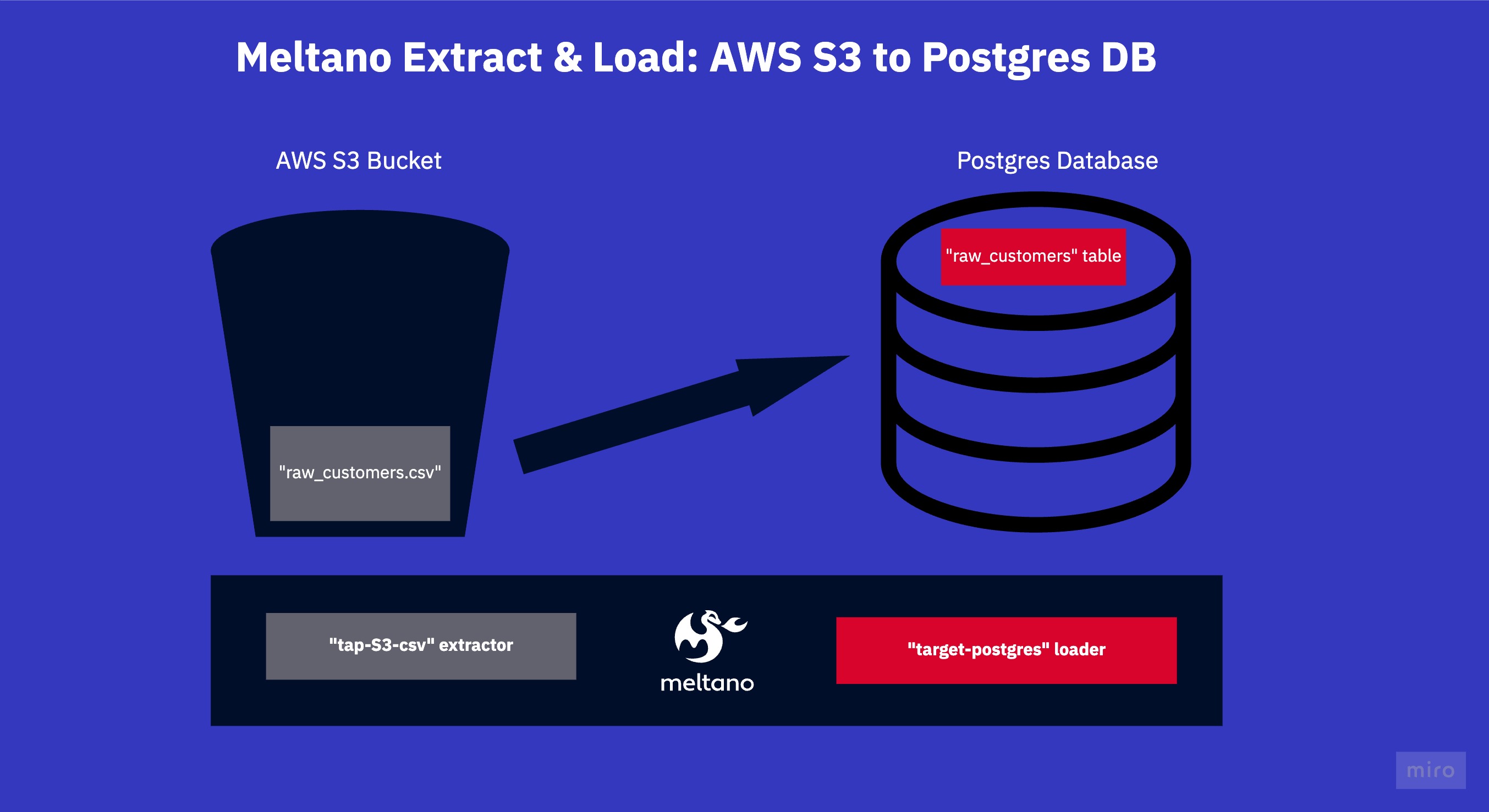

This project extends the jaffle shop sandbox project created by dbt Labs for the data build tool dbt. This meltano project sources one CSV file from AWS S3 and loads it into one table inside a Postgres database.

What this repo is:

A self-contained sandbox meltano project. Useful for testing out scripts, yaml configurations and understanding some of the core meltano concepts.

What this repo is not:

This repo is not a tutorial or an extensive walk-through. It contains some bad practices. To make it self-contained, it contains an AWS S3 mock, as well as a dockerized Postgres database.

We're focusing on simplicity here!

This repo contains an AWS S3 mock with one CSV file inside: the raw customer data.

The meltano project extracts this CSV using the tap-s3-csv extractor and loads it into the PostgreSQL database using the loader target-postgres.
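Meltano extractors and loaders are Singer taps and targets that exchange JSON messages, so the extract-and-load step described above can be sketched in plain Python. This is only an illustration, not the code of tap-s3-csv or target-postgres: the bucket is a hypothetical in-memory dict with made-up sample rows, the target writes to a dict instead of Postgres, and treating `search_pattern` as a regular expression searched in the object key is an assumption about the extractor. `TABLE_SPEC` mirrors the shape of the `tables` entry in meltano.yml.

```python
import csv
import io
import json
import re

# Hypothetical bucket contents: object key -> file text (sample rows are made up).
BUCKET = {
    "raw_customers.csv": "id,first_name,last_name\n1,Ada,L.\n2,Grace,H.\n",
    "notes.txt": "not a table\n",
}

# Mirrors the shape of one entry under `tables:` in meltano.yml.
TABLE_SPEC = {
    "search_prefix": "",
    "search_pattern": "raw_customers.csv",
    "table_name": "raw_customers",
    "key_properties": ["id"],
    "delimiter": ",",
}


def tap(bucket, spec):
    """Yield Singer-style JSON messages for every object that matches the spec."""
    # Assumption: the pattern is a regular expression searched in the object key.
    matcher = re.compile(spec["search_pattern"])
    for key, text in bucket.items():
        if not (key.startswith(spec["search_prefix"]) and matcher.search(key)):
            continue
        reader = csv.DictReader(io.StringIO(text), delimiter=spec["delimiter"])
        # One SCHEMA message describes the stream before any records are sent.
        yield json.dumps({
            "type": "SCHEMA",
            "stream": spec["table_name"],
            "schema": {
                "type": "object",
                "properties": {name: {"type": ["null", "string"]} for name in reader.fieldnames},
            },
            "key_properties": spec["key_properties"],
        })
        # One RECORD message per CSV row.
        for row in reader:
            yield json.dumps({"type": "RECORD", "stream": spec["table_name"], "record": row})


def target(lines):
    """Upsert RECORD messages into in-memory tables, keyed by key_properties."""
    tables, keys = {}, {}
    for line in lines:
        message = json.loads(line)
        stream = message["stream"]
        if message["type"] == "SCHEMA":
            keys[stream] = message["key_properties"]
            tables.setdefault(stream, {})
        elif message["type"] == "RECORD":
            primary_key = tuple(message["record"][name] for name in keys[stream])
            tables[stream][primary_key] = message["record"]
    return tables


if __name__ == "__main__":
    loaded = target(tap(BUCKET, TABLE_SPEC))
    print(len(loaded["raw_customers"]), "rows loaded")
```

Because records are keyed by `key_properties`, feeding the same file through twice leaves one row per `id` rather than duplicates.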

Using this repository is easy because everything runs inside Docker via batect, a lightweight wrapper around Docker.

We use batect because it lets you run this project without even installing meltano. Batect requires Java and Docker to be installed.

The repository has a few configured "batect tasks", which essentially all spin up docker or docker-compose for you and run commands inside these containers.

Run `./batect --list-tasks` to see the list of commands.

`./batect launch_mock`, for instance, launches two docker containers: one with a mock AWS S3 endpoint and one with a Postgres database.

Batect automatically tears down & cleans up after the task finishes.
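To confirm that both mock containers are up while `./batect launch_mock` is running, a small reachability check can help. The sketch below is not part of the repository. It assumes that both ports are published on the host: 5005 for the S3 mock (taken from the `aws_endpoint_url` in meltano.yml) and 5432 for Postgres (taken from the connection data at the end of this README).

```python
import socket

# Assumption: both mocks publish these ports on the host machine.
ENDPOINTS = {
    "S3 mock": ("localhost", 5005),
    "Postgres": ("localhost", 5432),
}


def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds within the timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and name resolution failures.
        return False


def report(endpoints=ENDPOINTS):
    """Map each endpoint name to whether its port accepts connections."""
    return {name: port_open(host, port) for name, (host, port) in endpoints.items()}


if __name__ == "__main__":
    for name, is_up in report().items():
        print(f"{name}: {'up' if is_up else 'not reachable'}")
```

An open port only shows that something is listening there. It does not prove that the bucket or the database is ready to use.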

1. Launch the mock endpoints in a separate terminal window: `./batect launch_mock`.

2. Launch meltano with batect via `./batect melt`.

   2.1. Alternatively, you can use your local meltano, installed with `pip install meltano`. (The mocks will still work.)

3. Run `meltano install` to install the two plugins, the S3 extractor and the PostgreSQL loader, as specified in the meltano.yml.

Here is an extract from the meltano.yml:

    ...
    plugins:
      extractors:
      - name: tap-s3-csv
        variant: transferwise
        pip_url: pipelinewise-tap-s3-csv
        config:
          bucket: test
          tables:
          - search_prefix: ""
            search_pattern: "raw_customers.csv"
            table_name: "raw_customers"
            key_properties: ["id"]
            delimiter: ","
          start_date: 2000-01-01T00:00:00Z
          aws_endpoint_url: http://host.docker.internal:5005
          aws_access_key_id: s
          aws_secret_access_key: s
          aws_default_region: us-east-1
      loaders:
      - name: target-postgres
        variant: transferwise
        pip_url: pipelinewise-target-postgres
        config:
          host: host.docker.internal
          port: 5432
          user: admin
          password: password
          dbname: demo

4. Finally, run `meltano run tap-s3-csv target-postgres` to execute the extraction and loading.

5. Check inside the local database afterwards to see that your data has arrived, using the connection data below.

       ...
       host: localhost
       port: 5432
       user: admin
       password: password
       dbname: demo
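The final check can also be scripted. The sketch below is one possible way to do it, not something shipped with this repo. It assumes `psycopg2` is installed separately, and that the loader keeps the stream name `raw_customers` as the table name. Since this README does not show which schema the loader writes to, the query looks in all schemas via `information_schema.tables`.

```python
from urllib.parse import quote

# Connection values copied from the connection data above.
CONNECTION = {
    "host": "localhost",
    "port": 5432,
    "user": "admin",
    "password": "password",
    "dbname": "demo",
}

# Searches every schema, because the target schema is not shown in this README.
FIND_TABLE_SQL = (
    "SELECT table_schema, table_name "
    "FROM information_schema.tables "
    "WHERE table_name = %s"
)


def postgres_uri(conn):
    """Build a libpq-style connection URI, percent-encoding the credentials."""
    return "postgresql://{user}:{password}@{host}:{port}/{dbname}".format(
        user=quote(conn["user"], safe=""),
        password=quote(conn["password"], safe=""),
        host=conn["host"],
        port=conn["port"],
        dbname=quote(conn["dbname"], safe=""),
    )


def find_loaded_table(conn=CONNECTION, table_name="raw_customers"):
    """Return (schema, table) pairs; an empty list means the table was not found."""
    import psycopg2  # assumption: installed separately, e.g. `pip install psycopg2-binary`

    connection = psycopg2.connect(postgres_uri(conn))
    try:
        with connection.cursor() as cursor:
            cursor.execute(FIND_TABLE_SQL, (table_name,))
            return cursor.fetchall()
    finally:
        connection.close()
```

With the mocks running and the load finished, `print(find_loaded_table())` should list the schema that holds the table. An empty list suggests the load did not reach this database.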