ExOpenAI is an (unofficial) Elixir SDK for interacting with the OpenAI APIs.

This SDK is fully auto-generated using metaprogramming and should always reflect the latest state of the OpenAI API.

Note: Because the SDK is auto-generated, you may run into parts that don't work yet. Please report anything that seems off.

- Elixir SDK for OpenAI APIs

- Up-to-date and complete thanks to metaprogramming and code-generation

- Implements everything the OpenAI API has to offer

- Strictly follows the official OpenAI APIs for argument/function naming

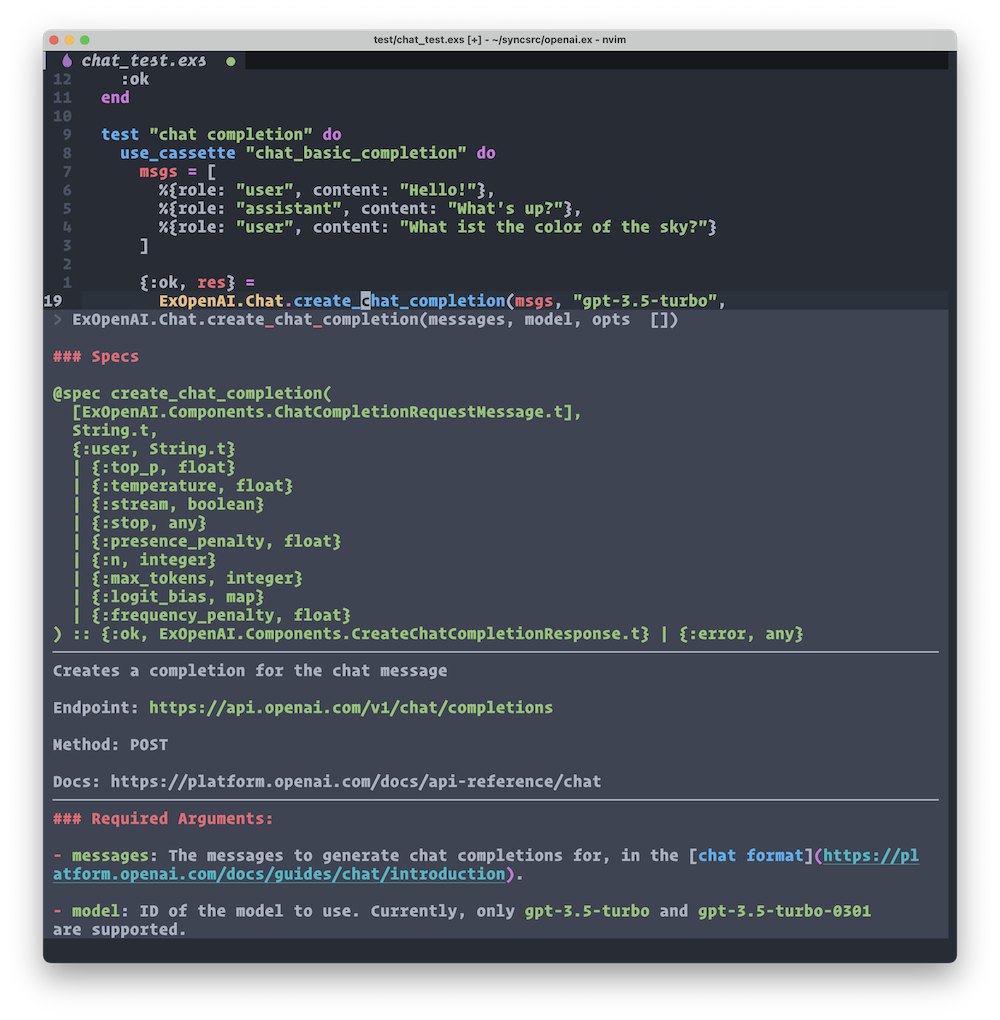

- Required arguments become function parameters and optional arguments go into a keyword list, in true Elixir fashion

- Auto-generated embedded function documentation

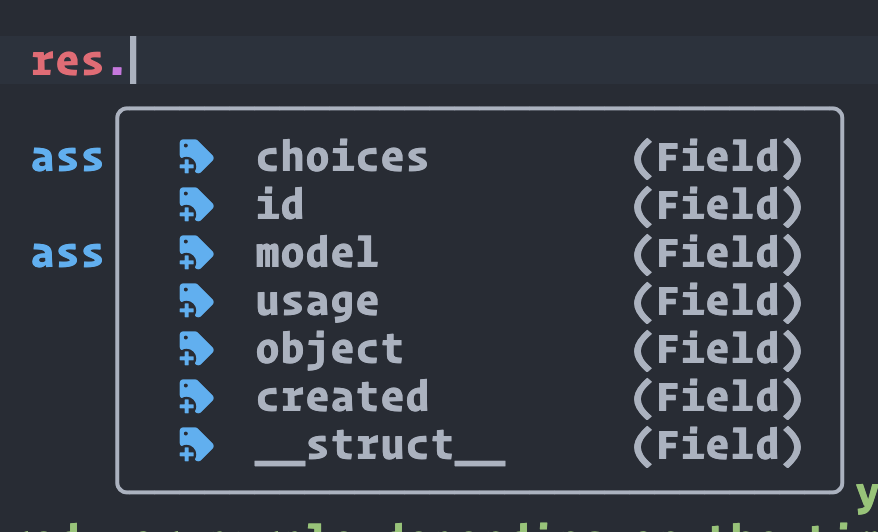

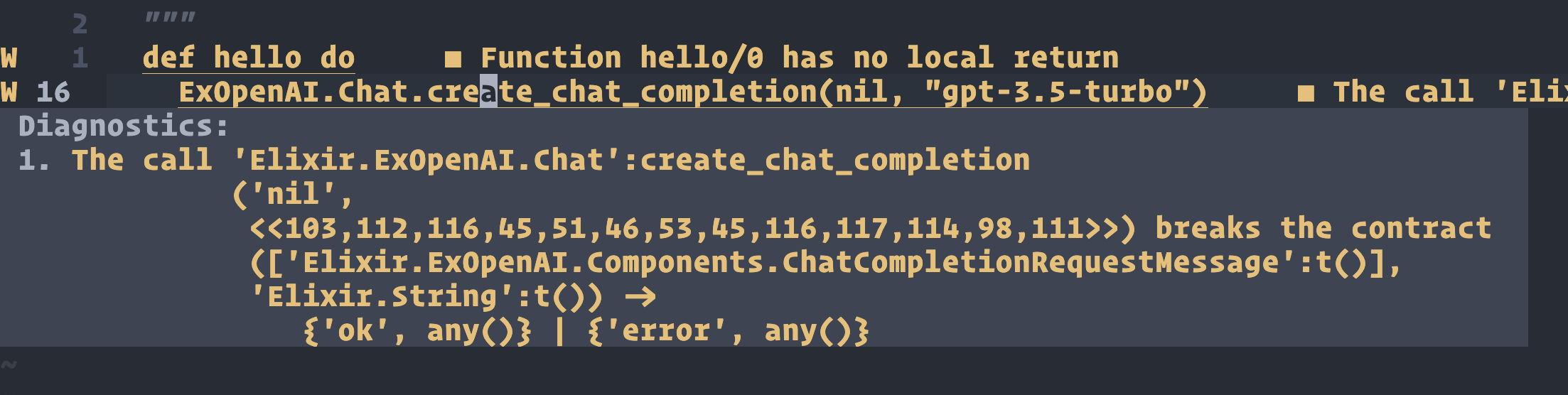

- Auto-generated `@spec` definitions for Dialyzer, enabling strict parameter typing

- Support for streaming responses with SSE
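To make the calling convention from the list above concrete, here is a minimal sketch in plain Elixir. `HypotheticalClient.create_completion/2` is a made-up stand-in that only builds a request map; it is not the code ExOpenAI generates.

```elixir
defmodule HypotheticalClient do
  # Illustration only (not ExOpenAI's generated code): the required `model`
  # is a positional argument, optional parameters arrive in the `opts` keyword list.
  @spec create_completion(String.t(), keyword()) :: map()
  def create_completion(model, opts \\ []) when is_binary(model) and is_list(opts) do
    # Optional parameters end up in the map only when the caller passes them.
    Map.merge(%{model: model}, Map.new(opts))
  end
end
```

Calling `HypotheticalClient.create_completion("text-davinci-003", prompt: "The sky is")` yields `%{model: "text-davinci-003", prompt: "The sky is"}`, while omitting the keyword list leaves only the model.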

Add `:ex_openai` as a dependency in your `mix.exs` file:

```elixir
def deps do
  [
    {:ex_openai, "~> 1.2.0"}
  ]
end
```

Supported endpoints:

- /answers

- /chat/completions

- /classifications

- /completions

- /edits

- /embeddings

- /engines/{engine_id}

- /engines

- /files

- /files/{file_id}/content

- /fine-tunes/{fine_tune_id}/events

- /fine-tunes/{fine_tune_id}/cancel

- /fine-tunes/{fine_tune_id}

- /fine-tunes

- /images/generations

- /images/variations

- /images/edits

- /models

- /moderations

- /engines/{engine_id}/search

- /audio/translations

- /audio/transcriptions

Not yet supported:

- Typespecs for `oneOf` input types, currently represented as `any()`

- Streams don't have complete typespecs yet

Configuration:

```elixir
import Config

config :ex_openai,
  # find it at https://platform.openai.com/account/api-keys
  api_key: System.get_env("OPENAI_API_KEY"),
  # find it at https://platform.openai.com/account/api-keys
  organization_key: System.get_env("OPENAI_ORGANIZATION_KEY"),
  # optional, passed to HTTPoison.Request options
  # (https://hexdocs.pm/httpoison/HTTPoison.Request.html)
  http_options: [recv_timeout: 50_000]
```

You can also pass the API key and organization key per call, through the `openai_api_key` and `openai_organization_key` options:

```elixir
ExOpenAI.Models.list_models(openai_api_key: "abc", openai_organization_key: "def")
```

Make sure to check out the docs: https://hexdocs.pm/ex_openai
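Since per-call keys and configured keys can coexist, here is a minimal sketch of the lookup order the per-call options suggest: the `openai_api_key` / `openai_organization_key` option first, then the `:ex_openai` application config. `MyApp.OpenAIKeys.resolve/1` is hypothetical, and this precedence is an assumption rather than ExOpenAI's documented internals.

```elixir
defmodule MyApp.OpenAIKeys do
  # Hypothetical helper, not part of ExOpenAI: a per-call option wins,
  # otherwise fall back to the :ex_openai application config.
  @spec resolve(keyword()) :: {String.t() | nil, String.t() | nil}
  def resolve(opts \\ []) do
    api_key =
      Keyword.get_lazy(opts, :openai_api_key, fn ->
        Application.get_env(:ex_openai, :api_key)
      end)

    organization_key =
      Keyword.get_lazy(opts, :openai_organization_key, fn ->
        Application.get_env(:ex_openai, :organization_key)
      end)

    {api_key, organization_key}
  end
end
```

For example, `MyApp.OpenAIKeys.resolve(openai_api_key: "abc")` returns `"abc"` paired with whatever organization key is configured.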

```elixir
ExOpenAI.Models.list_models
{:ok,
 %{
   data: [
     %{
       "created": 1649358449,
       "id": "babbage",
       "object": "model",
       "owned_by": "openai",
       "parent": nil,
       "permission": [
         %{
           "allow_create_engine": false,
           "allow_fine_tuning": false,
           "allow_logprobs": true,
           "allow_sampling": true,
           "allow_search_indices": false,
           "allow_view": true,
           "created": 1669085501,
           "group": nil,
           "id": "modelperm-49FUp5v084tBB49tC4z8LPH5",
           "is_blocking": false,
           "object": "model_permission",
           "organization": "*"
         }
       ],
       "root": "babbage"
     },
     ...
```

Required parameters are converted into function arguments, optional parameters into the `opts` keyword list:

```elixir
ExOpenAI.Completions.create_completion "text-davinci-003", prompt: "The sky is"
{:ok,
 %{
   choices: [
     %{
       "finish_reason": "length",
       "index": 0,
       "logprobs": nil,
       "text": " blue\n\nThe sky is a light blue hue that may have a few white"
     }
   ],
   created: 1677929239,
   id: "cmpl-6qKKllDPsQRtyJ5oHTbkQVS9w7iKM",
   model: "text-davinci-003",
   object: "text_completion",
   usage: %{
     "completion_tokens": 16,
     "prompt_tokens": 3,
     "total_tokens": 19
   }
 }}
```

For chat completions, pass the list of messages and the model:

```elixir
msgs = [
  %{role: "user", content: "Hello!"},
  %{role: "assistant", content: "What's up?"},
  %{role: "user", content: "What is the color of the sky?"}
]

{:ok, res} =
  ExOpenAI.Chat.create_chat_completion(msgs, "gpt-3.5-turbo",
    # token ID => bias (-100 to 100); -100 effectively bans the token
    logit_bias: %{
      "8043" => -100
    }
  )
```

Load your file into memory, then pass it into the file parameter:

```elixir
duck = File.read!("#{__DIR__}/testdata/duck.png")
{:ok, res} = ExOpenAI.Images.create_image_variation(duck)
IO.inspect(res.data)
```

Streaming data is still experimental, YMMV!

Create a new client for receiving the streamed data with `use ExOpenAI.StreamingClient`. You'll have to implement the `@behaviour ExOpenAI.StreamingClient` callbacks:

```elixir
defmodule MyStreamingClient do
  use ExOpenAI.StreamingClient

  # callback on data
  @impl true
  def handle_data(data, state) do
    IO.puts("got data: #{inspect(data)}")
    {:noreply, state}
  end

  # callback on error
  @impl true
  def handle_error(e, state) do
    IO.puts("got error: #{inspect(e)}")
    {:noreply, state}
  end

  # callback on finish
  @impl true
  def handle_finish(state) do
    IO.puts("finished!!")
    {:noreply, state}
  end
end
```

Then use it in requests that support streaming by setting `stream: true` and specifying `stream_to: pid`:

```elixir
{:ok, pid} = MyStreamingClient.start_link(nil)

ExOpenAI.Completions.create_completion("text-davinci-003",
  prompt: "hello world",
  stream: true,
  stream_to: pid
)
```

Your client will now receive the streamed chunks.
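A streaming client usually wants to assemble the chunks into the full text rather than just print them. Here is a minimal sketch of that state handling, assuming `data` is a decoded chunk with the same shape as the non-streamed completion response shown earlier; `MyApp.StreamBuffer` is hypothetical and not part of ExOpenAI.

```elixir
defmodule MyApp.StreamBuffer do
  # Hypothetical helper for a streaming client's state, not part of ExOpenAI.
  # Assumes each completion chunk looks like the non-streamed response:
  # %{choices: [%{text: "..."} | _]}.

  @spec new() :: [String.t()]
  def new, do: []

  @spec push([String.t()], map()) :: [String.t()]
  def push(buffer, %{choices: [%{text: text} | _]}) when is_binary(text), do: [text | buffer]
  # Chunks of any other shape are ignored.
  def push(buffer, _chunk), do: buffer

  # Chunks are prepended (cheap for lists), so reverse once before joining.
  @spec to_text([String.t()]) :: String.t()
  def to_text(buffer), do: buffer |> Enum.reverse() |> Enum.join()
end
```

Inside `MyStreamingClient`, `handle_data/2` could then return `{:noreply, MyApp.StreamBuffer.push(state, data)}` and `handle_finish/1` could call `MyApp.StreamBuffer.to_text(state)`. This assumes the argument given to `start_link` becomes the initial state, so you would pass `MyApp.StreamBuffer.new()` instead of `nil`.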

Caveats:

- Type information for streamed data is not correct yet. It's fine for `Completions.create_completion`, but `Chat.create_chat_completion` requests use a different struct with a `delta` field.

- Return types when setting `stream: true` are incorrect, so Dialyzer may complain.
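Because chat chunks carry their text in a `delta` field instead of `text`, a client that streams from both endpoints has to look in two places. A minimal sketch, assuming the chunk shapes noted in the code comments (the typespecs don't confirm them, per the caveats above); `MyApp.ChunkText` is hypothetical.

```elixir
defmodule MyApp.ChunkText do
  # Hypothetical helper, not part of ExOpenAI. Assumed chunk shapes, with atom
  # keys as in the responses shown earlier:
  #   completions: %{choices: [%{text: "..."} | _]}
  #   chat:        %{choices: [%{delta: %{content: "..."}} | _]}
  @spec extract(map()) :: String.t()
  def extract(%{choices: [%{text: text} | _]}) when is_binary(text), do: text

  def extract(%{choices: [%{delta: %{content: content}} | _]}) when is_binary(content),
    do: content

  # A chunk without text, e.g. a chat delta carrying only a role, yields "".
  def extract(_chunk), do: ""
end
```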

To update the SDK once OpenAI changes something, run `mix update_openai_docs` and commit the new `docs.yaml` file.

Some projects built using this SDK:

- Elixir ChatGPT

- https://fixmyjp.d.sh

- https://github.com/dvcrn/gpt-slack-bot

- https://david.coffee/mini-chatgpt-in-elixir-and-genserver/

The package is available as open source under the terms of the MIT License.

Attribution:

- Inspired by https://github.com/BlakeWilliams/Elixir-Slack

- Client/config handling from https://github.com/mgallo/openai.ex