Imitation learning algorithms (with PPO [1]) are selected with the `--imitation` flag:

python main.py --imitation [AIRL|BC|DRIL|FAIRL|GAIL|GMMIL|PUGAIL|RED]
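To try each algorithm in turn, the command above can be generated by a small driver script. This is a minimal sketch, assuming `main.py` is in the current working directory and that `--imitation` is the only flag needed; `ALGORITHMS` and `build_command` are hypothetical names and not part of the repository.

```python
import shlex
import sys

# Algorithm names accepted by --imitation, copied from the command above.
ALGORITHMS = ["AIRL", "BC", "DRIL", "FAIRL", "GAIL", "GMMIL", "PUGAIL", "RED"]


def build_command(algorithm, python=sys.executable):
    """Return the argument list for one training run (hypothetical helper)."""
    if algorithm not in ALGORITHMS:
        raise ValueError(f"unknown algorithm: {algorithm!r}")
    return [python, "main.py", "--imitation", algorithm]


if __name__ == "__main__":
    for name in ALGORITHMS:
        # Print rather than execute; pass the list to subprocess.run to launch it.
        print(shlex.join(build_command(name)))
```

Printing the commands first makes it easy to check them before launching anything long-running.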

Options include:

- State-only imitation learning: `--state-only`
- Absorbing state indicator [12]: `--absorbing`
- R1 gradient regularisation [13]: `--r1-reg-coeff 1` (default)
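As described in [13], R1 regularisation penalises the squared norm of the discriminator's gradient with respect to its input, evaluated on real (here, expert) samples and scaled by half the coefficient. The sketch below illustrates that definition only and is not the repository's code: it assumes `--r1-reg-coeff` plays the role of the coefficient, approximates the gradient with central finite differences so that it runs without a deep learning framework, and uses the hypothetical names `numerical_gradient`, `r1_penalty`, and `toy_discriminator`. In practice an autograd library would supply the gradient.

```python
def numerical_gradient(f, x, eps=1e-5):
    """Central finite-difference gradient of the scalar function f at point x."""
    grad = []
    for i in range(len(x)):
        up = list(x)
        down = list(x)
        up[i] += eps
        down[i] -= eps
        grad.append((f(up) - f(down)) / (2 * eps))
    return grad


def r1_penalty(discriminator, expert_batch, coeff=1.0):
    """(coeff / 2) * mean squared input-gradient norm over the expert batch."""
    total = 0.0
    for x in expert_batch:
        grad = numerical_gradient(discriminator, x)
        total += sum(g * g for g in grad)
    return 0.5 * coeff * total / len(expert_batch)


def toy_discriminator(x):
    """Toy linear logit D(x) = 3*x0 + 4*x1, whose gradient is [3, 4] everywhere."""
    return 3.0 * x[0] + 4.0 * x[1]


expert_batch = [[0.0, 0.0], [1.0, -1.0], [0.5, 2.0]]
penalty = r1_penalty(toy_discriminator, expert_batch, coeff=1.0)
# Analytically, the penalty is 0.5 * (3**2 + 4**2) = 12.5 for this toy case.
print(penalty)
```

The penalty would be added to the discriminator loss, so a larger coefficient pushes the discriminator towards flatter outputs around expert data.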
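The absorbing state indicator from [12] can be pictured as one extra observation dimension: ordinary states carry a 0 in that slot, and a terminating episode transitions into a dedicated absorbing state (all zeros, indicator 1) that then loops back to itself. The sketch below follows that description from the paper rather than this repository's code; `add_indicator`, `wrap_episode`, the zero action used in the self-loop, and the transition-tuple layout are all assumptions made for illustration.

```python
def add_indicator(state, absorbing=False):
    """Append the absorbing-state indicator to a state vector."""
    if absorbing:
        # The absorbing state itself: all zeros, with the indicator set to 1.
        return [0.0] * len(state) + [1.0]
    return list(state) + [0.0]


def wrap_episode(states, actions):
    """Build (s, a, s') transitions for an episode that ended in termination.

    states[t] is the state in which actions[t] was taken; the episode
    terminates after the last action.
    """
    assert len(states) == len(actions) and len(actions) > 0
    absorbing_state = add_indicator(states[0], absorbing=True)
    zero_action = [0.0] * len(actions[0])
    transitions = []
    for t, action in enumerate(actions):
        if t + 1 < len(states):
            next_state = add_indicator(states[t + 1])
        else:
            # The final real transition leads into the absorbing state.
            next_state = absorbing_state
        transitions.append((add_indicator(states[t]), list(action), next_state))
    # Self-loop, so that a reward can also be learned for the absorbing state.
    transitions.append((absorbing_state, zero_action, absorbing_state))
    return transitions


episode = wrap_episode([[1.0, 2.0], [3.0, 4.0]], [[0.5], [-0.5]])
for transition in episode:
    print(transition)
```

Episodes cut off by a time limit would normally be left unwrapped, since they did not reach a true terminal state.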

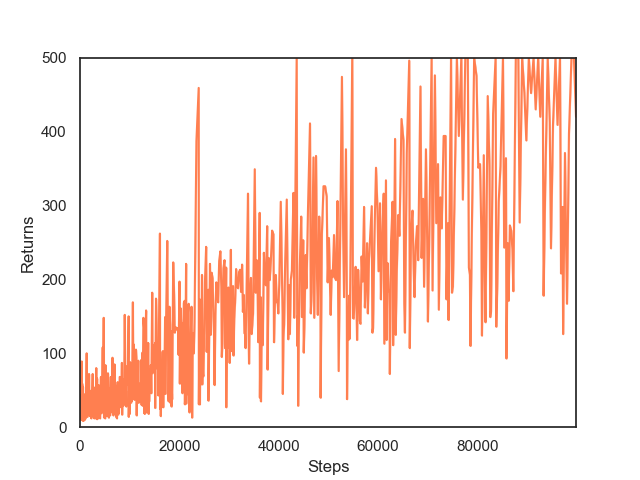

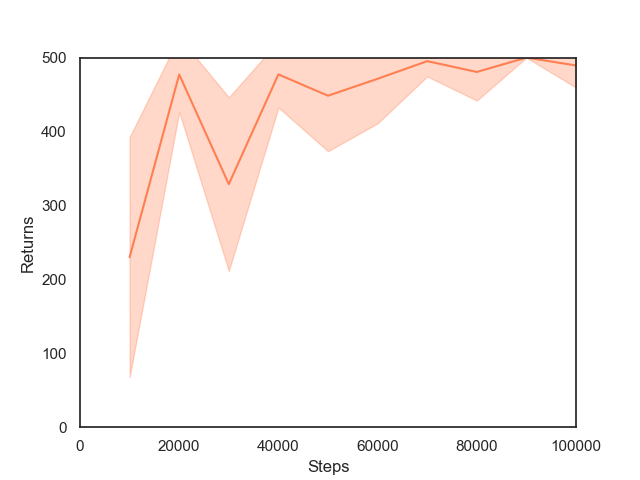

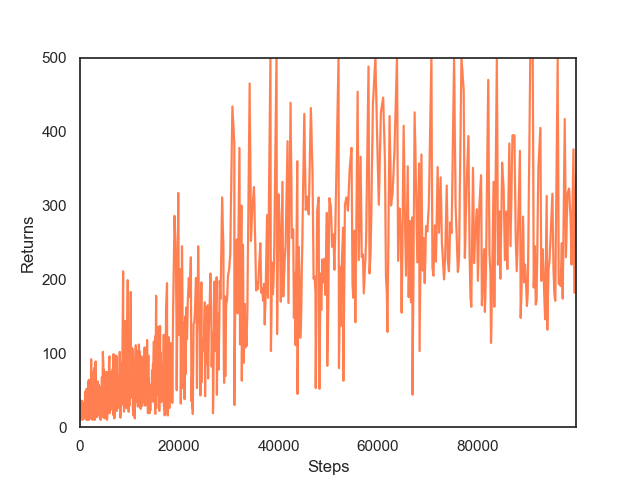

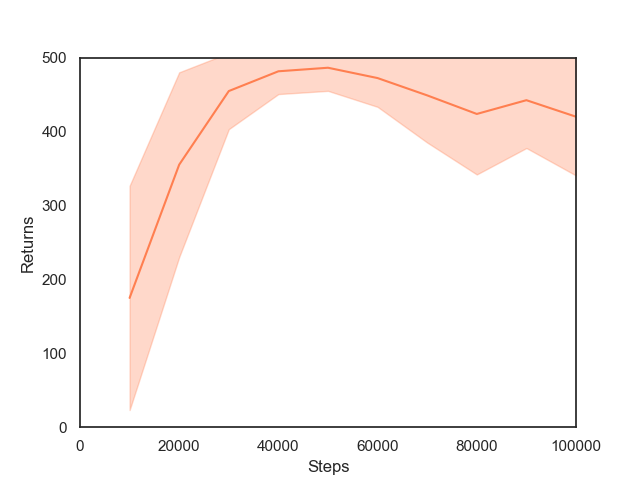

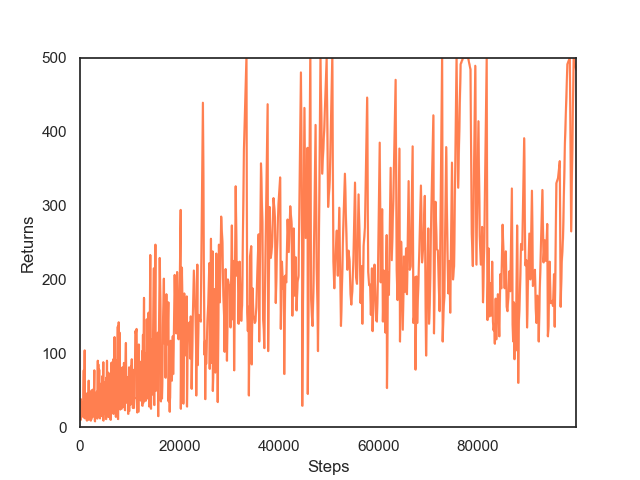

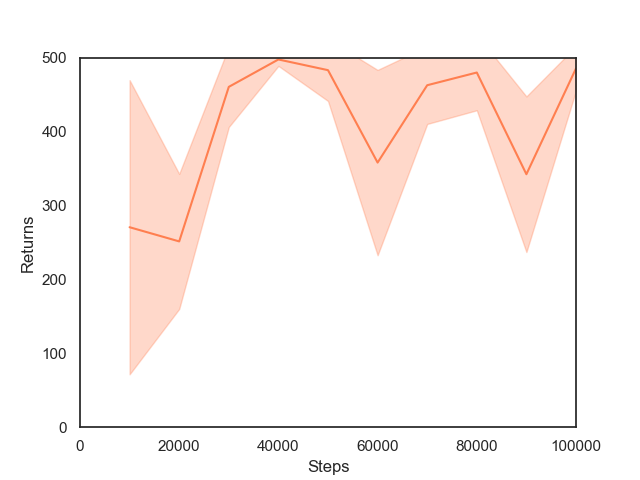

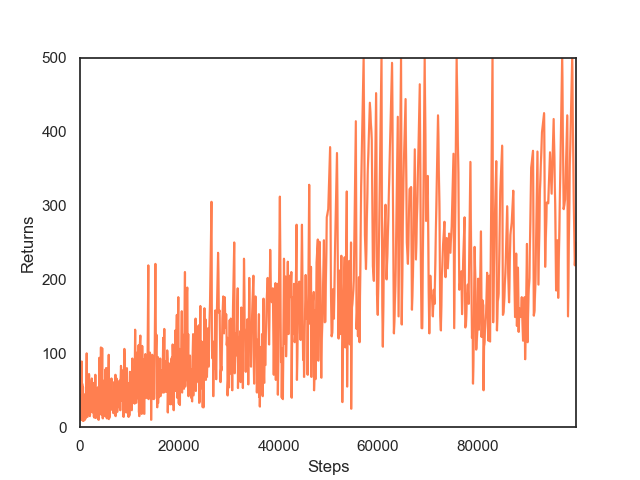

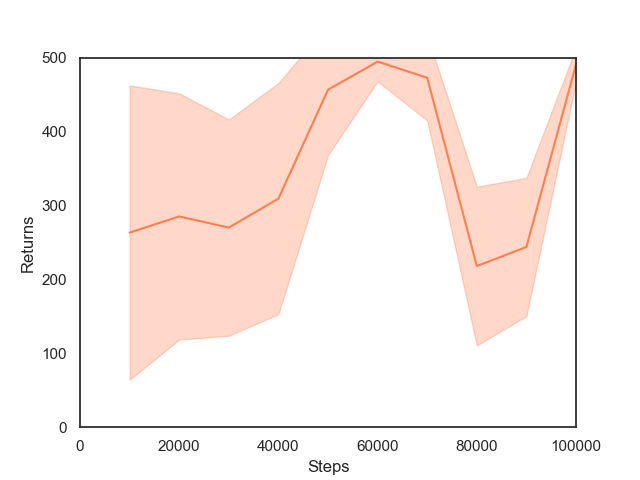

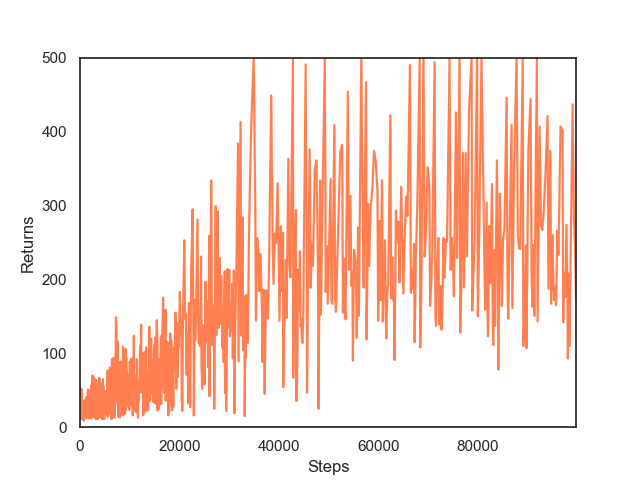

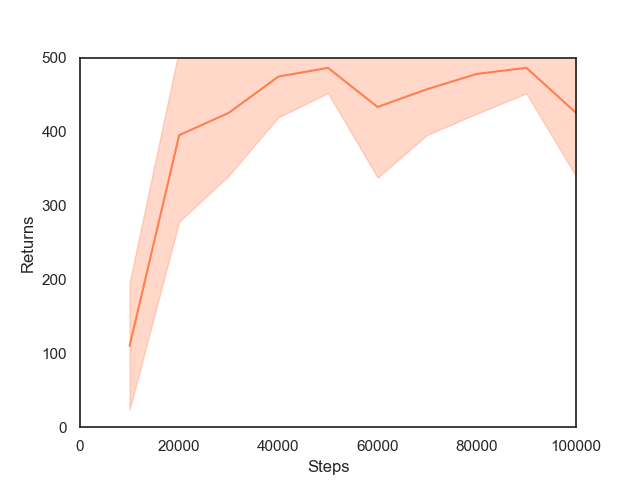

PPO

| Train | Test |
|---|---|
| | |

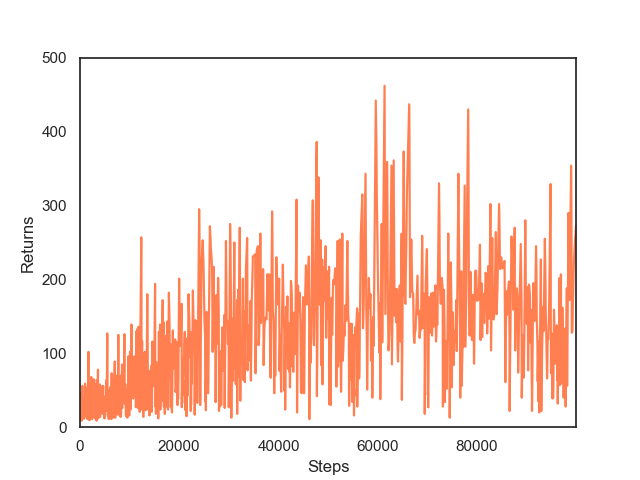

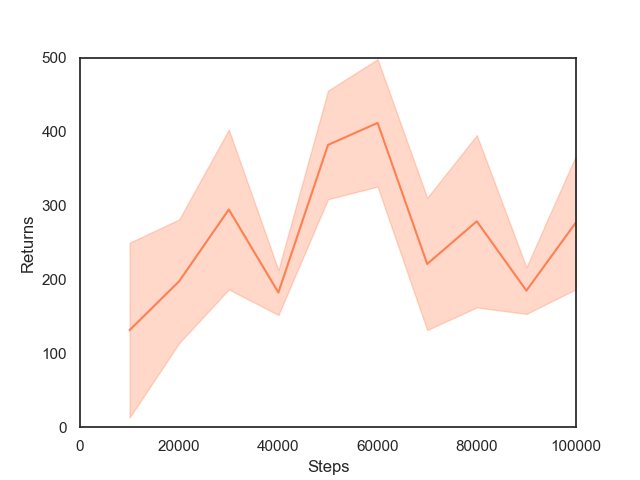

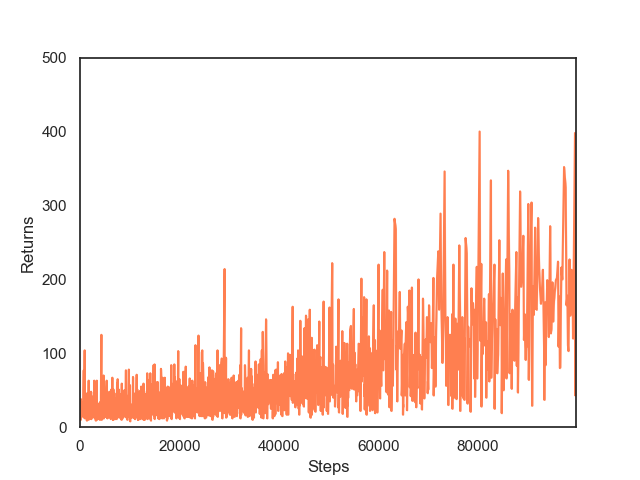

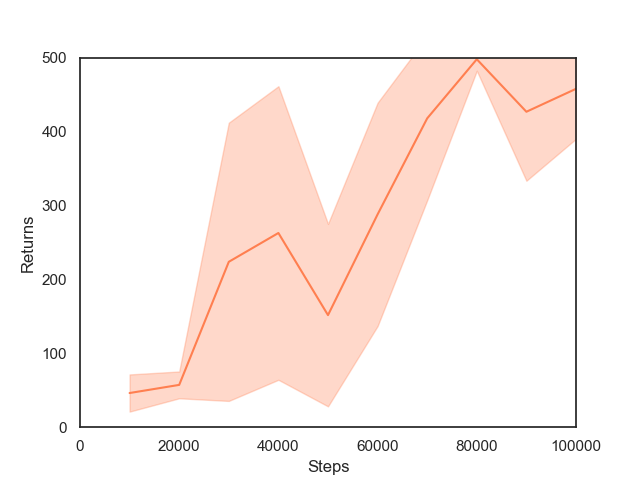

AIRL

| Train | Test |
|---|---|
| | |

BC

| Train | Test |
|---|---|
| | |

DRIL

| Train | Test |
|---|---|
| | |

FAIRL

| Train | Test |
|---|---|
| | |

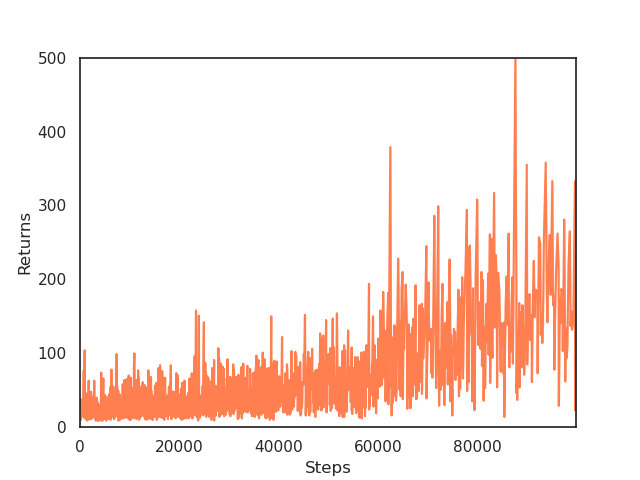

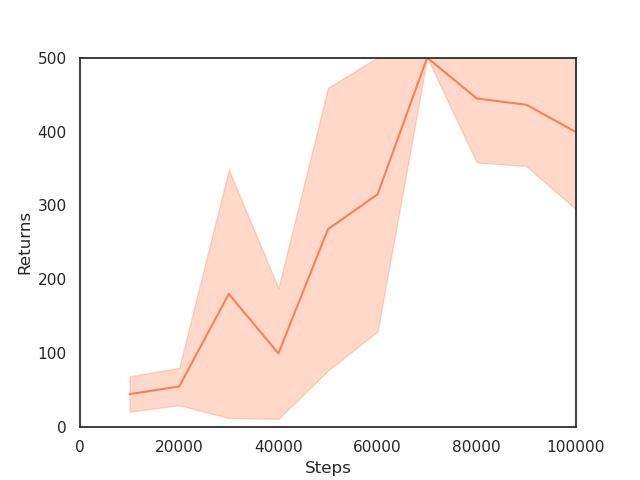

GAIL

| Train | Test |
|---|---|
| | |

GMMIL

| Train | Test |
|---|---|
| | |

nn-PUGAIL

| Train | Test |
|---|---|
| | |

RED

| Train | Test |
|---|---|
| | |

If you find this work useful and would like to cite it, the following would be appropriate:

    @misc{arulkumaran2020pragmatic,
      author = {Arulkumaran, Kai},
      title = {A Pragmatic Look at Deep Imitation Learning},
      url = {https://github.com/Kaixhin/imitation-learning},
      year = {2020}
    }

[1] Proximal Policy Optimization Algorithms

[2] Adversarial Behavioral Cloning

[3] Learning Robust Rewards with Adversarial Inverse Reinforcement Learning

[4] Efficient Training of Artificial Neural Networks for Autonomous Navigation

[5] Disagreement-Regularized Imitation Learning

[6] A Divergence Minimization Perspective on Imitation Learning Methods

[7] Generative Adversarial Imitation Learning

[8] Imitation Learning via Kernel Mean Embedding

[9] Positive-Unlabeled Reward Learning

[10] Primal Wasserstein Imitation Learning

[11] Random Expert Distillation: Imitation Learning via Expert Policy Support Estimation

[12] Discriminator-Actor-Critic: Addressing Sample Inefficiency and Reward Bias in Adversarial Imitation Learning

[13] Which Training Methods for GANs do actually Converge?