A simple experiment with Apex: PyTorch Extension with Tools to Realize the Power of Tensor Cores

To train:

```bash
python CIFAR.py --GPU gpu_name --mode 'FP16' --batch_size 128 --iteration 100
```
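Below is a hypothetical sketch of how `CIFAR.py` could declare these flags with `argparse`. Only the flag names come from the command above. The types, defaults and the `choices` list (borrowed from the `--method` values passed to `make_plot.py`) are assumptions, not the repository's code.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Hypothetical CLI for CIFAR.py; only the flag names are from the README."""
    parser = argparse.ArgumentParser(
        description="Train VGG16 on CIFAR10 in FP32, FP16 or AMP mode")
    # GPU name; per the folder structure, results go under results/<GPU>.
    parser.add_argument("--GPU", type=str, required=True)
    # Precision mode; this choices list is an assumption.
    parser.add_argument("--mode", type=str, default="FP32",
                        choices=["FP32", "FP16", "amp"])
    parser.add_argument("--batch_size", type=int, default=128)
    # Number of training iterations per run (100 in the experiments).
    parser.add_argument("--iteration", type=int, default=100)
    return parser


if __name__ == "__main__":
    # Same arguments as the command above (the shell strips the quotes around 'FP16').
    args = build_parser().parse_args(
        ["--GPU", "gpu_name", "--mode", "FP16",
         "--batch_size", "128", "--iteration", "100"])
    print(args.GPU, args.mode, args.batch_size, args.iteration)  # gpu_name FP16 128 100
```

With `choices`, a typo such as `--mode fp16` is rejected at parse time instead of silently falling through to a default mode.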

To plot the results:

```bash
python make_plot.py --GPU 'gpu_name1' 'gpu_name2' 'gpu_name3' --method 'FP32' 'FP16' 'amp' --batch 128 256 512 1024 2048
```
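`make_plot.py` takes lists of GPUs, methods and batch sizes, so each combination needs a `CIFAR.py` run first. The sketch below enumerates those runs with `itertools.product`.

It makes two assumptions:

- `CIFAR.py --mode` accepts the same method names as `make_plot.py --method`.
- The helper `sweep_commands` is hypothetical, not part of the repository.

Each GPU's commands still have to be run on a machine with that GPU.

```python
import itertools
import shlex

# Values from the make_plot.py command above (GPU names are placeholders).
GPUS = ["gpu_name1", "gpu_name2", "gpu_name3"]
METHODS = ["FP32", "FP16", "amp"]
BATCH_SIZES = [128, 256, 512, 1024, 2048]


def sweep_commands(gpus, methods, batch_sizes, iterations=100):
    """Yield one CIFAR.py command line per (GPU, method, batch size) combination."""
    for gpu, method, batch_size in itertools.product(gpus, methods, batch_sizes):
        argv = ["python", "CIFAR.py", "--GPU", gpu, "--mode", method,
                "--batch_size", str(batch_size), "--iteration", str(iterations)]
        # shlex.join (Python 3.8+) quotes arguments only when needed.
        yield shlex.join(argv)


if __name__ == "__main__":
    # Runs for a single machine: 3 methods x 5 batch sizes = 15 commands.
    for cmd in sweep_commands(["gpu_name"], METHODS, BATCH_SIZES):
        print(cmd)
```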

The basic folder structure is:

```
├── cifar
├── CIFAR.py        # training code
├── utils.py
├── make_plot.py
└── results
    └── gpu_name    # results to be saved here
```

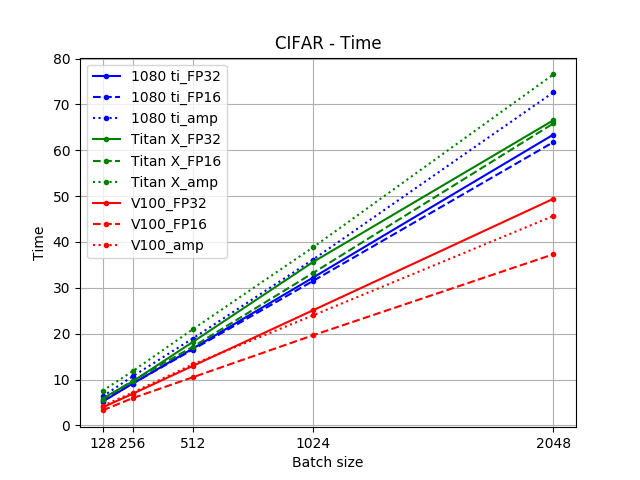

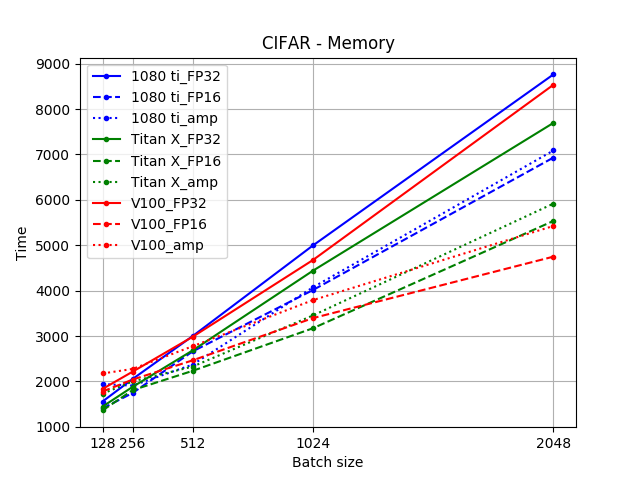

- Network: VGG16
- Dataset: CIFAR10
- Method: FP32 (float32), FP16 (float16; half tensor), AMP (Automatic Mixed Precision)
- GPU: GTX 1080 Ti, GTX TITAN X, Tesla V100
- Batch size: 128, 256, 512, 1024, 2048
- All random seeds are fixed
- Result: mean and standard deviation over 5 runs (100 iterations each)
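Half precision stores only 10 explicit mantissa bits. Its smallest positive (subnormal) value is 2^-24 ≈ 6e-8 and its largest finite value is 65504. Very small gradients therefore round to zero in FP16.

Mixed-precision tools such as Apex's AMP counter this with loss scaling. The loss is multiplied by a scale factor before the backward pass, so the gradients are scaled too, and they are divided by the same factor in full precision before the update.

The sketch below imitates that round trip with the half-precision `'e'` format of Python's `struct` module. The gradient value and the scale of 1024 are illustrative choices, not values from the experiment.

```python
import struct


def to_fp16(x: float) -> float:
    """Round x to IEEE 754 half precision (binary16) and back to a Python float."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


def fp16_grad(grad: float, scale: float = 1.0) -> float:
    """Store a scaled gradient in FP16, then unscale it in full precision."""
    return to_fp16(grad * scale) / scale


if __name__ == "__main__":
    grad = 1e-8                           # illustrative tiny gradient
    print(fp16_grad(grad))                # 0.0: underflows without scaling
    print(fp16_grad(grad, scale=1024.0))  # about 1.0e-08: survives with scaling
    print(to_fp16(65504.0))               # 65504.0: largest finite FP16 value
    # to_fp16(1e5) raises OverflowError: the value is too large for FP16.
```

The last line shows why the scale cannot simply be made huge: scaled values can overflow instead. Apex's dynamic loss scaling handles this by lowering the scale when an overflow is detected.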

Environment:

- Ubuntu 16.04
- Python 3
- CUDA 9.0
- PyTorch 0.4.1
- torchvision 0.2.1

| GPU - Method | Metric | Batch 128 | Batch 256 | Batch 512 | Batch 1024 | Batch 2048 |
|---|---|---|---|---|---|---|
| 1080 Ti - FP32 | Accuracy (%) | 40.92 ± 2.08 | 50.74 ± 3.64 | 61.32 ± 2.43 | 64.79 ± 1.56 | 63.44 ± 1.76 |
| | Time (sec) | 5.16 ± 0.73 | 9.12 ± 1.20 | 16.75 ± 2.05 | 32.23 ± 3.23 | 63.42 ± 4.89 |
| | Memory (MB) | 1557.00 ± 0.00 | 2053.00 ± 0.00 | 2999.00 ± 0.00 | 4995.00 ± 0.00 | 8763.00 ± 0.00 |
| 1080 Ti - FP16 | Accuracy (%) | 43.35 ± 2.04 | 51.00 ± 3.75 | 57.70 ± 1.58 | 63.79 ± 3.95 | 62.64 ± 1.91 |
| | Time (sec) | 5.42 ± 0.71 | 9.11 ± 1.14 | 16.54 ± 1.78 | 31.49 ± 3.01 | 61.79 ± 5.15 |
| | Memory (MB) | 1405.00 ± 0.00 | 1745.00 ± 0.00 | 2661.00 ± 0.00 | 4013.00 ± 0.00 | 6931.00 ± 0.00 |
| 1080 Ti - AMP | Accuracy (%) | 41.11 ± 1.19 | 47.59 ± 1.79 | 60.37 ± 2.48 | 63.31 ± 1.92 | 63.41 ± 3.75 |
| | Time (sec) | 6.32 ± 0.70 | 10.70 ± 1.11 | 18.95 ± 1.80 | 36.15 ± 3.01 | 72.64 ± 5.11 |
| | Memory (MB) | 1941.00 ± 317.97 | 1907.00 ± 179.63 | 2371.00 ± 0.00 | 4073.00 ± 0.00 | 7087.00 ± 0.00 |
| TITAN X - FP32 | Accuracy (%) | 42.90 ± 2.42 | 45.78 ± 1.22 | 60.88 ± 1.78 | 64.22 ± 2.62 | 63.79 ± 1.62 |
| | Time (sec) | 5.86 ± 0.80 | 9.59 ± 1.29 | 18.19 ± 1.84 | 35.62 ± 4.07 | 66.56 ± 4.62 |
| | Memory (MB) | 1445.00 ± 0.00 | 1879.00 ± 0.00 | 2683.00 ± 0.00 | 4439.00 ± 0.00 | 7695.00 ± 0.00 |
| TITAN X - FP16 | Accuracy (%) | 39.13 ± 3.56 | 49.87 ± 2.42 | 59.77 ± 1.77 | 65.57 ± 2.82 | 64.08 ± 1.80 |
| | Time (sec) | 5.66 ± 0.97 | 9.72 ± 1.23 | 17.14 ± 1.82 | 33.23 ± 3.50 | 65.86 ± 4.94 |
| | Memory (MB) | 1361.00 ± 0.00 | 1807.00 ± 0.00 | 2233.00 ± 0.00 | 3171.00 ± 0.00 | 5535.00 ± 0.00 |
| TITAN X - AMP | Accuracy (%) | 42.57 ± 1.25 | 49.59 ± 2.14 | 59.76 ± 1.60 | 63.76 ± 4.24 | 65.14 ± 2.93 |
| | Time (sec) | 7.55 ± 1.03 | 11.82 ± 1.07 | 20.96 ± 1.83 | 38.82 ± 3.17 | 76.54 ± 6.60 |
| | Memory (MB) | 1729.00 ± 219.51 | 1999.00 ± 146.97 | 2327.00 ± 0.00 | 3453.00 ± 0.00 | 5917.00 ± 0.00 |
| V100 - FP32 | Accuracy (%) | 42.56 ± 1.37 | 49.50 ± 1.81 | 60.91 ± 0.88 | 65.26 ± 1.76 | 63.93 ± 3.69 |
| | Time (sec) | 3.93 ± 0.54 | 6.90 ± 0.82 | 12.97 ± 1.27 | 25.11 ± 1.83 | 49.43 ± 3.46 |
| | Memory (MB) | 1834.00 ± 0.00 | 2214.00 ± 0.00 | 2983.60 ± 116.80 | 4674.00 ± 304.00 | 8534.80 ± 826.40 |
| V100 - FP16 | Accuracy (%) | 43.37 ± 2.13 | 51.78 ± 2.48 | 58.46 ± 1.81 | 64.72 ± 2.37 | 63.21 ± 1.60 |
| | Time (sec) | 3.28 ± 0.52 | 5.95 ± 1.03 | 10.50 ± 1.27 | 19.65 ± 1.95 | 37.32 ± 3.73 |
| | Memory (MB) | 1777.20 ± 25.60 | 2040.00 ± 0.00 | 2464.00 ± 0.00 | 3394.00 ± 0.00 | 4748.00 ± 0.00 |
| V100 - AMP | Accuracy (%) | 42.39 ± 2.35 | 51.33 ± 1.84 | 61.41 ± 2.10 | 65.05 ± 3.29 | 61.67 ± 3.13 |
| | Time (sec) | 4.27 ± 0.54 | 7.18 ± 0.90 | 13.31 ± 1.26 | 23.99 ± 2.29 | 45.68 ± 3.77 |
| | Memory (MB) | 2174.80 ± 211.74 | 2274.00 ± 172.15 | 2775.20 ± 77.60 | 3790.80 ± 154.40 | 5424.00 ± 0.00 |
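Each cell in the results table is reported as mean ± std over the 5 runs. The sketch below reproduces that formatting. It assumes the population standard deviation (dividing by n, as `numpy.std` does by default); the sample version (dividing by n - 1) is shown for comparison. The five accuracies in the example are made-up values, not measurements from this experiment.

```python
import statistics


def mean_std(values, population=True):
    """Format repeated measurements as 'mean ± std' with two decimals.

    population=True divides by n (like numpy.std's default); whether the table
    used this or the sample std is an assumption.
    """
    mean = statistics.mean(values)
    std = statistics.pstdev(values) if population else statistics.stdev(values)
    return f"{mean:.2f} ± {std:.2f}"


if __name__ == "__main__":
    runs = [40.0, 42.0, 44.0, 41.0, 43.0]    # made-up accuracies from 5 runs
    print(mean_std(runs))                     # 42.00 ± 1.41
    print(mean_std(runs, population=False))  # 42.00 ± 1.58 (sample std)
```

With only 5 runs the two conventions differ by roughly 12%, so the choice matters when comparing these numbers with other reports.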

Plots: Time | Memory | Time with std | Memory with std (image files missing)
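The time and memory results can be expressed in relative terms with a small worked example:

- **Speedup** is the FP32 time divided by the other method's time (above 1 means faster).
- **Memory saving** is one minus the memory ratio.

The inputs are the V100, batch size 2048 means copied from the results table. Standard deviations are ignored, so the ratios are indicative only.

```python
# Means from the results table: V100, batch size 2048.
TIME_SEC = {"FP32": 49.43, "FP16": 37.32, "AMP": 45.68}
MEMORY_MB = {"FP32": 8534.80, "FP16": 4748.00, "AMP": 5424.00}


def speedup(baseline: float, other: float) -> float:
    """How many times faster `other` is than `baseline` (>1 means faster)."""
    return baseline / other


def memory_saving(baseline: float, other: float) -> float:
    """Fraction of the baseline memory that `other` saves."""
    return 1.0 - other / baseline


if __name__ == "__main__":
    for method in ("FP16", "AMP"):
        s = speedup(TIME_SEC["FP32"], TIME_SEC[method])
        m = memory_saving(MEMORY_MB["FP32"], MEMORY_MB[method])
        print(f"{method}: {s:.2f}x faster, {m:.1%} less memory")
    # FP16: 1.32x faster, 44.4% less memory
    # AMP: 1.08x faster, 36.4% less memory
```

The same arithmetic on the 1080 Ti row (63.42 s for FP32 vs 72.64 s for AMP at batch 2048) gives a speedup below 1. Of the three GPUs tested, only the V100 has Tensor Cores.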