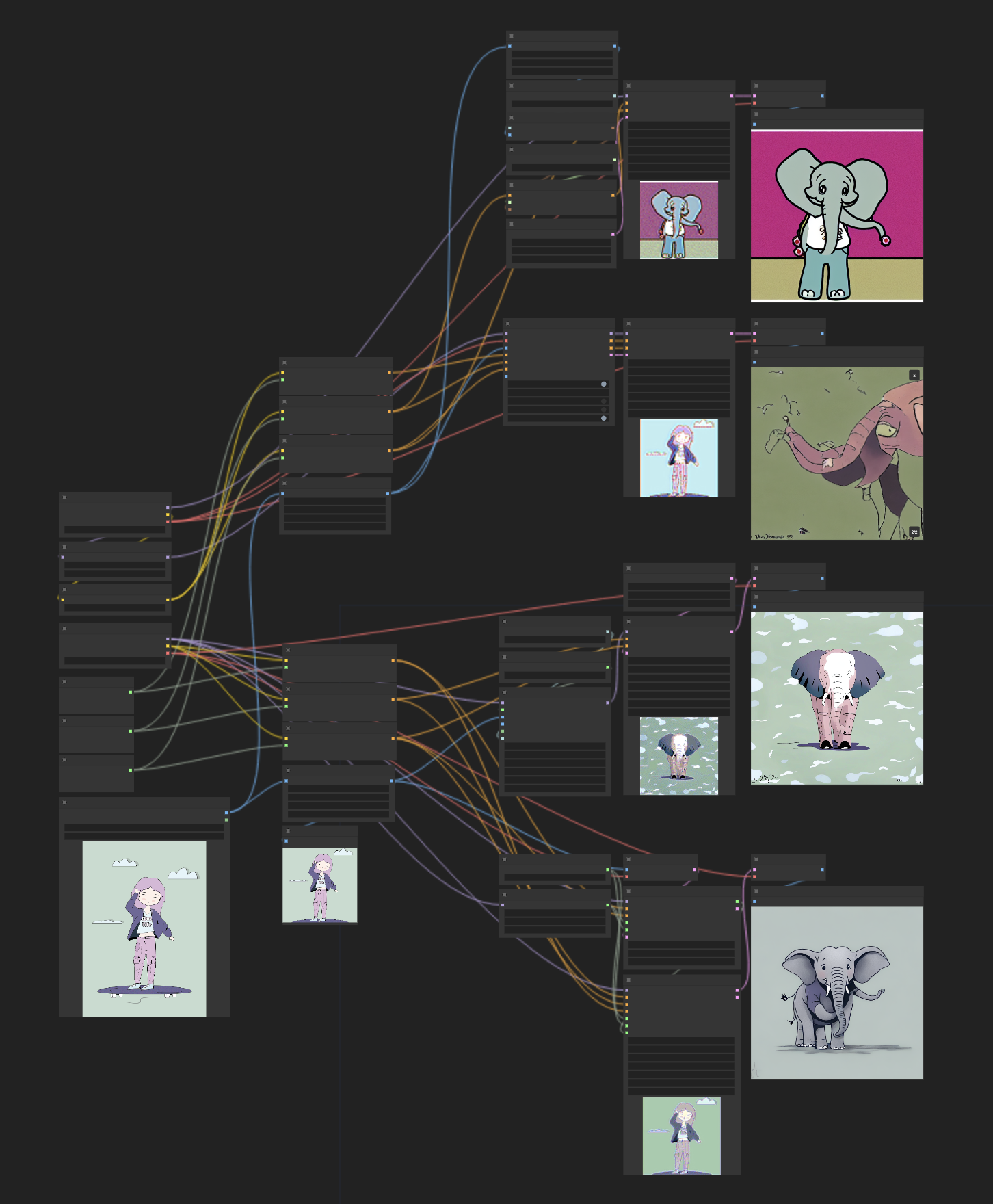

This repository contains a ComfyUI workflow for testing different style transfer methods with Stable Diffusion. ComfyUI is a user-friendly interface for running Stable Diffusion models. Each method transfers style from a single reference image.

- The SD 1.5 Style ControlNet Coadapter
  - Required nodes are built into ComfyUI
  - Docs: https://github.com/TencentARC/T2I-Adapter/blob/SD/docs/coadapter.md
- Visual Style Prompt for SD 1.5
  - ComfyUI node: https://github.com/ExponentialML/ComfyUI_VisualStylePrompting
  - Project page: https://curryjung.github.io/VisualStylePrompt/
- The SD XL IPAdapter with style transfer weight types
  - ComfyUI node: https://github.com/cubiq/ComfyUI_IPAdapter_plus
  - Project page: https://ip-adapter.github.io/
- StyleAligned for SD XL
  - ComfyUI node: https://github.com/brianfitzgerald/style_aligned_comfy
  - Project page: https://style-aligned-gen.github.io/

| Style Method | an elephant |

a robot |

a car |

|---|---|---|---|

| SD 1.5 Style Adapter |  |

|

|

| SD 1.5 Visual Style Prompt |  |

|

|

| SD XL IPAdapter |  |

|

|

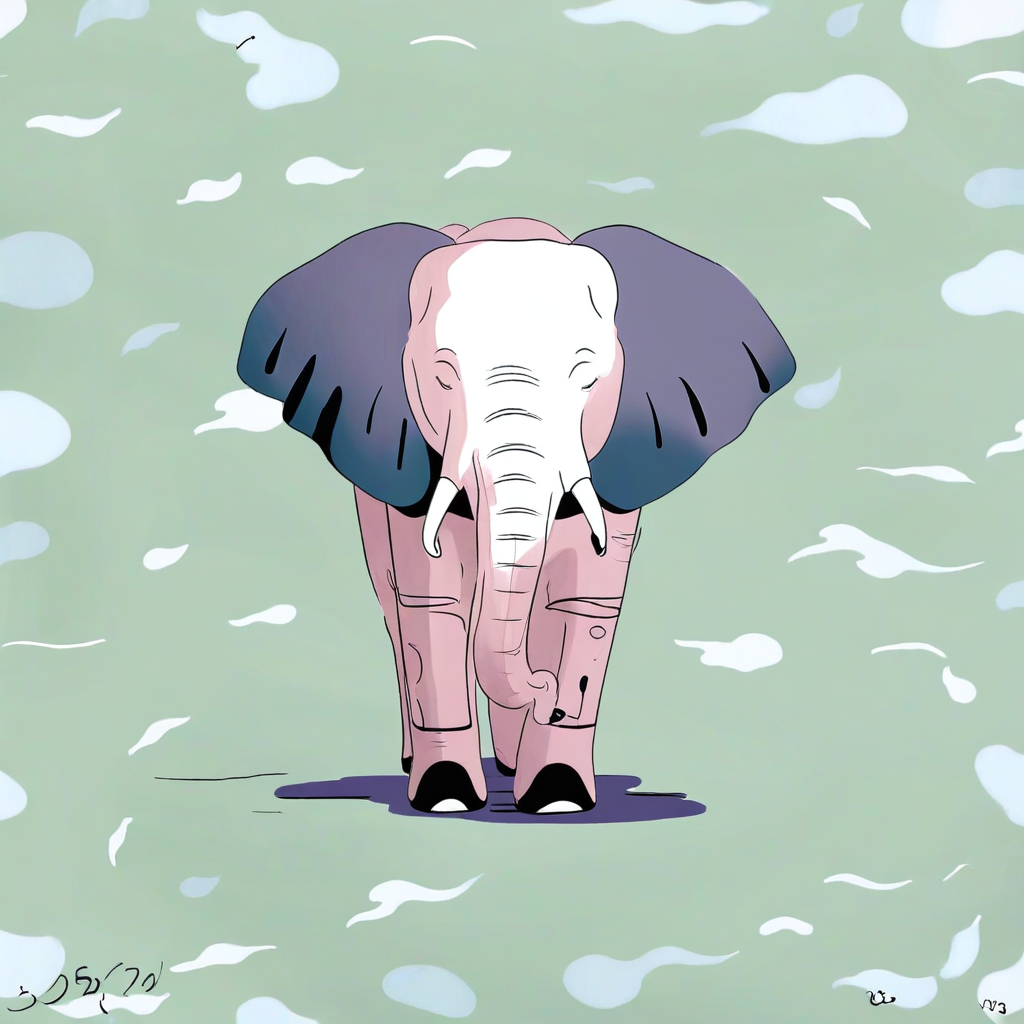

| SD XL StyleAligned |  |

|

|

Custom nodes you'll need (the ones linked in the method list above):

- ComfyUI_VisualStylePrompting: https://github.com/ExponentialML/ComfyUI_VisualStylePrompting
- ComfyUI_IPAdapter_plus: https://github.com/cubiq/ComfyUI_IPAdapter_plus
- style_aligned_comfy: https://github.com/brianfitzgerald/style_aligned_comfy

Models you'll need:

- SD 1.5 Style ControlNet Coadapter: https://huggingface.co/TencentARC/T2I-Adapter/blob/main/models/coadapter-style-sd15v1.pth (add to `models/controlnet`)
- Clip Vision Large: https://huggingface.co/openai/clip-vit-large-patch14/blob/main/pytorch_model.bin (add to `models/clip_vision`)
- OpenCLIP: https://huggingface.co/laion/CLIP-ViT-H-14-laion2B-s32B-b79K/blob/main/open_clip_pytorch_model.safetensors (add to `models/clip_vision`)
- SD XL IPAdapter: https://huggingface.co/h94/IP-Adapter/resolve/main/sdxl_models/ip-adapter-plus_sdxl_vit-h.safetensors (add to `models/ipadapter`)
- An SD 1.5 checkpoint
- An SD XL checkpoint
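Before loading the workflow, it can help to confirm that the downloaded files ended up in the right folders. The following is a minimal sketch, not part of this repository: the `missing_models` helper and the `ComfyUI` root path are hypothetical, and it assumes each file keeps the name it has in its download link (rename the entries if you saved the files under different names).

```python
from pathlib import Path

# Expected files, taken from the download links in the model list,
# keyed by their folder relative to the ComfyUI root.
# Assumption: files keep their upstream names after download.
EXPECTED_MODELS = {
    "models/controlnet": ["coadapter-style-sd15v1.pth"],
    "models/clip_vision": [
        "pytorch_model.bin",
        "open_clip_pytorch_model.safetensors",
    ],
    "models/ipadapter": ["ip-adapter-plus_sdxl_vit-h.safetensors"],
}


def missing_models(comfy_root):
    """Return relative paths of expected model files that are not present."""
    root = Path(comfy_root)
    missing = []
    for folder, filenames in EXPECTED_MODELS.items():
        for name in filenames:
            if not (root / folder / name).is_file():
                missing.append(f"{folder}/{name}")
    return missing


if __name__ == "__main__":
    # "ComfyUI" is a placeholder; point it at your own install directory.
    for path in missing_models("ComfyUI"):
        print("missing:", path)
```

The SD 1.5 and SD XL checkpoints are not checked here because the workflow does not prescribe specific files for them.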

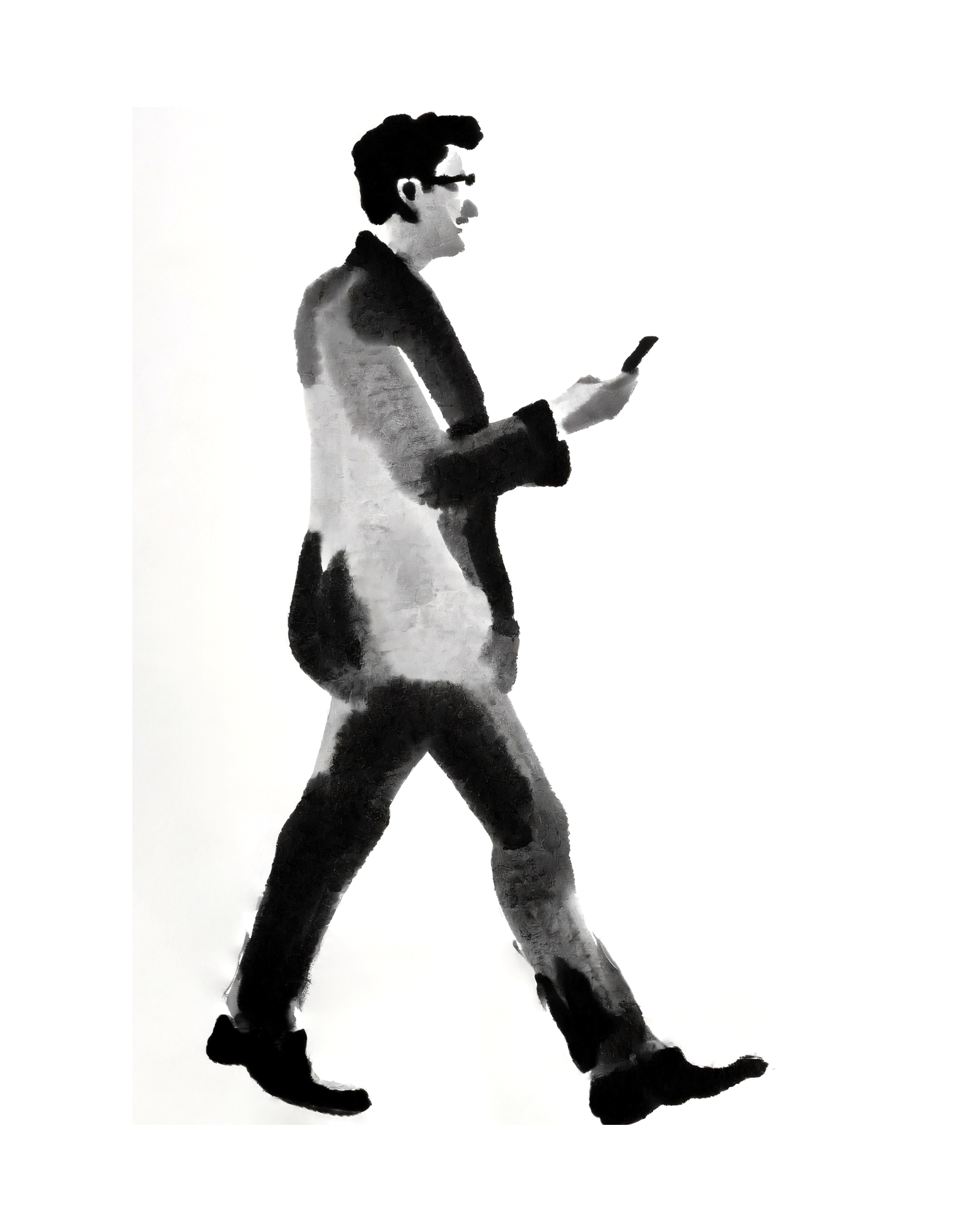

For this test, we kept the prompts as simple as possible and left style details out of the generation prompt. This lets us evaluate each style transfer method in a more isolated, prompt-independent way.

For the best results, you should engineer the prompts to better fit your needs.

Make sure to read the documentation of each method and each ComfyUI node to understand how to use them properly.

When you load the workflow, the ControlNet branch is disabled by default because the current implementation of Visual Style Prompting is incompatible with the ControlNet style model (see below). To enable the branch, select all the top nodes and press CTRL+M. Use the two methods one at a time, and restart ComfyUI when you want to switch between them.
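If you are unsure which nodes are currently disabled, you can inspect the saved workflow file. The sketch below is an illustration under stated assumptions, not a documented ComfyUI API: it assumes the exported workflow JSON has a top-level `nodes` list whose entries carry `id`, `type`, and `mode` fields, and that `mode` 0 means active, 2 means muted (the state CTRL+M toggles), and 4 means bypassed. The helper names and the `workflow.json` file name are hypothetical.

```python
import json

# Assumed mode values stored in ComfyUI workflow files:
# 0 = active, 2 = muted, 4 = bypassed.
MODE_LABELS = {0: "active", 2: "muted", 4: "bypassed"}


def inactive_nodes(workflow):
    """Return (id, type, state) for every node that is not active."""
    result = []
    for node in workflow.get("nodes", []):
        mode = node.get("mode", 0)  # a missing mode is treated as active
        if mode != 0:
            state = MODE_LABELS.get(mode, f"mode {mode}")
            result.append((node.get("id"), node.get("type"), state))
    return result


def load_workflow(path):
    """Read a workflow JSON file exported from ComfyUI."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)
```

For example, `inactive_nodes(load_workflow("workflow.json"))` would list the nodes of the disabled branch if the assumptions above hold for your ComfyUI version.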

SD 1.5 Style ControlNet Coadapter:

- Tends to also capture and transfer semantic information from the reference image;
- Can be combined with other ControlNets for better composition results;
- No parameters to tweak;
- Finding good seeds took a lot of time, and the results shown are cherry-picked.

Visual Style Prompt for SD 1.5:

- To get the best results, you must engineer both the positive prompt and the reference image prompt carefully;
- Focus on the details you want to derive from the reference image and the details you wish to see in the output;
- In the current implementation, the custom node we used updates model attention in a way that is incompatible with applying ControlNet style models via the "Apply Style Model" node;
- Once you run the "Apply Visual Style Prompting" node, you can no longer apply the ControlNet style model; restart ComfyUI if you plan to do so;
- Tends to produce more abstract/creative results, which can be a good thing if you're looking for a more artistic output.

SD XL IPAdapter:

- The style transfer weight types are a feature of the IPAdapter v2 node, for SD XL only;
- Outputs are consistently good and style-aligned with the original;
- Tends to focus on and add detail to faces;
- `Weight`, `start at`, and `end at` are adjustable parameters;
- Maybe the best style transfer method at the time of writing.
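To build intuition for the three adjustable parameters, here is a minimal sketch of how a weight could be gated by a start/end window over the sampling steps. It is an illustration only, not the IPAdapter node's code: it assumes that `start_at` and `end_at` are fractions between 0 and 1 of the sampling schedule and that the weight is constant inside the window. The function name `adapter_weight_at` is hypothetical, so check the node's documentation for the actual behavior.

```python
def adapter_weight_at(step, total_steps, weight=1.0, start_at=0.0, end_at=1.0):
    """Weight applied at a sampling step under the assumed windowing rule.

    step is zero-based; start_at and end_at are assumed to be fractions
    of the sampling schedule in the range 0.0 to 1.0.
    """
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    progress = step / total_steps  # 0.0 at the first step
    # Inside the window the full weight applies; outside it, none.
    return weight if start_at <= progress < end_at else 0.0


if __name__ == "__main__":
    # With 20 steps and a window covering the first half of the schedule,
    # the reference style only influences steps 0 through 9.
    for step in range(20):
        print(step, adapter_weight_at(step, 20, weight=0.8, end_at=0.5))
```

Under these assumptions, ending the window early lets the reference image shape the overall look during the first steps while leaving the later steps to the prompt alone.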

StyleAligned for SD XL:

- A bit more complex to use, but worth the effort;
- Outputs are good, but some semantics also appear to be transferred;
- Allows customization via the `share_attn` and `share_norm` parameters.

Made with 💚 by the CozyMantis squad. Check out our ComfyUI nodes and workflows!

Please check licenses and terms of use for each of the nodes and models required by this workflow.

The workflow should not be used to intentionally create or disseminate images that create hostile or alienating environments for people. This includes generating images that people would foreseeably find disturbing, distressing, or offensive; or content that propagates historical or current stereotypes.