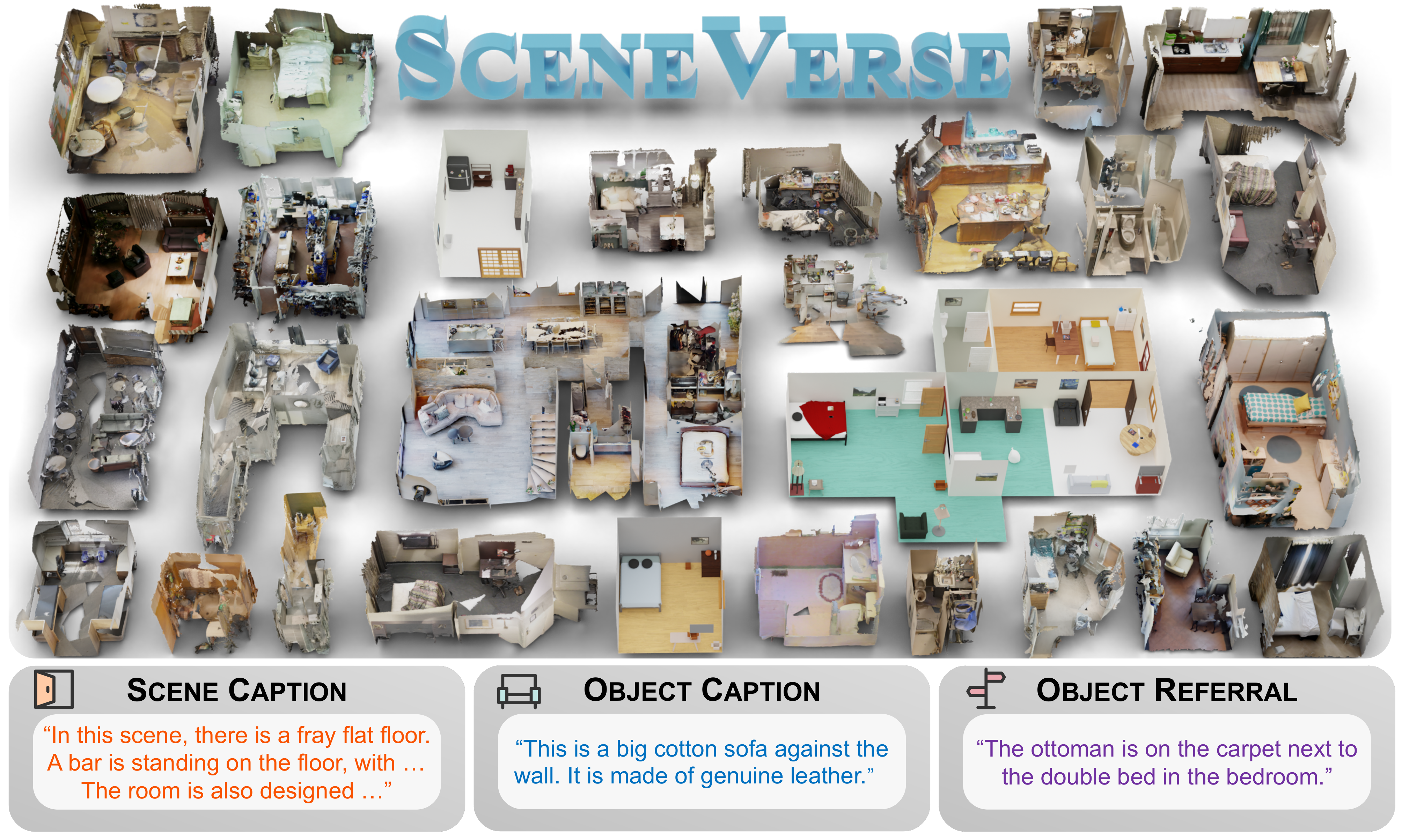

We propose SceneVerse, the first million-scale 3D vision-language dataset, with 68K 3D indoor scenes and 2.5M vision-language pairs. We demonstrate the scaling effect by (i) achieving state-of-the-art performance on all existing 3D visual grounding benchmarks and (ii) showcasing zero-shot transfer capabilities with our GPS (Grounded Pre-training for Scenes) model.

- [2024-10] Pre-trained checkpoints are now available; see TRAIN.md for detailed instructions!
- [2024-09] The scripts for scene graph generation are released.
- [2024-07] Training, inference, and preprocessing code is released; checkpoints and logs are on the way!
- [2024-07] Preprocessing code for the scenes used in SceneVerse is released.
- [2024-07] SceneVerse is accepted by ECCV 2024! Training and inference code and checkpoints will come shortly, stay tuned!
- [2024-03] We release the data used in SceneVerse. Fill out the form for the download link!
- [2024-01] We release SceneVerse on arXiv. Check out our paper and website.

See DATA.md for detailed instructions on data download, processing, and visualization. The data inventory is listed below:

| Dataset | Object Caption | Scene Caption | Ref-Annotation | Ref-Pairwise (`rel2`) | Ref-MultiObject (`relm`) | Ref-Star (`star`) | Ref-Chain (Optional) (`chain`) |
|---|---|---|---|---|---|---|---|
| ScanNet | ✅ | ✅ | ScanRefer, Nr3D | ✅ | ✅ | ✅ | ✅ |
| MultiScan | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| ARKitScenes | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| HM3D | template | ✅ | ✅ | ✅ | ✅ | ✅ | ✅ |
| 3RScan | ✅ | ✅ | ❌ | ✅ | ✅ | ✅ | ✅ |
| Structured3D | template | ✅ | ❌ | ✅ | ✅ | ✅ | ❌ |
| ProcTHOR | template | ❌ | ❌ | template | template | template | ❌ |
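To make the table easier to query programmatically (for example, to pick which datasets contribute human-written referring expressions versus template-generated ones), the availability matrix can be encoded as a small lookup structure. This is a minimal, hypothetical sketch and not part of the SceneVerse codebase: the names `Source`, `COLUMNS`, `INVENTORY`, and `datasets_with` are illustrative assumptions, and the actual file layout is described in DATA.md. For ScanNet, the Ref-Annotation entry stands for the existing ScanRefer and Nr3D annotations.

```python
from enum import Enum
from typing import List


class Source(Enum):
    """How an annotation type is provided for a dataset in the inventory table."""
    AVAILABLE = "available"  # ✅
    TEMPLATE = "template"    # template-generated
    MISSING = "missing"      # ❌


A, T, M = Source.AVAILABLE, Source.TEMPLATE, Source.MISSING

# Column order follows the inventory table (hypothetical snake_case names).
COLUMNS = ["object_caption", "scene_caption", "ref_annotation",
           "ref_pairwise", "ref_multiobject", "ref_star", "ref_chain"]

# Hypothetical encoding of the inventory table, one row per dataset.
# ScanNet's Ref-Annotation cell refers to the ScanRefer and Nr3D annotations.
INVENTORY = {
    "ScanNet":      [A, A, A, A, A, A, A],
    "MultiScan":    [A, A, A, A, A, A, A],
    "ARKitScenes":  [A, A, A, A, A, A, A],
    "HM3D":         [T, A, A, A, A, A, A],
    "3RScan":       [A, A, M, A, A, A, A],
    "Structured3D": [T, A, M, A, A, A, M],
    "ProcTHOR":     [T, M, M, T, T, T, M],
}


def datasets_with(column: str, source: Source = Source.AVAILABLE) -> List[str]:
    """Return datasets whose entry for `column` matches `source`, in table order."""
    idx = COLUMNS.index(column)
    return [name for name, row in INVENTORY.items() if row[idx] is source]


if __name__ == "__main__":
    print(datasets_with("ref_annotation"))                   # human referring annotations
    print(datasets_with("object_caption", Source.TEMPLATE))  # template object captions
```

Running it prints `['ScanNet', 'MultiScan', 'ARKitScenes', 'HM3D']` and then `['HM3D', 'Structured3D', 'ProcTHOR']`. An enum with three states is used instead of a boolean because the table distinguishes template-generated annotations from both available and missing ones.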

See TRAIN.md for the inventory of available checkpoints and detailed instructions on training and testing with pre-trained checkpoints. The checkpoint inventory is listed below:

| Setting | Description | Corresponding Experiment | Checkpoint based on experiment setting |
|---|---|---|---|
| pre-trained | GPS model pre-trained on SceneVerse | 3D-VL grounding (Tab. 2) | Model |
| scratch | GPS model trained on datasets from scratch | 3D-VL grounding (Tab. 2), SceneVerse-val (Tab. 3) | ScanRefer, Sr3D, Nr3D, SceneVerse-val |
| fine-tuned | GPS model fine-tuned on datasets with grounding heads | 3D-VL grounding (Tab. 2) | ScanRefer, Sr3D, Nr3D |
| zero-shot | GPS model trained on SceneVerse without data from ScanNet and MultiScan | Zero-shot Transfer (Tab. 3) | Model |
| zero-shot text | GPS | Zero-shot Transfer (Tab. 3) | ScanNet, SceneVerse-val |
| text-ablation | Ablations on the type of language used during pre-training | Ablation on Text (Tab. 7) | Template only, Template+LLM |
| scene-ablation | Ablations on the use of synthetic scenes during pre-training | Ablation on Scene (Tab. 8) | Real only, S3D only, ProcTHOR only |
| model-ablation | Ablations on the use of losses during pre-training | Ablation on Model Design (Tab. 9) | Refer only, Refer+Obj-lvl, w/o Scene-lvl |
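When reproducing a specific result from the paper, it can help to go the other way: start from a table number and find which checkpoint settings it needs. The sketch below restates the checkpoint inventory as data and does that reverse lookup. It is illustrative only and assumes nothing about the repository's own configuration; `CheckpointSetting`, `CHECKPOINTS`, and `settings_for_table` are hypothetical names, and how to download and load each checkpoint is documented in TRAIN.md.

```python
from typing import Dict, List, NamedTuple


class CheckpointSetting(NamedTuple):
    description: str
    experiments: List[str]  # paper tables this setting corresponds to
    checkpoints: List[str]  # checkpoint variants listed for this setting


# Hypothetical restatement of the checkpoint inventory table above.
CHECKPOINTS: Dict[str, CheckpointSetting] = {
    "pre-trained": CheckpointSetting(
        "GPS model pre-trained on SceneVerse", ["Tab. 2"], ["Model"]),
    "scratch": CheckpointSetting(
        "GPS model trained on datasets from scratch", ["Tab. 2", "Tab. 3"],
        ["ScanRefer", "Sr3D", "Nr3D", "SceneVerse-val"]),
    "fine-tuned": CheckpointSetting(
        "GPS model fine-tuned on datasets with grounding heads", ["Tab. 2"],
        ["ScanRefer", "Sr3D", "Nr3D"]),
    "zero-shot": CheckpointSetting(
        "GPS model trained on SceneVerse without data from ScanNet and MultiScan",
        ["Tab. 3"], ["Model"]),
    "zero-shot text": CheckpointSetting(
        "GPS", ["Tab. 3"], ["ScanNet", "SceneVerse-val"]),
    "text-ablation": CheckpointSetting(
        "Ablations on the type of language used during pre-training", ["Tab. 7"],
        ["Template only", "Template+LLM"]),
    "scene-ablation": CheckpointSetting(
        "Ablations on the use of synthetic scenes during pre-training", ["Tab. 8"],
        ["Real only", "S3D only", "ProcTHOR only"]),
    "model-ablation": CheckpointSetting(
        "Ablations on the use of losses during pre-training", ["Tab. 9"],
        ["Refer only", "Refer+Obj-lvl", "w/o Scene-lvl"]),
}


def settings_for_table(table: str) -> List[str]:
    """Return the settings whose corresponding experiments include `table`."""
    return [name for name, s in CHECKPOINTS.items() if table in s.experiments]


if __name__ == "__main__":
    for name in settings_for_table("Tab. 3"):
        print(name, CHECKPOINTS[name].checkpoints)
```

For `"Tab. 3"` this lists `scratch`, `zero-shot`, and `zero-shot text` together with their checkpoint variants.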

@inproceedings{jia2024sceneverse,
  title={Sceneverse: Scaling 3d vision-language learning for grounded scene understanding},
  author={Jia, Baoxiong and Chen, Yixin and Yu, Huangyue and Wang, Yan and Niu, Xuesong and Liu, Tengyu and Li, Qing and Huang, Siyuan},
  booktitle={European Conference on Computer Vision (ECCV)},
  year={2024}
}

We thank the authors of ScanRefer, ScanNet, 3RScan, ReferIt3D, Structured3D, HM3D, ProcTHOR, ARKitScenes, and MultiScan for open-sourcing their awesome datasets. We also heavily adapted code from ScanQA, SQA3D, and 3D-VisTA for training and inference.