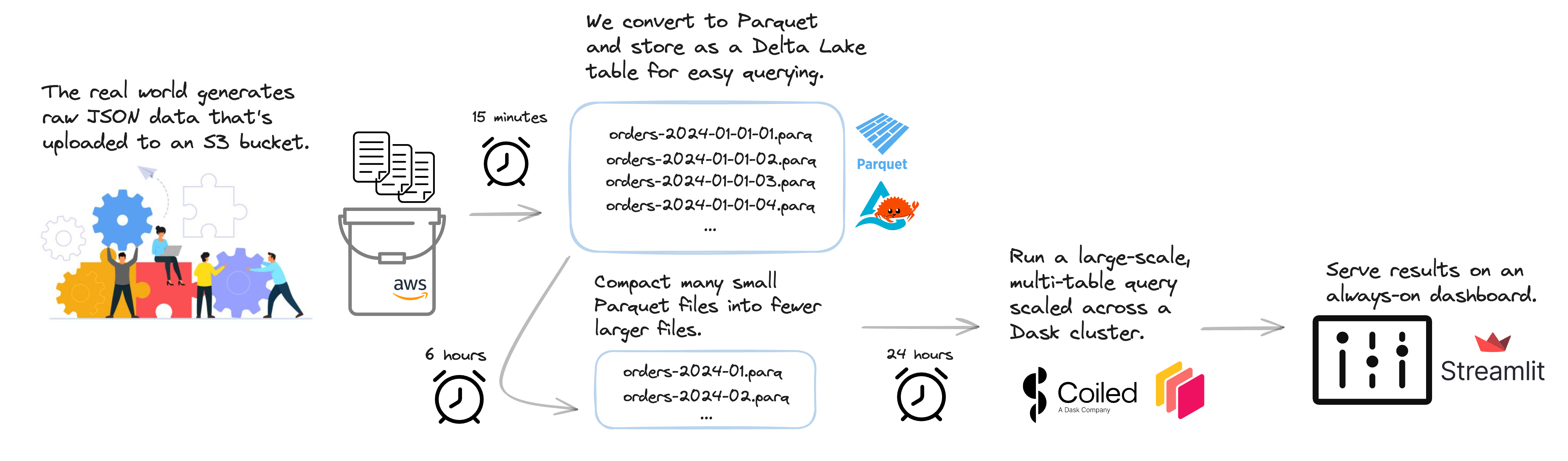

This repository is a lightweight, scalable example pipeline that runs large Python jobs on a schedule in the cloud. We hope this example is easy to copy and modify for your own needs.

It’s common to run regular large-scale Python jobs on the cloud as part of production data pipelines. Modern workflow orchestration systems such as Prefect, Dagster, Airflow, and Argo all work well for running jobs on a regular cadence, but we often see groups struggle with complexity around cloud infrastructure and lack of scalability.

This repository contains a scalable data pipeline that runs regular jobs on the cloud with Coiled and Prefect. This approach is:

- Easy to deploy on the cloud

- Scalable across many cloud machines

- Cheap to run on ephemeral VMs and a small always-on VM

You can run this pipeline yourself, either locally or on the cloud.

Make sure you have a Prefect Cloud account and have authenticated your local machine.

Clone this repository and install dependencies:

    git clone https://github.com/coiled/etl-tpch
    cd etl-tpch
    mamba env create -f environment.yml
    mamba activate etl-tpch

In your terminal run:

    python workflow.py  # Run data pipeline locally

If you haven't already, create a Coiled account and follow the setup guide at coiled.io/start.

Next, adjust the `pipeline/config.yml` configuration file by setting `local: false` and `data-dir` to an S3 bucket where you would like data assets to be stored.
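As a rough illustration of the two settings described above, the following sketch checks a configuration that has already been loaded into a Python dictionary. The function name `check_cloud_config` is hypothetical, the example bucket path is made up, and the expectation that `data-dir` is written as an `s3://` URL is an assumption rather than something this repository states.

```python
def check_cloud_config(config: dict) -> list[str]:
    """Return a list of problems that would block a cloud run (empty if none)."""
    problems = []

    # For a cloud run, `local` must be explicitly set to false.
    if config.get("local") is not False:
        problems.append("`local` should be false for a cloud run")

    # Assumption: an S3 location is written as an s3:// URL.
    data_dir = str(config.get("data-dir", ""))
    if not data_dir.startswith("s3://"):
        problems.append("`data-dir` should point to an S3 bucket (s3://...)")

    return problems


# Hypothetical values, as they might look after loading pipeline/config.yml.
example = {"local": False, "data-dir": "s3://my-bucket/etl-tpch"}
print(check_cloud_config(example))
```

An empty list means both settings look ready for a cloud run; any other result names the setting to revisit.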

Set the `AWS_ACCESS_KEY_ID` / `AWS_SECRET_ACCESS_KEY` and `AWS_REGION` environment variables, which enable access to your S3 bucket and specify the region the bucket is in, respectively.

    export AWS_ACCESS_KEY_ID=...
    export AWS_SECRET_ACCESS_KEY=...
    export AWS_REGION=...

Finally, in your terminal run:

    # --vm-type: small, always-on VM
    # --region:  same region as data
    # -f:        include pipeline files
    # -e:        S3 bucket access
    coiled prefect serve \
      --vm-type t3.medium \
      --region $AWS_REGION \
      -f dashboard.py -f pipeline \
      -e AWS_ACCESS_KEY_ID=$AWS_ACCESS_KEY_ID \
      -e AWS_SECRET_ACCESS_KEY=$AWS_SECRET_ACCESS_KEY \
      workflow.py
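Because the command above reads the three AWS variables from your shell, it can help to confirm they are set before deploying. The following is a minimal sketch of such a check, not part of this repository; the helper name `missing_variables` is hypothetical.

```python
import os

# Variables the deployment command above expects to find in the environment.
REQUIRED = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_REGION")


def missing_variables(env=os.environ):
    """Return the names of required variables that are unset or empty."""
    return [name for name in REQUIRED if not env.get(name)]


missing = missing_variables()
if missing:
    print("Set these before running `coiled prefect serve`:", ", ".join(missing))
else:
    print("AWS environment variables are set.")
```

The check only reports variable names and never prints their values, so it is safe to run in a shared terminal or CI log.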