This is a C++ statistical library that provides an interface similar to the Pandas package in Python.

A DataFrame can have one index column and many data columns of any built-in or user-defined type.

You could slice the data in many different ways. You could join, merge, group-by the data. You could run various statistical, summarization and ML algorithms on the data. You could add your custom algorithms easily. You could multi-column sort, custom pick and delete the data. And more …
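As an illustration of multi-column sorting over column-oriented data, one common technique is to compute a single row permutation from the key columns and then apply it to every column. The functions below (sort_permutation, apply_permutation) are hypothetical and use only the standard library. They are a sketch of the general technique, not the library's sort() implementation.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <string>
#include <utility>
#include <vector>

// Hypothetical sketch: order rows by (key1 ascending, key2 descending) by
// sorting a vector of row indices instead of the columns themselves.
std::vector<std::size_t> sort_permutation(const std::vector<std::string> &key1,
                                          const std::vector<double> &key2) {
    assert(key1.size() == key2.size());
    std::vector<std::size_t> perm(key1.size());
    std::iota(perm.begin(), perm.end(), std::size_t{0});  // 0, 1, 2, ...
    std::stable_sort(perm.begin(), perm.end(), [&](std::size_t a, std::size_t b) {
        if (key1[a] != key1[b]) return key1[a] < key1[b];  // first key ascending
        return key2[a] > key2[b];                           // second key descending
    });
    return perm;
}

// Reorder a column of any type with the same permutation.
// Elements are moved into a new buffer that then replaces the column.
template <typename T>
void apply_permutation(std::vector<T> &col, const std::vector<std::size_t> &perm) {
    assert(col.size() == perm.size());
    std::vector<T> reordered;
    reordered.reserve(col.size());
    for (std::size_t i : perm) reordered.push_back(std::move(col[i]));
    col = std::move(reordered);
}
```

The comparisons happen once, on the index vector, and every column, whatever its element type, is then reordered by the same generic function.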

I have followed a few principles in this library:

- Support any type, either built-in or user-defined, without needing new code

- Never chase pointers à la linked lists, std::any, pointer to base, ..., including virtual function calls

- Have all column data in contiguous memory space. Also, be mindful of cache-line aliasing misses between multiple columns

- Never use more space than you need à la unions, std::variant, ...

- Avoid copying data as much as possible. Unfortunately, sometimes you have to

- Use multi-threading but only when it makes sense

- Do not attempt to protect the user against garbage in, garbage out
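The first few principles (any type without new code, no std::any or virtual calls, contiguous column data) can be illustrated with a small heterogeneous container. In this hypothetical sketch, HeteroColumns is not a library class: each element type T gets one static map from container instance to a std::vector<T>. This mirrors the "static containers" idea mentioned under Multithreading, but the details here are an assumption about the technique, not the library's code.

```cpp
#include <cassert>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical heterogeneous column store: for each element type T there is one
// static map from container instance to a contiguous std::vector<T>.
// Not thread-safe: the static maps are shared by every instance.
class HeteroColumns {
public:
    HeteroColumns() = default;
    HeteroColumns(const HeteroColumns &) = delete;             // instance addresses are keys,
    HeteroColumns &operator=(const HeteroColumns &) = delete;  // so copying is disabled here

    ~HeteroColumns() {
        // Remove this instance's vectors from every per-type map it touched.
        for (auto erase_fn : erase_fns_) erase_fn(this);
    }

    // Contiguous storage of T owned by this instance; created on first use.
    // Costs one hash lookup per call, but the data itself is never behind std::any.
    template <typename T>
    std::vector<T> &get() {
        auto &store = storage<T>();
        auto it = store.find(this);
        if (it == store.end()) {
            void (*erase_fn)(const HeteroColumns *) = [](const HeteroColumns *self) {
                HeteroColumns::storage<T>().erase(self);
            };
            erase_fns_.push_back(erase_fn);
            it = store.emplace(this, std::vector<T>{}).first;
        }
        return it->second;
    }

private:
    template <typename T>
    static std::unordered_map<const HeteroColumns *, std::vector<T>> &storage() {
        static std::unordered_map<const HeteroColumns *, std::vector<T>> map;
        return map;
    }

    std::vector<void (*)(const HeteroColumns *)> erase_fns_;
};
```

A new column type needs no new code: `hc.get<MyType>()` instantiates a fresh static map for MyType the first time it is used.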

Views

- You can slice the data frame and, instead of getting another data frame, you can opt to get a view. A view is a data frame that is a reference to a slice of the original data frame. So if you change the data in the view, the corresponding data in the original data frame will also be changed (and vice versa).
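The reference semantics of a view can be sketched as a non-owning pointer-and-length pair into a column owned elsewhere. ColumnView is hypothetical and far simpler than the library's views; it only shows why a write through the view shows up in the original data, and the reverse.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical view over the half-open row range [begin, end) of a column.
// It owns nothing: reads and writes go straight to the original vector.
template <typename T>
class ColumnView {
public:
    ColumnView(std::vector<T> &col, std::size_t begin, std::size_t end) {
        assert(begin <= end && end <= col.size());
        data_ = col.data() + begin;
        size_ = end - begin;
    }

    T &operator[](std::size_t i) {
        assert(i < size_);
        return data_[i];
    }
    std::size_t size() const { return size_; }

private:
    T *data_ = nullptr;
    std::size_t size_ = 0;
};
```

Because the view stores a raw pointer, growing the original vector can reallocate it and leave the view dangling, so a view should not outlive changes to the shape of the data it refers to.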

Multithreading

- DataFrame uses static containers to achieve type heterogeneity. By default, these static containers are unprotected. This is done by design, so by default there is no locking overhead. If you use DataFrame in a multithreaded program, you must provide a SpinLock defined in the ThreadGranularity.h file. DataFrame will use your SpinLock to protect the containers.

Please see the documentation, set_lock(), remove_lock(), and dataframe_tester.cc for a code example.

- In addition, instances of DataFrame are not thread-safe either. In other words, a single instance of DataFrame must not be used in multiple threads without protection, unless it is used read-only.

- At the same time, DataFrame utilizes multithreading internally in two different ways:

- Async Interface: There are asynchronous versions of some methods. For example, you have sort()/sort_async(), visit()/visit_async(), ... more. The latter versions return a std::future that could execute in parallel.

- DataFrame uses multiple threads, internally and unbeknown to the user, in some of its algorithms when appropriate. The user can control (or turn off) the multithreading by calling set_thread_level(), which sets the maximum number of threads to be used. The default is 0. The optimal number of threads is a function of the user's hardware/software environment and is usually obtained by trial and error. set_thread_level() and the threading level in general are static properties: once set, they apply to all instances.


- DateTime class included in this library is a very cool and handy object to manipulate date/time with nanosecond precision.
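The opt-in locking of static containers and the async interface described above can both be illustrated with the standard library alone. In this hypothetical sketch, SpinLock, SharedStore, and sort_async are stand-ins: set_lock()/remove_lock() and sort_async() borrow the names mentioned in the text, but their signatures and behavior here are assumptions, not the library's implementation.

```cpp
#include <algorithm>
#include <atomic>
#include <cassert>
#include <future>
#include <mutex>
#include <vector>

// Hypothetical spin lock (the library's own SpinLock lives in ThreadGranularity.h;
// its interface is not reproduced here). Satisfies BasicLockable for std::lock_guard.
class SpinLock {
public:
    void lock() noexcept {
        while (locked_.exchange(true, std::memory_order_acquire)) {
            // busy-wait until the holder releases the lock
        }
    }
    void unlock() noexcept { locked_.store(false, std::memory_order_release); }

private:
    std::atomic<bool> locked_{false};
};

// Hypothetical static container shared by all instances. No lock is installed by
// default, so single-threaded use pays no locking cost. Install the lock before
// any thread touches the container.
struct SharedStore {
    static inline SpinLock *lock_ = nullptr;
    static inline std::vector<int> data_;

    static void set_lock(SpinLock *sl) { lock_ = sl; }
    static void remove_lock() { lock_ = nullptr; }

    static void push(int v) {
        if (lock_ != nullptr) {
            std::lock_guard<SpinLock> guard(*lock_);
            data_.push_back(v);
        } else {
            data_.push_back(v);  // unprotected fast path
        }
    }
};

// Hypothetical async counterpart of a sort(): the sort runs on another thread
// and the caller waits on the returned std::future.
std::future<void> sort_async(std::vector<double> &v) {
    return std::async(std::launch::async, [&v] { std::sort(v.begin(), v.end()); });
}
```

The design choice is that safety is paid for only when asked for: a single-threaded program never installs a lock, while a multithreaded one installs it once, up front.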

DataFrame Test File

DataFrame Test File 2

Heterogeneous Vectors Test File

Date/Time Test File

mkdir [Debug | Release]

cd [Debug | Release]

cmake -DCMAKE_BUILD_TYPE=[Debug | Release] ..

make

make install

cd [Debug | Release]

make uninstall

If you are using Conan to manage your dependencies, merely add dataframe/x.y.z@ to your requires, where x.y.z is the release version you want to use. Conan will acquire DataFrame, build it from source on your computer, and provide CMake integration support for your projects. See the Conan docs for more information.

Sample conanfile.txt:

[requires]

dataframe/1.7.0@

[generators]

cmake

There is a test program, dataframe_performance, that should give you some sense of how this library performs. As a comparison, there is also a Python script, pandas_performance, that does exactly the same thing with Pandas.

dataframe_performance.cc uses the DataFrame async interface and is compiled with GCC using the -O3 flag.

pandas_performance.py is run with Python 3.7.

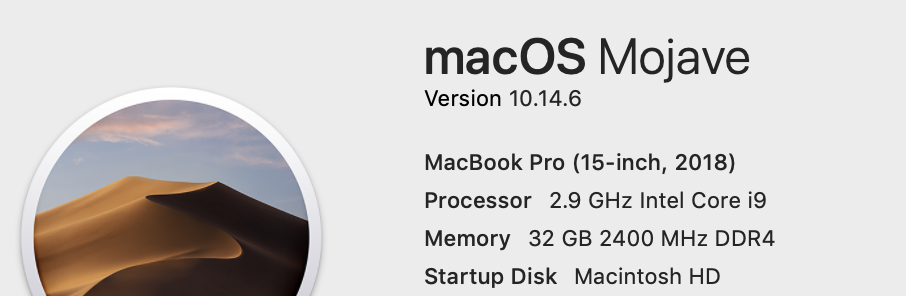

I ran both on my MacBook, doing the following:

- Generate ~1.6 billion second-resolution timestamps and load them into the DataFrame/Pandas as the index.

- Generate ~1.6 billion random numbers each for 3 columns with normal, log normal, and exponential distributions and load them into the DataFrame/Pandas.

- Calculate the mean of each of the 3 columns.
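The three benchmark steps above can be approximated, at a much smaller scale, with the standard library alone. This is a hypothetical sketch, not dataframe_performance.cc, and it uses no DataFrame API. The distribution parameters (mean 0 and standard deviation 1 for the normal, log-scale 0 and 1 for the log normal, rate 1 for the exponential), the seed, and the starting timestamp are assumptions, since the text does not state them.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <numeric>
#include <random>
#include <vector>

// Hypothetical, scaled-down version of the benchmark data.
struct BenchColumns {
    std::vector<std::int64_t> index;  // second-resolution timestamps (epoch seconds)
    std::vector<double> normal;
    std::vector<double> log_normal;
    std::vector<double> exponential;
};

// Steps 1 and 2: n consecutive timestamps plus three random columns.
BenchColumns generate(std::size_t n, std::int64_t start_sec, unsigned seed) {
    BenchColumns c;
    c.index.resize(n);
    std::iota(c.index.begin(), c.index.end(), start_sec);  // one row per second

    std::mt19937_64 gen(seed);
    std::normal_distribution<double> normal(0.0, 1.0);
    std::lognormal_distribution<double> log_normal(0.0, 1.0);
    std::exponential_distribution<double> exponential(1.0);

    c.normal.reserve(n);
    c.log_normal.reserve(n);
    c.exponential.reserve(n);
    for (std::size_t i = 0; i < n; ++i) {
        c.normal.push_back(normal(gen));
        c.log_normal.push_back(log_normal(gen));
        c.exponential.push_back(exponential(gen));
    }
    return c;
}

// Step 3: arithmetic mean of one column.
double mean(const std::vector<double> &col) {
    assert(!col.empty());
    return std::accumulate(col.begin(), col.end(), 0.0) / static_cast<double>(col.size());
}
```

Assuming 8-byte timestamps and doubles, four columns of ~1.6 billion rows come to roughly 1.6e9 × 4 × 8 bytes ≈ 51 GB, which gives a sense of the allocation volume involved at full scale.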

Result:

MacBook> time python pandas_performance.py

All memory allocations are done. Calculating means ...

real 17m18.916s

user 4m47.113s

sys 5m31.901s

MacBook>

MacBook>

MacBook> time ../bin/Linux.GCC64/dataframe_performance

All memory allocations are done. Calculating means ...

real 6m40.222s

user 2m54.362s

sys 2m14.951s

The interesting part:

- The Pandas script, I believe, is implemented entirely in NumPy, which is written in C.

- In the case of Pandas, allocating memory + random number generation takes almost the same amount of time as calculating the means.

- In the case of DataFrame, 85% of the time is spent in allocating memory + random number generation.

- You load data once, but calculate statistics many times. So DataFrame, in general, is about 8x faster than the parts of Pandas that are implemented in NumPy. I leave the parts of Pandas that are purely in Python to the imagination.
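The "about 8x" figure can be reconstructed from the wall-clock (real) times above, assuming Pandas splits its time exactly in half between setup and calculating the means, and DataFrame spends exactly 15% of its time on the means:

$$
\begin{aligned}
t_{\text{Pandas, means}} &\approx \tfrac{1}{2} \times 1038.9\,\text{s} \approx 519\,\text{s} \\
t_{\text{DataFrame, means}} &\approx 0.15 \times 400.2\,\text{s} \approx 60\,\text{s} \\
\frac{t_{\text{Pandas, means}}}{t_{\text{DataFrame, means}}} &\approx \frac{519}{60} \approx 8.7
\end{aligned}
$$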