Paper: Do different tracking tasks require different appearance models?

[ArXiv] [Project Page]

UniTrack is a simple and Unified framework for addressing multiple tracking tasks.

Being a fundamental problem in computer vision, tracking has been fragmented into a multitude of different experimental setups. As a consequence, the literature has fragmented too, and the novel approaches proposed by the community are now usually specialized to fit only one specific setup. To understand to what extent this specialization is actually necessary, we present UniTrack, a solution that addresses multiple different tracking tasks within the same framework. All tasks share the same appearance model. UniTrack:

- Does NOT need training on a specific tracking task.

- Shows competitive performance on six out of seven tracking tasks considered.

- Can be easily adapted to even more tasks.

- Can be used as an evaluation platform to test pre-trained self-supervised models.

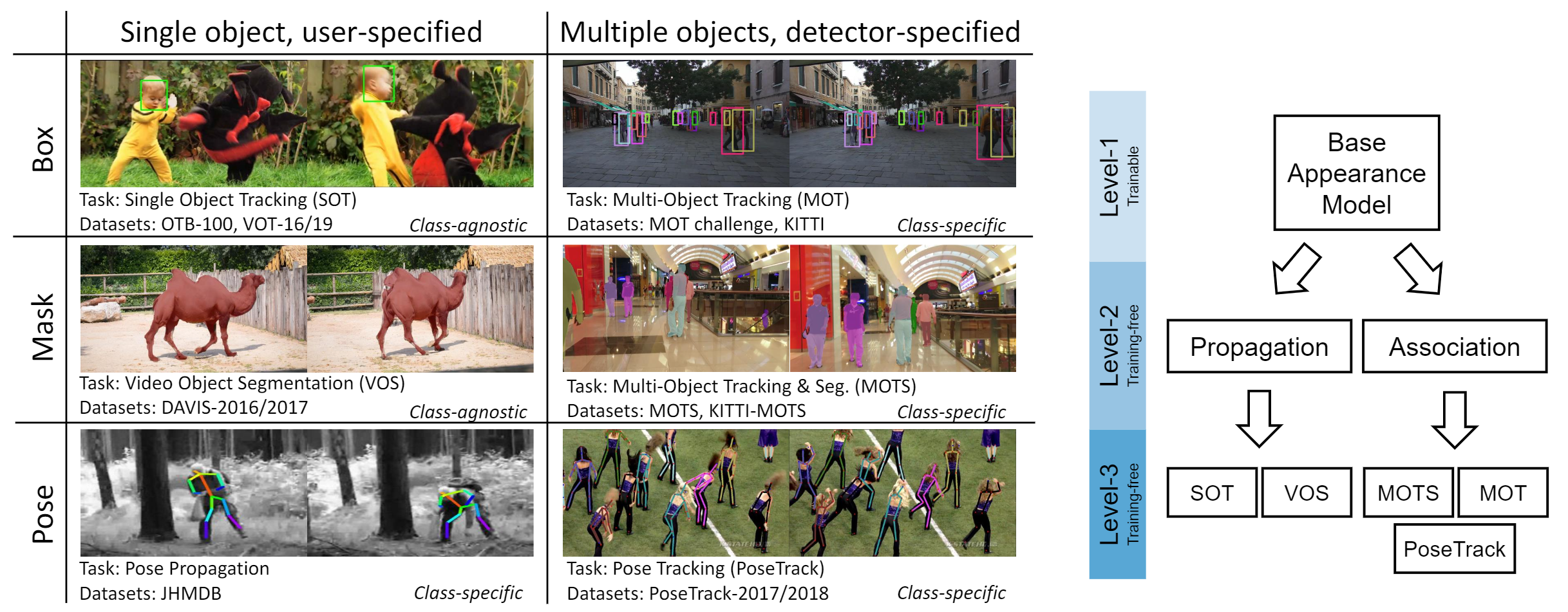

We classify existing tracking tasks along four axes: (1) Single or multiple targets; (2) Targets specified by users or by automatic detectors; (3) Observation formats (bounding box/mask/pose); (4) Class-agnostic or class-specific (e.g. humans/vehicles). We mainly experiment on 5 tasks: SOT, VOS, MOT, MOTS, and PoseTrack. Task setups are summarized in the figure above.

An appearance model is the only learnable component in UniTrack. It should provide a universal visual representation and is usually pre-trained on a large-scale dataset in a supervised or unsupervised manner. Typical examples include ImageNet pre-trained ResNets (supervised) and recent self-supervised models such as MoCo and SimCLR (unsupervised).

Propagation and Association are the two core primitives used in UniTrack to address a wide variety of tracking tasks (currently 7, but more can be added). Both use the features extracted by the pre-trained appearance model. For propagation, we adopt existing methods such as cross correlation, DCF, and mask propagation. For association, we employ a simple algorithm as in JDE and develop a novel reconstruction-based similarity metric that allows comparing objects across shapes and sizes.
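To make the two primitives concrete, here is a minimal, dependency-free sketch. It is not the UniTrack implementation: the function names `propagate_xcorr` and `associate` are hypothetical, features are plain Python lists rather than tensors from a real appearance model, plain cosine similarity stands in for the reconstruction-based metric, and greedy matching stands in for the JDE-style assignment step.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm > 0 else 0.0


def propagate_xcorr(template, search):
    """Propagation sketch (assumed simplification of cross correlation).

    `template` and `search` are 2-D grids whose cells are feature vectors.
    The template is slid over the search grid and the top-left offset with
    the highest correlation score is returned together with that score.
    """
    th, tw = len(template), len(template[0])
    sh, sw = len(search), len(search[0])
    best_score, best_pos = float("-inf"), None
    for r in range(sh - th + 1):
        for c in range(sw - tw + 1):
            score = sum(
                a * b
                for i in range(th)
                for j in range(tw)
                for a, b in zip(template[i][j], search[r + i][c + j])
            )
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_pos, best_score


def associate(track_feats, det_feats, min_sim=0.5):
    """Association sketch: greedy matching on cosine similarity.

    Pairs are visited from most to least similar; a pair is accepted if
    neither side is already matched and its similarity is >= `min_sim`
    (a hypothetical threshold). Returns sorted (track_idx, det_idx) pairs.
    """
    pairs = sorted(
        (
            (cosine(t, d), ti, di)
            for ti, t in enumerate(track_feats)
            for di, d in enumerate(det_feats)
        ),
        reverse=True,
    )
    matches, used_tracks, used_dets = [], set(), set()
    for sim, ti, di in pairs:
        if sim < min_sim:
            break  # remaining pairs are even less similar
        if ti in used_tracks or di in used_dets:
            continue
        matches.append((ti, di))
        used_tracks.add(ti)
        used_dets.add(di)
    return sorted(matches)
```

In this sketch, propagation answers "where did this target go in the next frame?", while association answers "which existing track does each new detection belong to?". Detections that match no track (and tracks that match no detection) are simply left out of the returned list.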

- Installation: Please check out docs/INSTALL.md

- Data preparation: Please check out docs/DATA.md

- Appearance model preparation: Please check out docs/MODELZOO.md

- Run evaluation on all datasets: Please check out docs/RUN.md

Below we show results of UniTrack with a simple ImageNet pre-trained ResNet-18 as the appearance model. More results (other tasks/datasets, more visualizations) can be found in RESULTS.md.

Single Object Tracking (SOT) on OTB-2015

Video Object Segmentation (VOS) on DAVIS-2017 val split

Multiple Object Tracking (MOT) on MOT-16 test set private detector track (Detections from FairMOT)

Multiple Object Tracking and Segmentation (MOTS) on MOTS challenge test set (Detections from COSTA_st)

Pose Tracking on PoseTrack-2018 val split (Detections from LightTrack)

Single Object Tracking (SOT) on OTB-2015

| Method | SiamFC | SiamRPN | SiamRPN++ | UDT* | UDT+* | LUDT* | LUDT+* | UniTrack_XCorr* | UniTrack_DCF* |
|---|---|---|---|---|---|---|---|---|---|
| AUC | 58.2 | 63.7 | 69.6 | 59.4 | 63.2 | 60.2 | 63.9 | 55.5 | 61.8 |

\* indicates non-supervised methods

Video Object Segmentation (VOS) on DAVIS-2017 val split

| Method | SiamMask | FeelVOS | STM | Colorization* | TimeCycle* | UVC* | CRW* | VFS* | UniTrack* |
|---|---|---|---|---|---|---|---|---|---|
| J-mean | 54.3 | 63.7 | 79.2 | 34.6 | 40.1 | 56.7 | 64.8 | 66.5 | 58.4 |

\* indicates non-supervised methods

Multiple Object Tracking (MOT) on MOT-16 test set private detector track

| Method | POI | DeepSORT-2 | JDE | CTrack | TubeTK | TraDes | CSTrack | FairMOT* | UniTrack* |
|---|---|---|---|---|---|---|---|---|---|
| IDF-1 | 65.1 | 62.2 | 55.8 | 57.2 | 62.2 | 64.7 | 71.8 | 72.8 | 71.8 |
| IDs | 805 | 781 | 1544 | 1897 | 1236 | 1144 | 1071 | 1074 | 683 |
| MOTA | 66.1 | 61.4 | 64.4 | 67.6 | 66.9 | 70.1 | 70.7 | 74.9 | 74.7 |

\* indicates methods using the same detections

Multiple Object Tracking and Segmentation (MOTS) on MOTS challenge test set

| Method | TrackRCNN | SORTS | PointTrack | GMPHD | COSTA_st* | UniTrack* |
|---|---|---|---|---|---|---|
| IDF-1 | 42.7 | 57.3 | 42.9 | 65.6 | 70.3 | 67.2 |
| IDs | 567 | 577 | 868 | 566 | 421 | 622 |
| sMOTA | 40.6 | 55.0 | 62.3 | 69.0 | 70.2 | 68.9 |

\* indicates methods using the same detections

Pose Tracking on PoseTrack-2018 val split

| Method | MDPN | OpenSVAI | Miracle | KeyTrack | LightTrack* | UniTrack* |
|---|---|---|---|---|---|---|
| IDF-1 | - | - | - | - | 52.2 | 73.2 |
| IDs | - | - | - | - | 3024 | 6760 |
| sMOTA | 50.6 | 62.4 | 64.0 | 66.6 | 64.8 | 63.5 |

\* indicates methods using the same detections

[2021.07.05]: Paper released on arXiv.

[2021.06.24]: Code released.

VideoWalk by Allan A. Jabri

SOT code by Zhipeng Zhang