This is a challenge I set myself: use open source components to demonstrate how great the cloud, and specifically Azure, is.

The challenge was to use Machine Learning to churn through A LOT of data and do something with it. I wanted it to be cool, but also a good demonstration of how Cloud Services and OSS can work together to reduce the time you need to get an MVP up and running.

- Cosmos DB

- Azure Service Bus

- Azure Web Apps (for Containers)

- Azure Storage

- Azure Kubernetes Service

- Node

- React

- Python

- Express

- TensorFlow and Keras

For the data to ingest and test, I used the COCO unlabelled images.

You can use any images you like; just put them in a storage account, in a container called images.

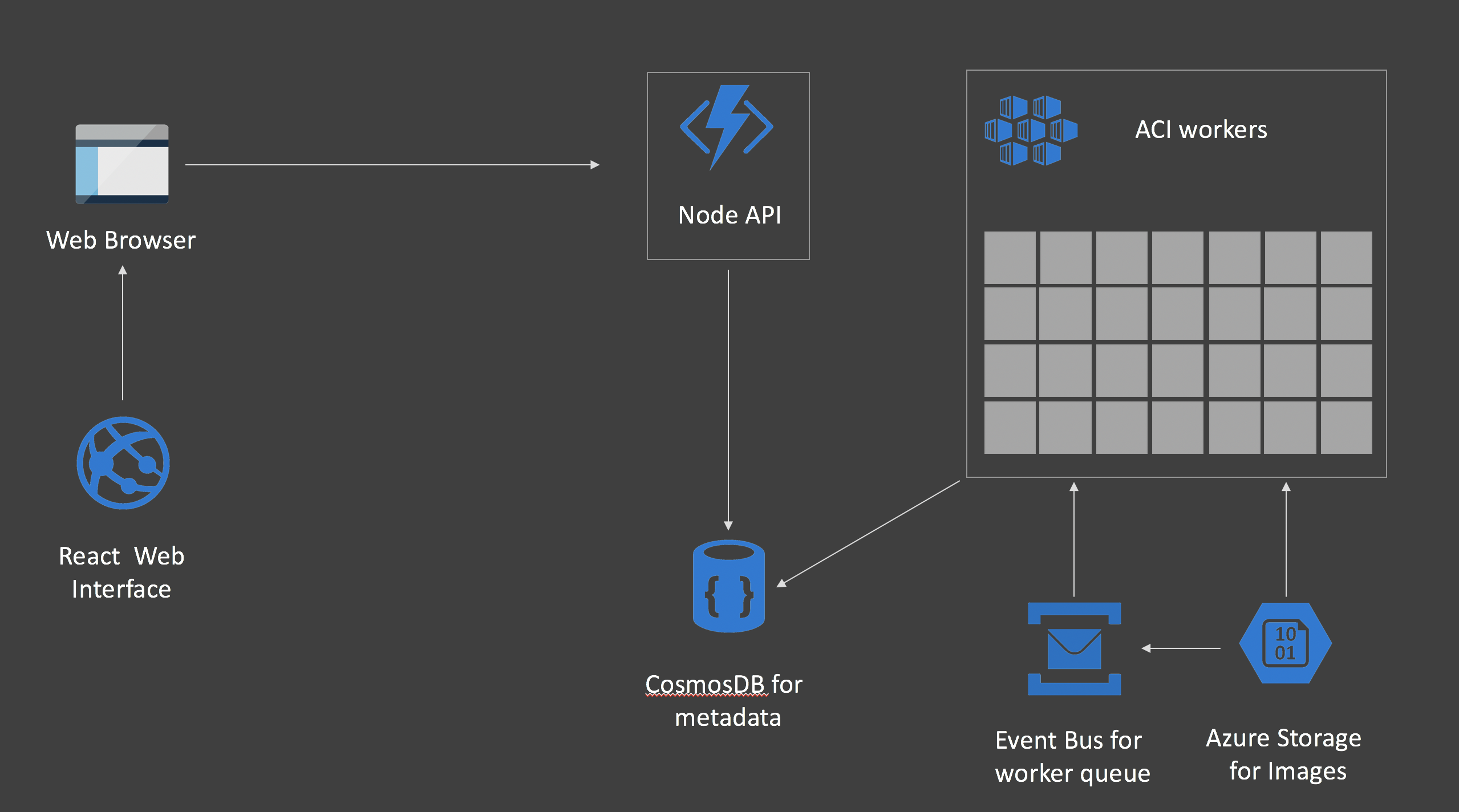

This is a demo running on Azure, so it is tightly coupled to some of our services. In infer.py, for example, we poll the Azure Service Bus queue for new messages (in this case, the relative path of an image stored on Azure Blob Storage). When one arrives, the infer worker pulls the message off the queue and processes it. As we scale the pods up using ACI/Virtual Kubelet, the queueing mechanism is the key to making sure we have consistency.
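The poll-and-process loop described above can be sketched roughly as follows. This is not the code from infer.py: the standard library `queue.Queue` stands in for the cloud queue, and `run_worker`, `classify` and `results` are hypothetical names used only for illustration.

```python
import queue
from typing import Callable, Dict, List


def run_worker(
    messages: "queue.Queue[str]",
    classify: Callable[[str], List[str]],
    results: Dict[str, List[str]],
    idle_timeout: float = 0.1,
) -> int:
    """Drain the queue, classifying one image path per message.

    Returns the number of messages processed. Each message is handed to
    exactly one worker, which is what keeps results consistent when many
    worker pods run in parallel.
    """
    processed = 0
    while True:
        try:
            # Blocks until a message arrives or the timeout expires.
            blob_path = messages.get(timeout=idle_timeout)
        except queue.Empty:
            break  # Nothing left; a long-running worker would keep polling.
        # Assumption: results are keyed by the image's relative path.
        results[blob_path] = classify(blob_path)
        messages.task_done()
        processed += 1
    return processed


if __name__ == "__main__":
    q: "queue.Queue[str]" = queue.Queue()
    for path in ["images/0001.jpg", "images/0002.jpg"]:
        q.put(path)
    store: Dict[str, List[str]] = {}
    # Placeholder classifier; the demo's worker runs a TensorFlow/Keras model.
    count = run_worker(q, lambda p: ["unknown"], store)
    print(count, sorted(store))
```

Because every worker only ever takes the next message from the shared queue, adding more pods increases throughput without two workers processing the same image.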

You will need the following Azure Services:

- Service Bus

- CosmosDB

- Azure Blob Storage

Place the following information in the envs_template.sh and infer_secrets_template.yml files. The connection details are used both by the inference workers on Kubernetes and by the script that injects data into the Service Bus (so that the inference workers actually have something to do).

You will need to capture:

- SERVICEBUS_NAMESPACE=name of your Service Bus

- SERVICEBUS_ACCESSKEY_NAME=RootManageSharedAccessKey (It's usually this)

- SERVICEBUS_ACCESSKEY=Your Service Bus Access Key

The database could also be a MongoDB service running on Kubernetes; the ingest and API stages use the pymongo and mongoose drivers.

You will need to capture:

- MONGODB=CosmosDB Connection String

- STORAGE_ACCOUNT=Storage account name

- STORAGE_KEY=Storage account key
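As a rough sketch of how a script might consume the variables listed above, the snippet below checks that all of them are set and assembles a Service Bus connection string. The helper names `load_settings` and `servicebus_connection_string` are hypothetical, and it is an assumption that the demo's scripts use a connection string at all. The `servicebus.windows.net` suffix applies to the public Azure cloud.

```python
import os
from typing import Dict, Mapping

# Variable names taken from the lists above.
REQUIRED = (
    "SERVICEBUS_NAMESPACE",
    "SERVICEBUS_ACCESSKEY_NAME",
    "SERVICEBUS_ACCESSKEY",
    "MONGODB",
    "STORAGE_ACCOUNT",
    "STORAGE_KEY",
)


def load_settings(env: Mapping[str, str] = os.environ) -> Dict[str, str]:
    """Return the required settings, failing early if any are missing."""
    missing = [name for name in REQUIRED if not env.get(name)]
    if missing:
        raise KeyError("Missing environment variables: " + ", ".join(missing))
    return {name: env[name] for name in REQUIRED}


def servicebus_connection_string(settings: Mapping[str, str]) -> str:
    """Build a shared-access-key connection string (public Azure cloud)."""
    return (
        "Endpoint=sb://{SERVICEBUS_NAMESPACE}.servicebus.windows.net/;"
        "SharedAccessKeyName={SERVICEBUS_ACCESSKEY_NAME};"
        "SharedAccessKey={SERVICEBUS_ACCESSKEY}"
    ).format(**settings)
```

Failing early with the full list of missing names is friendlier than discovering a missing key halfway through a run.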

Follow the instructions here to get Virtual Kubelet (VK) on your cluster.

Edit the file infer_secrets_template.yml and add your Storage, Cosmos and Service Bus credentials.

    $ kubectl apply -f infer_secrets_template.yml
    $ kubectl apply -f infer.yml

You should now see a single worker sitting there (after a couple of minutes).

It won't be doing much, as nothing is in the Queue.

The script inject.py will need to have environment variables set, so edit the file envs_template.sh with your backing credentials, and source the environment.

And run the script...

    $ ./utils/inject.py

The script will read the list of files in your storage account and start pushing those onto the Queue.
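In outline, the injection step amounts to something like the sketch below. It is not the contents of inject.py: the list of blob names is passed in directly, `queue.Queue` stands in for the real queue, and the `inject` helper and the filter on image file extensions are assumptions made for illustration.

```python
import queue
from typing import Iterable

# Assumption: only files with these extensions are worth classifying.
IMAGE_SUFFIXES = (".jpg", ".jpeg", ".png")


def inject(blob_names: Iterable[str], messages: "queue.Queue[str]") -> int:
    """Push one message per image blob onto the queue; return how many."""
    sent = 0
    for name in blob_names:
        if not name.lower().endswith(IMAGE_SUFFIXES):
            continue  # Skip anything that does not look like an image.
        messages.put(name)  # The message body is the blob's relative path.
        sent += 1
    return sent


if __name__ == "__main__":
    q: "queue.Queue[str]" = queue.Queue()
    print(inject(["0001.jpg", "notes.txt", "0002.PNG"], q))
```

Sending only the relative path keeps messages small; the worker fetches the image itself from storage.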

The worker will pull each image and then try to categorise it.

You can check this is working by looking at the pod CPU utilisation (it should be flat out at 200%):

    $ kubectl top pod

Now that the worker has been verified, it's time to set up the API and UI.

For the demo, I used WebApp for Containers for the API. To deploy it, spin up a WebApp instance using the container inklin/cocoapi. You will need to set the environment variable MONGODB to your CosmosDB connection string in the WebApp dashboard.

For the demo, I used WebApp for Containers for the Frontend too.

As this is a React application we have to inject environment variables at build time. To do this, edit the file .env.production and replace

REACT_APP_API_SERVER=https://api-osssumit.azurewebsites.net

with the location of the API server you installed in the previous step.
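If you would rather script that edit than do it by hand, a small helper along these lines would work. `set_env_value` is a hypothetical name, and the sketch assumes the file uses plain KEY=value lines.

```python
def set_env_value(text: str, key: str, value: str) -> str:
    """Return env-file text with `key` set to `value`.

    Existing KEY=... lines are rewritten in place; if the key is absent,
    a new line is appended.
    """
    prefix = key + "="
    lines = text.splitlines()
    replaced = False
    for i, line in enumerate(lines):
        if line.startswith(prefix):
            lines[i] = prefix + value
            replaced = True
    if not replaced:
        lines.append(prefix + value)
    return "\n".join(lines) + "\n"
```

To apply it, read .env.production, pass its contents through `set_env_value` with the key `REACT_APP_API_SERVER` and your own API URL, and write the result back before building the image.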

You will then need to build the React app (the Dockerfile takes care of it for you; just run docker build -t stats .), push the Frontend image to a container registry, and use that image as the UI.