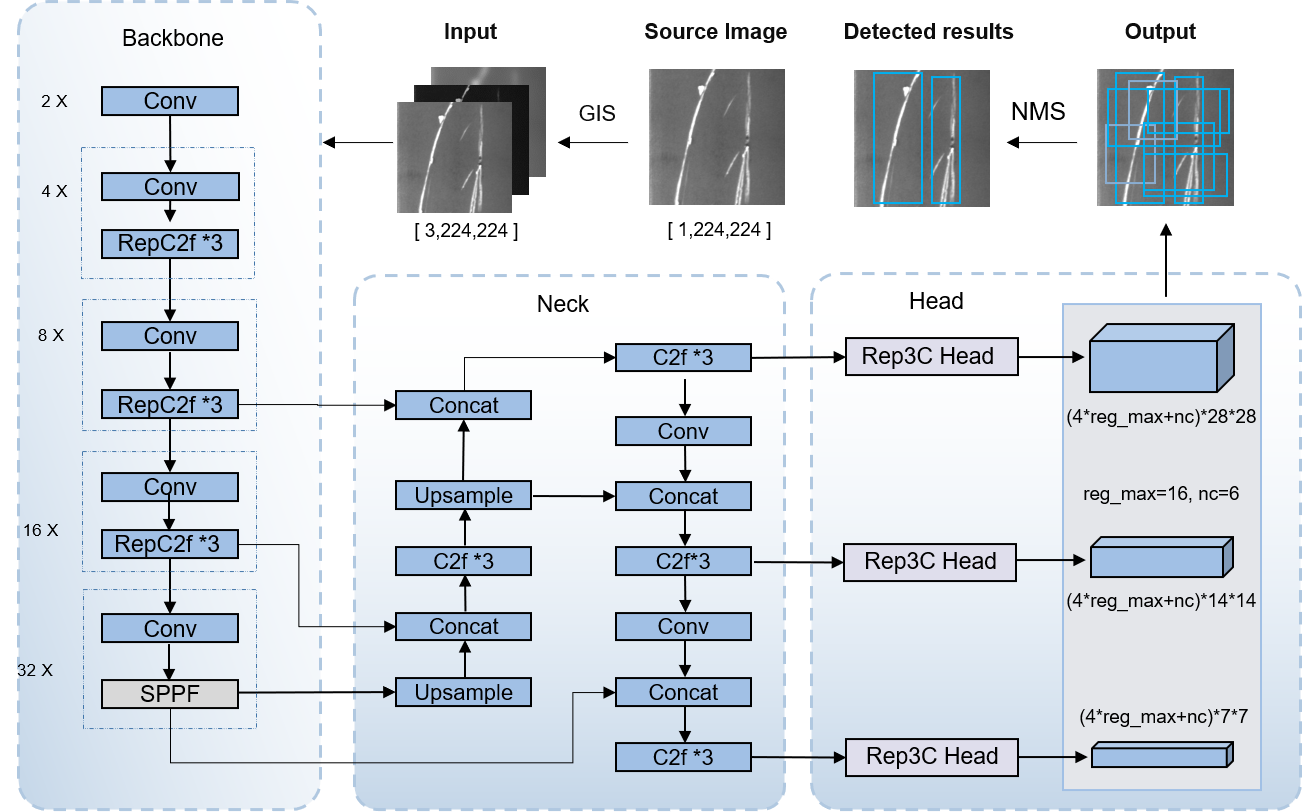

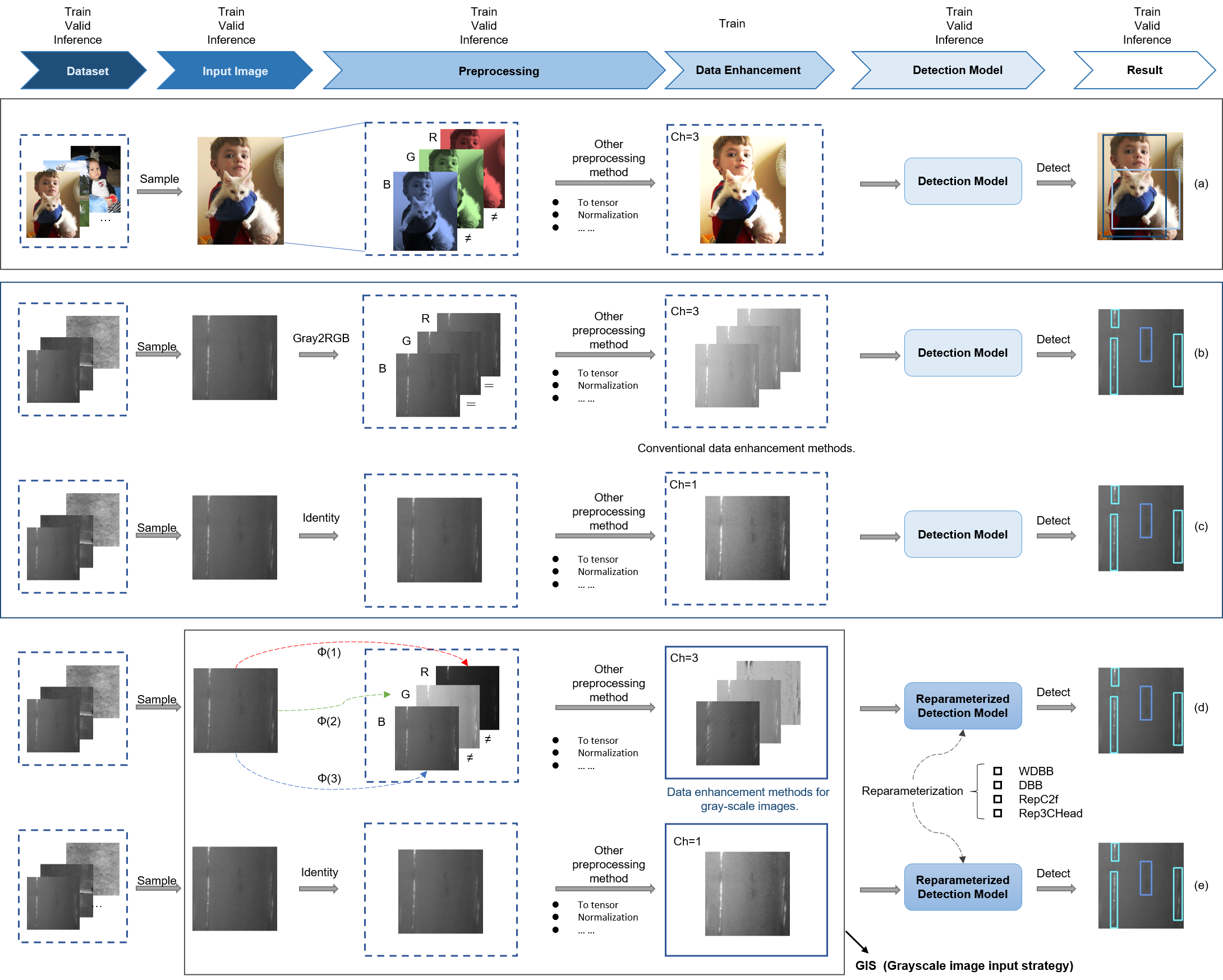

This paper proposes an enhanced object detection network, YOLO-MIF, for addressing the challenges of object detection in gray-scale images. The network integrates multiple multi-information fusion strategies to improve the YOLOv8 network. The paper first introduces a technique for creating pseudo multi-channel gray-scale images to increase the network's channel information and alleviate potential image noise and defocus blur issues. Subsequently, by using network structure reparameterization techniques, the detection performance of the network is improved without increasing the inference time. Additionally, a novel decoupled detection head is introduced to enhance the model's expressive power when dealing with gray-scale images. The algorithm is evaluated on two open-source gray-scale image detection datasets (NEU-DET and FLIR-ADAS). The results show that at the same speed, the algorithm outperforms YOLOv8 by 2.1% and Faster R-CNN by 4.8% in balancing detection efficiency and effectiveness.

- YOLO-MIF: An object detection network designed for gray-scale images

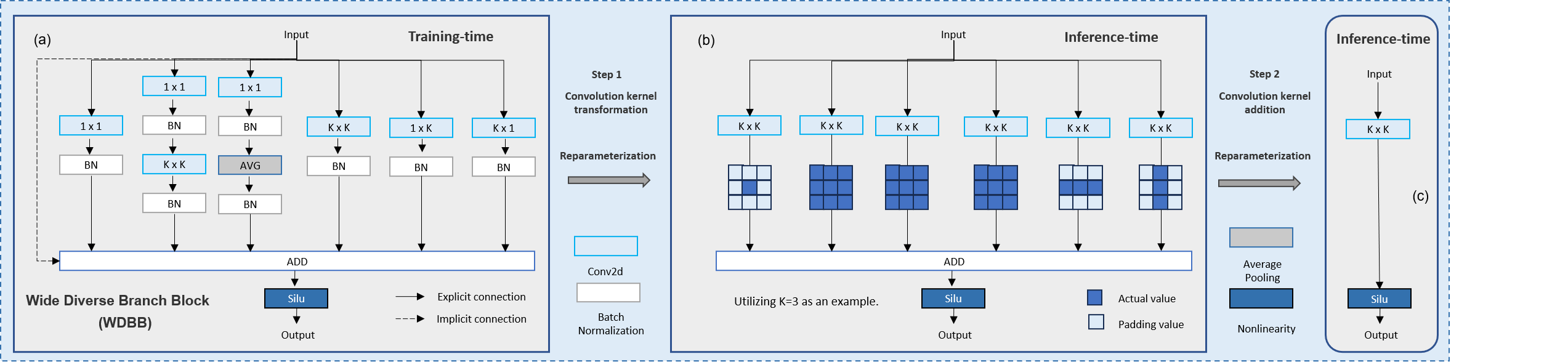

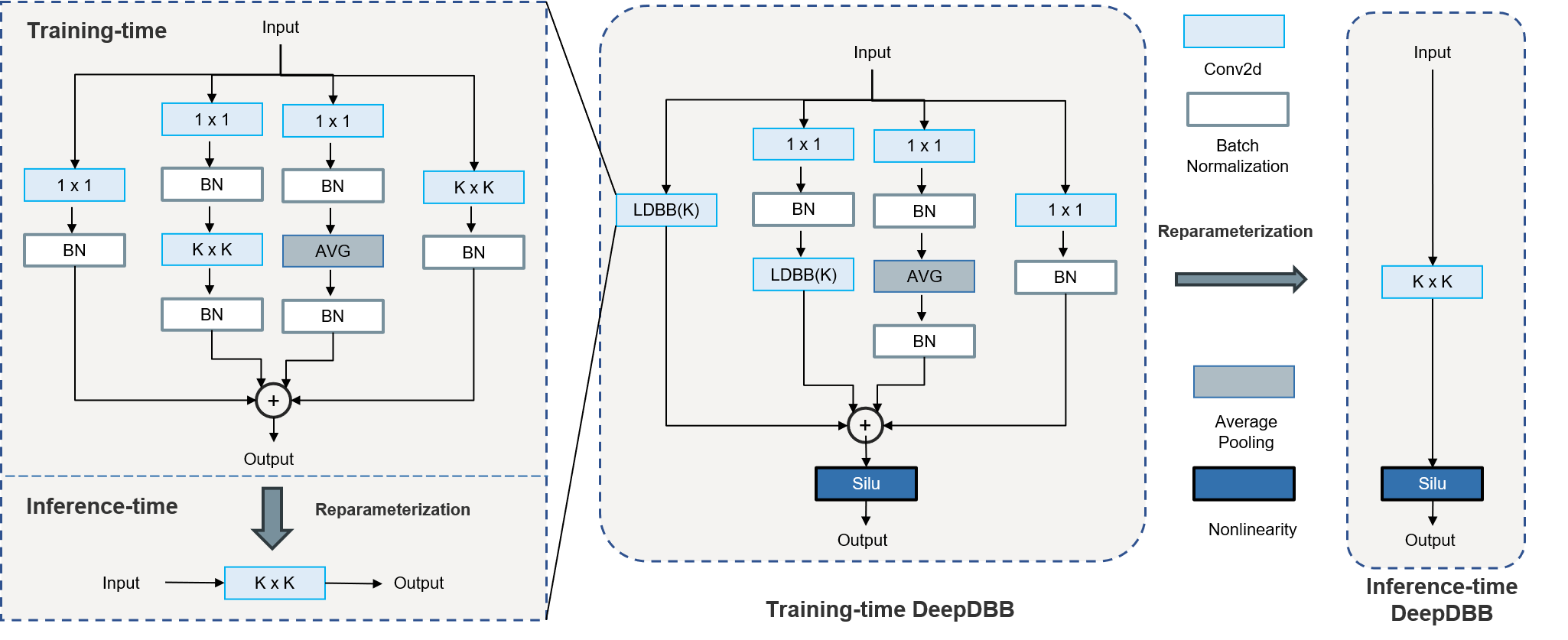

- New reparameterization modules: WDBB, RepC2f

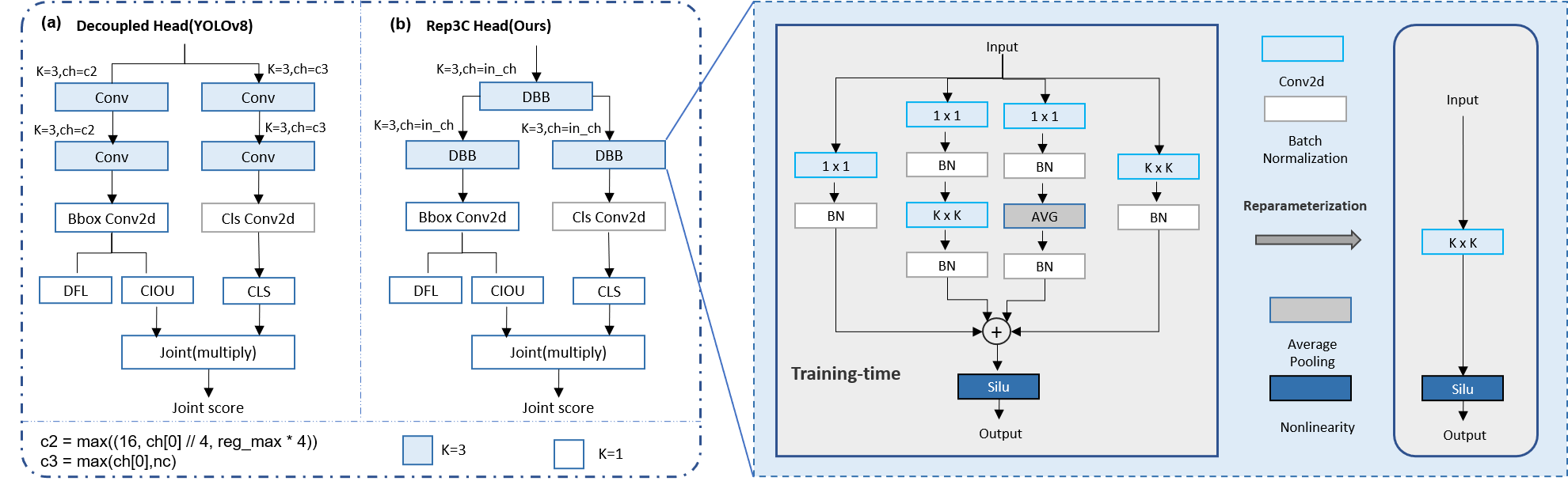

- Rep3C Head

- GIS: Input strategy for gray-scale images

- uint8: 'Gray' Single-channel 8-bit gray-scale image.

- uint16: 'Gray16bit' Single-channel 16-bit gray-scale image.

- uint8: 'SimOTM' 'SimOTMBBS' Single-channel 8-bit gray-scale image TO Three-channel 8-bit gray-scale image.

- uint8: 'BGR' Three-channel 8-bit color image.

- [YOLO-MIF: Improved YOLOv8 with Multi-Information Fusion for Object Detection in Gray-Scale Images]

Install

Pip install the ultralytics package including all requirements in a Python>=3.7 environment with PyTorch>=1.7.

pip install -r requirements.txtUsage

- NEU-DET

python train_NEU-DET-RepDC.py

- FLIR-ADAS

python train_FLIR_ADAS-16-RepDCHead.py

Correspondence between Paper and Code

- RIR=True + SimOTMBBS = GIS

- SimOTM yields better results but reduces speed, while the SimOTMBBS used in this paper almost does not reduce speed. If readers need, SimOTM will be open-sourced separately on arXiv without further journal submissions. Original paper and details can be found at: Link

- Function.cpp contains CUDA and C++ (CPU) implementations

- Code related to GIS can be found in ultralytics/yolo/data/base.py

- Code related to NEU-DET can be found in train_NEU-DET-RepDC.py

parser.add_argument('--use_rir', action='store_true', default=False, help='RIR: random_interpolation_resize ')

parser.add_argument('--use_simotm', type=str, choices=['Gray2BGR', 'SimOTM', 'SimOTMBBS','Gray'], default='SimOTMBBS', help='simotm')- Reparameterization Modules

- Code related to WDBB can be found in ultralytics/nn/modules/rep_block.py

['DiverseBranchBlock','DeepACBlockDBB','WideDiverseBranchBlock','DeepDiverseBranchBlock','ACBlockDBB','ACBlock']

# WideDiverseBranchBlock corresponds to WDBB mentioned in the paper, other modules need further experimentation and verification-

DeepDBB (experimental and theoretical details not explained in the paper)

-

Code related to RepC2f can be found in ultralytics/nn/modules/block.py

'C2f_ACDBB', 'C2f_DeepACDBB', 'C2f_DeepDBB', 'C2f_DeepACDBBMix', 'C2f_DBB', 'C2f_ACNET', 'C2f_WDBB'

# C2f_WDBB in the code corresponds to RepC2f in the paper, details about C2f_DeepDBB will be used in the next paper. Others need further experimentation and verification- Code related to Rep3C Head can be found in ultralytics/nn/modules/head.py

'Detect', 'Segment', 'Pose', 'Classify', 'RTDETRDecoder','DetectDBB','DetectACDBB','DetectAC','DetectDeepDBB',\

'DetectDeepACDBB' , 'Detect_Efficient','DetectSingleDBB','Detect2AC2DBB',\

'Detect2DBB2AC','Detect2DBBAC','Detect2ACDBB','Detect_Efficient3DBB','Detect_Efficient3DBBR'

# Detect_Efficient3DBB in the code corresponds to Rep3C Head in the paper, some modules have been validated effectively but not included in the paper yet. Others need further experimentation and verification- [Chinese Interpretation of YOLO-MIF](Chinese Interpretation Link) [TODO: Will be written and updated later if needed]

- Video Tutorial and Secondary Innovation Solutions for YOLO-MIF [TODO: Detailed tutorial in text-based PPT format]

- Secondary Innovation Solutions [The last page of the PPT tutorial provides some secondary innovation solutions. TODO: Will be written and updated later if needed]

Yolo-Mif: Improved Yolov8 with Multi-Information Fusion for Object Detection in Gray-Scale Images

https://doi.org/10.2139/ssrn.4767026

- Codebase used for overall framework: YOLOv8

- Reparameterization reference code by Ding Xiaohan: DiverseBranchBlock

- Some modules reference from Devil Mask's open-source repository

- YOLOv7

- Albumentations Data Augmentation Library

- Reparameterization validation code references from Handwritten AI's reparameterization course

Thank you for your interest and support in this project. The authors strive to provide the best quality and service, but there is still much room for improvement. If you encounter any issues or have any suggestions, please let us know. Furthermore, this project is currently maintained by the author personally, so there may be some oversights and errors. If you find any issues, feel free to provide feedback and suggestions.

Other open-source projects are being organized and released gradually. Please check the author's homepage for downloads in the future. Homepage

- Added README.md file (Completed)

- Detailed tutorials (TODO)

- Project environment setup (The entire project is based on YOLOv8 version as of November 29, 2023, configuration referenced in README-YOLOv8.md file and requirements.txt)

- Explanation of folder correspondences (Consistent with YOLOv8, hyperparameters unchanged) (TODO: Detailed explanation)

- Summary of secondary innovation points and code implementation (TODO)

- Paper illustrations:

- Principle diagrams, network structure diagrams, flowcharts: PPT (Personal choice, can also use Visio, Edraw, AI, etc.)

- Experimental comparisons: Orgin (Matlab, Python, R, Excel all applicable)