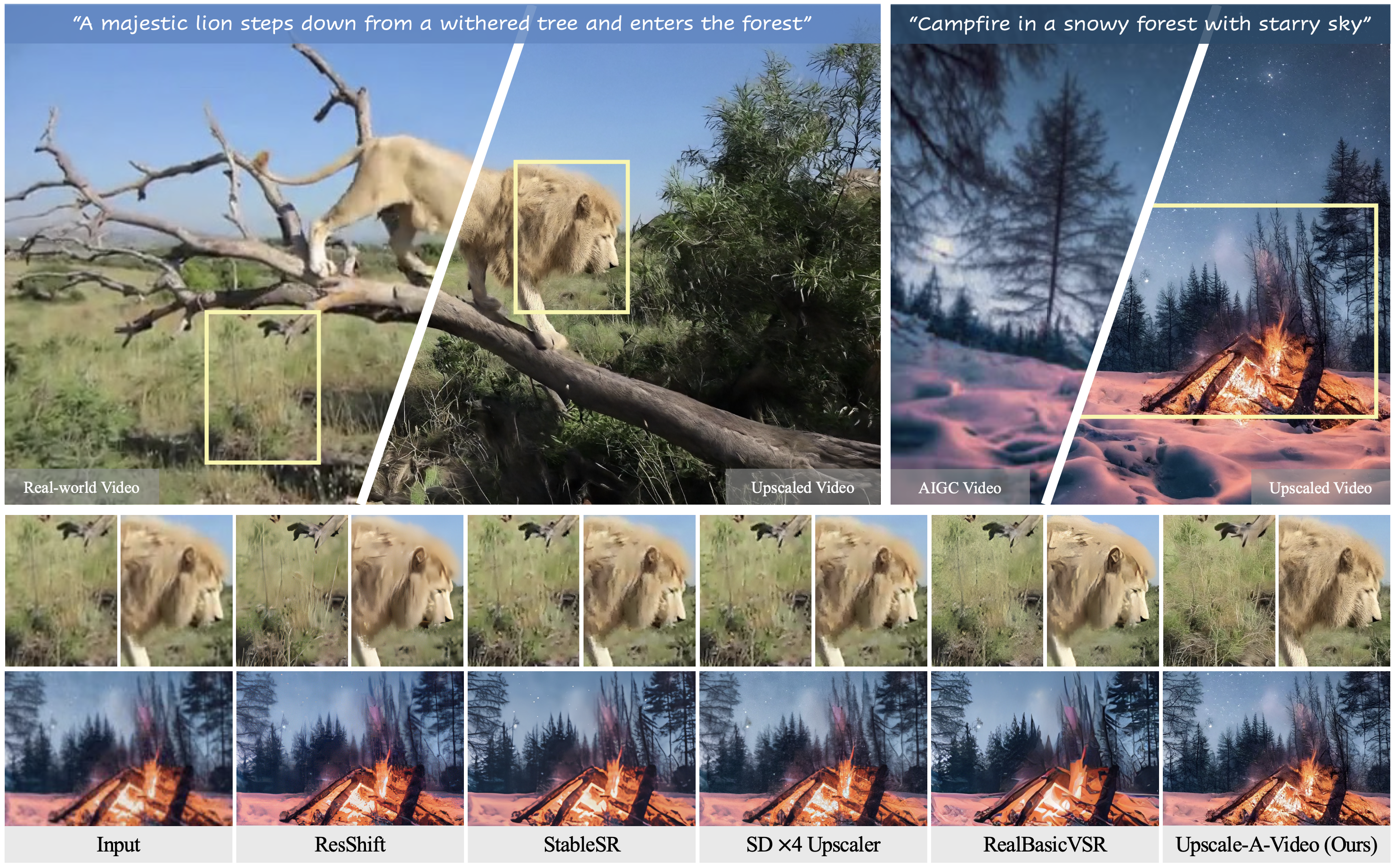

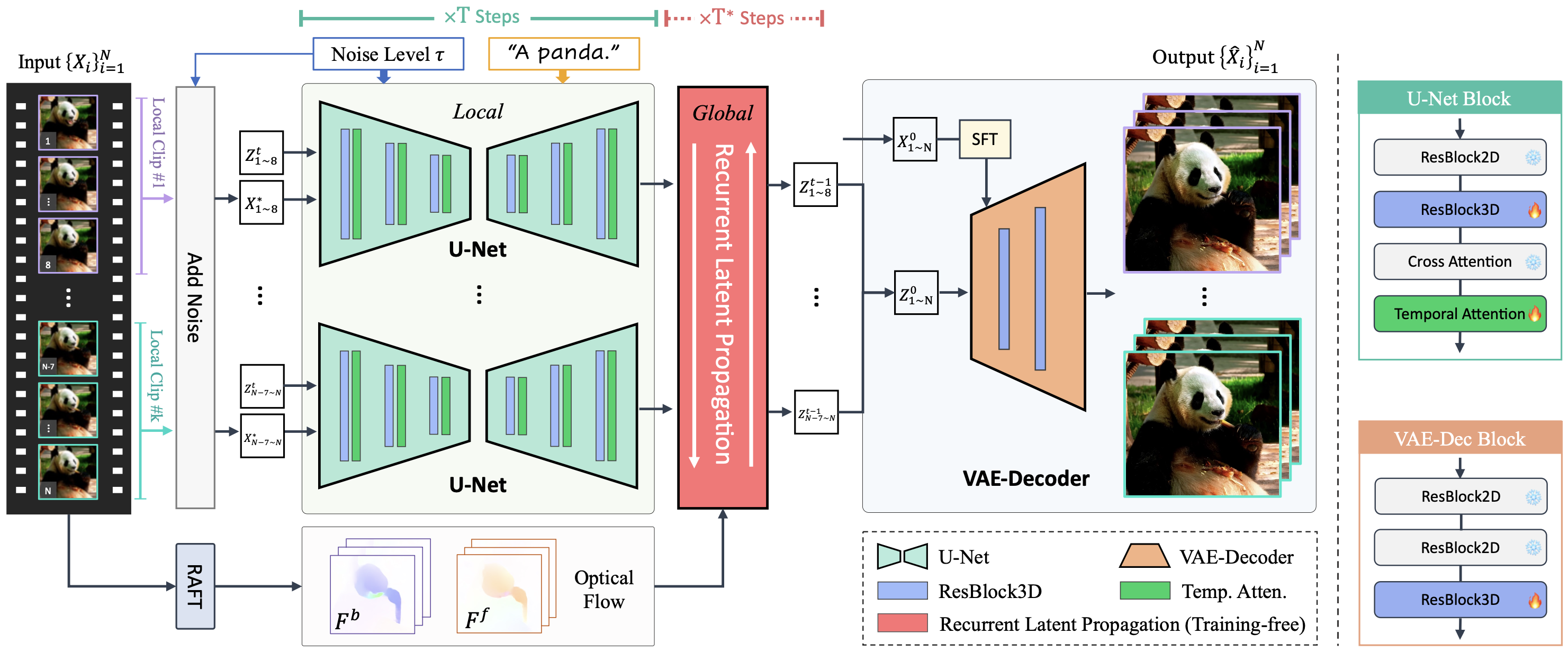

Upscale-A-Video is a diffusion-based model that upscales videos, taking a low-resolution video and text prompts as inputs.

📖 For more visual results, go check out our project page.

- [2024.09] Inference code is released.

- [2024.02] YouHQ dataset is made publicly available.

- [2023.12] This repo is created.


Clone Repo

```shell
git clone https://github.com/sczhou/Upscale-A-Video.git
cd Upscale-A-Video
```

Create Conda Environment and Install Dependencies

```shell
# create new conda env
conda create -n UAV python=3.9 -y
conda activate UAV

# install python dependencies
pip install -r requirements.txt
```


Download Models

(a) Download the pretrained models and configs from Google Drive and put them under the `pretrained_models/upscale_a_video` folder. The `pretrained_models` directory structure should be arranged as:

```
├── pretrained_models
│   ├── upscale_a_video
│   │   ├── low_res_scheduler
│   │   ├── ...
│   │   ├── propagator
│   │   ├── ...
│   │   ├── scheduler
│   │   ├── ...
│   │   ├── text_encoder
│   │   ├── ...
│   │   ├── tokenizer
│   │   ├── ...
│   │   ├── unet
│   │   ├── ...
│   │   ├── vae
│   │   ├── ...
```

(b) (Optional) LLaVA can be downloaded automatically when `--use_llava` is set to `True`, for users with access to Hugging Face.
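Before running inference, it can help to confirm that the downloaded checkpoints landed in the right place. The following is a minimal sketch, not part of the repository: the helper name `missing_model_dirs` is hypothetical, and it only checks the subfolder names shown in the tree (the `...` entries, i.e. the files inside each folder, are not verified).

```python
from pathlib import Path

# Subfolder names taken from the directory tree above; the files inside
# each folder (shown as "..." in the tree) are not checked.
EXPECTED_SUBDIRS = (
    "low_res_scheduler",
    "propagator",
    "scheduler",
    "text_encoder",
    "tokenizer",
    "unet",
    "vae",
)


def missing_model_dirs(root="pretrained_models"):
    """Return the expected subfolders that are absent under root/upscale_a_video."""
    model_dir = Path(root) / "upscale_a_video"
    return [name for name in EXPECTED_SUBDIRS if not (model_dir / name).is_dir()]


if __name__ == "__main__":
    missing = missing_model_dirs()
    if missing:
        print("Missing folders:", ", ".join(missing))
    else:
        print("pretrained_models/upscale_a_video has all expected subfolders.")
```

Run it from the repository root; an empty result means only that the folders exist, not that their contents are complete.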

The --input_path can be either the path to a single video or a folder containing multiple videos.
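To illustrate the file-or-folder behavior, here is a minimal sketch of how such a path could be expanded into a list of videos. It is not the inference script's own code: the helper `collect_videos` is hypothetical, and the accepted extensions in `VIDEO_EXTS` are an assumption that may differ from what `inference_upscale_a_video.py` actually accepts.

```python
from pathlib import Path

# Assumption: extensions treated as videos when a folder is given.
# The real inference script may accept a different set.
VIDEO_EXTS = {".mp4", ".mov", ".avi", ".mkv"}


def collect_videos(input_path):
    """Expand a single-video path or a folder path into a sorted list of videos."""
    path = Path(input_path)
    if path.is_file():
        # A single file is passed through as-is.
        return [path]
    if path.is_dir():
        # Only regular files whose (lowercased) suffix looks like a video.
        return sorted(
            p for p in path.iterdir()
            if p.is_file() and p.suffix.lower() in VIDEO_EXTS
        )
    raise FileNotFoundError(f"No such file or folder: {input_path}")
```

For example, `collect_videos("./inputs")` would list the bundled example clips, while `collect_videos("./inputs/aigc_1.mp4")` returns just that one file.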

We provide several examples in the inputs folder.

Run the following commands to try it out:

```shell
## AIGC videos
python inference_upscale_a_video.py \
-i ./inputs/aigc_1.mp4 -o ./results -n 150 -g 6 -s 30 -p 24,26,28

python inference_upscale_a_video.py \
-i ./inputs/aigc_2.mp4 -o ./results -n 150 -g 6 -s 30 -p 24,26,28

python inference_upscale_a_video.py \
-i ./inputs/aigc_3.mp4 -o ./results -n 150 -g 6 -s 30 -p 20,22,24

## old videos/movies/animations
python inference_upscale_a_video.py \
-i ./inputs/old_video_1.mp4 -o ./results -n 150 -g 9 -s 30

python inference_upscale_a_video.py \
-i ./inputs/old_movie_1.mp4 -o ./results -n 100 -g 5 -s 20 -p 17,18,19

python inference_upscale_a_video.py \
-i ./inputs/old_movie_2.mp4 -o ./results -n 120 -g 6 -s 30 -p 8,10,12

python inference_upscale_a_video.py \
-i ./inputs/old_animation_1.mp4 -o ./results -n 120 -g 6 -s 20 --use_video_vae
```

If you notice any color discrepancies between the output and the input, you can set `--color_fix` to `"AdaIn"` or `"Wavelet"`. By default, it is set to `"None"`.
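To decide which color-fix mode suits a given clip, one option is to render it once per mode and compare. The sketch below only uses the documented `-i`, `-o`, and `--color_fix` options; the helper names and the per-mode output folders (`./results/color_fix_<mode>`) are hypothetical choices made here so the runs are kept apart. Other options from the examples (such as `-n`, `-g`, `-s`, `-p`) can be appended to each command as needed.

```python
import shlex
import subprocess
import sys

# Values documented for --color_fix; "None" is the default.
COLOR_FIX_MODES = ("None", "AdaIn", "Wavelet")


def color_fix_commands(video, out_root="./results"):
    """Build one inference command per --color_fix mode.

    Each mode gets its own (hypothetical) subfolder of out_root so the
    outputs of different modes are kept separate for comparison.
    """
    return [
        [
            sys.executable, "inference_upscale_a_video.py",
            "-i", video,
            "-o", f"{out_root}/color_fix_{mode}",
            "--color_fix", mode,
        ]
        for mode in COLOR_FIX_MODES
    ]


def run_color_fix_sweep(video, dry_run=True):
    """Print each command; execute them only when dry_run is False."""
    for cmd in color_fix_commands(video):
        print(shlex.join(cmd))
        if not dry_run:
            subprocess.run(cmd, check=True)


if __name__ == "__main__":
    run_color_fix_sweep("./inputs/old_video_1.mp4")
```

Using `sys.executable` runs the script with the same interpreter as the active `UAV` environment, and the default `dry_run=True` lets you review the commands before launching three full inference runs.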

The datasets are hosted on Google Drive:

| Dataset | Link | Description |
|---|---|---|
| YouHQ-Train | Google Drive | 38,576 videos for training, each of which has around 32 frames. |
| YouHQ40-Test | Google Drive | 40 video clips for evaluation, each of which has around 32 frames. |
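As a rough guide for storage and data-loading planning, the per-clip frame counts in the table give the following approximate totals. This is only an estimate, since clip lengths are stated as "around" 32 frames:

$$
\underbrace{38{,}576 \times 32 \approx 1.23 \times 10^{6}}_{\text{YouHQ-Train frames}}, \qquad \underbrace{40 \times 32 \approx 1{,}280}_{\text{YouHQ40-Test frames}}
$$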

If you find our repo useful for your research, please consider citing our paper:

```bibtex
@inproceedings{zhou2024upscaleavideo,
   title={{Upscale-A-Video}: Temporal-Consistent Diffusion Model for Real-World Video Super-Resolution},
   author={Zhou, Shangchen and Yang, Peiqing and Wang, Jianyi and Luo, Yihang and Loy, Chen Change},
   booktitle={CVPR},
   year={2024}
}
```

This project is licensed under NTU S-Lab License 1.0. Redistribution and use should follow this license.

If you have any questions, please feel free to reach us at shangchenzhou@gmail.com or peiqingyang99@outlook.com.