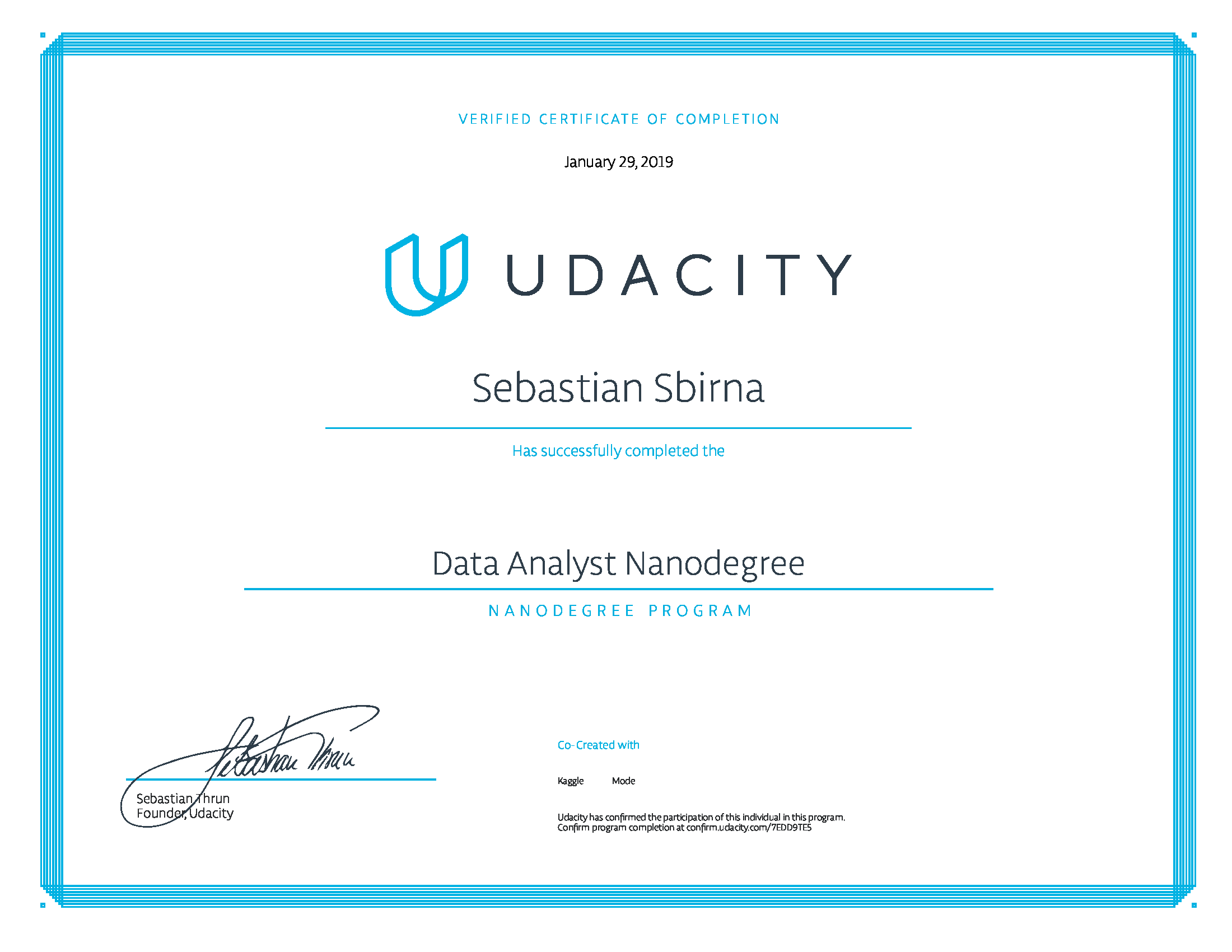

A collection of all the module projects completed during the Udacity Data Analyst Nanodegree:

Project 5: Data Exploration of the performance of globally-selected 15/16-year-old students in Mathematics, Reading and Science Literacy, based on the results of the PISA 2012 test

PISA is a survey of students' skills and knowledge as they approach the end of compulsory education. It is not a conventional school test. Rather than examining how well students have learned the school curriculum, it looks at how well prepared they are for life beyond school.

Around 510,000 students in 65 economies took part in the PISA 2012 assessment of reading, mathematics and science representing about 28 million 15-year-olds globally. Of those economies, 44 took part in an assessment of creative problem solving and 18 in an assessment of financial literacy.

After looking through the dataset dictionary to find out what each column represents, I settled on a number of leads to explore:

- We are interested in finding out how students from individual countries perform in Math, Reading and Science literacy.

- Since we can see the countries' average literacy patterns in different subjects, we are also curious about which countries the "geniuses" come from, meaning which countries have students with exceptionally high literacy scores.

- Lastly, we would like to find out whether students whose parents have different cultural backgrounds show any difference in average scores compared with students raised in a homogeneous family background.
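The first two questions above boil down to a per-country aggregation and a per-country count above a cutoff. The following is only a minimal sketch in plain Python, not the notebook's actual code: the record layout, the sample values, and the `threshold` of 650 are all hypothetical choices made for illustration.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical records: (country, math, reading, science). Values are made up.
records = [
    ("A", 500.0, 510.0, 520.0),
    ("A", 540.0, 530.0, 550.0),
    ("B", 450.0, 470.0, 460.0),
    ("B", 710.0, 690.0, 700.0),
]


def country_means(rows):
    """Average score per subject (math, reading, science) for each country."""
    grouped = defaultdict(list)
    for country, *scores in rows:
        grouped[country].append(scores)
    return {
        country: tuple(mean(column) for column in zip(*score_rows))
        for country, score_rows in grouped.items()
    }


def top_performers(rows, threshold):
    """Count students per country who score at or above the threshold in every subject."""
    counts = defaultdict(int)
    for country, *scores in rows:
        if min(scores) >= threshold:
            counts[country] += 1
    return dict(counts)


means = country_means(records)
high = top_performers(records, threshold=650.0)  # 650 is an arbitrary cutoff
```

With the real dataset the same idea would more naturally be expressed as a pandas `groupby`, and the cutoff for "exceptionally high" would need to be justified from the score distribution.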

After completing this project, I gained skills in:

- Supplementing statistics with visualizations to create an understanding of data

- Choosing appropriate plots, limits, transformations, and aesthetics to explore a dataset, allowing people to understand distributions of variables and relationships between features

- Using design principles to create effective visualizations for communicating findings to an audience

Project: Data Exploration of the performance of globally-selected 15/16-year-old students in Mathematics, Reading and Science Literacy, based on the results of the PISA 2012 test

Real-world data rarely comes clean. Using Python and its libraries, we need to gather data from a variety of sources and in a variety of formats, assess its quality and tidiness, and then clean it. This process is known as data wrangling.

The dataset that I have wrangled (and analyzed and visualized) is the tweet archive of Twitter user @dog_rates, also known as WeRateDogs. WeRateDogs is a Twitter account that rates people's dogs with a humorous comment about the dog. These ratings almost always have a denominator of 10. The numerators, though? Almost always greater than 10. 11/10, 12/10, 13/10, etc. Why? Because "they're good dogs Brent". WeRateDogs has over 7.5 million followers and has received international media coverage.

WeRateDogs downloaded their Twitter archive for Udacity students exclusively to use in this project. This archive contains basic tweet data (tweet ID, timestamp, text, etc.) for all 5000+ of their tweets as they stood on August 1, 2017.
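Because the ratings live inside the tweet text, one typical cleaning step is to pull the numerator and denominator out with a regular expression. The sketch below is an illustration under assumptions, not the project's actual code: the pattern, the `extract_rating` helper, and the example tweets are all made up here.

```python
import re

# Matches "numerator/denominator", allowing a decimal numerator such as 13.5/10.
RATING_PATTERN = re.compile(r"(\d+(?:\.\d+)?)/(\d+)")


def extract_rating(text):
    """Return (numerator, denominator) from the first rating-like match, or None."""
    match = RATING_PATTERN.search(text)
    if match is None:
        return None
    return float(match.group(1)), int(match.group(2))


# Made-up example tweets, not taken from the archive.
examples = [
    "This is Rex. He loves naps. 12/10",
    "Meet Luna. 13.5/10 would pet again",
    "No rating in this one",
]
ratings = [extract_rating(tweet) for tweet in examples]
```

A pattern like this takes the first fraction it finds, so text such as "24/7" would be misread as a rating. Catching cases like that is part of the assessing step.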

After completing the project, I understood the entire process of:

- Data wrangling, which consists of:

  - Gathering data

  - Assessing data

  - Cleaning data

- Storing, analyzing, and visualizing my wrangled data

- Reporting on:

  - my data wrangling steps and efforts

  - my data analyses and visualizations

A/B tests are very commonly performed by data analysts and data scientists to test changes on a web page by running an experiment where a control group sees the old version, while the experiment group sees the new version. A metric (in our case, the "converted" rate) is then chosen to measure the level of engagement from users in each group. These results are then used to judge whether one version is more effective than the other.

An e-commerce company has developed a new web page to try to increase the number of users who "convert," meaning the number of users who decide to pay for the company's product. This notebook analyzes the experiment's results to help the company decide whether to implement the new page, keep the old page, or run the experiment longer before making a decision.

This project will analyze the statistical likelihood of increasing conversion rates due to a newer version of the landing page, and will consolidate and validate its results by demonstrating the same statistical effects using three statistical methods: probability, hypothesis testing, and, lastly, logistic regression.
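As an illustration of the hypothesis-testing step, a one-sided two-proportion z-test can be sketched with the standard library alone. This is a generic textbook test, not necessarily the exact procedure used in the notebook. The function name and the counts in the usage line are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def two_proportion_z_test(conv_old, n_old, conv_new, n_new):
    """One-sided z-test of H0: p_new <= p_old against H1: p_new > p_old.

    Returns the z statistic and the p-value, using the pooled conversion
    rate to estimate the standard error under the null hypothesis.
    """
    p_old = conv_old / n_old
    p_new = conv_new / n_new
    pooled = (conv_old + conv_new) / (n_old + n_new)
    std_error = sqrt(pooled * (1 - pooled) * (1 / n_old + 1 / n_new))
    z = (p_new - p_old) / std_error
    p_value = 1 - NormalDist().cdf(z)
    return z, p_value


# Made-up counts: 100 of 1000 control users and 150 of 1000 experiment users converted.
z_stat, p_val = two_proportion_z_test(100, 1000, 150, 1000)
```

A small p-value would suggest that the new page converts better. A large one gives no evidence for switching, which is where the option of running the experiment longer comes in.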

After completing the project, I understood how to handle:

- Applying inferential statistics and probability to important, real-world scenarios

- Building supervised learning models

As medical clinics all over the world have experienced, patient arrival at scheduled appointments is not guaranteed. Moreover, medical personnel seem to find it hard to explain the reasons behind such behaviour.

This data analysis project aims to describe some possible factors behind patient absence from scheduled appointments in Brazil, using descriptive statistics on a dataset of 100,000+ medical appointments collected from medical clinics in many of Brazil's neighbourhoods.
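A typical descriptive statistic for this kind of question is the no-show rate within each group of a candidate factor. The sketch below uses plain Python and invented field names (`age_group`, `no_show`) with made-up rows. The project itself worked with pandas, where the same calculation would be a `groupby` followed by a mean.

```python
from collections import Counter


def no_show_rate_by(appointments, key):
    """Share of missed appointments within each group of the given field."""
    totals = Counter()
    missed = Counter()
    for appointment in appointments:
        totals[appointment[key]] += 1
        if appointment["no_show"]:
            missed[appointment[key]] += 1
    return {group: missed[group] / totals[group] for group in totals}


# Hypothetical rows; the field names are assumptions, not the dataset's columns.
appointments = [
    {"age_group": "adult", "no_show": False},
    {"age_group": "adult", "no_show": True},
    {"age_group": "child", "no_show": False},
    {"age_group": "child", "no_show": False},
]
rates = no_show_rate_by(appointments, "age_group")
```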

After completing the project, I developed my skills in:

- Knowing all the steps involved in a typical data analysis process

- Posing questions that can be answered with a given dataset and then answering those questions

- Knowing how to investigate problems in a dataset and wrangle the data into a usable format

- Having practice communicating the results of my analysis

- Being able to use vectorized operations in NumPy and pandas to speed up my data analysis code

- Being familiar with pandas' Series and DataFrame objects, which let us access data more conveniently

- Knowing how to use Matplotlib to produce plots showing my findings

This project presents several trends in the world's average temperature between 1750 and 2013, compared with the local yearly average temperature in Copenhagen, Denmark, where I currently live.
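Yearly averages fluctuate a lot, so long-term trends are usually easier to see after smoothing. One common choice, assumed here rather than taken from the project, is a simple moving average. The helper name, window size, and temperatures below are made up for illustration.

```python
def moving_average(values, window):
    """Simple moving average; returns one value per full window."""
    if window < 1 or window > len(values):
        raise ValueError("window must be between 1 and len(values)")
    return [
        sum(values[end - window + 1 : end + 1]) / window
        for end in range(window - 1, len(values))
    ]


# Made-up yearly averages in degrees Celsius, not real measurements.
yearly_temps = [8.0, 8.5, 9.0, 8.5, 9.5]
smoothed = moving_average(yearly_temps, window=3)
```

Applying the same window to both the global and the Copenhagen series keeps the two smoothed lines comparable.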