This repo contains all the code and test cases for the LLift project.

For verification, we provide a Docker image that runs the program on several cases (a superset of Table 4 in the paper).

- Set `OPENAI_API_KEY` in the `docker-compose` file with your own key.

- `docker-compose build app` to build the image

- `docker-compose up -d` to start the containers

- `docker exec -it llift_ae_container /bin/bash` to go to the container "app"

- `cd /app/app` to go to the project directory

- `/bin/bash run_min_expr.sh` to run the program

The program will run for a while (about 30 minutes) and then write all the results to the database.

Connect to the database with the following configuration:

"host": "127.0.0.1",

"database": "ubidb1",

"user": "ubiuser1",

"password": "ubitect",

"port": 5433

(The port is non-standard, but it is the one we use in the docker-compose file.)

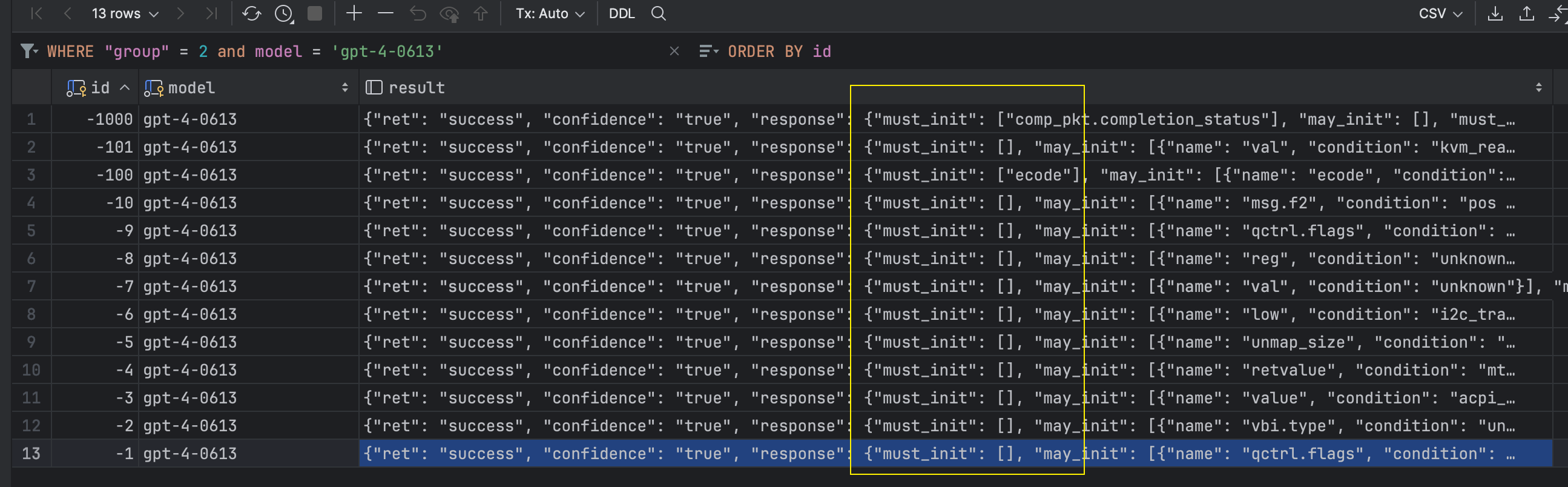

You can find the results in the table "sampling_res".
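As a rough sketch of reading the results programmatically, the snippet below reuses the connection settings above. It assumes the database is PostgreSQL and that the `psycopg2` package is installed; neither is stated in this README. `build_dsn` and `fetch_results` are hypothetical helper names, not part of the project.

```python
# Connection settings copied from the configuration above.
DB_CONFIG = {
    "host": "127.0.0.1",
    "database": "ubidb1",
    "user": "ubiuser1",
    "password": "ubitect",
    "port": 5433,
}


def build_dsn(cfg):
    """Render the settings as a libpq-style "key=value" connection string."""
    # libpq calls the database name "dbname", so rename that one key.
    key_map = {"database": "dbname"}
    return " ".join(f"{key_map.get(k, k)}={v}" for k, v in cfg.items())


def fetch_results(cfg=DB_CONFIG, limit=10):
    """Return up to `limit` rows from sampling_res (needs a running database)."""
    # Assumption: the backend is PostgreSQL and psycopg2 is available.
    import psycopg2

    conn = psycopg2.connect(build_dsn(cfg))
    try:
        with conn.cursor() as cur:
            cur.execute("SELECT * FROM sampling_res LIMIT %s", (limit,))
            return cur.fetchall()
    finally:
        conn.close()
```

Calling `fetch_results()` after the containers are up and the run has finished should return the first rows of the table; any SQL client pointed at the same settings works equally well.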

The expected result should be:

Focus on "must_init": it should be either empty or "something" (i.e., a variable).

Note: the results may differ slightly due to the randomness of GPT-4.

Use `python run.py --id <n> --group <m>` to run a single case, where `n` is the case number and `m` is the group number. The group numbers are:

- 20: TP cases of UBITect, used for testing (bug-50)

- 3: selected cases for comparison (Cmp-40)

- 11: random-1000

- 2: a small subset of "20", for quick test

For Cmp-40, the ids from -412 to -400 are 13 real bugs, and the ids from -626 to -600 are 27 false positives from UBITect.
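The group and id rules above can be captured in a small helper, for example when scripting several single-case runs. This is only a sketch: `build_command` and `cmp40_kind` are hypothetical names, and the command is built as an argument list (suitable for `subprocess.run`) rather than executed here.

```python
# Group numbers and their meaning, as listed above.
GROUPS = {
    20: "TP cases of UBITect (bug-50)",
    3: "selected cases for comparison (Cmp-40)",
    11: "random-1000",
    2: "small subset of group 20",
}


def build_command(case_id, group):
    """Build the argument list for running one case with run.py."""
    if group not in GROUPS:
        raise ValueError(f"unknown group: {group}")
    return ["python", "run.py", "--id", str(case_id), "--group", str(group)]


def cmp40_kind(case_id):
    """Classify a Cmp-40 id by the ranges given above, or return None."""
    if -412 <= case_id <= -400:
        return "real bug"
    if -626 <= case_id <= -600:
        return "false positive"
    return None
```

For instance, `build_command(-400, 3)` yields the arguments for one of the real-bug cases in Cmp-40, and `cmp40_kind(-600)` reports it as a false positive.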