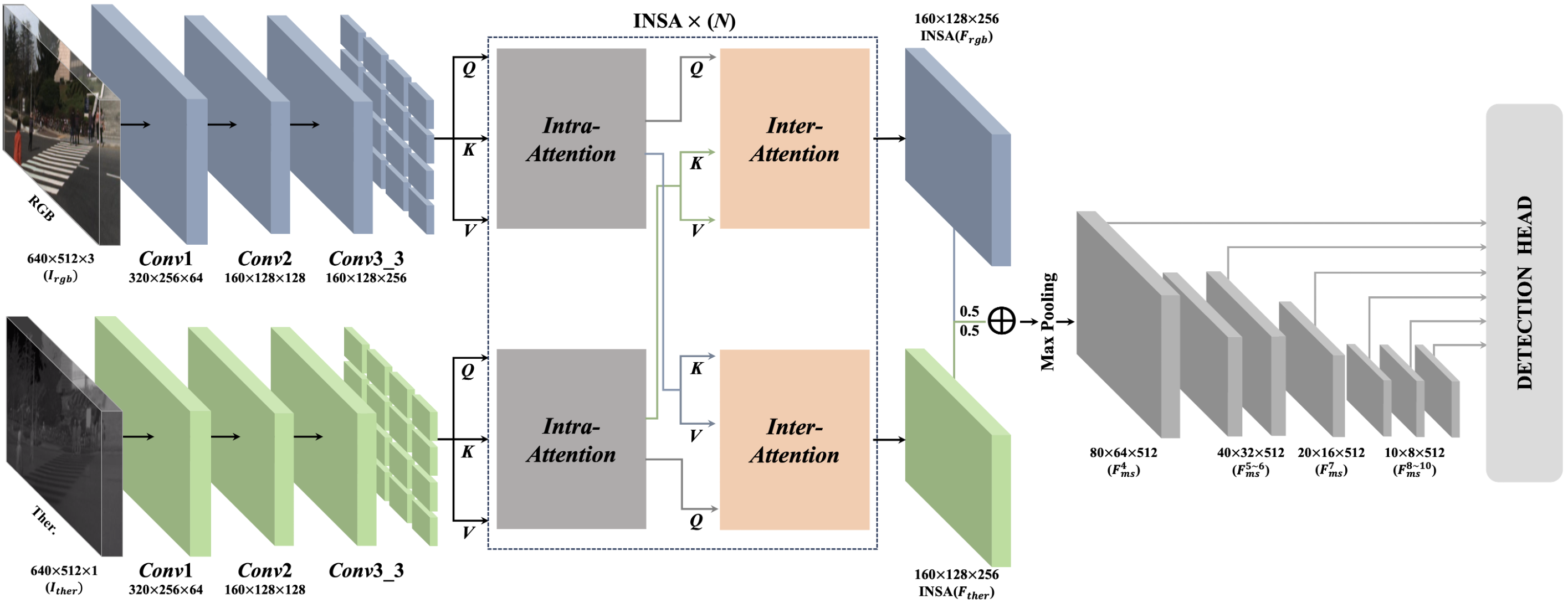

INSANet: INtra-INter Spectral Attention Network for Effective Feature Fusion of Multispectral Pedestrian Detection

Official PyTorch implementation of INSANet: INtra-INter Spectral Attention Network for Effective Feature Fusion of Multispectral Pedestrian Detection

Authors: Sangin Lee, Taejoo Kim, Jeongmin Shin, Namil Kim, Yukyung Choi

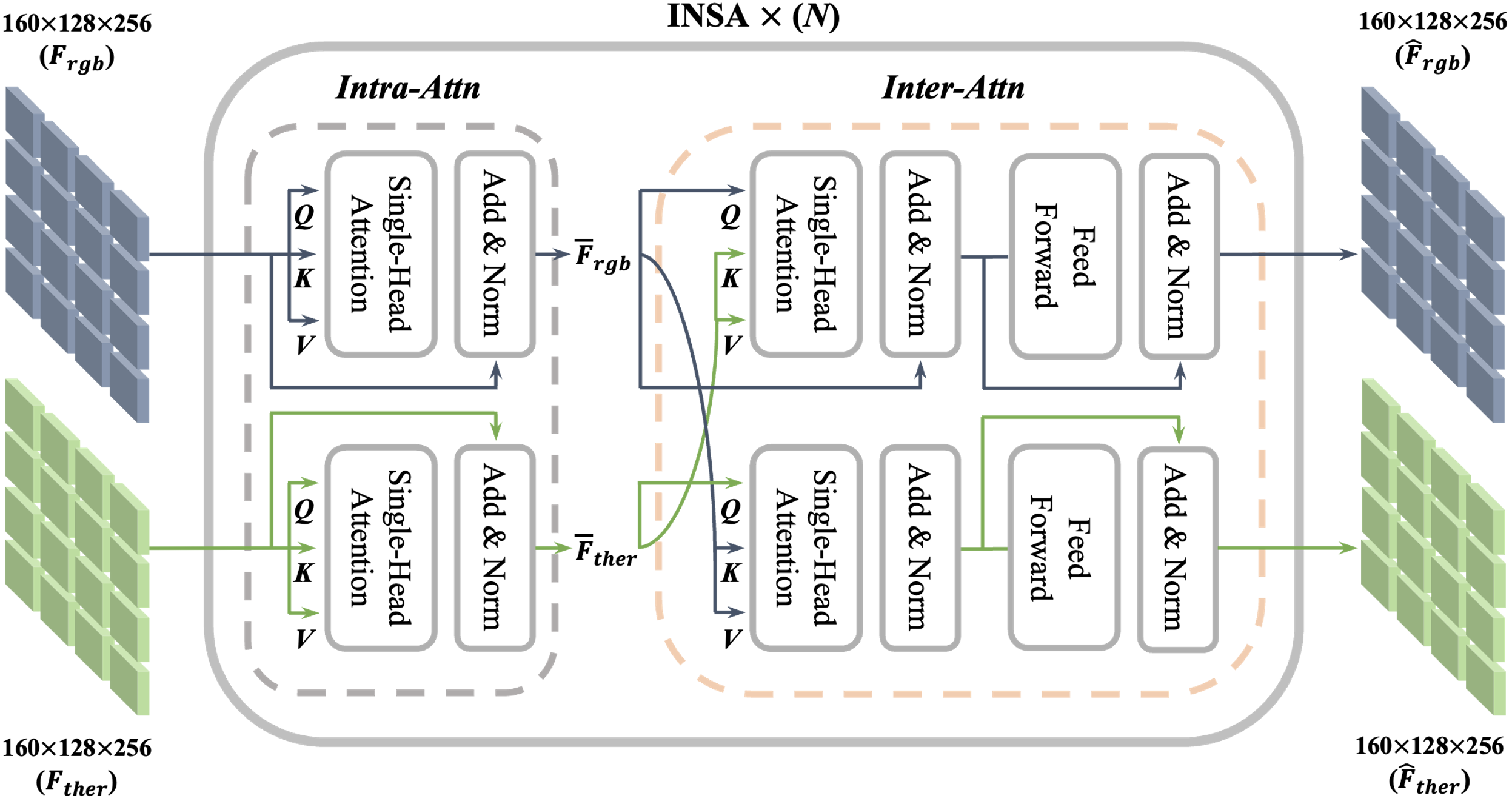

Pedestrian detection is a critical task for safety-critical systems, but detecting pedestrians is challenging in low-light and adverse weather conditions. Thermal images can improve robustness by providing information complementary to RGB images. Previous studies have shown that multi-modal feature fusion using convolution operations can be effective, but such methods rely solely on local feature correlations, which can degrade performance. To address this issue, we propose a novel attention-based fusion network, referred to as INSANet (INtra-INter Spectral Attention Network), that captures global intra- and inter-spectral information. It consists of intra- and inter-spectral attention blocks that allow the model to learn mutual spectral relationships. Additionally, we identified an imbalance in the multispectral dataset caused by several factors and designed an augmentation strategy that mitigates concentrated distributions and enables the model to learn the diverse locations of pedestrians. Extensive experiments demonstrate the effectiveness of the proposed methods, which achieve state-of-the-art performance on the KAIST and LLVIP datasets. Finally, we conduct a regional performance evaluation to demonstrate the effectiveness of the proposed network in various regions.
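The difference between intra- and inter-spectral attention can be illustrated with a toy example. This is a minimal sketch that assumes plain scaled dot-product attention without learned projections, multiple heads, or windowing; it is not the INSANet implementation, and `softmax`, `attend`, and the toy tokens are hypothetical names and values chosen for illustration.

```python
import math

def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attend(queries, keys, values):
    """Scaled dot-product attention over lists of token vectors."""
    scale = math.sqrt(len(keys[0]))
    outputs = []
    for q in queries:
        # Similarity of this query to every key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in keys]
        weights = softmax(scores)
        # Weighted sum of the value vectors.
        outputs.append([
            sum(w * v[d] for w, v in zip(weights, values))
            for d in range(len(values[0]))
        ])
    return outputs

# Hypothetical toy tokens: 2 spatial positions, 2 channels per spectrum.
rgb = [[1.0, 0.0], [0.0, 1.0]]
thermal = [[0.5, 0.5], [1.0, -1.0]]

# Intra-spectral: RGB tokens attend to other RGB tokens.
intra_rgb = attend(rgb, rgb, rgb)
# Inter-spectral: RGB tokens query the thermal tokens.
inter_rgb = attend(rgb, thermal, thermal)
```

In the inter-spectral case, queries come from one spectrum while keys and values come from the other, which is what lets each position draw on the complementary modality.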

- OS: Ubuntu 20.04

- CUDA-cuDNN: 11.3.0-8

- GPU: NVIDIA-A100

- Python-Torch: 3.7-1.11.0

See environment.yml for more details; it lists all the dependencies that INSANet needs.

We offer guides on how to install dependencies via docker and conda.

First, clone the repository:

git clone https://github.com/sejong-rcv/INSANet.git

cd INSANet

- Prerequisite

- nvidia-container-toolkit

- Note that nvidia-cuda:11.3.0 is deprecated. See issue.

- Build Image & Make Container

cd docker

make docker-make

- Run Container

cd ..

nvidia-docker run -it --name insanet -v $PWD:/workspace -p 8888:8888 -e NVIDIA_VISIBLE_DEVICES=all --shm-size=32G insanet:maintainer /bin/bash

- Prerequisite

- Required dependencies are listed in environment.yml.

conda env create -f environment.yml

conda activate insanet

If your environment supports CUDA 11.3, use the following instead:

conda env create -f environment_cu113.yml

conda activate insanet

The datasets used to train and evaluate the model are as follows:

The dataloader in datasets.py assumes that the dataset is located in the data folder and structured as follows:

- First, download the dataset. We provide a script for this (please see data/download_kaist).

- Train: We use paired annotations provided in AR-CNN.

- Evaluation: We use sanitized (improved) annotations provided in MSDS-RCNN.

    ├── data
       └── kaist-rgbt
          ├── annotations_paired
             ├── set00
                ├── V000
                   ├── lwir
                      ├── I00000.txt
                      ├── ...
                   ├── visible
                      ├── I00000.txt
                      ├── ...
                ├── V001
                   ├── lwir
                      ├── I00000.txt
                      ├── ...
                   ├── visible
                      ├── I00000.txt
                      ├── ...
                └── ...
             ├── ... (set02-set10)
             └── set11
                ├── V000
                   ├── lwir
                      ├── I00019.txt
                      ├── ...
                   ├── visible
                      ├── I00019.txt
                      ├── ...
          ├── images
             ├─ The structure is identical to the "annotations_paired" folder:
             └─ A pair of images has its own train annotations with the same file name.
    ├── src
       ├── kaist_annotations_test20.json
       ├── imageSets
          ├── train-all-02.txt # List of file names for train.
          └── test-all-20.txt
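Before training, it can help to confirm that a sample resolves to the files this layout implies. The following is a minimal sketch, not part of the repository: `paired_paths` and `missing_files` are hypothetical helpers, the `set00/V000/I00000` sample-id format is an assumption, and so is the `.jpg` image extension (the layout above only shows the `.txt` annotations).

```python
from pathlib import Path

def paired_paths(root, sample_id, image_ext=".jpg"):
    """Return annotation and image paths for one sample such as 'set00/V000/I00000'.

    image_ext is an assumption; adjust it to match the downloaded images.
    """
    set_id, video_id, frame_id = sample_id.split("/")
    base = Path(root) / "kaist-rgbt"
    paths = {}
    for modality in ("visible", "lwir"):
        paths[f"{modality}_annotation"] = (
            base / "annotations_paired" / set_id / video_id / modality / f"{frame_id}.txt"
        )
        paths[f"{modality}_image"] = (
            base / "images" / set_id / video_id / modality / f"{frame_id}{image_ext}"
        )
    return paths

def missing_files(root, sample_id):
    """List the expected files for one sample that do not exist on disk."""
    return [str(p) for p in paired_paths(root, sample_id).values() if not p.exists()]
```

For example, `missing_files("data", "set00/V000/I00000")` returns an empty list when both annotations and both images for that frame are in place.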

Our model pre-trained on the KAIST dataset can be downloaded with pretrained/download_pretrained.py or from Google Drive.

You can run inference and evaluate the pre-trained model on the KAIST dataset as follows:

python pretrained/download_pretrained.py

sh src/script/inference.sh

The training and inference scripts are src/script/train_eval.sh and src/script/inference.sh.

For convenience, we provide per-epoch evaluation during training. However, evaluating in the early epochs can cause out-of-memory (OOM) errors, so per-epoch evaluation only starts after 10 epochs.

cd src/script

sh train_eval.sh

If you want to specify which GPUs to use and the number of threads, set 'CUDA_VISIBLE_DEVICES' and, optionally, 'OMP_NUM_THREADS'.

CUDA_VISIBLE_DEVICES=0,1 OMP_NUM_THREADS=1 python src/train_eval.py
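The same settings can be applied when launching training from Python. This is a minimal sketch under the assumption that the script is started as a child process; `build_env` and `launch_training` are hypothetical helpers, not functions provided by the repository.

```python
import os
import subprocess
import sys

def build_env(gpu_ids, omp_threads=None):
    """Copy the current environment and add the GPU and thread settings."""
    env = dict(os.environ)
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(i) for i in gpu_ids)
    if omp_threads is not None:
        env["OMP_NUM_THREADS"] = str(omp_threads)
    return env

def launch_training(gpu_ids, omp_threads=None, script="src/train_eval.py"):
    """Run the training script in a child process with the chosen settings."""
    return subprocess.run(
        [sys.executable, script],
        env=build_env(gpu_ids, omp_threads),
        check=True,  # raise if the training script exits with an error
    )
```

Calling `launch_training([0, 1], omp_threads=1)` from the repository root corresponds to the shell command above.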

If you only want to evaluate, please see the scripts in src/utils/evaluation_script.sh and src/utils/evaluation_scene.sh.

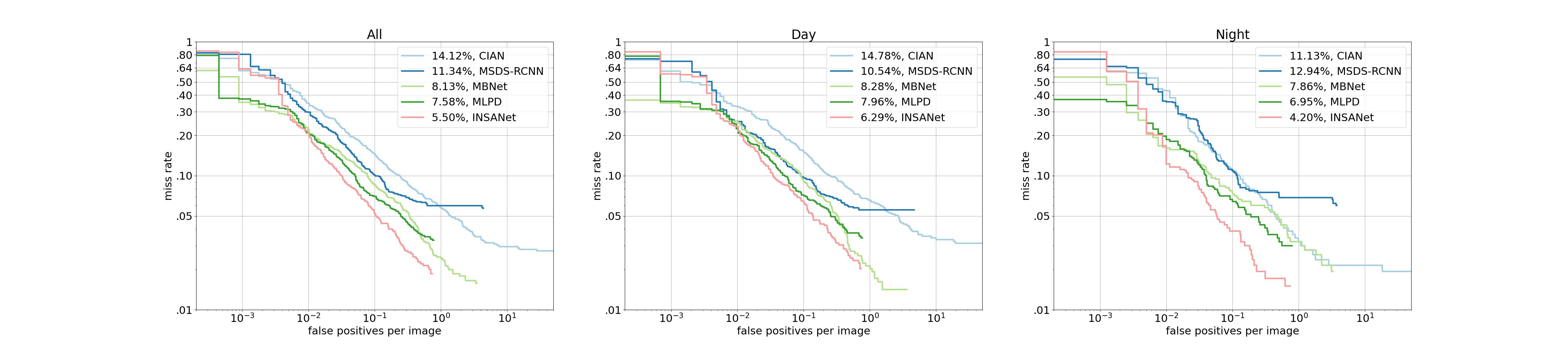

As mentioned in the paper, we evaluate performance on the KAIST dataset under the standard evaluation protocol (All, Day, and Night) as well as by region (Campus, Road, Downtown). These evaluations are provided as evaluation_script.sh and evaluation_scene.sh, respectively.

To evaluate experiment result files (.txt format), run:

cd src/utils

python evaluation_script.py \

--annFile '../kaist_annotations_test20.json' \

--rstFiles '../exps/INSANet/Epoch010_test_det.txt'

Note that this step is primarily used to evaluate performance per training epoch (result files are saved in src/exps).

To avoid listing every result file by name, pass the folder instead:

cd src/utils

python evaluation_script.py \

--annFile '../kaist_annotations_test20.json' \

--jobsDir '../exps/INSANet'

The jobsDir argument evaluates all result files in the folder sequentially.

You can also evaluate the model's results with the scripts and plot all the state-of-the-art methods in a single figure.
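Collecting the result files of a folder in epoch order could look like the sketch below. It assumes the file-name pattern of the example above (`Epoch010_test_det.txt`); `epoch_of` and `list_result_files` are hypothetical helpers and do not reproduce the internals of evaluation_script.py.

```python
import re
from pathlib import Path

# File-name pattern inferred from the example 'Epoch010_test_det.txt'.
RESULT_PATTERN = re.compile(r"Epoch(\d+)_test_det\.txt$")

def epoch_of(name):
    """Return the epoch number encoded in a result file name, or None."""
    match = RESULT_PATTERN.match(name)
    return int(match.group(1)) if match else None

def list_result_files(jobs_dir):
    """Return the result files in jobs_dir, sorted by epoch number."""
    files = [p for p in Path(jobs_dir).iterdir() if epoch_of(p.name) is not None]
    return sorted(files, key=lambda p: epoch_of(p.name))
```

Each path returned by `list_result_files("../exps/INSANet")` could then be passed to the evaluation one at a time.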

The figure represents the log-average miss rate (LAMR), the most popular metric for pedestrian detection tasks.
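For reference, LAMR is commonly computed by sampling the miss rate at nine false-positives-per-image (FPPI) points evenly spaced in log space between 10^-2 and 10^0 and taking the geometric mean. The sketch below follows that common definition; `log_average_miss_rate` is a hypothetical helper, and the repository's evaluation_script.py may differ in detail.

```python
import math

def log_average_miss_rate(fppi, miss_rate, num_points=9):
    """Geometric mean of the miss rate sampled at FPPI reference points in [1e-2, 1e0].

    fppi must be sorted in ascending order, with miss_rate[i] the miss rate at fppi[i].
    """
    # Reference points evenly spaced in log space: 10^-2, 10^-1.75, ..., 10^0.
    refs = [10 ** (-2 + 2 * i / (num_points - 1)) for i in range(num_points)]
    log_sum = 0.0
    for ref in refs:
        # Use the miss rate at the largest FPPI not exceeding the reference point.
        # If the curve starts to the right of it, count everything as missed.
        sampled = 1.0
        for x, y in zip(fppi, miss_rate):
            if x <= ref:
                sampled = y
            else:
                break
        # Clamp to avoid log(0) for a perfect detector.
        log_sum += math.log(max(sampled, 1e-10))
    return math.exp(log_sum / num_points)
```

Averaging in log space keeps the low-FPPI end of the curve from being dominated by the high-FPPI end, which is why lower values indicate a better detector across the whole operating range.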

Annotation files must be in JSON format, whereas result files may be in JSON or text format, and multiple result files can be passed at once:

cd evaluation

python evaluation_script.py \

--annFile ./KAIST_annotation.json \

--rstFile state_of_arts/INSANet_result.txt \

state_of_arts/MLPD_result.txt \

state_of_arts/MBNet_result.txt \

state_of_arts/MSDS-RCNN_result.txt \

state_of_arts/CIAN_result.txt \

--evalFig KAIST_BENCHMARK.jpg

(optional) $ sh evaluation_script.sh

Note that † is the re-implemented performance with the proposed fusion method (other settings, such as the backbone and the training parameters, follow our approach).

All values are Miss-Rate (%).

| Method | ALL | DAY | NIGHT | Campus | Road | Downtown |
|---|---|---|---|---|---|---|
| ACF | 47.32 | 42.57 | 56.17 | 16.50 | 6.68 | 18.45 |
| Halfway Fusion | 25.75 | 24.88 | 26.59 | - | - | - |
| MSDS-RCNN | 11.34 | 10.53 | 12.94 | 11.26 | 3.60 | 14.80 |
| AR-CNN | 9.34 | 9.94 | 8.38 | 11.73 | 3.38 | 11.73 |
| Halfway Fusion† | 8.31 | 8.36 | 8.27 | 10.80 | 3.74 | 11.00 |
| MBNet | 8.31 | 8.36 | 8.27 | 10.80 | 3.74 | 11.00 |
| MLPD | 7.58 | 7.95 | 6.95 | 9.21 | 5.04 | 9.32 |
| ICAFusion | 7.17 | 6.82 | 7.85 | - | - | - |
| CFT† | 6.75 | 7.76 | 4.59 | 9.45 | 3.47 | 8.72 |
| GAFF | 6.48 | 8.35 | 3.46 | 7.95 | 3.70 | 8.35 |
| CFR | 5.96 | 7.77 | 2.40 | 7.45 | 4.10 | 7.25 |
| Ours(w/o shift) | 6.12 | 7.19 | 4.37 | 9.05 | 3.24 | 7.25 |
| Ours(w/ shift) | 5.50 | 6.29 | 4.20 | 7.64 | 3.06 | 6.72 |
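To make the effect of the shift augmentation easier to read, the relative miss-rate reduction between the two "Ours" rows can be computed directly. This is a small worked example using only the numbers in the table; `relative_reduction` is a hypothetical helper.

```python
# Miss rates (%) copied from the two "Ours" rows of the table above.
without_shift = {"ALL": 6.12, "DAY": 7.19, "NIGHT": 4.37,
                 "Campus": 9.05, "Road": 3.24, "Downtown": 7.25}
with_shift = {"ALL": 5.50, "DAY": 6.29, "NIGHT": 4.20,
              "Campus": 7.64, "Road": 3.06, "Downtown": 6.72}

def relative_reduction(before, after):
    """Relative miss-rate reduction in percent (positive means improvement)."""
    return 100.0 * (before - after) / before

gains = {k: relative_reduction(without_shift[k], with_shift[k]) for k in without_shift}
for subset, gain in gains.items():
    print(f"{subset:>8}: {without_shift[subset]:.2f} -> {with_shift[subset]:.2f} "
          f"({gain:.1f}% lower)")
```

On the ALL subset this gives a reduction of about 10% (from 6.12 to 5.50), and the miss rate drops on every subset listed.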

This paper would not have been possible without some excellent prior work: MLPD, Swin Transformer, and KAIST.

We would also like to thank all the authors of our references for their excellent research.

If our work is useful in your research, please consider citing our paper:

@article{lee2024insanet,
  title={INSANet: INtra-INter spectral attention network for effective feature fusion of multispectral pedestrian detection},
  author={Lee, Sangin and Kim, Taejoo and Shin, Jeongmin and Kim, Namil and Choi, Yukyung},
  journal={Sensors},
  volume={24},
  number={4},
  pages={1168},
  year={2024},
  publisher={Multidisciplinary Digital Publishing Institute}
}