BasedXL is a demo project that showcases an implementation of Patch Parallelism from the DistriFusion paper to speed up multi-GPU inference of Stable Diffusion XL with minimal quality degradation. It is intended to be run locally and is not a full library.

- Implements Patch Parallelism for accelerated multi-GPU inference

- Employs techniques from stable-fast and TensorRT for additional speedup

- Minimal dependencies and no build step

To run this demo locally, ensure that you have PyTorch, Diffusers, and Fire (for CLI) installed. You can install the dependencies using the following command:

    pip install torch diffusers fire

Then run the sample:

    # Multi-GPU
    torchrun --nproc-per-node=2 sample.py

    # Single GPU
    python sample.py

Optionally, compile the UNet with torch.compile for a slight speedup (warning: compilation can take a minute or two):

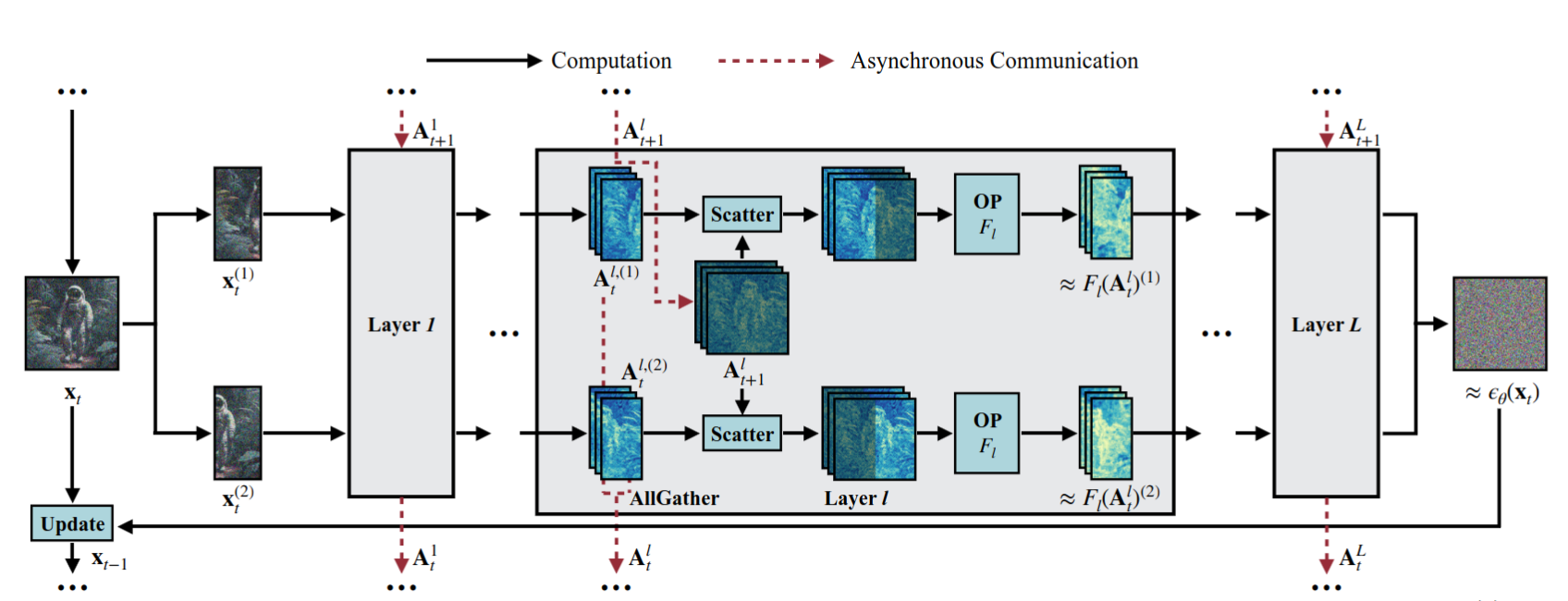

torchrun --nproc-per-node=2 sample.py --compile_unetDistriFusion is a method that enables the generation of high-resolution images using diffusion models by leveraging parallelism across multiple GPUs. The approach splits the model input into patches, assigns each patch to a GPU, and utilizes the similarity between adjacent diffusion steps to provide context for the current step, allowing for asynchronous communication and pipelining. Experiments demonstrate that DistriFusion can be applied to Stable Diffusion XL without quality degradation, achieving up to a 6.1× speedup on eight NVIDIA A100s compared to a single GPU.
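The patch-splitting and stale-context idea can be illustrated with a toy sketch in plain Python. This is not the BasedXL or DistriFusion implementation: the "image" is a 1D list, `denoise_step` is a hypothetical stand-in for the UNet (a 3-tap smoothing filter that needs neighbor context), and a sequential loop stands in for the GPUs. All function names here are made up for illustration. The real method exchanges per-layer activations asynchronously rather than whole inputs.

```python
def denoise_step(x):
    """Hypothetical stand-in for one denoising step: 3-tap smoothing, edges clamped."""
    n = len(x)
    return [(x[max(i - 1, 0)] + x[i] + x[min(i + 1, n - 1)]) / 3 for i in range(n)]


def split_bounds(n, num_patches):
    """Return (start, end) index pairs; the last patch absorbs any remainder."""
    size = n // num_patches
    return [(p * size, n if p == num_patches - 1 else (p + 1) * size)
            for p in range(num_patches)]


def patch_parallel_step(fresh, stale, bounds):
    """Each loop iteration plays the role of one GPU.

    A worker sees fresh values only inside its own patch; everything outside
    comes from the previous step's (stale) input.
    """
    out = []
    for lo, hi in bounds:
        local_view = stale[:lo] + fresh[lo:hi] + stale[hi:]
        # Keep only the outputs that belong to this worker's patch.
        out.extend(denoise_step(local_view)[lo:hi])
    return out


def run_patch_parallel(x0, steps, num_patches):
    bounds = split_bounds(len(x0), num_patches)
    # On the first step the "stale" context equals the fresh input (warm-up).
    fresh, stale = list(x0), list(x0)
    for _ in range(steps):
        # The input of this step becomes the stale context of the next one.
        fresh, stale = patch_parallel_step(fresh, stale, bounds), fresh
    return fresh


def run_reference(x0, steps):
    """Single-device baseline: every step sees the full, fresh input."""
    x = list(x0)
    for _ in range(steps):
        x = denoise_step(x)
    return x


if __name__ == "__main__":
    x0 = [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
    ref = run_reference(x0, steps=4)
    par = run_patch_parallel(x0, steps=4, num_patches=2)
    print("max abs difference:", max(abs(a - b) for a, b in zip(ref, par)))
```

In this toy, a single patch or a single step reproduces the reference exactly. With several patches and several steps the result drifts slightly near patch boundaries, which is the approximation that the similarity between adjacent diffusion steps is meant to keep small.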

This project builds on the following works:

- DistriFusion: Distributing Diffusion Models with Patch Parallelism (arXiv, code)

- The Hugging Face Diffusers library (code)

- Stable Fast (code)

- NVIDIA's TensorRT inference SDK (code)

This project is released under the MIT License.