Grounding Visual Representations with Texts for Domain Generalization

Seonwoo Min, Nokyung Park, Siwon Kim, Seunghyun Park, Jinkyu Kim

ECCV 2022 | Official PyTorch implementation

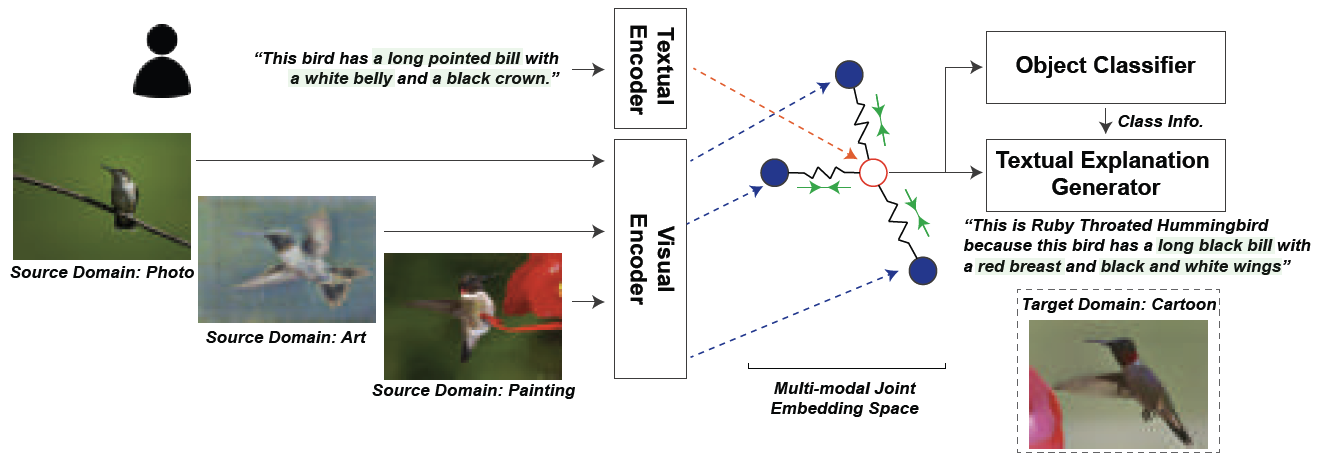

We advocate leveraging vision-and-language cross-modality supervision for the domain generalization (DG) task.

- Two modules ground visual representations with texts that contain typical human reasoning:
  - The Visual and Textual Joint Embedder aligns visual representations with the pivot sentence embedding.
  - The Textual Explanation Generator generates explanations that justify the rationale behind the model's decision.
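As a rough illustration of the alignment idea behind the Visual and Textual Joint Embedder, the sketch below scores how far each projected visual embedding is from the pivot sentence embedding of the same sample. It assumes a per-sample cosine-distance objective; the function names and the loss are hypothetical and are not taken from this repository, and the paper's actual objective may differ. In a real training loop this would operate on PyTorch tensors rather than Python lists.

```python
import math
from typing import Sequence


def cosine_similarity(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    if len(u) != len(v):
        raise ValueError("vectors must have the same dimension")
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        raise ValueError("a zero vector has no direction")
    return dot / (norm_u * norm_v)


def alignment_loss(
    visual: Sequence[Sequence[float]], pivot: Sequence[Sequence[float]]
) -> float:
    """Hypothetical objective: mean (1 - cosine similarity) between each
    projected visual embedding and its sample's pivot sentence embedding.
    0 means perfectly aligned directions, 2 means opposite directions."""
    if not visual or len(visual) != len(pivot):
        raise ValueError("need one pivot embedding per visual embedding")
    total = sum(1.0 - cosine_similarity(v, t) for v, t in zip(visual, pivot))
    return total / len(visual)
```

Because only directions are compared, the visual branch is pushed toward the sentence embedding's orientation without being forced to match its scale.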

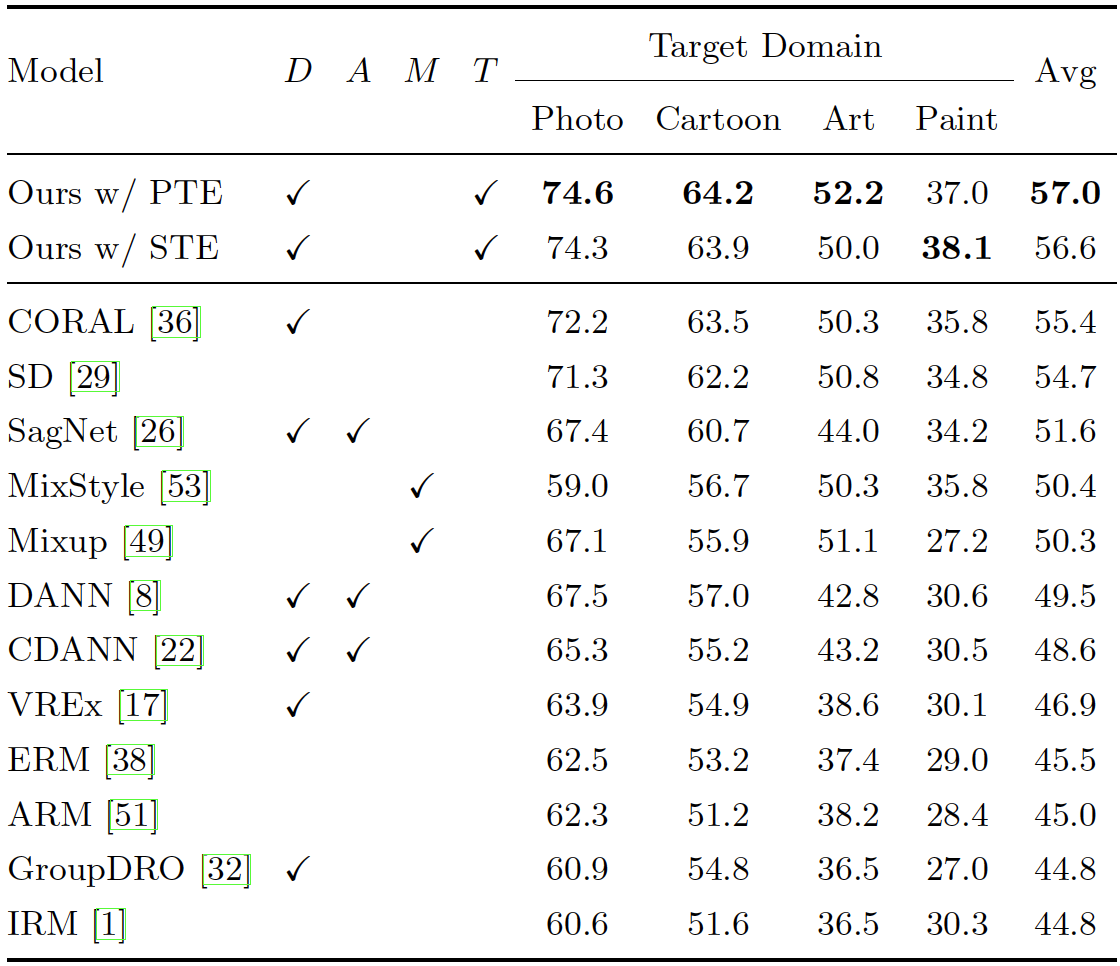

- Our method achieves state-of-the-art results on both the CUB-DG and DomainBed benchmarks!

We recommend creating a conda environment and installing the necessary Python packages as follows:

```bash
git clone https://github.com/mswzeus/GVRT.git
cd GVRT
ln -s ../src DomainBed_GVRT/src
conda create -n GVRT python=3.8
conda activate GVRT
conda install pytorch==1.10.2 torchvision==0.11.3 cudatoolkit=11.3 -c pytorch -c conda-forge
pip install -r requirements.txt
```
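To confirm that the environment matches the pinned versions above, a small check like the following can help. It is a sketch, not part of the repository, and assumes the conda packages register Python distribution metadata under the names `torch` and `torchvision`, which is typical. Local build tags such as `+cu113` are ignored in the comparison.

```python
import sys
from importlib import metadata
from typing import Dict, Optional

# Pins copied from the conda command above; "torch" is the distribution
# name under which the pytorch package is installed.
EXPECTED = {"torch": "1.10.2", "torchvision": "0.11.3"}


def check_versions(expected: Dict[str, str]) -> Dict[str, Optional[str]]:
    """Return {package: installed_version_or_None} for every unmet pin."""
    mismatches: Dict[str, Optional[str]] = {}
    for name, wanted in expected.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            mismatches[name] = None  # package is not installed at all
            continue
        # Drop local build tags such as "+cu113" before comparing.
        if installed.split("+")[0] != wanted:
            mismatches[name] = installed
    return mismatches


if __name__ == "__main__":
    print("python", sys.version.split()[0], "(expected 3.8)")
    print(check_versions(EXPECTED) or "all pins satisfied")
```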

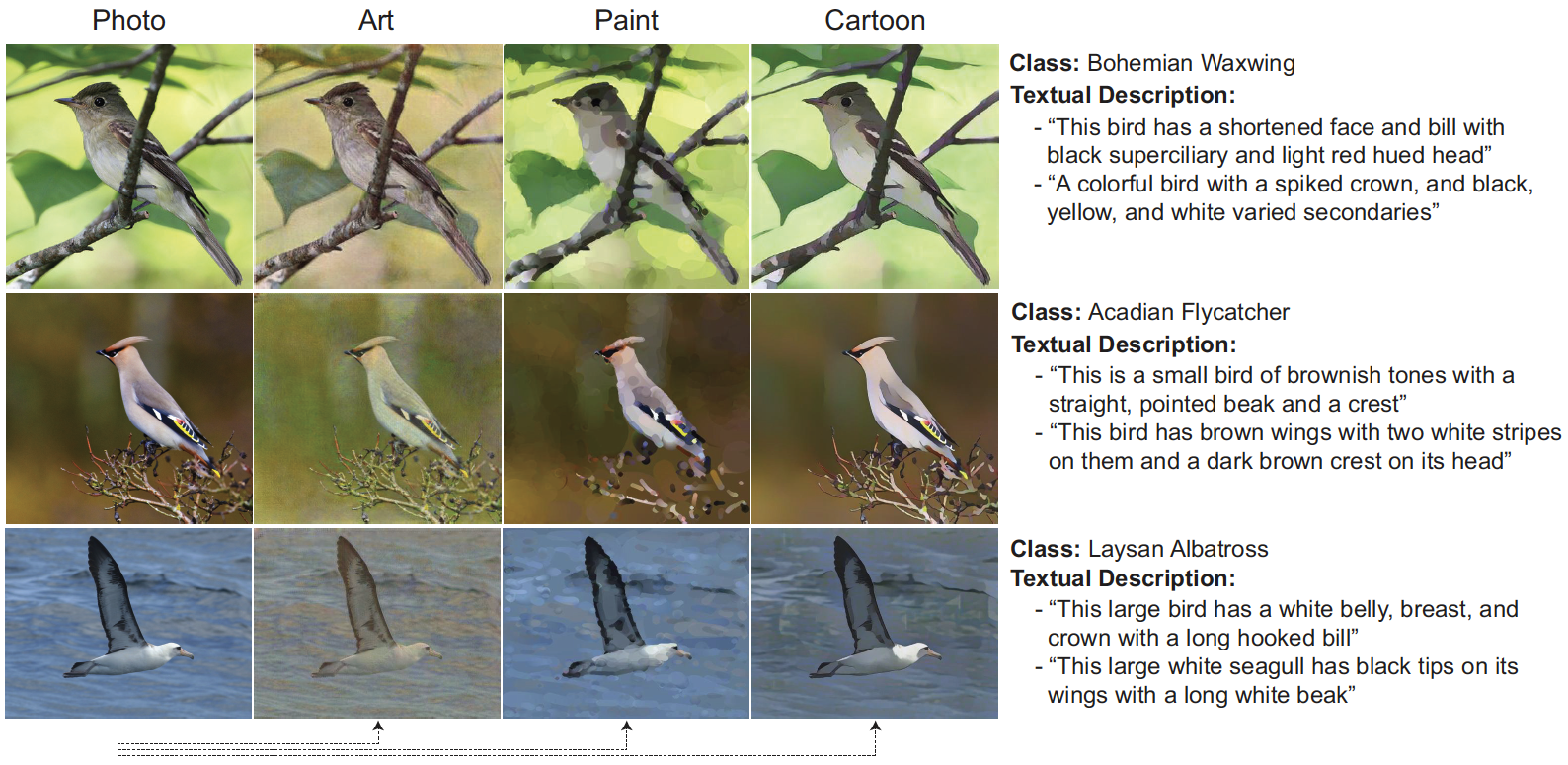

We created CUB-DG to investigate cross-modality supervision in the DG task (Download Link).

CUB is an image dataset with photos of 200 bird species. For more information, please see the original repo.

We used pre-trained style transfer models to obtain images from three other domains, i.e., Art, Paint, and Cartoon.

- Photo-to-Art: CycleGAN (Monet)

- Photo-to-Paint: Stylized-Neural-Painting (Watercolor)

- Photo-to-Cartoon: White-Box-Cartoonization model
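To make the four-domain setup concrete, the sketch below indexes CUB-DG images per domain as (image path, class name) pairs. The folder layout `root/<domain>/<class>/<image>` and the domain folder names are assumptions for illustration, not the documented structure of the released archive; adjust them to match the downloaded files.

```python
from pathlib import Path
from typing import Dict, List, Tuple

# The four CUB-DG domains described above; folder names are an assumption.
DOMAINS = ("Photo", "Art", "Paint", "Cartoon")
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def index_domains(root: Path) -> Dict[str, List[Tuple[Path, str]]]:
    """Map each domain to a sorted list of (image_path, class_name) pairs,
    assuming a hypothetical root/<domain>/<class>/<image> layout.
    A missing domain folder simply yields an empty list."""
    index: Dict[str, List[Tuple[Path, str]]] = {}
    for domain in DOMAINS:
        samples = [
            (img, img.parent.name)  # the class is the parent folder name
            for img in (root / domain).glob("*/*")
            if img.is_file() and img.suffix.lower() in IMAGE_SUFFIXES
        ]
        index[domain] = sorted(samples)
    return index
```

Since the Art, Paint, and Cartoon images are style-transferred versions of the photos, each domain should contain the same 200 bird classes.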

We provide the following pre-trained models for three independent runs (Download Link).

- Ours trained with PTE (pre-trained textual encoder)

- Ours trained with STE (self-supervised textual encoder)

To train a model, run the train_model.py script with the necessary configurations, e.g.:

```bash
CUDA_VISIBLE_DEVICES=0 python train_model.py --algorithm GVRT --test-env 0 --seed 0 --output-path results/PTE_test0_seed0
```
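The command above trains one run with one held-out domain. A leave-one-domain-out sweep can be generated as sketched below. This assumes CUB-DG's four domains map to `--test-env` 0 to 3 and that the three independent runs use seeds 0, 1, and 2; neither is stated in this README. The `tag` argument only changes the output directory name; how train_model.py selects PTE or STE is not shown here.

```python
import shlex
from typing import List, Sequence


def train_commands(
    algorithm: str = "GVRT",
    tag: str = "PTE",
    test_envs: Sequence[int] = (0, 1, 2, 3),  # assumed: one index per domain
    seeds: Sequence[int] = (0, 1, 2),  # assumed: three independent runs
) -> List[List[str]]:
    """Build one train_model.py argument list per (test_env, seed) pair,
    using the same flags and output-path pattern as the command above."""
    commands = []
    for env in test_envs:
        for seed in seeds:
            commands.append([
                "python", "train_model.py",
                "--algorithm", algorithm,
                "--test-env", str(env),
                "--seed", str(seed),
                "--output-path", f"results/{tag}_test{env}_seed{seed}",
            ])
    return commands


if __name__ == "__main__":
    # Print shell-ready lines; pipe them to a job scheduler or run them one by one.
    for cmd in train_commands():
        print("CUDA_VISIBLE_DEVICES=0", shlex.join(cmd))
```

Each argument list can also be passed directly to `subprocess.run(cmd, check=True)` with `CUDA_VISIBLE_DEVICES` set in the child environment.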

To evaluate a model, run the evaluate_model.py script with the necessary configurations, e.g.:

```bash
CUDA_VISIBLE_DEVICES=0 python evaluate_model.py --algorithm GVRT --test-env 0 --seed 0 --output-path results/PTE_test0_seed0 --checkpoint pretrained_models/PTE_test0_seed0.pt
```
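The evaluation command pairs an output path with a checkpoint of the same name. The hypothetical helper below reproduces that naming pattern and launches a run on a chosen GPU. It assumes the released STE checkpoints follow the same `<tag>_test<env>_seed<seed>.pt` pattern with an `STE` prefix, which this README does not confirm.

```python
import os
import subprocess
from typing import List


def eval_command(tag: str, env: int, seed: int, algorithm: str = "GVRT") -> List[str]:
    """Argument list for evaluate_model.py following the pattern
    results/<tag>_test<env>_seed<seed> and pretrained_models/<same>.pt."""
    name = f"{tag}_test{env}_seed{seed}"
    return [
        "python", "evaluate_model.py",
        "--algorithm", algorithm,
        "--test-env", str(env),
        "--seed", str(seed),
        "--output-path", f"results/{name}",
        "--checkpoint", f"pretrained_models/{name}.pt",
    ]


def run_eval(tag: str, env: int, seed: int, gpu: str = "0") -> None:
    """Run one evaluation restricted to a single GPU.
    Raises subprocess.CalledProcessError if the script exits non-zero."""
    child_env = dict(os.environ, CUDA_VISIBLE_DEVICES=gpu)
    subprocess.run(eval_command(tag, env, seed), env=child_env, check=True)
```

Setting `CUDA_VISIBLE_DEVICES` through the child environment, rather than in the shell, keeps the GPU choice local to each launched run.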

We report averaged results across three independent runs.
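Averaging over the three runs can be done per held-out domain as sketched below. Reporting the sample standard deviation alongside the mean is an illustrative addition here, not necessarily how the paper's tables are formatted, and the accuracy values would come from your own evaluation outputs.

```python
import statistics
from typing import Dict, Sequence, Tuple


def summarize_runs(accuracies: Sequence[float]) -> Tuple[float, float]:
    """Mean and sample standard deviation of per-run test accuracies."""
    if len(accuracies) < 2:
        raise ValueError("need at least two runs for a standard deviation")
    return statistics.mean(accuracies), statistics.stdev(accuracies)


def summarize_table(per_env: Dict[str, Sequence[float]]) -> Dict[str, str]:
    """Format 'mean ± std' for each held-out domain (e.g. three seeds each)."""
    return {
        env: "{:.1f} ± {:.1f}".format(*summarize_runs(accs))
        for env, accs in per_env.items()
    }
```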

If you find our work useful, please kindly cite this paper:

```bibtex
@article{min2022grounding,
  author  = {Seonwoo Min and Nokyung Park and Siwon Kim and Seunghyun Park and Jinkyu Kim},
  title   = {Grounding Visual Representations with Texts for Domain Generalization},
  journal = {arXiv},
  volume  = {abs/2207.10285},
  year    = {2022},
  url     = {https://arxiv.org/abs/2207.10285}
}
```