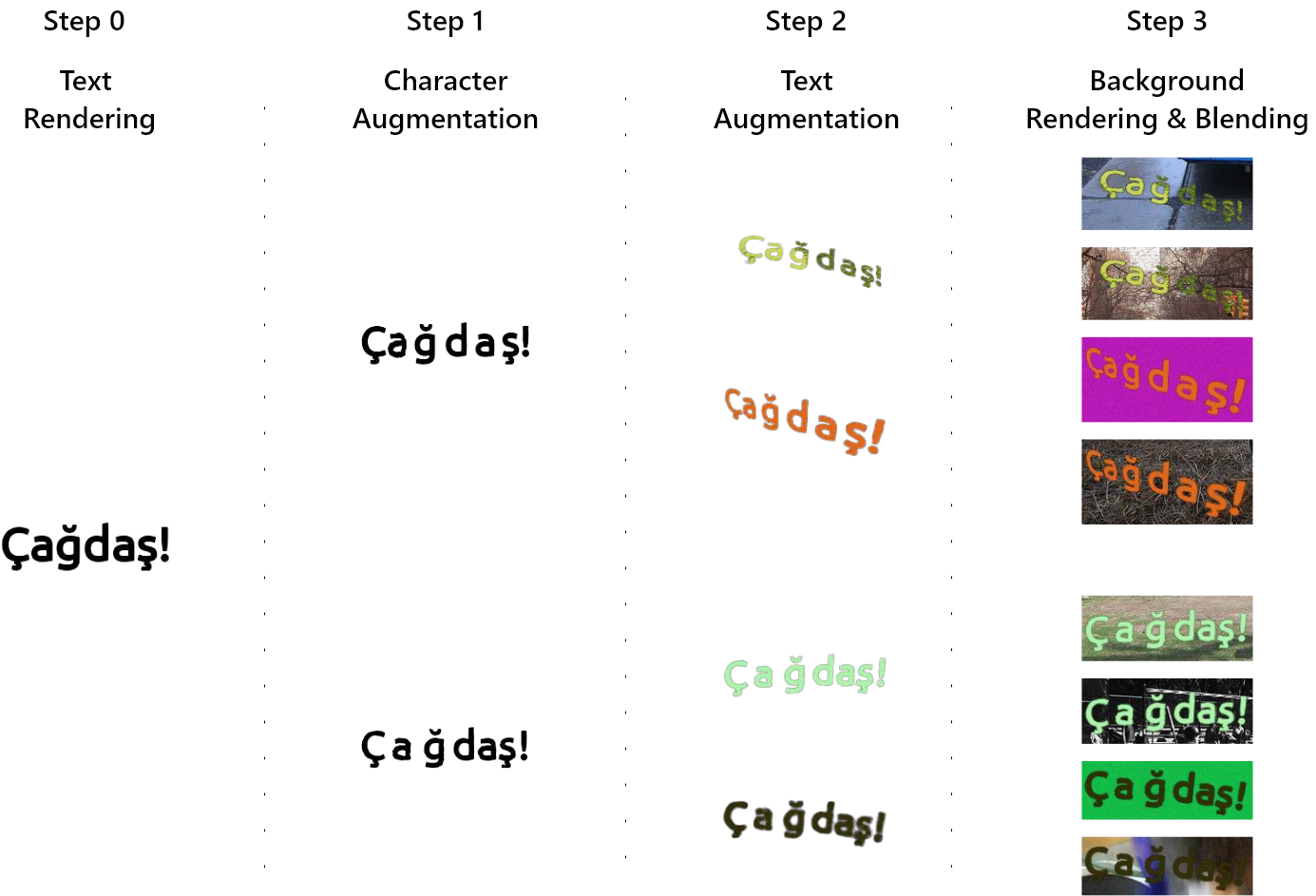

The Synthetic Turkish Scene Text Recognition (STS-TR) dataset is a synthetic dataset created to complement the real-world Turkish Scene Text Recognition (TS-TR) dataset. It contains over 12 million samples designed to simulate a variety of textual scenarios: Turkish words and phrases rendered in diverse fonts, sizes, and orientations on generic background scenes, with realistic effects such as shadows, blurs, and environmental distortions. The dataset increases the amount of training data available to scene text recognition models, particularly those targeting the Turkish language.

Figure 1: MViT-TR architecture.

To set up MViT-TR for training and evaluation, follow these steps:

- Clone the repository:

      git clone https://github.com/serdaryildiz/STS-TR.git
      cd STS-TR

- Install required dependencies:

      pip install -r requirements.txt

- Adjust the sources folder so that it matches this layout:

      .
      ├── background
      │   └── val2017
      ├── fonts
      ├── text
      └── textures
          └── dtd
              └── images

- Run `main.py`:

      python main.py
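Before running `main.py`, it can help to confirm that the sources folder matches the layout listed above. The following is a minimal sketch, not part of the repository: the helper name `missing_source_dirs` and the `EXPECTED_DIRS` constant are hypothetical, and the sources root is assumed to be passed in as a path.

```python
from pathlib import Path

# Sub-directories copied from the layout in the setup steps,
# relative to the sources root. This tuple is a hypothetical helper
# constant; the repository itself does not define it.
EXPECTED_DIRS = (
    "background/val2017",
    "fonts",
    "text",
    "textures/dtd/images",
)


def missing_source_dirs(root):
    """Return the expected sub-directories that are absent under `root`.

    `root` is assumed to be the sources root (the "." of the layout).
    An empty list means every expected directory exists.
    """
    root = Path(root)
    return [rel for rel in EXPECTED_DIRS if not (root / rel).is_dir()]
```

Calling `missing_source_dirs(".")` from the sources root returns an empty list when the layout is complete; otherwise it lists the directories that still need to be created or populated. The check only looks for directories and says nothing about whether they contain usable fonts, text files, or images.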

Figure 2: Samples from the STS-TR dataset.

If you find this work useful, please cite our paper:

    @article{YILDIZ2024101881,
      title = {Turkish scene text recognition: Introducing extensive real and synthetic datasets and a novel recognition model},
      journal = {Engineering Science and Technology, an International Journal},
      volume = {60},
      pages = {101881},
      year = {2024},
      issn = {2215-0986},
      doi = {https://doi.org/10.1016/j.jestch.2024.101881},
      url = {https://www.sciencedirect.com/science/article/pii/S2215098624002672},
      author = {Serdar Yıldız},
      keywords = {Scene text recognition dataset, Synthetic scene text recognition dataset, Patch masking, Position attention, Vision transformers},
    }