✨ News: We have released the source code of SeqNet (AAAI 2021), the current state-of-the-art model, which achieves 🏆 94.8% mAP on CUHK-SYSU.

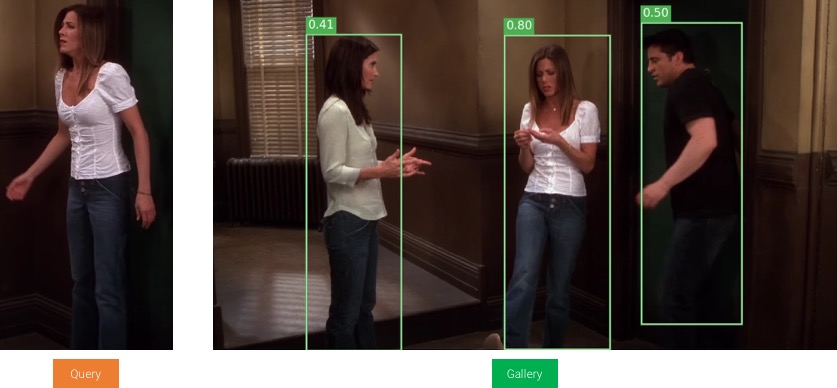

A PyTorch implementation of the CVPR 2017 paper "Joint Detection and Identification Feature Learning for Person Search".

The code is based on the official Caffe version.

A better version, achieving about 85% mAP, is available in the mmdetection branch.

Note: The implementation of Faster R-CNN in the mmdetection branch is better than the one described in the original paper.

- Simpler code: After reduction and refactoring, the current version is simpler and easier to understand.

- Pure PyTorch code: NumPy is not used, except for data loading.

Run `pip install -r requirements.txt` in the root directory of the project.

torchvision must be greater than 0.3.0, as we need `torchvision.ops.nms`.
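To confirm that the installed environment meets this requirement, one option is to check directly whether `torchvision.ops.nms` can be resolved, rather than comparing version strings. The sketch below is not part of this repository; the helper name `has_attribute` is hypothetical and it uses only the standard library.

```python
import importlib


def has_attribute(module_name, attr_path):
    """Return True if module_name imports and exposes the dotted attr_path."""
    try:
        obj = importlib.import_module(module_name)
    except ImportError:
        # The module (or one of its parents) is not installed.
        return False
    for name in attr_path.split("."):
        try:
            obj = getattr(obj, name)
        except AttributeError:
            return False
    return True


if __name__ == "__main__":
    # Reports whether the NMS operator this project relies on is available.
    print("torchvision.ops.nms available:", has_attribute("torchvision.ops", "nms"))
```

If this prints `False`, upgrading torchvision is the likely fix.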

Let's say $ROOT is the root directory.

- Download the CUHK-SYSU dataset (google drive or baiduyun) and unzip it to `$ROOT/data/dataset/`
- Download our trained model (google drive or baiduyun) (extraction code: uuti) to `$ROOT/data/trained_model/`

After the above two steps, the directory structure should look like this:

    $ROOT/data
    ├── dataset
    │   ├── annotation
    │   ├── Image
    │   └── README.txt
    └── trained_model
        └── checkpoint_step_50000.pth

Note: `$ROOT/data` stores all experimental data, including the dataset, pretrained model, trained model, and so on.
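Before running the demo, it can help to verify that the expected layout is in place. The following is a minimal sketch, not a script shipped with this repository; the function name `missing_paths` is hypothetical, and the list of expected entries is copied from the directory structure shown above.

```python
from pathlib import Path

# Entries taken from the directory structure described in this README,
# relative to $ROOT/data.
EXPECTED = (
    "dataset/annotation",
    "dataset/Image",
    "trained_model/checkpoint_step_50000.pth",
)


def missing_paths(data_dir):
    """Return the expected entries that do not exist under data_dir."""
    base = Path(data_dir)
    return [rel for rel in EXPECTED if not (base / rel).exists()]


if __name__ == "__main__":
    # Assumes the script is run from $ROOT, so "data" resolves to $ROOT/data.
    missing = missing_paths("data")
    if missing:
        print("Missing:", ", ".join(missing))
    else:
        print("Data directory looks complete.")
```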

- Run `python tools/demo.py --gpu 0 --checkpoint data/trained_model/checkpoint_step_50000.pth`. You can then check the results in the `imgs` directory.

- Prepare the dataset as described in the Quick Start section.
- Download the pretrained model (google drive or baiduyun) (extraction code: ucnw) to `$ROOT/data/pretrained_model/`
- Run `python tools/train_net.py --gpu 0`
- The trained model will be saved to `$ROOT/data/trained_model/`

You can check the usage of `train_net.py` by running `python tools/train_net.py -h`.
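If training leaves more than one checkpoint in `$ROOT/data/trained_model/`, a small helper can pick the most recent one to pass to `--checkpoint`. This is a sketch under assumptions: the naming pattern `checkpoint_step_<N>.pth` is inferred from the single filename shown in this README, and `latest_checkpoint` is a hypothetical helper, not part of the repository.

```python
import re
from pathlib import Path

# Pattern inferred from "checkpoint_step_50000.pth"; that other checkpoints
# follow the same naming scheme is an assumption.
CHECKPOINT_RE = re.compile(r"^checkpoint_step_(\d+)\.pth$")


def latest_checkpoint(model_dir):
    """Return the checkpoint path with the highest step number, or None."""
    best_step, best_path = -1, None
    for path in Path(model_dir).glob("checkpoint_step_*.pth"):
        match = CHECKPOINT_RE.match(path.name)
        # Compare steps numerically so that 50000 ranks above 9000.
        if match and int(match.group(1)) > best_step:
            best_step, best_path = int(match.group(1)), path
    return best_path


if __name__ == "__main__":
    # Assumes the script is run from $ROOT.
    print(latest_checkpoint("data/trained_model"))
```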

python tools/test_net.py --gpu 0 --checkpoint data/trained_model/checkpoint_step_50000.pth

The result should be around:

    Search ranking:
      mAP = 76.78%
      Top- 1 = 77.48%
      Top- 5 = 88.48%
      Top-10 = 91.52%
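For readers unfamiliar with these metrics, the sketch below shows how mAP and top-k accuracy are commonly computed from ranked retrieval results. It is a simplified illustration, not the evaluation code used by `tools/test_net.py`; the real protocol may, for example, handle missed detections differently. All function names here are hypothetical, and each query is represented as a list of booleans marking whether the result at that rank is a correct match.

```python
def average_precision(ranked_hits):
    """Average precision for one query given match flags in rank order."""
    hits = 0
    precision_sum = 0.0
    for rank, is_hit in enumerate(ranked_hits, start=1):
        if is_hit:
            hits += 1
            precision_sum += hits / rank  # precision at this rank
    return precision_sum / hits if hits else 0.0


def mean_average_precision(all_ranked_hits):
    """Mean of the per-query average precision values."""
    if not all_ranked_hits:
        return 0.0
    return sum(average_precision(h) for h in all_ranked_hits) / len(all_ranked_hits)


def top_k_accuracy(all_ranked_hits, k):
    """Fraction of queries with at least one match among the first k results."""
    if not all_ranked_hits:
        return 0.0
    return sum(any(h[:k]) for h in all_ranked_hits) / len(all_ranked_hits)


if __name__ == "__main__":
    # Two toy queries with three ranked results each.
    queries = [[True, False, True], [False, True, False]]
    print("mAP   =", mean_average_precision(queries))
    print("Top-1 =", top_k_accuracy(queries, 1))
    print("Top-5 =", top_k_accuracy(queries, 5))
```

Under this definition, Top-1 counts a query as correct only when the highest-ranked result is a match, which is why it sits below Top-5 and Top-10 in the numbers above.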