This service is an API that returns a specific set of information for a given block on one of the blockchain networks supported by sochain, as well as the details of a specific transaction.

- Getting started

- Continuous Integration

- Deployment

- Monitoring

- Troubleshooting

- Dependencies

- Limitations

These instructions will get you a copy of the project up and running on your local machine for development and testing purposes.

Docker is the only prerequisite, as the API binary runs inside a container that installs Go and other useful development and testing tools, such as CompileDaemon (which re-compiles the binary whenever the code changes), Godog (for the BDD integration tests) and various Go linters.

Pre-commit is used to run a set of checks on every commit to ensure code quality and standards. To set it up, run pre-commit install.

To build and start the Docker containers, run the following:

make dev-up

Once the containers are built and ready, the API will be served on http://localhost:8080, but it will use WireMock to simulate the sochain API. To use the actual API, set SOCHAIN_BASE_URL to https://sochain.com/api/v2 in the docker-compose.yaml file, or simply remove the entry, as the config package defaults to the real sochain API URL.

To run the tests for the whole project locally, run the following:

make test // runs all tests

make test-unit // runs unit tests

make test-integration // runs integration tests

Please ensure that the containers are up and running before running the integration tests, as all make commands run inside the container by default.

The project does not have a CI solution built into it, but some solutions that could be considered are:

The CI configuration should be placed under the ci folder.

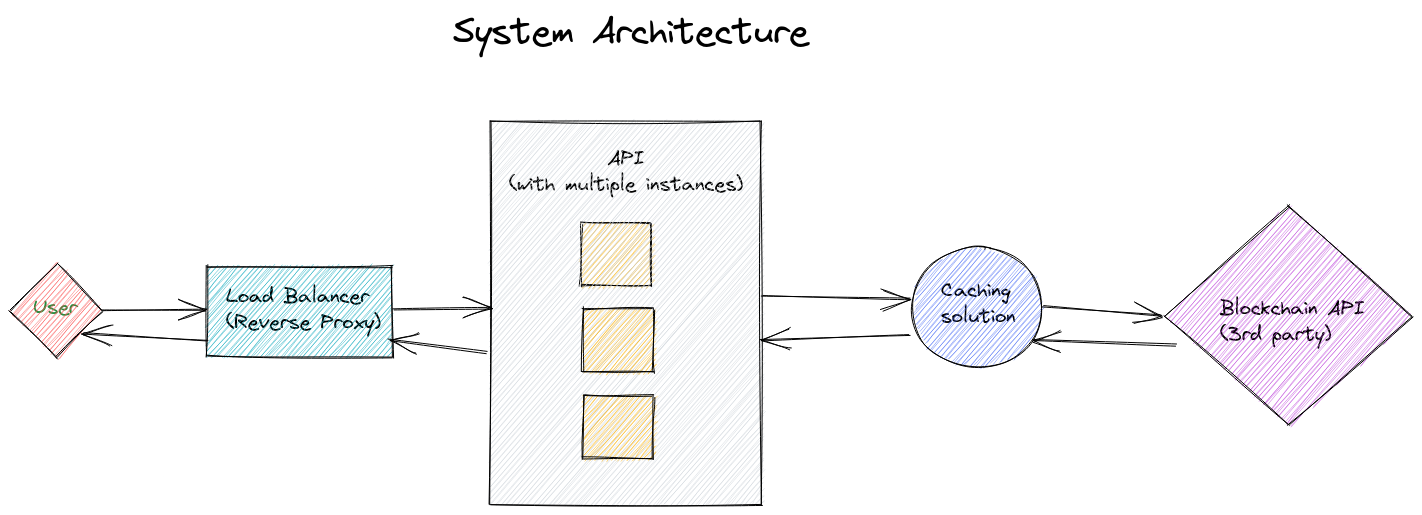

To deploy the application, Kubernetes should be used together with Helm to manage the deployment charts (which could be stored under a helm folder), along with a production Dockerfile for the API Pod.

To further reduce deployment complexity and to help with load balancing, service-to-service authentication, monitoring and more, Istio could be used on top of Kubernetes.

To benefit fully from Kubernetes service management, two additional endpoints should be added:

- Readiness Endpoint Check (check that the application started correctly)

- Liveness Endpoint Check (check against the sochain API status)

Due to time limitations, tracing and monitoring were left out of the challenge, but they could easily be added with tools and libraries such as:

- OpenTelemetry (tracing and metrics)

- Grafana (for monitoring dashboards)

Delve can be used to debug locally. The only complication is that the binary runs inside a container, so for the debugger to connect and retrieve debugging data from the running API, the following details could be added to the container configuration: lines-to-add, and then the debugger can be attached to the running application as such.

The project uses the following dependencies:

- heimdall, used as the HTTP client so that a large number of requests can be made at scale, with a circuit breaker to control failing requests.

- httprouter, used for routing as it is a very lightweight and highly performant library.

- envconfig, used to load the environment variables into the application.

- go-respond, a small library that eases the handling of common HTTP JSON responses.

The current implementation has the following known limitations:

- Code coverage could be expanded further, especially for the integration tests, as only happy paths were tested there due to time limitations. Further tests around error handling should be added.

- In most cases where a handler encounters an error, it simply returns a generic default message based on the status code. This could be improved by giving more detail about exactly what went wrong.

- Authentication was not handled at all in the application, but the service should consume a JWT created by an auth service connected to an API gateway (e.g. Kong).

- The Sochain API appears to be rate limited, so the application should avoid making too many requests in a short time frame. This throttling logic could be added to the application as well.

- For caching, if the responses for given hashes are not subject to change, nginx caching could be considered instead of application-level cache handling in order to minimize the response times of our application (nginx-cache). Alternatively, a separate layer, abstracted away from the application, could be responsible for caching. There are two main reasons for having caching in the system: first, faster response times for our API, and second, our API stays up and running even when the third party is down.