Gathers scalable Tensorflow and Python infrastructure deployments and practices, 100% Docker.

- Requirements

- Tensorflow deployment

- Simple Backend

- Apache stack

- Simple data pipeline

- Realtime ETL

- Unit test

- Stress test

- Monitoring

- Mapping

- Miscellaneous

- Practice PySpark

- Practice PyFlink

- Printscreen

- Docker

- Docker Compose

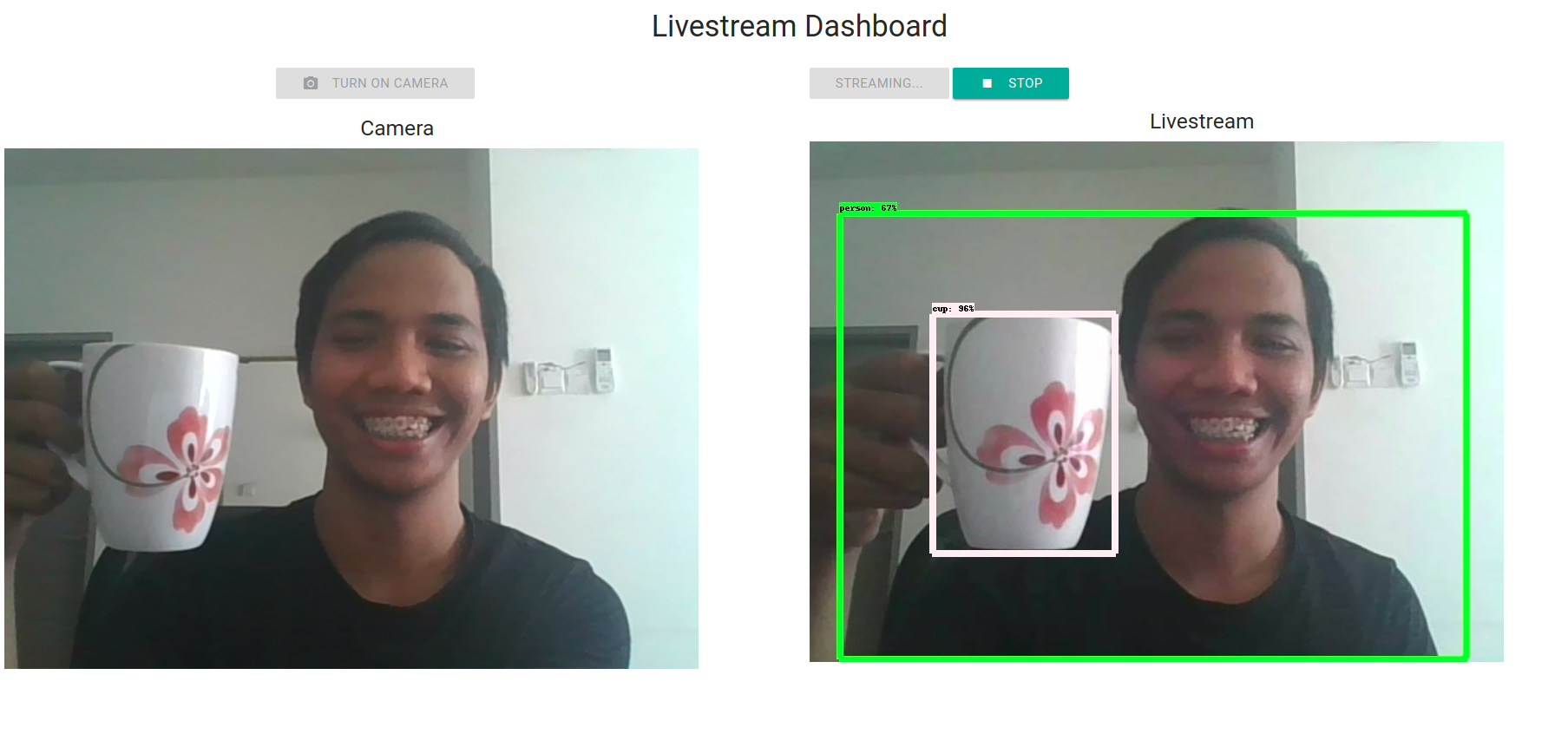

- Object Detection. Flask SocketIO + WebRTC

Stream from webcam using WebRTC -> Flask SocketIO to detect objects -> WebRTC -> Website.

- Object Detection. Flask SocketIO + OpenCV

Stream from OpenCV -> Flask SocketIO to detect objects -> OpenCV.

- Speech streaming. Flask SocketIO

Stream speech from microphone -> Flask SocketIO to do realtime speech recognition.

- Text classification. Flask + Gunicorn

Serve Tensorflow text model using Flask multiworker + Gunicorn.
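Multi-worker serving with Gunicorn is usually configured in a `gunicorn.conf.py`. The sketch below is a hypothetical config, not the one in this repository: the port, the timeout, and the `app:app` module name are assumptions, while the worker formula follows Gunicorn's documented starting point of `(2 x cores) + 1`.

```python
# gunicorn.conf.py: hypothetical config for serving a Tensorflow model with Flask.
# Run with: gunicorn -c gunicorn.conf.py app:app  (the `app:app` module is assumed)
import multiprocessing

# Listen on all interfaces inside the container (port 8000 is an assumption).
bind = "0.0.0.0:8000"

# Gunicorn's suggested starting point: (2 x number of cores) + 1 worker processes.
workers = multiprocessing.cpu_count() * 2 + 1

# Model loading and inference can be slow; allow more than the 30 s default.
timeout = 120

# Do not import the app in the master before forking, so each worker
# initialises Tensorflow in its own process.
preload_app = False
```

Each worker is a separate process holding its own copy of the model, so memory grows linearly with `workers`; with large models it can be worth starting lower than the formula suggests.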

- Image classification. TF Serving

Serve image classification model using TF Serving.

- Image classification using Inception. Flask SocketIO

Stream images using SocketIO -> Flask SocketIO to classify them.

- Object Detection. Flask + OpenCV

Webcam -> OpenCV -> Flask -> web dashboard.

- Face detection using MTCNN. Flask SocketIO + OpenCV

Stream from OpenCV -> Flask SocketIO to detect faces -> OpenCV.

- Face detection using MTCNN. OpenCV

Webcam -> OpenCV.

- Image classification using Inception. Flask + Docker

Serve Tensorflow image model using Flask multiworker + Gunicorn on Docker container.

- Image classification using Inception. Flask + EC2 Docker Swarm + Nginx load balancer

Serve Inception on multiple AWS EC2 instances, scale with Docker Swarm, and load-balance with Nginx.
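Nginx's default `upstream` strategy is round-robin: each new request goes to the next server in the list. The Python sketch below only illustrates that scheduling idea; the backend addresses are hypothetical, and in this setup the balancing is done by Nginx itself, not by application code.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Hands out backends in a fixed rotating order, like Nginx's default upstream."""

    def __init__(self, backends):
        if not backends:
            raise ValueError("need at least one backend")
        self._backends = cycle(backends)

    def next_backend(self):
        # Each call returns the next backend, wrapping around after the last one.
        return next(self._backends)


# Hypothetical Inception replicas running on separate EC2 instances.
balancer = RoundRobinBalancer(["10.0.0.11:5000", "10.0.0.12:5000", "10.0.0.13:5000"])
assigned = [balancer.next_backend() for _ in range(5)]
print(assigned)
```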

- Text classification. Hadoop streaming MapReduce

Batch processing to classify texts using Tensorflow text model on Hadoop MapReduce.
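Hadoop streaming runs any executable that reads lines from stdin and writes `key<TAB>value` lines to stdout, and it sorts the mapper output by key before the reducer sees it. A minimal sketch of that contract, assuming a hypothetical keyword-based `classify` in place of the Tensorflow text model, that counts how many texts fall into each label:

```python
import sys
from itertools import groupby


def classify(text):
    """Hypothetical stand-in for the Tensorflow text model."""
    return "positive" if "good" in text.lower() else "negative"


def mapper(lines):
    # Hadoop streaming feeds each input split line by line; emit "label\t1".
    for line in lines:
        text = line.strip()
        if text:
            yield f"{classify(text)}\t1"


def reducer(lines):
    # Mapper output arrives sorted by key, so equal labels are consecutive.
    pairs = (line.rstrip("\n").split("\t", 1) for line in lines)
    for label, group in groupby(pairs, key=lambda kv: kv[0]):
        yield f"{label}\t{sum(int(count) for _, count in group)}"


# Only run a phase when explicitly asked, e.g. `python job.py map`.
if __name__ == "__main__" and sys.argv[1:2] in (["map"], ["reduce"]):
    phase = mapper if sys.argv[1] == "map" else reducer
    for out in phase(sys.stdin):
        print(out)
```

The same file would be passed for both phases, e.g. `-mapper "python job.py map" -reducer "python job.py reduce"`; locally, feeding `sorted(mapper(...))` into `reducer` reproduces the shuffle step.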

- Text classification. Kafka

Stream texts to a Kafka producer and classify them with a Kafka consumer.

- Text classification. Distributed TF using Flask + Gunicorn + Eventlet

Serve a text model on multiple machines using Distributed TF + Flask + Gunicorn + Eventlet. Distributed TF splits a single neural network model across multiple machines to run the feed-forward pass.
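Splitting one network across machines works because a forward pass is a chain of layers: one machine can compute the first layers and send the intermediate activations to the next machine, which finishes the pass. The pure-Python sketch below shows that idea with hypothetical hand-written weights and two functions standing in for two machines; it is not the Distributed TF API, which places operations on remote devices for you.

```python
import math


def dense(inputs, weights, bias):
    """Fully connected layer: `weights` holds one row per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b for row, b in zip(weights, bias)]


def relu(values):
    return [max(0.0, v) for v in values]


def softmax(values):
    peak = max(values)
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical weights for a 3 -> 2 -> 2 network.
W1, B1 = [[0.5, -0.2, 0.1], [0.3, 0.8, -0.5]], [0.0, 0.1]
W2, B2 = [[1.0, -1.0], [-0.5, 0.7]], [0.2, 0.0]


def machine_a(features):
    # First part of the network; its output is what would travel over the network.
    return relu(dense(features, W1, B1))


def machine_b(hidden):
    # Second part of the network completes the feed-forward pass.
    return softmax(dense(hidden, W2, B2))


def full_model(features):
    # The same network evaluated on a single machine, for comparison.
    return softmax(dense(relu(dense(features, W1, B1)), W2, B2))


x = [1.0, 2.0, 3.0]
split_output = machine_b(machine_a(x))
assert split_output == full_model(x)  # splitting does not change the result
```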

- Text classification. Tornado + Gunicorn

Serve Tensorflow text model using Tornado + Gunicorn.

- Text classification. Flask + Celery + Hadoop

Submit large texts through Flask, which queues a Celery job that processes them with Hadoop (delayed Hadoop MapReduce).
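The Flask + Celery pattern returns a task id immediately and lets the client poll for the result later, so a slow batch job never blocks the HTTP request. The sketch below reproduces that flow with `concurrent.futures` standing in for the Celery broker and workers; `submit_texts`, `get_status`, `classify_batch`, and `some_task` are hypothetical names, and in this project the queued job runs Hadoop MapReduce instead of a local function.

```python
import time
import uuid
from concurrent.futures import ThreadPoolExecutor

executor = ThreadPoolExecutor(max_workers=2)  # stands in for the Celery workers
tasks = {}  # task id -> Future; Celery would keep results in its result backend


def classify_batch(texts):
    """Hypothetical slow job; the real setup runs Hadoop MapReduce here."""
    time.sleep(0.1)  # simulate a long-running batch
    return ["positive" if "good" in t else "negative" for t in texts]


def submit_texts(texts):
    # Like a Flask handler calling `some_task.delay(texts)`: enqueue, return at once.
    task_id = str(uuid.uuid4())
    tasks[task_id] = executor.submit(classify_batch, texts)
    return task_id


def get_status(task_id):
    # Like checking `AsyncResult(task_id)`: PENDING until the job has finished.
    future = tasks[task_id]
    if not future.done():
        return {"state": "PENDING"}
    return {"state": "SUCCESS", "result": future.result()}


task_id = submit_texts(["good film", "boring film"])
print(get_status(task_id))  # usually still PENDING right after submission
while get_status(task_id)["state"] == "PENDING":
    time.sleep(0.05)
print(get_status(task_id))
```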

- Text classification. Luigi scheduler + Hadoop

Submit large texts to the Luigi scheduler, which runs Hadoop inside Luigi (event-based Hadoop MapReduce).

- Text classification. Luigi scheduler + Distributed Celery

Submit large texts to the Luigi scheduler and run Hadoop inside Luigi, with delayed processing.

- Text classification. Airflow scheduler + Elasticsearch + Flask

Schedule-based processing using Airflow; results are stored in Elasticsearch and served using Flask.

- Text classification. Apache Kafka + Apache Storm

Stream from Twitter -> Kafka Producer -> Apache Storm, to do distributed minibatch realtime processing.

- Text classification. Dask

Batch processing to classify texts using Tensorflow text model on Dask.

- Text classification. PySpark

Batch processing to classify texts using Tensorflow text model on PySpark.

- Text classification. PySpark streaming + Kafka

Stream texts to Kafka Producer -> PySpark Streaming, to do minibatch realtime processing.

- Text classification. Streamz + Dask + Kafka

Stream texts to Kafka Producer -> Streamz -> Dask, to do minibatch realtime processing.
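Both streaming variants group an unbounded stream of texts into small batches, so the model runs once per batch instead of once per message. A minimal count-based sketch, assuming a hypothetical `classify_batch` in place of the Tensorflow model and a plain list in place of the Kafka topic; PySpark Streaming and Streamz can also batch by time window, which this sketch does not model.

```python
from itertools import islice


def micro_batches(stream, batch_size):
    """Yield lists of up to `batch_size` items from a (possibly endless) iterator."""
    iterator = iter(stream)
    while True:
        batch = list(islice(iterator, batch_size))
        if not batch:
            return
        yield batch


def classify_batch(texts):
    """Hypothetical stand-in for one batched model call."""
    return ["positive" if "good" in t else "negative" for t in texts]


# Stand-in for messages read by a Kafka consumer.
incoming = ["good", "bad", "good day", "meh", "good"]
results = []
for batch in micro_batches(incoming, batch_size=2):
    results.extend(zip(batch, classify_batch(batch)))  # one model call per batch
```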

- Text classification. FastAPI + Streamz + Water Healer

Group concurrent requests into mini-batches for realtime processing to speed up text classification.
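Turning concurrent requests into mini-batches means briefly holding each request, classifying everything that arrived in that window with one model call, and then answering every caller. The sketch below shows the idea with plain `asyncio`; it is not the Water Healer or Streamz API, and `classify_batch`, the 10 ms window, and the batch limit are assumptions.

```python
import asyncio


def classify_batch(texts):
    """Hypothetical stand-in for one batched Tensorflow call."""
    return ["positive" if "good" in t else "negative" for t in texts]


class MiniBatcher:
    """Collects concurrent requests and answers them with a single batched call."""

    def __init__(self, max_batch=32, max_wait=0.01):
        self.max_batch = max_batch
        self.max_wait = max_wait
        self.queue = asyncio.Queue()
        self.batch_sizes = []  # kept only to show how requests were grouped

    async def classify(self, text):
        # Each request enqueues its text with a future, then waits for its own label.
        future = asyncio.get_running_loop().create_future()
        await self.queue.put((text, future))
        return await future

    async def run(self):
        while True:
            items = [await self.queue.get()]  # wait for the first request
            await asyncio.sleep(self.max_wait)  # let concurrent requests pile up
            while len(items) < self.max_batch and not self.queue.empty():
                items.append(self.queue.get_nowait())
            labels = classify_batch([text for text, _ in items])  # one model call
            self.batch_sizes.append(len(items))
            for (_, future), label in zip(items, labels):
                if not future.done():  # the caller may have been cancelled
                    future.set_result(label)


async def main():
    batcher = MiniBatcher(max_batch=8, max_wait=0.01)
    worker = asyncio.create_task(batcher.run())
    texts = ["good", "bad", "good one", "awful", "good again"]
    # Five "concurrent requests", e.g. five web handlers awaiting classify().
    labels = await asyncio.gather(*(batcher.classify(t) for t in texts))
    worker.cancel()
    return labels, batcher.batch_sizes


labels, batch_sizes = asyncio.run(main())
```

The trade-off is latency for throughput: every request waits up to `max_wait` seconds, but the model sees one batch instead of many single-item calls.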

- Text classification. PyFlink

Batch processing to classify texts using Tensorflow text model on Flink batch processing.

- Text classification. PyFlink + Kafka

Stream texts to Kafka Producer -> PyFlink Streaming, to do minibatch realtime processing.

- Object Detection. ImageZMQ

Stream from N camera clients using ImageZMQ -> N ImageZMQ processing workers -> single dashboard.

- Flask

- Flask with MongoDB

- REST API Flask

- Flask Redis PubSub

- Flask MySQL with REST API

- Flask Postgres with REST API

- Flask Elasticsearch

- Flask Logstash with Gunicorn

- Flask SocketIO with Redis

- Multiple Flask with Nginx load balancer

- Multiple Flask SocketIO with Nginx load balancer

- RabbitMQ and multiple Celery with Flask

- Flask + Gunicorn + HAProxy

- Flask with Hadoop MapReduce

- Flask with Kafka

- Flask with Hadoop Hive

- PySpark with Jupyter

- Apache Flink with Jupyter

- Apache Storm with Redis

- Apache Flink with Zeppelin and Kafka

- Kafka cluster + Kafka REST

- Spotify Luigi + Hadoop streaming

- Streaming Tweepy to Elasticsearch

- Scheduled crawler using Spotify Luigi to Elasticsearch

- Airflow to Elasticsearch

- MySQL -> Apache NiFi -> Apache Hive

- PostgreSQL CDC -> Debezium -> ksqlDB
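Debezium publishes each row change as an event whose payload carries `op` (`c` create, `u` update, `d` delete, `r` snapshot read) plus the row `before` and `after` the change. The sketch below replays such events into an in-memory table to show what a downstream consumer such as ksqlDB has to reconcile; the `id` primary key and the sample rows are assumptions, and real events also carry `source` and schema metadata that are omitted here.

```python
import json


def apply_change(table, event):
    """Apply one Debezium-style change event to `table`, a dict keyed by primary key."""
    if event is None:
        return  # tombstone (null message value) emitted after a delete
    payload = event.get("payload", event)  # with or without the schema envelope
    op = payload["op"]
    if op in ("c", "r", "u"):
        row = payload["after"]
        table[row["id"]] = row  # create, snapshot read or update: upsert the new row
    elif op == "d":
        table.pop(payload["before"]["id"], None)  # delete by the old row's key
    else:
        raise ValueError(f"op {op!r} is not handled in this sketch")


# Hypothetical message values as they might arrive from a Debezium topic.
raw_messages = [
    '{"payload": {"op": "r", "before": null, "after": {"id": 1, "text": "hello"}}}',
    '{"payload": {"op": "c", "before": null, "after": {"id": 2, "text": "good day"}}}',
    '{"payload": {"op": "u", "before": {"id": 1, "text": "hello"},'
    ' "after": {"id": 1, "text": "hello again"}}}',
    '{"payload": {"op": "d", "before": {"id": 2, "text": "good day"}, "after": null}}',
    None,
]

table = {}
for raw in raw_messages:
    apply_change(table, json.loads(raw) if raw is not None else None)

print(table)
```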

- Pytest

- Locust

- PostgreSQL + Prometheus + Grafana

- FastAPI + Prometheus + Loki + Jaeger

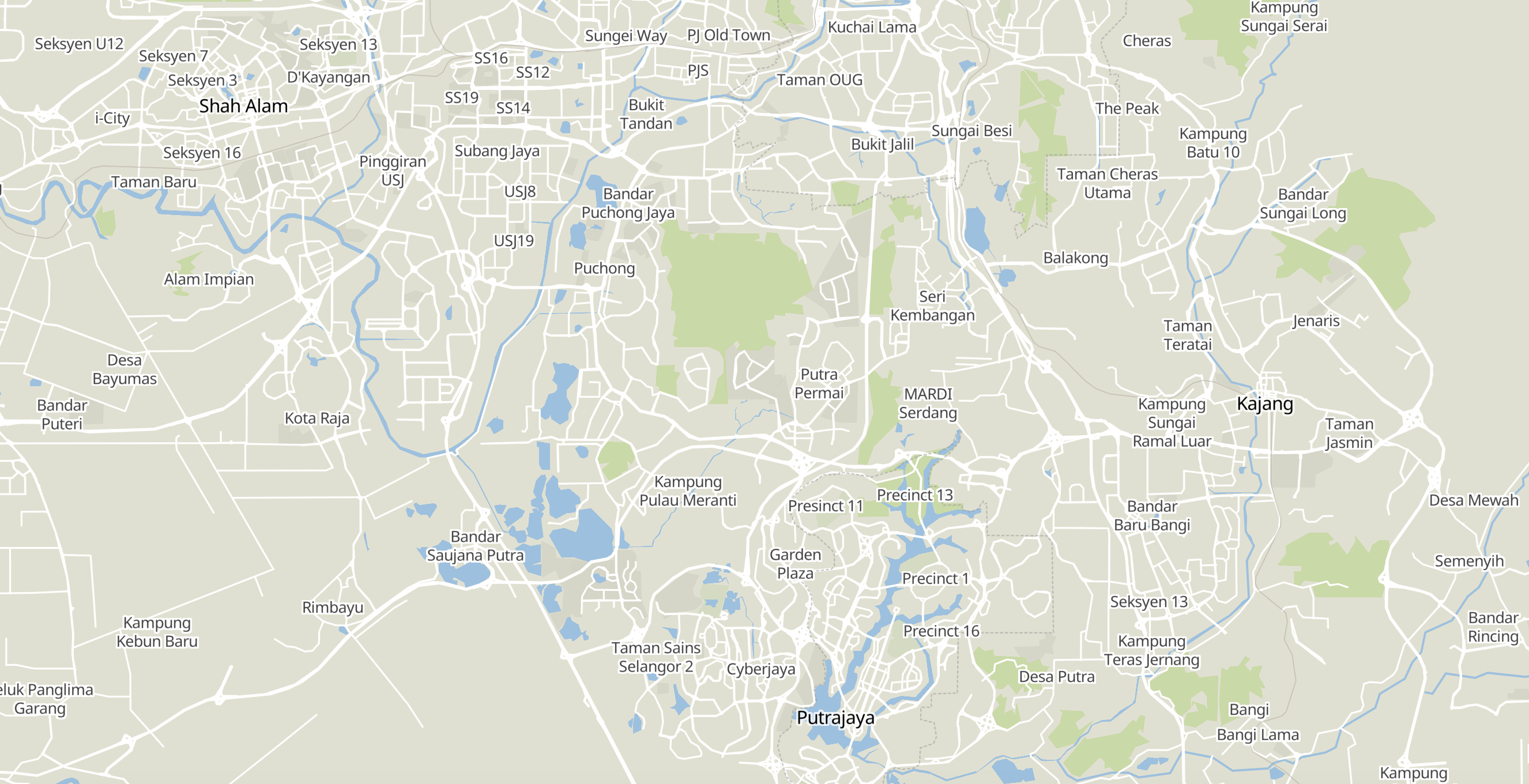

Focused on Malaysia; for other countries, you need to change the download links.

- OSRM Malaysia

- Maptiler Malaysia

- OSM Style Malaysia

- Elasticsearch + Kibana + Cerebro

- Jupyter notebook

- JupyterHub

- JupyterHub + GitHub Auth

- autopep8

- Graph function dependencies

- MLflow

- Simple PySpark SQL.

- Simple download DataFrame from HDFS.

Create PySpark DataFrame from HDFS.

- Simple PySpark SQL with Hive Metastore.

Use PySpark SQL with Hive Metastore.

- Simple Delta lake.

- Delete Update Upsert using Delta.

Simple Delete Update Upsert using Delta lake.

- Structured streaming using Delta.

Simple structured streaming with Upsert using Delta streaming.

- Kafka Structured streaming using Delta.

Kafka structured streaming from PostgreSQL CDC using Debezium and Upsert using Delta streaming.

- PySpark ML text classification.

Text classification using logistic regression and multinomial in PySpark ML.

- PySpark ML word vector.

Word vector in PySpark ML.

- Simple Word Count to HDFS.

Simple Table API to do Word Count and sink into Parquet format in HDFS.

- Simple Word Count to PostgreSQL.

Simple Table API to do Word Count and sink into PostgreSQL using JDBC.

- Simple Word Count to Kafka.

Simple Table API to do Word Count and sink into Kafka.

- Simple text classification to HDFS.

Load trained text classification model using UDF to classify sentiment and sink into Parquet format in HDFS.
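The key detail when classifying inside a UDF is to load the trained model once per worker, not once per row. PyFlink's `ScalarFunction` supports this through an `open()` hook that runs before `eval()` is called for each row. The sketch below mimics that lifecycle in plain Python so it runs without Flink; `SentimentUDF` and the keyword-based `load_model` are hypothetical stand-ins for the trained Tensorflow model.

```python
class SentimentUDF:
    """Plain-Python mimic of a PyFlink ScalarFunction: open() once, eval() per row."""

    def __init__(self):
        self.model = None
        self.loads = 0  # counts model loads, to show it happens only once

    def open(self, function_context=None):
        # In PyFlink, open() runs once per parallel instance before any rows arrive.
        self.model = load_model()
        self.loads += 1

    def eval(self, text):
        # Called for every row; reuses the model loaded in open().
        return self.model(text)


def load_model():
    """Hypothetical loader; a real job would restore the trained Tensorflow model here."""
    positive_words = {"good", "great", "love"}
    return lambda text: "positive" if positive_words & set(text.lower().split()) else "negative"


sentiment = SentimentUDF()
sentiment.open()
labels = [sentiment.eval(t) for t in ["I love it", "terrible service", "Great value"]]
```

In an actual PyFlink job the class would subclass `pyflink.table.udf.ScalarFunction` and be wrapped with `udf(..., result_type=DataTypes.STRING())` before being used in a Table API query.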

- Simple text classification to PostgreSQL.

Load trained text classification model using UDF to classify sentiment and sink into PostgreSQL.

- Simple text classification to Kafka.

Load trained text classification model using UDF to classify sentiment and sink into Kafka.

- Simple real time text classification upsert to PostgreSQL.

Simple real time text classification from Debezium CDC and upsert into PostgreSQL.
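Upserting classified rows means inserting new keys and updating existing ones in place. PostgreSQL expresses this with `INSERT ... ON CONFLICT (key) DO UPDATE`; SQLite 3.24+ accepts the same clause, so the sketch below uses Python's built-in `sqlite3` as a stand-in to stay runnable. The `sentiment` table, its columns, and the `classify` stub are assumptions; in the Flink job, the JDBC sink performs the equivalent write when the sink table declares a primary key.

```python
import sqlite3


def classify(text):
    """Hypothetical stand-in for the trained sentiment model."""
    return "positive" if "good" in text.lower() else "negative"


# Same ON CONFLICT syntax as PostgreSQL; psycopg2 would use %s placeholders instead of ?.
UPSERT = """
INSERT INTO sentiment (id, text, label) VALUES (?, ?, ?)
ON CONFLICT (id) DO UPDATE SET text = excluded.text, label = excluded.label
"""

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE sentiment (id INTEGER PRIMARY KEY, text TEXT, label TEXT)")

# Rows as they might arrive from the CDC stream: id 1 is updated after insertion.
changes = [(1, "not great"), (2, "good service"), (1, "good after all")]
for row_id, text in changes:
    conn.execute(UPSERT, (row_id, text, classify(text)))
conn.commit()

rows = conn.execute("SELECT id, text, label FROM sentiment ORDER BY id").fetchall()
print(rows)
```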

- Simple real time text classification upsert to Kafka.

Simple real time text classification from Debezium CDC and upsert into Kafka using the Upsert Kafka connector.

- Simple Word Count to Apache Hudi.

Simple Table API to do Word Count and sink into Apache Hudi in HDFS.

- Simple text classification to Apache Hudi.

Load trained text classification model using UDF to classify sentiment and sink into Apache Hudi in HDFS.

- Simple real time text classification upsert to Apache Hudi.

Simple real time text classification from Debezium CDC and upsert into Apache Hudi in HDFS.