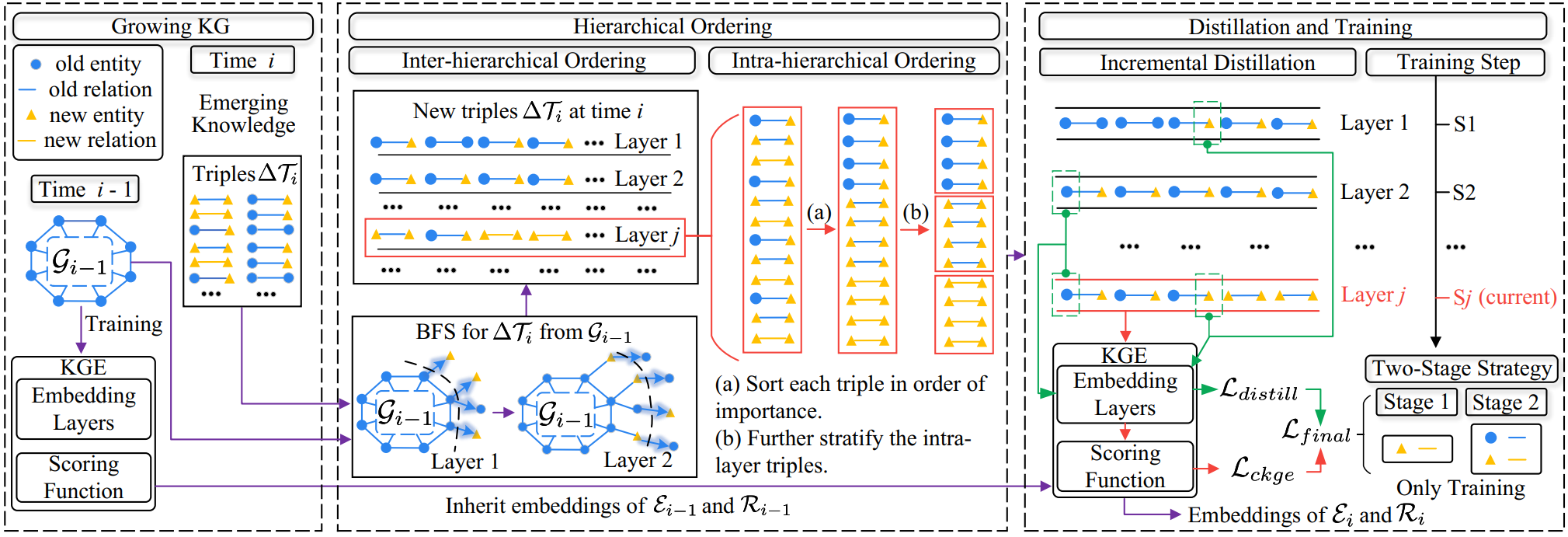

Code and datasets for "Towards Continual Knowledge Graph Embedding via Incremental Distillation" [AAAI 2024].

The structure of the folder is shown below:

    IncDE
    ├─checkpoint
    ├─data
    ├─logs
    ├─save
    ├─src
    ├─main.py
    ├─data_preprocess.py
    └─README.md

Introduction to the structure of the folder:

- /checkpoint: Stores the generated models.

- /data: Stores the datasets (ENTITY, RELATION, FACT, HYBRID, graph_equal, graph_higher, graph_lower).

- /logs: Stores the training logs.

- /save: Stores temporary results.

- /src: Contains the source code.

- /main.py: Runs IncDE.

- /data_preprocess.py: Preprocesses the data.

- /README.md: Explains how to run IncDE.
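As a quick sanity check of the layout described above, the following is a minimal sketch that reports which of the listed folders and files are absent. The helper name `missing_entries` is hypothetical and not part of the repository; it also assumes that all listed folders exist up front, although some of them (for example `checkpoint` or `logs`) may only be created at run time.

```python
from pathlib import Path

# Entries taken from the folder listing in this README.
EXPECTED_DIRS = ["checkpoint", "data", "logs", "save", "src"]
EXPECTED_FILES = ["main.py", "data_preprocess.py", "README.md"]


def missing_entries(root):
    """Return the expected folders and files that are absent under root.

    Hypothetical helper for illustration; not part of the IncDE code.
    """
    root = Path(root)
    # Folders are reported with a trailing slash to tell them apart from files.
    missing = [d + "/" for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    return missing


if __name__ == "__main__":
    # Assumes the script is started from the IncDE root folder.
    for entry in missing_entries("."):
        print("missing:", entry)
```

An empty result means every entry from the listing was found.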

All experiments were run on an NVIDIA RTX 3090Ti GPU with PyTorch and Python 3.7.
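To compare a local setup against the environment stated above, a minimal sketch such as the following can print the Python version and, when PyTorch is importable, whether a CUDA device is visible. The function name `environment_report` is hypothetical, and the PyTorch version is not specified in this README, so the sketch only reports what it finds.

```python
import sys


def environment_report():
    """Collect the Python version and, if PyTorch is importable, its CUDA status.

    Hypothetical helper for illustration; not part of the IncDE code.
    """
    report = {"python": "%d.%d" % sys.version_info[:2]}
    try:
        import torch  # PyTorch is named in this README; its version is not
    except ImportError:
        report["torch"] = None
        return report
    report["torch"] = torch.__version__
    report["cuda_available"] = torch.cuda.is_available()
    return report


if __name__ == "__main__":
    for key, value in environment_report().items():
        print(key, "=", value)
```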

Please run the following to install all dependencies:

    pip3 install -r requirements.txt

- Unzip the datasets `data1.zip` and `data2.zip` into the `data` folder.

- Preprocess the data in the shell:

    python data_preprocess.py

- Run the code in the shell:

    python main.py -dataset ENTITY -gpu 0

- Run the ablation experiments in the shell:

    ./ablation.sh

If you find this method or code useful, please cite:

    @inproceedings{liu2024towards,
      title={Towards Continual Knowledge Graph Embedding via Incremental Distillation},
      author={Liu, Jiajun and Ke, Wenjun and Wang, Peng and Shang, Ziyu and Gao, Jinhua and Li, Guozheng and Ji, Ke and Liu, Yanhe},
      booktitle={AAAI},
      year={2024}
    }
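The unzip and run steps from this README can be chained in a small driver script. The following is a minimal sketch under stated assumptions: the helper names (`extract_archives`, `training_command`, `run_all`) are hypothetical, and it assumes that every dataset name listed for the `data` folder is accepted by the `-dataset` flag, which this README only confirms for `ENTITY`. By default it performs a dry run and only prints the commands.

```python
import subprocess
import sys
import zipfile
from pathlib import Path

# Dataset names as listed for the data folder in this README.
# Assumption: each one is a valid value for the -dataset flag.
DATASETS = ["ENTITY", "RELATION", "FACT", "HYBRID",
            "graph_equal", "graph_higher", "graph_lower"]


def extract_archives(data_dir):
    """Unzip data1.zip and data2.zip inside data_dir, skipping missing archives."""
    data_dir = Path(data_dir)
    extracted = []
    for name in ("data1.zip", "data2.zip"):
        archive = data_dir / name
        if archive.is_file():
            with zipfile.ZipFile(archive) as zf:
                zf.extractall(data_dir)
            extracted.append(name)
    return extracted


def training_command(dataset, gpu=0):
    """Build the training command shown in this README for one dataset."""
    return [sys.executable, "main.py", "-dataset", dataset, "-gpu", str(gpu)]


def run_all(datasets=DATASETS, gpu=0, dry_run=True):
    """Print (dry run) or execute the training command for each dataset."""
    commands = [training_command(name, gpu) for name in datasets]
    for cmd in commands:
        if dry_run:
            print(" ".join(cmd))
        else:
            # check=True stops at the first failing run.
            subprocess.run(cmd, check=True)
    return commands


if __name__ == "__main__":
    # Assumes the script is started from the IncDE root folder.
    print("extracted:", extract_archives("data"))
    run_all(dry_run=True)
```

Calling `run_all(dry_run=False)` would launch the runs one after another on the selected GPU; `data_preprocess.py` still needs to be run once beforehand, as described in the steps above.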