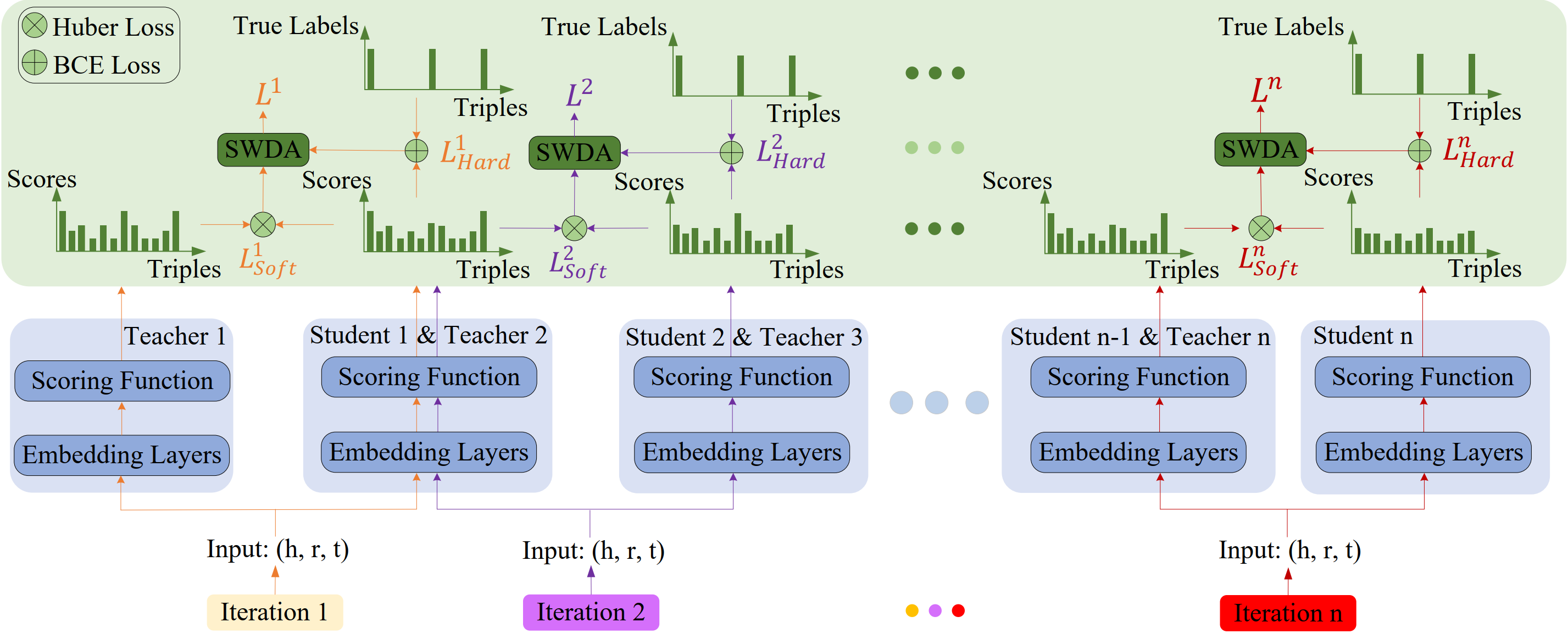

Code and datasets for the AAAI 2023 paper "IterDE: An Iterative Knowledge Distillation Framework for Knowledge Graph Embeddings".

This repository extends OpenKE.

The structure of the folder is shown below:

IterDE

├─checkpoint

├─benchmarks

├─IterDE_FB15K237

├─IterDE_WN18RR

├─openke

├─requirements.txt

└─README.md

Introduction to the structure of the folder:

- /checkpoint: The generated models are stored in this folder.
- /benchmarks: The datasets (FB15K237 and WN18RR) are stored in this folder.
- /IterDE_FB15K237: Training scripts for iteratively distilling KGEs on FB15K-237.
- /IterDE_WN18RR: Training scripts for iteratively distilling KGEs on WN18RR.
- /openke: Code for the KGE distillation models.
- /requirements.txt: Lists all the dependencies.
- README.md: Instructions on how to run IterDE.
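The commands in this README are run from the IterDE root, i.e. the folder that contains the entries listed above. As a minimal sketch, that layout can be checked with the Python standard library before starting; `missing_entries` is a hypothetical helper, not part of the repository.

```python
from pathlib import Path

# Top-level entries taken from the folder structure above.
EXPECTED_DIRS = ["checkpoint", "benchmarks", "IterDE_FB15K237", "IterDE_WN18RR", "openke"]
EXPECTED_FILES = ["requirements.txt", "README.md"]


def missing_entries(root="."):
    """Hypothetical helper: return the expected entries that are absent under `root`."""
    base = Path(root)
    missing = [d for d in EXPECTED_DIRS if not (base / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (base / f).is_file()]
    return missing


if __name__ == "__main__":
    absent = missing_entries()
    if absent:
        print("Not in the IterDE root, or entries are missing:", ", ".join(absent))
    else:
        print("Folder layout looks complete.")
```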

All experiments were run on an Intel(R) Xeon(R) Silver 4210 CPU @ 2.20GHz and a GeForce RTX 2080 Ti GPU, using Python 3.7.

Please run the following command to install all the dependencies:

pip3 install -r requirements.txt

- Enter the openke folder:

cd IterDE

cd openke

- Compile the C++ files:

bash make.sh

cd ../
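To confirm that the compilation step succeeded, the following sketch loads the compiled library with `ctypes`. It assumes that `make.sh` behaves like the one in upstream OpenKE and writes `openke/release/Base.so`; that path and the helper `compiled_library_ok` are assumptions, not something this README states.

```python
import ctypes
from pathlib import Path

# Assumption: like upstream OpenKE, make.sh writes the shared library here.
LIB_PATH = Path("openke") / "release" / "Base.so"


def compiled_library_ok(lib_path=LIB_PATH):
    """Hypothetical helper: True if the compiled library exists and can be loaded."""
    path = Path(lib_path)
    if not path.is_file():
        return False
    try:
        ctypes.cdll.LoadLibrary(str(path.resolve()))
    except OSError:
        # Raised when the file is not a loadable shared library.
        return False
    return True


if __name__ == "__main__":
    # Run from the IterDE root, i.e. after the `cd ../` above.
    if compiled_library_ok():
        print("Base.so ready")
    else:
        print("Base.so missing or unloadable: rerun `bash make.sh` in openke/")
```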

- First, we pre-train the teacher model TransE on FB15K-237:

cp IterDE_FB15K237/transe_512.py ./

python transe_512.py

- Then we iteratively distill the student model:

cp IterDE_FB15K237/transe_512_256_new.py ./

cp IterDE_FB15K237/transe_512_256_128_new.py ./

cp IterDE_FB15K237/transe_512_256_128_64_new.py ./

cp IterDE_FB15K237/transe_512_256_128_64_32_new.py ./

python transe_512_256_new.py

python transe_512_256_128_new.py

python transe_512_256_128_64_new.py

python transe_512_256_128_64_32_new.py

- Finally, the distilled student model is saved in the checkpoint folder.
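Judging from the script names, each distillation stage halves the embedding dimension (512, 256, 128, 64, 32). The sketch below enumerates those stages and reproduces the student script names used above. It assumes, as the iterative scheme suggests, that the student trained in one stage acts as the teacher of the next; `distillation_stages` is a hypothetical helper.

```python
# Embedding dimensions along the distillation chain, read off the script names above.
DIMS = [512, 256, 128, 64, 32]


def distillation_stages(dims=DIMS, prefix="transe"):
    """Hypothetical helper: list (teacher_dim, student_dim, script) per stage.

    Assumption: the student trained in one stage is the teacher of the next.
    """
    stages = []
    for k in range(1, len(dims)):
        # e.g. k=2 -> "512_256_128", matching transe_512_256_128_new.py
        chain = "_".join(str(d) for d in dims[: k + 1])
        stages.append((dims[k - 1], dims[k], f"{prefix}_{chain}_new.py"))
    return stages


if __name__ == "__main__":
    for teacher_dim, student_dim, script in distillation_stages():
        print(f"{script}: {teacher_dim}-d teacher -> {student_dim}-d student")
```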

- First, we pre-train the teacher model ComplEx on WN18RR:

cp IterDE_WN18RR/com_wn_512.py ./

python com_wn_512.py

- Then we iteratively distill the student model:

cp IterDE_WN18RR/com_512_256_new.py ./

cp IterDE_WN18RR/com_512_256_128_new.py ./

cp IterDE_WN18RR/com_512_256_128_64_new.py ./

cp IterDE_WN18RR/com_512_256_128_64_32_new.py ./

python com_512_256_new.py

python com_512_256_128_new.py

python com_512_256_128_64_new.py

python com_512_256_128_64_32_new.py

- Finally, the distilled student model is saved in the checkpoint folder.
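Both pipelines follow the same copy-then-run sequence, so they can be scripted. A minimal sketch, assuming it is run from the IterDE root; `PIPELINES` and `run_pipeline` are hypothetical names, and the script lists are copied from the commands above.

```python
import shutil
import subprocess
import sys
from pathlib import Path

# Script order per dataset folder, copied from the commands in this README.
PIPELINES = {
    "IterDE_FB15K237": [
        "transe_512.py",
        "transe_512_256_new.py",
        "transe_512_256_128_new.py",
        "transe_512_256_128_64_new.py",
        "transe_512_256_128_64_32_new.py",
    ],
    "IterDE_WN18RR": [
        "com_wn_512.py",
        "com_512_256_new.py",
        "com_512_256_128_new.py",
        "com_512_256_128_64_new.py",
        "com_512_256_128_64_32_new.py",
    ],
}


def run_pipeline(folder, root=".", dry_run=False):
    """Hypothetical driver: copy each script of `folder` into `root` and run it in order.

    Returns the commands that were (or, with dry_run=True, would be) executed.
    check=True raises CalledProcessError on a non-zero exit, which stops the loop.
    """
    commands = []
    for name in PIPELINES[folder]:
        cmd = [sys.executable, name]
        commands.append(cmd)
        if dry_run:
            continue
        shutil.copy(Path(root) / folder / name, Path(root) / name)
        subprocess.run(cmd, cwd=root, check=True)
    return commands
```

For example, `run_pipeline("IterDE_WN18RR")` repeats the WN18RR commands, while `dry_run=True` only returns them. Stopping at the first failing stage is deliberate, since each later stage presumably needs the checkpoint produced by the previous one.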

Our code builds on OpenKE. We thank its authors for their contributions.

If you find this repository helpful, please cite the following paper:

@inproceedings{liu2023iterde,

title={IterDE: an iterative knowledge distillation framework for knowledge graph embeddings},

author={Liu, Jiajun and Wang, Peng and Shang, Ziyu and Wu, Chenxiao},

booktitle={AAAI},

year={2023}

}